How is Apache Airflow utilized in practice?

- First, set all configuration options.
- Initialize the backend database.
- Set up the operators you need. The main operators include PythonOperator, BashOperator, and the Google Cloud Platform operators.
- Manage connections by following these steps:
  - Create a connection with the user interface.
  - Edit a connection with the user interface.
  - Create a connection with environment variables.
  - Configure the connection types.
- Configure Apache Airflow to write its logs.
- Scale out, first with Celery, then with Dask and Mesos.
- Run Airflow with systemd and with Upstart.
- For testing, always use the test mode configuration.
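Several of the steps above (configuration options, connections from environment variables, choosing an executor, test mode) can be driven through environment variables. The sketch below is a minimal illustration, assuming Airflow's `AIRFLOW__{SECTION}__{KEY}` naming for configuration overrides and `AIRFLOW_CONN_{CONN_ID}` with a URI value for connections. The helper functions, the connection id `warehouse_db`, the host, and the credentials are all hypothetical and exist only for this example.

```python
import shlex
from urllib.parse import quote


def config_env_name(section: str, key: str) -> str:
    """Name of the environment variable that overrides [section] key in airflow.cfg."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def connection_env_name(conn_id: str) -> str:
    """Name of the environment variable that defines the connection `conn_id`."""
    return f"AIRFLOW_CONN_{conn_id.upper()}"


def connection_uri(conn_type: str, login: str, password: str,
                   host: str, port: int, schema: str = "") -> str:
    """Build a connection URI, percent-encoding parts that may hold special characters."""
    return (
        f"{conn_type}://{quote(login, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(schema, safe='')}"
    )


# Hypothetical values for illustration only.
settings = {
    config_env_name("core", "executor"): "CeleryExecutor",
    connection_env_name("warehouse_db"): connection_uri(
        "postgres", "etl_user", "p@ss/word", "db.example.com", 5432, "analytics"
    ),
}

# Extra override for a test environment (assumes the [core] unit_test_mode option).
test_settings = {config_env_name("core", "unit_test_mode"): "True"}

# Print shell export lines that could be pasted into an environment file.
for name, value in {**settings, **test_settings}.items():
    print(f"export {name}={shlex.quote(value)}")
```

Keeping these values in the environment rather than in code is one way to work toward a single source of configuration per deployment.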

Best Practices of Apache Airflow

| Things to Consider | Best Practices |
| --- | --- |
| Managing configuration | Pay attention to how built-ins such as Connections and Variables are defined. Airflow also includes non-Python tools; keep their usability in mind as well. Aim for a single source of configuration. |
| Building and splitting the Directed Acyclic Graph (DAG) | Use one DAG per data source, one DAG per project, and one DAG per data sink. Keep the code in template files, for example Hive templates for Hive queries, and set the template search path so the templates can be found. Keep the template files "Airflow agnostic." |
| Creating extensions and plugins | Plugins and extensions are easy to write, but first check whether one is really needed. Extension points to consider are operators, hooks, executors, macros, and UI adaptations (views, links). Start from existing classes and adapt them. |
| Developing and extending workflows | For this point, the database should be considered at three levels: personal, integration, and production. Data engineers or data scientists handle the personal level, where testing is done with `airflow test`. The integration level covers performance testing and integration testing. The production level covers monitoring. |
| Accommodating the enterprise | Consider the existing workflow tools for scheduling. Airflow provides tools for integration, and it is good practice to consider them. |
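To illustrate the advice on keeping code in "Airflow agnostic" template files, here is a minimal sketch that stores a query in its own file and renders it with a parameter. Airflow itself renders templates with Jinja and locates them through the `template_searchpath` argument of a DAG; this example deliberately swaps in `string.Template` from the Python standard library so it runs without Airflow installed. The file name `daily_orders.sql`, the `orders` table, and the `render_sql` helper are hypothetical.

```python
import tempfile
from pathlib import Path
from string import Template


def render_sql(template_dir: Path, name: str, **params: str) -> str:
    """Read a template file from template_dir and substitute its $placeholders."""
    text = (template_dir / name).read_text(encoding="utf-8")
    return Template(text).substitute(**params)


with tempfile.TemporaryDirectory() as tmp:
    template_dir = Path(tmp)  # stands in for a directory on the template search path

    # The query lives in its own file and contains no Airflow-specific code.
    (template_dir / "daily_orders.sql").write_text(
        "SELECT * FROM orders WHERE order_date = '$run_date'\n", encoding="utf-8"
    )

    sql = render_sql(template_dir, "daily_orders.sql", run_date="2024-01-01")

print(sql)
```

Because the template file knows nothing about the scheduler, the same query can be reviewed, tested, or run by hand outside of a DAG.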

Apache Airflow Use cases

Apache Airflow is an open-source platform used for programmatically defining, scheduling, and monitoring workflows. Here are some common use cases for Apache Airflow:

-

ETL (Extract, Transform, Load): Airflow can automate ETL processes, extracting data from various sources, transforming it into a usable format, and loading it into a target system.

-

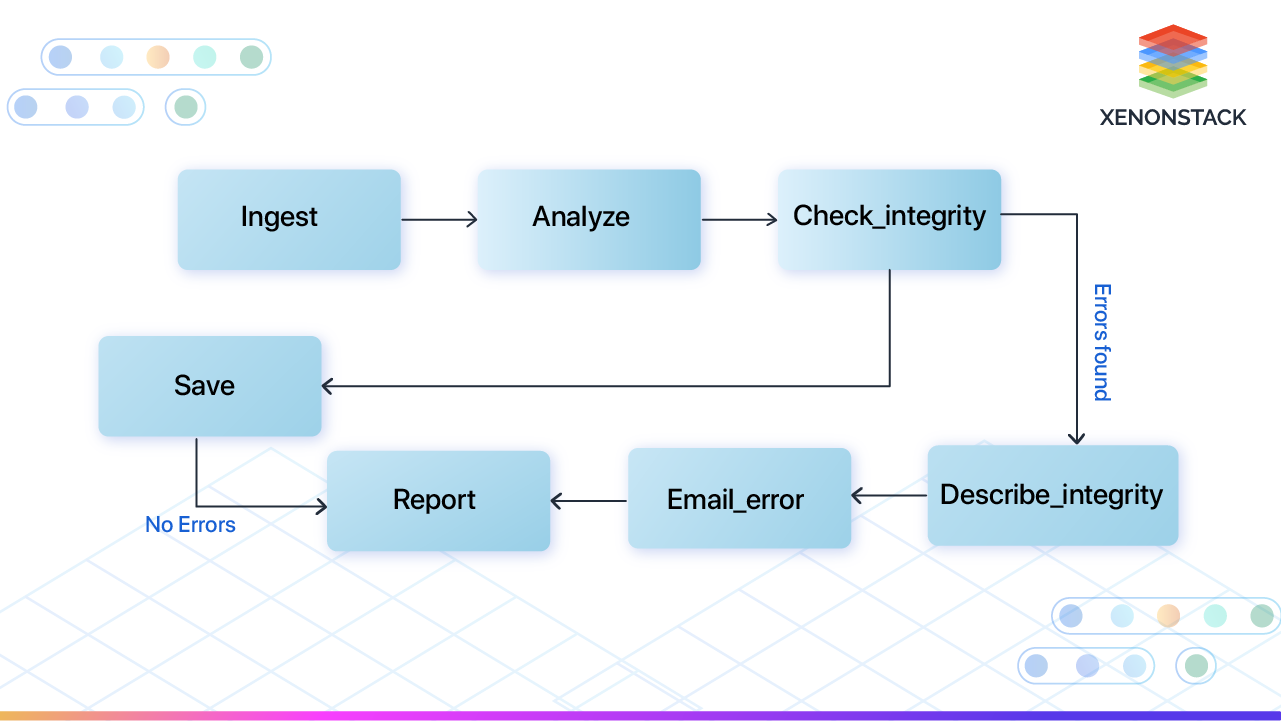

Data Pipelines: Airflow can create data pipelines that automate data processing tasks, such as data ingestion, processing, and delivery.

-

Machine Learning: Airflow can automate machine learning workflows, including data preprocessing, model training, and model deployment.

-

Job Scheduling: Airflow can schedule jobs to run at specific times or intervals, making it a great tool for automating routine tasks.

-

Batch Processing: Airflow can automate batch processing tasks, such as processing large datasets or running complex calculations.

-

Data Warehousing: Airflow can automate loading data into data warehouses, such as Amazon Redshift or Google BigQuery.

-

Cloud-based Data Processing: Airflow can automate cloud-based data processing tasks, such as processing data in AWS S3 or Google Cloud Storage.

-

Data Integration: Airflow can be used to integrate data from multiple sources and systems, such as integrating data from multiple APIs or databases.

-

DevOps Automation: Airflow can automate DevOps tasks such as deploying code changes or running automated tests.
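The ETL use case can be sketched in plain Python to show the idea behind a workflow defined as a directed acyclic graph: each task declares its upstream tasks, and the tasks run in dependency order. This is not Airflow's API. It uses `graphlib.TopologicalSorter` from the standard library (Python 3.9+) as a stand-in for the scheduler, and the task functions and sample rows are hypothetical.

```python
from graphlib import TopologicalSorter


def extract():
    """Pretend to pull raw rows from a source system."""
    return [{"name": " Ada ", "amount": "10.5"}, {"name": "Grace", "amount": "4"}]


def transform(rows):
    """Clean the raw rows: trim names and convert amounts to floats."""
    return [{"name": r["name"].strip(), "amount": float(r["amount"])} for r in rows]


def load(rows, target):
    """Append the cleaned rows to the target and report how many were loaded."""
    target.extend(rows)
    return len(rows)


warehouse = []  # stands in for the target system

# Each task maps to the set of tasks that must finish before it starts.
dependencies = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

# Each task receives the results of the tasks that already ran.
tasks = {
    "extract": lambda done: extract(),
    "transform": lambda done: transform(done["extract"]),
    "load": lambda done: load(done["transform"], warehouse),
}

order = list(TopologicalSorter(dependencies).static_order())

done = {}
for name in order:
    done[name] = tasks[name](done)

print(order)
print(warehouse)
```

In Airflow, the same shape would be expressed as tasks inside a DAG, with the scheduler deciding when each task runs and retrying failures.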
