Steps for Hyperparameter Tuning

-

When developing a hyperparameter tuning work, the metrics are specified for testing a training task. Only 20 criteria can be specified for a single task; the parameters have a unique name and regular expression to extract information from the logs.

-

The defined hyperparameter ranges for the parameter type, i.e., a distinction between the parameters in the ParameterRanges JSON object.

-

Create a notebook on SageMaker and connect with SageMaker’s Boto3 client.

-

Specify the bucket and data output location and launch the configured hyperparameter tuning job defined in steps A and b.

-

You can monitor the progress of concurrently running hyperparameter tuning jobs and find the best model on SageMaker’s console by clicking on the best training job.

Amazon SageMaker Best Practices

-

Defining the number of parameters: SageMaker allows using 20 parameters in a hyperparameter tuning job to limit the search space and find the best variables for a model.

-

Defining the range of hyperparameters: Defining a broader range for hyperparameters allows finding the best possible values, but it is time-consuming. Find the best value by limiting the range of benefits and limiting the search space for that range.

-

Logarithmic scaling of hyperparameters: If the search space is small, define hyperparameters' scaling as linear. If the search space is a large opt logarithmic scaling, it decreases the running time of jobs.

-

Finding the best number of concurrent training jobs: More work is done quickly in concurrent jobs, but the tuning jobs depend upon the results of previous runs. In other words, running a job can achieve the best results with the least amount of computing time.

-

Running training jobs on multiple instances: Running a training job on multiple instances uses the last-reported objective metric. To examine all the parameters, design a distributed training job architecture to get the logs of the desired metric.

ML pipeline helps to automate ML Workflow and enable the sequence data to be transformed and correlated together in a model to analyzed and achieve outputs. Click to explore about, ML Pipeline Deployment and Architecture

What is Amazon SageMaker Studio?

SageMaker Studio is a fully functional Integrated Development Environment (IDE) for machine learning. It unifies all the key features of SageMaker. In SageMaker Studio, the user can write code in the notebook environment, perform visualization, debugging, model tracking, and monitor the model performance in a single window. It uses the following features of SageMaker.

Amazon SageMaker Debugger

The SageMaker debugger will monitor the values of feature vectors and hyperparameters. Store the logs of a debug job in CloudWatch, check the exploding tensors, examine the vanishing gradient problems, and save the tensor values in the s3 bucket. By placing SaveConfig from the debugger SDK at the instance where the value of the tensors needs to be checked and SessionHook will be associated at the start of every debugging job run.

Amazon SageMaker Model Monitor

SageMaker model monitors the model performance by examining the data drift. The defined constraints and statistics file of features are in JSON. The constraint.json file contains the list of features with their type, and the required status is defined by the completeness field, whose value ranges from 0-1.

The file contains the mean, median, quantile, etc., information for each feature. The reports are saved in s3 and can be viewed in detail under constraint_violations.json , which consists of feature names and type of violation (data type, min or max value of a feature, etc.)

Amazon SageMaker Model Experiment

Tracking several experiments (training, hyperparameter tuning jobs, etc.) is easier than SageMaker. Just initialize an Estimator object and log the experiment values. It is possible to import values stored in the experiment into a pandas data frame, which makes analysis easier.

Amazon SageMaker AutoPilot

ML using AutoPilot is just a click away. Specify the data path and the target attribute(regression, binary classification, or multi-class classification) type. If not specified, the built-in algorithms automatically specify the target type and run the data preprocessing and model according to it. The data preprocessing step automatically generates Python code to use for further jobs. A defined custom pipeline is used for it. DescribeAutoMlJob API.

The Objective of the Machine learning pipeline is to exercise control over the ML model. Source – Machine Learning Pipeline

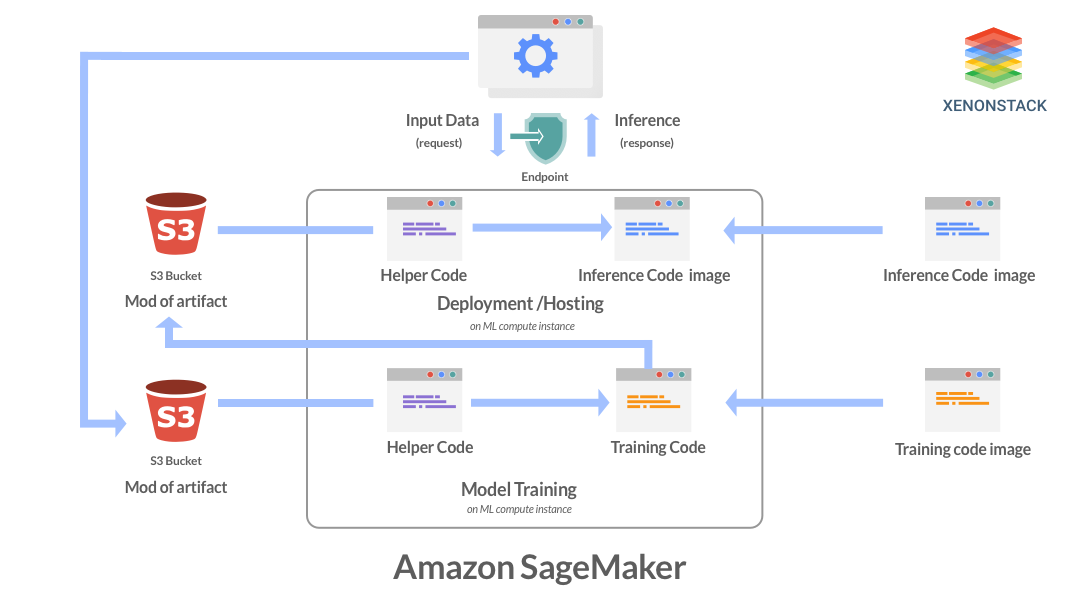

Running custom training algorithms

-

Run the dockerized training image on SageMaker

-

The SageMaker calls a

CreateTrainingJobthe function which runs training for a specific period -

Specify the hyperparameters in

TrainingJobName -

Check the status by

TrainingJobStatus

Security at SageMaker

-

Cloud Security: AWS uses a shared responsibility model, which involves security in the cloud by AWS for securing the infrastructure and security of the cloud, which involves the services opted by a customer, IAM key management, and privileges to different users, keeping the credentials secure, etc.

-

Data Security: SageMaker keeps the data and model artefacts encrypted in transit and elsewhere. Requests for a secure (SSL) connection to the Amazon SageMaker API and console are encrypted. Encrypted notebooks and scripts use the AWS KMS (Key Management Service) Key. If the key is unavailable, they are encrypted using the transient key. After the decryption, this transient key becomes obsolete.

What are the advantages of SageMaker?

-

It uses a debugger in training that has a specified range of hyperparameters automatically.

-

Helps to deploy End-to-end ML pipeline quickly.

-

It helps to deploy ML models at the edge using SageMaker Neo.

-

ML compute instance suggests the instance type while running the training.

Our solutions cater to diverse industries, focusing on serving ever-changing marketing needs. Click to explore our Machine Learning Services for Productionizing Models

Conclusion

AWS charges each SageMaker customer for the computation, storage, and data processing tools used to build, train, perform, and log machine learning models and predictions, along with the S3 costs to maintain the data sets used for training and ongoing predictions. The SageMaker framework's design supports ML applications' end-to-end lifecycle, from model data creation to model execution, and the scalable construction also makes it versatile. That means you can use SageMaker independently for model construction, training, or deployment.

Discover more about Cloud Managed Services

Explore More about Kubernetes Managed Platforms

Know More about Managed Security Services

.webp?width=1921&height=622&name=usecase-banner%20(1).webp)