What is Apache Beam?

Apache Beam is an open-source, unified programming model from the Apache Software Foundation. It is used to define and execute data processing pipelines, including ETL (Extract, Transform, Load) jobs and both batch and stream processing. The model was written in two programming languages, Java and Python.

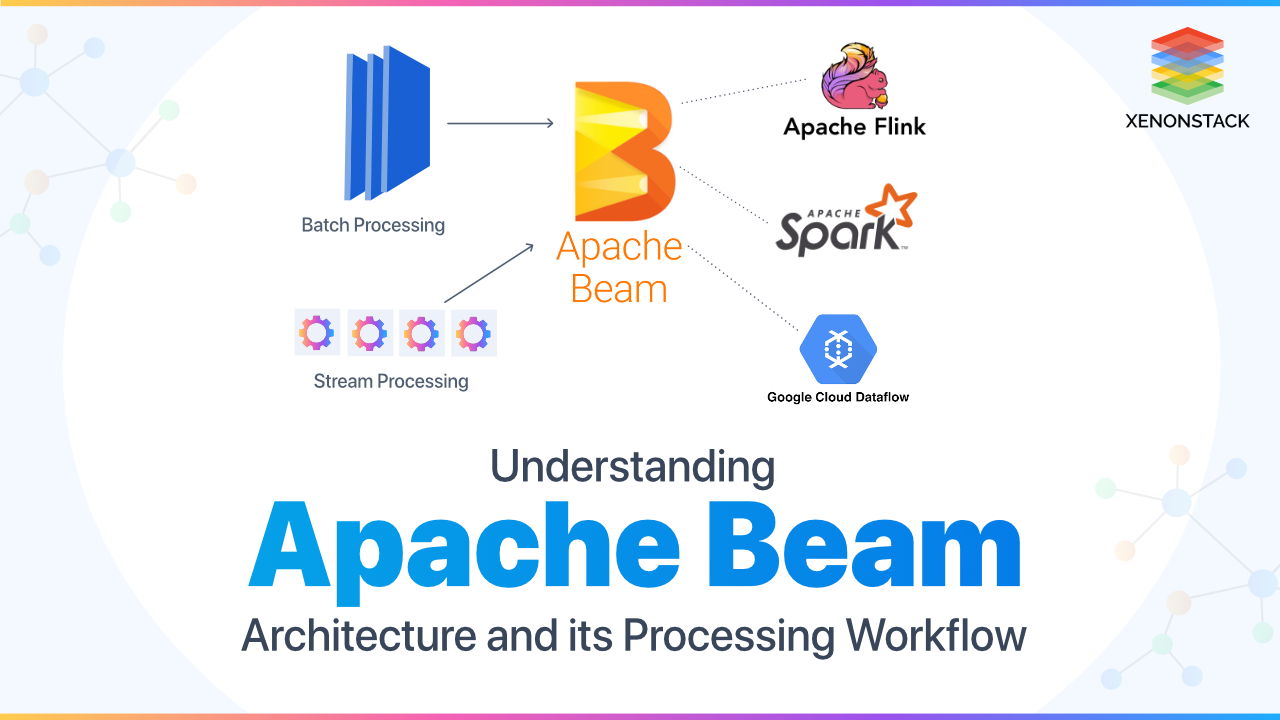

The initial version was released in June 2016, and stable release 2.7.0 followed in October 2018. With any of the open-source Beam SDKs, we can build a program that defines a pipeline. The pipeline is then executed by one of Beam's distributed processing back ends, through runners such as:

- Apache Flink

- Apache Spark

- Apache Apex

- Google Cloud Dataflow


Why is it important?

Beam exists to support parallel data processing tasks, in which the problem can be split into many smaller chunks of data that are processed independently and in parallel. An advantage of the Beam SDKs is that they can transform a data set of any size, whether the input is finite (from a batch data source) or infinite (from a streaming data source). Another benefit is that the Beam SDKs use the same classes to represent both bounded and unbounded data. Currently, Beam supports the following language-specific SDKs:

- Java

- Python

- Go

While creating a Beam pipeline, we work with the following abstractions:

- Pipeline - A pipeline encapsulates the entire data processing task from start to end: reading the input data, transforming it, and writing the output. When we create a pipeline, we must supply execution options, which tell the pipeline where and how to run.

- PCollection - As the name suggests, it represents a distributed data set on which the Beam pipeline operates. The data set can be bounded or unbounded, i.e., it can come from a fixed source or from a continuously updating source, via a subscription or some other mechanism.

- PTransform - It represents a data processing operation, or transform. Every PTransform takes one or more PCollection objects as input, applies the processing function that we provide, and produces zero or more PCollection objects as output.
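As a rough illustration of these three abstractions, here is a minimal sketch using the Beam Python SDK. The choice of the local DirectRunner, the sample words, and the helper name `word_length` are assumptions made for this example, not something prescribed by the text above.

```python
def word_length(word):
    """Per-element logic (hypothetical helper): pair a word with its length."""
    return (word, len(word))


def build_pipeline():
    """Build, but do not run, a tiny pipeline that shows the three abstractions."""
    # Imported here so that word_length stays usable without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    # Execution options tell the pipeline where and how to run.
    # DirectRunner (local execution) is an assumption for this sketch.
    options = PipelineOptions(["--runner=DirectRunner"])

    # Pipeline: the whole data processing task, from input to output.
    pipeline = beam.Pipeline(options=options)

    # PCollection: a data set owned by the pipeline (bounded in this case).
    words = pipeline | "CreateWords" >> beam.Create(["beam", "flink", "spark"])

    # PTransform: takes a PCollection as input and produces a new PCollection.
    lengths = words | "WordLength" >> beam.Map(word_length)

    return pipeline, lengths
```

Building the pipeline this way only describes the work. Nothing is executed until the pipeline's `run` method is called.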


What is the Pipeline Development Life-cycle?

There are three significant stages in the pipeline development life cycle: designing, creating, and testing a pipeline.

Designing a pipeline - This stage involves determining the pipeline structure, choosing which transforms to apply to the data, and determining the input and output data. The questions to answer while designing a pipeline are:

- Where is the input data stored? How many sets of input data are there? The answers determine which kinds of Read transforms we need to apply at the beginning of the pipeline.

- What does the input data look like? For example, it can be plain text or rows in a database table.

- What do we want to do with the data? This covers how we change and manipulate the data, and determines how we build core transforms such as ParDo.

- Where should the data go? The answer determines which kinds of Write transforms we need to apply at the end of the pipeline.


Apache Beam Structure for Basic Pipeline

The simplest pipeline represents a linear flow of operations. However, a pipeline can have multiple input sources as well as multiple output sinks.

Creating a Pipeline

To construct a pipeline using the classes in the Beam SDKs, the program needs to perform the following steps:

- Create a pipeline object - In the Beam SDKs, each pipeline object is an independent entity that encapsulates both the data and the transforms that are applied to that data.

- Read data into the pipeline - There are two kinds of root transforms for this, Read and Create. A Read transform reads data from an external source such as a text file, and a Create transform builds a PCollection from in-memory data.

- Apply transforms to process pipeline data - Using the apply method on each PCollection, we can process our data with the various transforms in the Beam SDKs.

- Output the final transformed PCollections - After the pipeline has applied all of its transforms, it needs to output the data. To output a final PCollection, we apply a Write transform, which writes the elements of the PCollection to an external data sink such as a database.

- Run the pipeline - To execute the pipeline, we use the run method. If we would like to block until execution completes, we append a call to the waitUntilFinish method.

Testing a pipeline - Testing is one of the most essential parts of building a pipeline. Before running the pipeline on a distributed runner, we should unit test it. After that, we can run it at a small scale on a runner such as Flink. To support unit testing, the Beam SDK for Java provides several test classes in its testing package. Some of the things we can test are:

- Testing individual DoFn objects - The Beam SDK for Java provides a way to test a DoFn, called DoFnTester, which works with the JUnit framework. It is included in the SDK's transforms package.

- Testing composite transforms - To test a composite transform that we have created, we first create a pipeline and some known input data. We then apply the composite transform to the input PCollection and save the output PCollection, so that its contents can be checked.

- Testing a pipeline end to end - For end-to-end testing, the Beam Java SDK provides a class called TestPipeline. We use it in place of Pipeline when we create the pipeline object.
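The creation and testing steps above might look as follows in the Beam Python SDK. This is a sketch under a few assumptions: the sample names, the `normalize` helper, and the `output_prefix` parameter are hypothetical, and the check function uses the Python SDK's `TestPipeline`, `assert_that`, and `equal_to` rather than the Java test classes described above. In Python, transforms are applied with the `|` operator instead of an apply method, and the blocking call is named `wait_until_finish`.

```python
def normalize(name):
    """Per-element logic, kept as a plain function so it is easy to unit test."""
    return name.strip().title()


def run_pipeline(output_prefix):
    """Create, fill, transform, write, and run a small pipeline."""
    # Imported here so that normalize stays testable without Beam installed.
    import apache_beam as beam

    # 1. Create the pipeline object (default options, local execution).
    pipeline = beam.Pipeline()

    # 2. Read data into the pipeline. Create builds a PCollection from
    #    in-memory data; a Read transform would use an external source.
    raw = pipeline | "CreateNames" >> beam.Create([" alice", "BOB ", "anna"])

    # 3. Apply transforms to process the data.
    clean = raw | "Normalize" >> beam.Map(normalize)

    # 4. Write the final PCollection to text files named after output_prefix.
    clean | "WriteNames" >> beam.io.WriteToText(output_prefix)

    # 5. Run the pipeline and block until it has finished.
    pipeline.run().wait_until_finish()


def check_normalize_in_pipeline():
    """Pipeline-level check with known input data and expected output."""
    import apache_beam as beam
    from apache_beam.testing.test_pipeline import TestPipeline
    from apache_beam.testing.util import assert_that, equal_to

    with TestPipeline() as p:
        output = p | beam.Create([" alice", "BOB "]) | beam.Map(normalize)
        assert_that(output, equal_to(["Alice", "Bob"]))
```

Keeping the per-element logic in a plain function such as `normalize` means it can be unit tested on its own, before any runner is involved.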


Branching PCollection

Transforms do not consume a PCollection. Instead, a transform considers each element of the PCollection and creates a new PCollection as output. The advantage is that we can apply different operations to different elements of the same PCollection. A pipeline can branch in the following ways:

Multiple Transforms Process the Same PCollection

We can use the same PCollection as the input to multiple transforms without consuming or altering it. For example, suppose a pipeline reads its input from a database table, creates a PCollection of names from it, and then applies two transforms to that PCollection. Transform ‘A’ outputs all the names that start with the letter ‘A’, and transform ‘B’ outputs all the names that start with the letter ‘B’, even though both transforms have the same PCollection as input.

Single Transform that Produces Multiple Outputs

Another way to branch a pipeline is to have a single transform produce multiple PCollections as output. For example, a single transform can add the names that start with the letter ‘A’ to the main output and the names that start with the letter ‘B’ to an additional output PCollection.
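Both branching styles can be sketched with the Beam Python SDK. The function names, the output tags `a_names` and `b_names`, and the assumption that `names` is a PCollection of strings are all hypothetical choices for this example.

```python
def starts_with(name, letter):
    """Predicate shared by both branching styles."""
    return name.startswith(letter)


def branch_with_two_transforms(names):
    """Two transforms read the same input PCollection."""
    import apache_beam as beam

    # The input is not consumed, so it can feed both filters.
    a_names = names | "FilterA" >> beam.Filter(starts_with, "A")
    b_names = names | "FilterB" >> beam.Filter(starts_with, "B")
    return a_names, b_names


def branch_with_one_transform(names):
    """A single transform emits a main output and an additional output."""
    import apache_beam as beam

    def tag_by_letter(name):
        if starts_with(name, "A"):
            # Untagged elements go to the main output.
            yield name
        elif starts_with(name, "B"):
            # Tagged elements go to the additional output.
            yield beam.pvalue.TaggedOutput("b_names", name)

    results = names | "SplitByLetter" >> beam.FlatMap(tag_by_letter).with_outputs(
        "b_names", main="a_names"
    )
    return results.a_names, results.b_names
```

In the second function, names that start with neither letter are simply dropped, because `tag_by_letter` yields nothing for them.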

Merging PCollections

For merging two or more PCollections into a single one, there are two transforms available:

Flatten - This transform in the Beam SDKs merges two or more PCollections of the same type.

Join - To perform a relational join between two or more PCollections, we can use the CoGroupByKey transform. The condition for using it is that the PCollections must consist of key/value pairs and share the same key type.
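The two merging transforms can be sketched in the Beam Python SDK as follows. The contact data model (emails and phone numbers keyed by a person's name) and all function names are assumptions for illustration.

```python
def format_contact(element):
    """Format one join result of the form (key, {"emails": [...], "phones": [...]})."""
    name, grouped = element
    emails = sorted(grouped["emails"])
    phones = sorted(grouped["phones"])
    return f"{name}: emails={emails} phones={phones}"


def merge_same_type(first, second):
    """Flatten: merge PCollections that hold elements of the same type."""
    import apache_beam as beam

    return (first, second) | "MergeAll" >> beam.Flatten()


def join_contacts(emails, phones):
    """CoGroupByKey: relational join of key/value PCollections on a shared key."""
    import apache_beam as beam

    # Both inputs are assumed to hold (name, value) pairs.
    return (
        {"emails": emails, "phones": phones}
        | "JoinByName" >> beam.CoGroupByKey()
        | "FormatContact" >> beam.Map(format_contact)
    )
```

After the join, each key appears once, together with all of the values found for it in each input.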

Multiple Transforms

Transforms are the operations that we apply in our pipelines, and they provide a general processing framework. Depending on the pipeline runner and back end that we choose, many workers across a cluster can execute instances of our user code in parallel. The code running on each worker produces output elements, which are added to the final output PCollection. To invoke a transform, we use the apply method, and when we invoke several transforms one after another, this is known as method chaining. Some of the core Beam transforms supported by its SDKs are as follows:

- ParDo

- GroupByKey

- CoGroupByKey

- Combine

- Flatten

- Partition
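As a hedged sketch of how some of these core transforms chain together in the Beam Python SDK, the following counts words and then splits the counts into two groups. The word-count scenario, the helper names, and the length threshold of five characters are assumptions for this example. `beam.FlatMap` and `beam.Map` are convenience wrappers around ParDo.

```python
def split_words(line):
    """Per-element logic for the ParDo step: one line in, many words out."""
    return line.lower().split()


def by_length(word_count, num_partitions):
    """Partition function: 0 for short words, 1 for long ones.

    num_partitions is passed in by Partition and is not needed here.
    """
    word, _count = word_count
    return 0 if len(word) < 5 else 1


def count_words(lines):
    """Chain several core transforms on a PCollection of text lines."""
    import apache_beam as beam

    counts = (
        lines
        | "SplitWords" >> beam.FlatMap(split_words)        # ParDo
        | "PairWithOne" >> beam.Map(lambda word: (word, 1))  # ParDo
        | "SumPerWord" >> beam.CombinePerKey(sum)          # Combine
    )

    # Partition splits one PCollection into a fixed number of PCollections.
    short_words, long_words = counts | "ByLength" >> beam.Partition(by_length, 2)
    return short_words, long_words
```

Each `|` applies the next transform to the output of the previous one, which is the Python SDK's form of method chaining.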


Apache Beam vs Apache Spark vs Apache Flink

Apache Beam, Spark, and Flink can be compared on several bases. The first is what is being computed. GroupByKey, which is used for processing key/value pairs, is supported in Beam and Flink but only partially supported in Spark. Composite transforms, which break a complex transform into simpler ones and make the code modular and easier to understand, are supported in Beam but only partially supported in Spark and Flink.

| | Apache Beam | Apache Flink | Apache Spark |
|---|---|---|---|
| GroupByKey | Supported | Supported | Partially Supported |
| Composite | Supported | Partially Supported | Partially Supported |
| Stateful Processing | Supported | Partially Supported | Partially Supported |

The second basis is windowing:

| | Apache Beam | Apache Flink | Apache Spark |
|---|---|---|---|
| Global Windows | Supported | Supported | Supported |
| Fixed Windows | Supported | Supported | Supported |
| Sliding Windows | Supported | Supported | Supported |

The third basis is triggering:

| | Apache Beam | Apache Flink | Apache Spark |
|---|---|---|---|
| Configurable Triggering | Supported | Supported | Not Supported |
| Event Time Triggers | Supported | Supported | Not Supported |
| Processing Time Triggers | Supported | Supported | Supported |

A Strategic Approach for Distributed Processing

Apache Beam can be applied in many use cases. One example is a mobile game with some complex functionality, where several pipelines make the complex tasks easier to implement. In this example, many users play the game at the same time from different locations, and users can also play in teams whose members are in different locations. The game therefore generates a large amount of data, and the time at which a score event is processed can differ from the time at which it actually occurred. This difference between processing time and event time is called skew. Beam pipelines let us account for this skew, so that the computed scores do not depend on when the data happens to arrive. In this example, we can use pipelines such as UserScore and HourlyTeamScore. HourlyTeamScore divides the input data into logical windows and performs its operations on those windows, where each window represents the game score progress over a fixed interval of time.
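The windowing idea behind a pipeline like HourlyTeamScore can be sketched in the Beam Python SDK as shown here. This is not the code of the original example: the event layout (a dict with `team`, `score`, and `timestamp` in seconds since the epoch) and the function names are assumptions.

```python
HOUR_SECONDS = 60 * 60


def to_team_score(event):
    """Turn a raw game event (hypothetical dict layout) into a (team, score) pair."""
    return (event["team"], event["score"])


def hourly_team_score(events):
    """Sum scores per team within fixed one-hour windows of event time."""
    import apache_beam as beam

    return (
        events
        # Attach each event's own time, so windows follow event time
        # rather than the time at which the event is processed.
        | "AddEventTime" >> beam.Map(
            lambda e: beam.window.TimestampedValue(e, e["timestamp"])
        )
        # Divide the data into logical windows of a fixed size.
        | "HourlyWindows" >> beam.WindowInto(beam.window.FixedWindows(HOUR_SECONDS))
        | "ToTeamScore" >> beam.Map(to_team_score)
        # The sum is computed per team and per window.
        | "SumScores" >> beam.CombinePerKey(sum)
    )
```

Because the windows are assigned from the event timestamps, an event that is processed late is still counted in the hour in which it occurred.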
