What is the Hadoop Distributed File System (HDFS)?

The Hadoop Distributed File System (HDFS) is a distributed file system that can store files of any type. It accepts all common formats, such as GIF, text, CSV, TSV, and XLS. Beginners with Hadoop can opt for tab-delimited files (data separated by tabs) because this format is:

- Easy to debug and readable

- The default format of Apache Hive

What is the Data Serialization Storage format?

Storage formats define how information is stored in a file. Most of the time, the format can be inferred from the file extension. Both structured and unstructured data can be stored on Hadoop-enabled systems. Common HDFS file formats are:

- Plain text storage

- Sequence files

- RC files

- AVRO

- Parquet

Why Storage Formats?

- The file format must be able to represent complex data structures

- HDFS-enabled applications need to find relevant data in a particular location and write data back to another location

- Dataset is large

- Having schemas

- Having storage constraints


Why choose different File Formats?

Proper selection of a file format leads to:

- Faster read time

- Faster write time

- Splittable files (for partial data read)

- Schema evolution support (modifying dataset fields)

- Advanced compression support

- Snappy compression offers high speed with a reasonable compression ratio.

- File formats help to manage diverse data.

How to enable data serialization in Hadoop?

- Data serialization is the process of converting structured data into a form from which it can later be restored to its original structure.

- Serialization translates data structures into a stream of data. This stream can be transmitted over the network or stored in a database regardless of the system architecture.

- Storing information in binary form, as a stream of bytes, is the right approach.

- Serialization does exactly that, in a way that does not depend on the system architecture.
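The idea of an architecture-independent byte stream can be sketched with Python's standard `struct` module. This is only an illustration, not how Hadoop itself serializes data: the record layout (`user_id`, `score`, `name`) and the function names are assumptions made for the example. Fixing the byte order explicitly (`>` for big-endian) is what makes the bytes readable on any machine.

```python
import struct

# Hypothetical record layout: uint32 id, float64 score, uint16 name length.
# ">" forces big-endian byte order and standard sizes, independent of the host CPU.
RECORD_FORMAT = ">IdH"


def serialize(user_id: int, score: float, name: str) -> bytes:
    """Turn a structured record into a stream of bytes."""
    encoded_name = name.encode("utf-8")
    header = struct.pack(RECORD_FORMAT, user_id, score, len(encoded_name))
    return header + encoded_name


def deserialize(payload: bytes):
    """Restore the original record from the byte stream."""
    header_size = struct.calcsize(RECORD_FORMAT)
    user_id, score, name_len = struct.unpack(RECORD_FORMAT, payload[:header_size])
    name = payload[header_size:header_size + name_len].decode("utf-8")
    return user_id, score, name


payload = serialize(42, 3.5, "hive")
restored = deserialize(payload)
```

The same `payload` bytes can be written to a file or sent over a socket and decoded by any reader that knows the layout.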

Why Data Serialization for Storage Formats?

- To process records faster (Time-bound).

- To maintain a proper data format when data is transmitted to a receiver that has no schema support.

- To avoid the complex errors that may occur later, when data without a defined structure or format has to be processed.

- Serialization offers data validation over transmission.

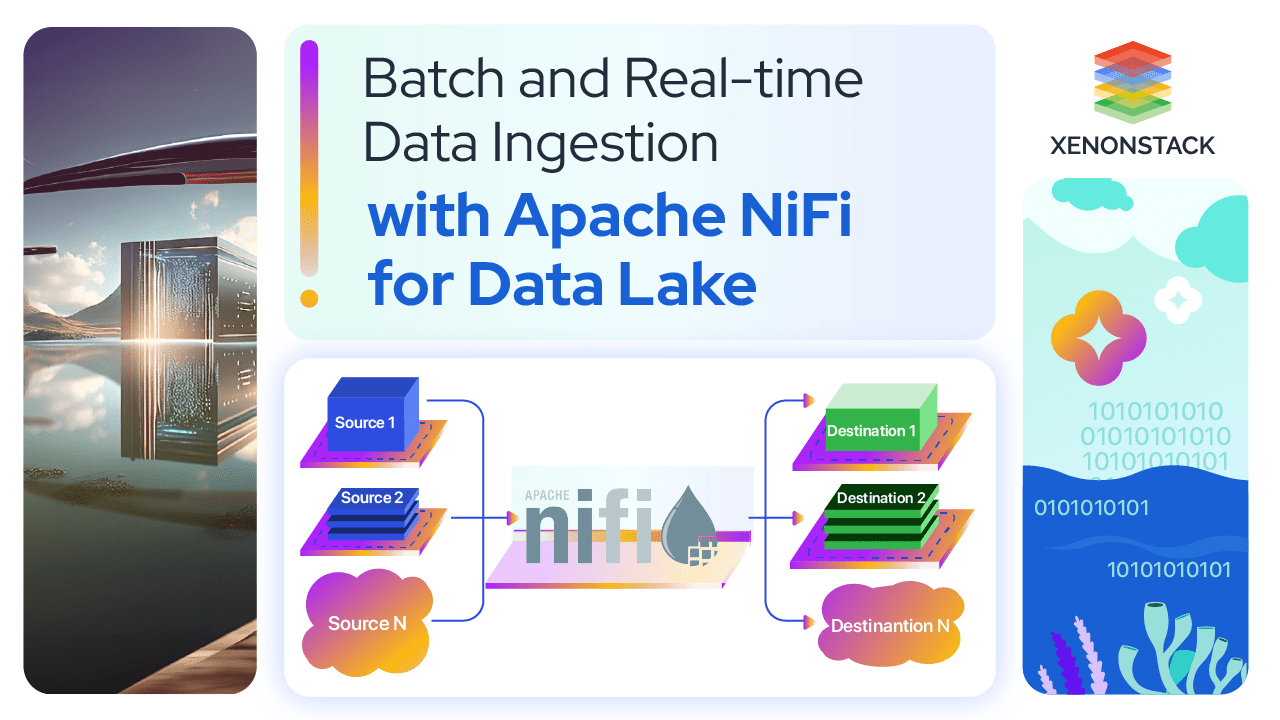


Areas of Serialization for Storage Formats

To maintain a proper data format, a serialization system must have the following four properties:

- Compact - helps in the best use of network bandwidth

- Fast - reduces the performance overhead

- Extensible - can match new requirements

- Inter-operable - not language-specific
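To make the "compact" property concrete, the sketch below encodes the same hypothetical sensor readings once as JSON text and once as fixed-width binary, then compares the sizes. The data and field names are invented for the example; real formats such as AVRO or Parquet use their own encodings.

```python
import json
import struct

# Hypothetical data: 100 (sensor_id, temperature) readings.
readings = [(i, 20.0 + i * 0.5) for i in range(100)]

# Text encoding: field names are repeated in every record.
as_json = json.dumps(
    [{"sensor_id": s, "temperature": t} for s, t in readings]
).encode("utf-8")

# Binary encoding: 4-byte unsigned int + 8-byte double per record, big-endian.
as_binary = b"".join(struct.pack(">Id", s, t) for s, t in readings)

# The binary form decodes back to exactly the same values.
decoded = list(struct.iter_unpack(">Id", as_binary))

print(len(as_json), "bytes as JSON;", len(as_binary), "bytes as binary")
```

The binary form needs 12 bytes per record, while the JSON form repeats the field names in every record and is therefore several times larger.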

Interprocess communication

When a client calls a function or subroutine on another machine in the network or on a server, that call is a remote procedure call (RPC).

Persistent storage

It is better than Java's built-in serialization, as Java serialization isn't compact. Serialization and deserialization of data help maintain and manage corporate decisions for effective use of the resources and data available in a data warehouse or any other database.

- Writable - language-specific to Java.

Stacking of Storage Formats in Hadoop

- Use splittable formats

- CSV files lack block compression, so using them in Hadoop may increase the cost of reads. Their schema support is also limited.

- Use JSON records instead of JSON files. For better performance, experiment with each JSON use case.

- AVRO is both a file format and a data serialization framework.

- Sequence files are complex to read.

- Write operations are slower if RC (Row-Columnar) files are in use.

- Optimized RC (ORC) files are also an option but have less support.

- Parquet files use a columnar format to store and process data.
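The advice to prefer JSON records over whole JSON files can be illustrated with a small sketch. When each record sits on its own line, a reader can start at an arbitrary byte offset, skip to the next line boundary, and parse only its share of the file. The `read_split` helper below is a hypothetical, simplified stand-in for what an input format does; it is not Hadoop code.

```python
import json

records = [{"id": i, "name": f"user{i}"} for i in range(5)]

# One JSON record per line; every line ends with a newline.
data = ("\n".join(json.dumps(r) for r in records) + "\n").encode("utf-8")


def read_split(data: bytes, start: int, end: int):
    """Parse the records whose first byte lies in [start, end)."""
    pos = start
    if start > 0:
        # Skip a record that began in the previous split: move to the
        # first record boundary at or after `start`.
        newline = data.find(b"\n", start - 1)
        if newline == -1:
            return []
        pos = newline + 1
    parsed = []
    while pos < end and pos < len(data):
        line_end = data.index(b"\n", pos)
        parsed.append(json.loads(data[pos:line_end]))
        pos = line_end + 1
    return parsed


middle = len(data) // 2
first_half = read_split(data, 0, middle)
second_half = read_split(data, middle, len(data))
```

Two workers reading the two halves see every record exactly once between them, which is not possible when the whole file is a single JSON document.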

Guide to Apache Hadoop File Format Configuration

- The Hadoop distribution and architecture type (the system on which Hadoop runs and processes jobs) must be analyzed thoroughly.

- Plan for schema evolution by defining the structure of your metadata.

- Identify the processing requirements for the file formats and configure the Hadoop system accordingly.

- Check engine support for read and write operations.

- Evaluate storage size at production level when selecting file formats.

- Text files are lightweight, but splitting those files and reading the data leads to heavy map tasks (the maps need a complicated way to split the text data).

- Sequence files support block compression, but because Hive works with SQL types, they are not a good fit for Hive.

- RCFILE has a high compression rate, but it takes more time to load data.

- ORC can reduce data size by up to 75% and is well suited to Hive, but it increases CPU overhead. Serialization in ORC depends on the data type (either integer or string).

- AVRO provides serialization and deserialization along with a file format. The core of AVRO is its schema. It supports dynamic and static types.

- Parquet can partition data into both rows and columns but is computationally slow on the write side.

- Avro is a data exchange and data serialization system. It is based on schemas.

- The schema is always stored in the AVRO file along with the data.

- It supports both specific and generic data.

- AVRO schemas are defined in JSON (most languages have JSON libraries), while the data format is binary, which makes it efficient and compact.

- A default schema can be set for cases where the schema is mismatched or fails.
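Part of what makes the AVRO binary format compact is that `int` and `long` values are written with zig-zag, variable-length encoding, so small numbers take a single byte. The functions below are a minimal pure-Python sketch of that encoding for illustration; the function names are my own, and production code would use an AVRO library instead.

```python
def encode_long(value: int) -> bytes:
    """Zig-zag and variable-length encode a signed 64-bit integer."""
    # Zig-zag maps small negative and positive numbers to small unsigned ones:
    # 0 -> 0, -1 -> 1, 1 -> 2, -2 -> 3, ...
    zigzag = (value << 1) ^ (value >> 63)
    out = bytearray()
    while True:
        low_bits = zigzag & 0x7F
        zigzag >>= 7
        if zigzag:
            out.append(low_bits | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low_bits)
            return bytes(out)


def decode_long(data: bytes) -> int:
    """Inverse of encode_long; reads from the start of `data`."""
    zigzag = 0
    shift = 0
    for byte in data:
        zigzag |= (byte & 0x7F) << shift
        if not byte & 0x80:
            break
        shift += 7
    return (zigzag >> 1) ^ -(zigzag & 1)


small = encode_long(1)
larger = encode_long(64)
```

Here `1` fits in one byte and `64` needs two, whereas a fixed-width long would always occupy eight bytes.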


Apache Avro Solution Offerings

At the same time, a few questions arise:

- Is it possible to handle any schema change? Yes. In AVRO, schema changes such as missing fields, changed or modified fields, and added or new fields are easy to maintain.

- What will happen to past data when there is a change in schema? AVRO can read data with a new schema regardless of when the data was generated.

- Does any problem occur while trying to convert AVRO data to other formats, if needed? The system has some built-in specifications for working with a hybrid data system. For example, Apache Spark can convert Avro files to Parquet.

- Is it possible to manage MapReduce jobs? MapReduce jobs can be handled efficiently by processing Avro files in MapReduce itself.

- What does the connection handshake do? The connection handshake helps to exchange schemas between client and server during RPC.
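How old data can be read with a newer schema is easier to see in code. The sketch below is a deliberately simplified, pure-Python imitation of record resolution: fields the reader does not know are dropped, and fields missing from the old record are filled from the reader schema's defaults. The `User` schema and the `resolve` function are hypothetical; an actual AVRO library applies more rules (type promotion, aliases, and so on).

```python
# Hypothetical reader schema: "email" was added after the old data was written.
READER_SCHEMA = {
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "email", "type": ["null", "string"], "default": None},
    ],
}


def resolve(record: dict, reader_schema: dict) -> dict:
    """Project a decoded record onto the reader schema (simplified)."""
    resolved = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in record:
            resolved[name] = record[name]       # field present in old data
        elif "default" in field:
            resolved[name] = field["default"]   # new field: use the default
        else:
            raise ValueError(f"no value or default for field {name!r}")
    return resolved                             # unknown old fields are dropped


# A record written with an older schema that still had "legacy_flag".
old_record = {"id": 7, "legacy_flag": True}
new_view = resolve(old_record, READER_SCHEMA)
```

A newly added field without a default cannot be resolved against old data, which is why defaults matter for schema evolution.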

How is AVRO different from other systems? (Thrift and protocol buffer)

- AVRO uses dynamic schemas and stores serialized values in a space-efficient binary format.

- The schema is written in JSON and stored in the file along with the data.

- No compilation is required: data can be read directly using the parser library.

- AVRO is a language-neutral system.

How to implement Avro?

- Have the schema of the data format ready

- Read the schema in the program in one of two ways:

- Compile it using AVRO (by generating a class)

- Read it directly via the parser library

- Achieve serialization through the serialization API (Java):

By generating a class - The schema is fed to the AVRO utility and processed into a Java file. Then write API methods to serialize the data.

By parser library - Feed the schema to the AVRO utility and use the parser library to serialize the data.

- Achieve deserialization through the deserialization API (Java):

By generating a class - Deserialize the object and instantiate the DataFileReader class.

By parser library - Instantiate the parser class.

Creating schema in Avro

There are three ways to define and create JSON schemas of AVRO:

- JSON string

- The JSON object

- JSON Array

AVRO data types fall into two groups:

- Primitive (common data types)

- Complex (when collective data elements need to be processed and stored)

- Primitive data types are null, boolean, int, long, float, double, bytes, and string. Complex types are records (attribute encapsulation), enums, arrays, maps (associativity), unions (multiple types for one field), and fixed (fixed-size data).

- Data types help to maintain sort order.

- Logical types (annotations on top of an underlying type) are used to store specific kinds of values, e.g., time, date, timestamp, and decimal.
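The type categories above can be seen together in one schema. The `Order` record below is a made-up example that uses a primitive, an enum, an array, a map, a union, a fixed type, and a logical type; the small `kind` helper is also hypothetical and only classifies each field so the structure is easy to inspect with Python's `json` module.

```python
import json

PRIMITIVES = {"null", "boolean", "int", "long", "float", "double", "bytes", "string"}

# Hypothetical AVRO schema written as a JSON object.
SCHEMA_JSON = """
{
  "type": "record",
  "name": "Order",
  "fields": [
    {"name": "id", "type": "long"},
    {"name": "status", "type": {"type": "enum", "name": "Status", "symbols": ["NEW", "SHIPPED"]}},
    {"name": "items", "type": {"type": "array", "items": "string"}},
    {"name": "attributes", "type": {"type": "map", "values": "string"}},
    {"name": "coupon", "type": ["null", "string"], "default": null},
    {"name": "checksum", "type": {"type": "fixed", "name": "MD5", "size": 16}},
    {"name": "created", "type": {"type": "long", "logicalType": "timestamp-millis"}}
  ]
}
"""


def kind(field_type) -> str:
    """Classify a field's type declaration (simplified)."""
    if isinstance(field_type, str):
        return "primitive" if field_type in PRIMITIVES else "named reference"
    if isinstance(field_type, list):
        return "union"  # a JSON array of types denotes a union
    if "logicalType" in field_type:
        return f"logical ({field_type['logicalType']})"
    return field_type["type"]  # record, enum, array, map, or fixed


schema = json.loads(SCHEMA_JSON)
kinds = {field["name"]: kind(field["type"]) for field in schema["fields"]}
```

Note how the union is written as a JSON array of types and the logical type as an annotation on an ordinary `long`.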

Apache Avro Use Cases

- Schema evolution.

- Apture is using AVRO for logging metrics data.

- RichRelevance is using binary formatted key/value pairs.


What is Apache Parquet?

Apache Parquet is a columnar storage format for the Hadoop ecosystem. It is available to any project in that ecosystem, regardless of the choice of data processing framework, data model, or programming language. Its main characteristics are:

- Provides a compressed and efficient representation of columnar data.

- Uses the record shredding and assembly algorithm from the Dremel paper.

- Compression schemes can be specified at the per-column level.

- Metadata is written after the data to allow single-pass writing.

- The file format contains column-wise data, which is split into row groups.

- Associations with data are kept in three types of metadata:

- File - Includes associations of schema element, key/value, and row group, which connect further to column chunks.

- Column (chunks) - Contains associations with KeyValue and is connected with every ColumnChunk under a RowGroup.

- Page header - Contains size information and associations with DataPageHeader, IndexPageHeader, and DictionaryPageHeader.

- Efficient encodings for primitive types can be reused through annotations that specify how the values are decoded and interpreted.

- Supports many engines such as Hive, Impala, and Spark.

Apache Parquet Capabilities

- Task-oriented serialization of data can be performed if some column-based decisions need to be managed.

- Data in 3 rows like r1(c1,c2,c3), r2(c1,c2,c3), r3(c1,c2,c3) is represented in columnar storage as 3 columns like c1(r1,r2,r3), c2(r1,r2,r3), c3(r1,r2,r3).

- Data is easy to partition by column.

- As data is compressed and metadata is associated, modifications and new additions to schema may reflect changes immediately without interruption.

- The columnar format can store the same primitive types of values together, forming a nested data structure (using Definition and repetition levels).

- Columnar storage provides more homogeneous data so that the compression rate will be better for the Hadoop cluster.

- ProtoBuf model can help to describe the data structure of the columnar format using a schema.

- Encoding and decoding of column data make data more compact and compressed in nature.
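The row-to-column rearrangement and its effect on compressibility can be sketched in a few lines. The sample rows are invented, and run-length encoding is used here only as a simple stand-in for a column encoding; it is not a reproduction of Parquet's actual on-disk layout.

```python
from itertools import groupby

# Hypothetical rows: (date, country, quantity)
rows = [
    ("2024-01-01", "US", 10),
    ("2024-01-01", "US", 12),
    ("2024-01-01", "DE", 7),
    ("2024-01-02", "DE", 7),
]

# Row layout -> column layout: values of the same column are stored together.
columns = [list(column) for column in zip(*rows)]


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


# Each column is encoded on its own, so repeated values collapse well.
encoded = [run_length_encode(column) for column in columns]
```

Because each column holds values of one type that often repeat, per-column encoding shrinks the date and country columns here from four entries to two.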

Causes of error in Parquet

- When any metadata is corrupt, the data associated with it is lost.

- Because file metadata is written at the end of the file, an error during writing may also cause data loss.

- When the structure of an external table is changed, it is unclear how the metadata information gets updated.

Apache Parquet Solution Offerings

- The approach with sync markers in RC and AVRO is usable here. Write file metadata at every Nth RowGroup, so that partially written data can be recovered after an error.

- Easy to integrate with AVRO, Thrift, and other models.

- When the PURGE option is not provided, managed tables can be retrieved from trash for a defined duration.


What is Apache Hive and its working architecture?

- Data warehouse software that provides fast processing of massive amounts of data.

- It can be used for both batch and streaming queries.

- Reads, writes, and manages large datasets in distributed systems using SQL.

- Requires a schema to process structured data and follows OLAP.

- SQL-type scripts, known as HQL, are developed for MapReduce operations.

- The execution engine uses MapReduce to process the query and generates results in the same way as MapReduce.

- Requires a relational database to back the Metastore (Apache Derby by default).

- Unstructured data must be converted into a proper structure before Hive can process it.

- Apache Hive offers a higher level of abstraction than Hadoop MapReduce, so far less code is needed.

Apache Hive Working Architecture

- First, a query is submitted to the driver for execution.

- The driver then sends a request to the compiler, which requests metadata from the Metastore.

- That metadata is then passed back to the driver. The execution plan is then given to the execution engine, which processes data from the Hadoop system containing the job tracker and HDFS with name nodes.

- After MapReduce finishes processing its tasks and jobs, the result is sent back to the driver and displayed in the user interface (CLI, web UI, etc.).

- Hive organizes data into Databases (namespaces), tables (schemas), partitions (each unit defines its meaning), and clusters (known as Buckets).

- HIVE has user-defined Aggregations (UDAFs), user-defined table functions (UDTFs), and user-defined functions (UDFs) to expand Hive's SQL.

Query Optimization with Apache Hive

- Use effective schema with partitioning tables

- Use compression on the map and reduce outputs

- Bucketing for increased performance on I/O scans

- Run MapReduce jobs in parallel

- Vectorization can be enabled to process a batch of rows together.

- Hive has built-in support for SerDes and allows users to develop custom SerDes.

- Shared and Exclusive locks can be used for optimizing resource use, and concurrent writers and readers are supported.
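The bucketing idea from the list above, hashing a column value and taking it modulo the number of buckets, can be sketched as follows. This is an illustration only: `zlib.crc32` is used as a stable stand-in hash and is not the hash function Hive uses, and the bucket count and sample rows are assumptions.

```python
import zlib
from collections import defaultdict

NUM_BUCKETS = 4  # hypothetical bucket count


def bucket_for(key: str, num_buckets: int = NUM_BUCKETS) -> int:
    """Map a key to a bucket: hash(key) mod num_buckets."""
    # CRC32 is only a deterministic stand-in, not Hive's own hash.
    return zlib.crc32(key.encode("utf-8")) % num_buckets


def bucketize(rows, key_index: int = 0):
    """Group rows by the bucket of their key column."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[bucket_for(row[key_index])].append(row)
    return buckets


rows = [("alice", 10), ("bob", 5), ("alice", 7), ("carol", 3), ("bob", 1)]
buckets = bucketize(rows)
```

All rows with the same key land in the same bucket, so a lookup or join on that key only has to scan one bucket instead of the whole table.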

Apache Hive Use Cases

- Amazon Elastic MapReduce.

- Yahoo is using Pig for data factory and Hive for data warehousing.

Apache Hive data types

- Column types (specific to columns only)

- Integral (TINYINT, SMALLINT, INT, and BIGINT)

- Strings (Varchar, Char)

- Date/time (TIMESTAMP, DATE)

- Decimals (immutable arbitrary precision, as in Java's BigDecimal)

- Literals (representation of fixed value)

- Floating

- Decimals (values higher than DOUBLE)

- NULL type

- Complex Types (Arrays, Maps, Structs, Union)

- Hive can also change the type of column data when hive.metastore.disallow.incompatible.col.type.changes is set to false. If the conversion is successful, the data is displayed; otherwise, it is shown as NULL.

Partitioning in Apache Hive

Partitioning is the technique of dividing tables into parts based on column data, the structure of the data, and the nature of the data according to its source of generation and storage:

- Partition keys help to identify partitions from a table

- Hive converts SQL queries into jobs of MapReduce to submit to the Hadoop cluster

- Created when data is inserted

- Manages data by default

- Maintains security at the schema level

- Best for temporary data

- The external warehouse directory needs to be mentioned when external tables are present.

- Apply security at the HDFS level

- When data is necessary even after the DROP statement

- Execution load is horizontal

- When data is stored internally in a Hadoop system, dropping a table permanently deletes both the metadata and the table data

Static partitioning in Apache Hive

- Static partitions are preferred when data files are large.

- Big files are generally generated in HDFS.

- Partition column values can be easily fetched from the filename

- The partition column value must be specified whenever a LOAD statement is executed.

- Each data file is inserted individually.

- Suitable when partitions are defined on only one or two columns

- Alteration is possible on static partitions

Dynamic partitioning in Apache Hive

- Every row of data is read and then routed to a specific partition of the table

- When ETL flow of data pipeline is necessary

- The partition column value need not be mentioned when LOAD statements are executed.

- Only single insert

- Loads data from a non-partitioned table

- It takes more time to load as data needs to be read row by row

- When the partition generation process isn't static

- Alteration isn't possible. Need to perform load operation again
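The row-by-row routing that dynamic partitioning performs can be sketched in plain Python. Hive lays partitions out as directories named `column=value`, and the partition column's value is taken from the path rather than stored with each row; the sketch mimics that layout with a dictionary. The function name and sample rows are hypothetical.

```python
from collections import defaultdict


def dynamic_partition(rows, partition_column: str) -> dict:
    """Route each row to a partition derived from its own column value."""
    partitions = defaultdict(list)
    for row in rows:  # every row is read, which is why loading is slower
        path = f"{partition_column}={row[partition_column]}"
        # The partition value lives in the path, so it is dropped from the row.
        data = {k: v for k, v in row.items() if k != partition_column}
        partitions[path].append(data)
    return dict(partitions)


# Rows from a hypothetical non-partitioned source table.
rows = [
    {"id": 1, "amount": 9.5, "country": "US"},
    {"id": 2, "amount": 3.0, "country": "DE"},
    {"id": 3, "amount": 7.25, "country": "US"},
]
layout = dynamic_partition(rows, "country")
```

No partition value is supplied by the caller: the set of partitions is discovered from the data in a single pass, in contrast to static partitioning where each load names its partition explicitly.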


What are the features of Apache Hive?

- Interactive shell with essential Linux commands support

- Beeline CLI, a JDBC client on SQLLine (used at HiveServer2)

- Use AvroSerDe for Avro data in Hive tables and HiveQL for ORC file formats

- Hive does not support some AVRO data types, but AvroSerDe handles the conversion automatically

Test cases of various file formats in Hadoop

Test case outputs change, directly or indirectly, with the test cases, data model usage, and hardware specification and configuration.

- Based on space utilization: Kudu and Parquet give the best compression size with the Snappy and GZip algorithms.

- Based on the ingestion rate: AVRO and Parquet have the best write speed compared with the others.

- Based on lookup latency of random data: Kudu and HBase are faster than the others.

- Based on the scan rate of data: AVRO has the best scan rate when non-key columns are in the output.

Test Cases Output

- When used with Snappy compression, Parquet or Kudu reduces the data volume by a factor of ten compared with a simple uncompressed serialization format.

- Random lookup operations are best suited to HBase or Kudu. Parquet is also fast but uses more resources.

- Analytics on data is the best use case for Parquet and Kudu

- HBase and Kudu can alter data in place

- Avro is the quickest encoder for structured data. It is best when all attributes of a record are necessary.

- Parquet works seamlessly for scan and ingestion

SerDe in Apache Hive

Hive has built-in SerDes for Avro, ORC, Parquet, CSV, JSON (JsonSerDe), and Thrift. Custom SerDes are also supported. Hive engines automatically handle object conversion. Two types of processing are done for the serialization and deserialization of records.

Input Processing

- The execution engine uses the configured input format to read records

- It then performs deserialization

- The engine also holds an object inspector

- Deserialized objects and inspectors are then passed to operators by the engine to manipulate records.

Output Processing

- The serialize method of the SerDe uses the deserialized object and the object inspector

- It then serializes the object into the expected output format for writing
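The input and output paths can be sketched with a toy SerDe. Real Hive SerDes are Java classes; the Python class below is a hypothetical analogue that only mirrors the flow: a line is deserialized into a typed row, an operator manipulates it, and the row is serialized back for writing. The column list plays the role of the object inspector by describing each field's name and type.

```python
class DelimitedSerDe:
    """Hypothetical SerDe for delimited text; not part of Hive."""

    def __init__(self, columns, delimiter=","):
        # (name, type) pairs describe the row, similar in spirit to an
        # object inspector telling operators how to interpret each field.
        self.columns = columns
        self.delimiter = delimiter

    def deserialize(self, line: str) -> dict:
        """Input path: raw text read by the input format -> typed row."""
        parts = line.rstrip("\n").split(self.delimiter)
        return {name: cast(raw) for (name, cast), raw in zip(self.columns, parts)}

    def serialize(self, row: dict) -> str:
        """Output path: typed row -> text in the expected output format."""
        return self.delimiter.join(str(row[name]) for name, _ in self.columns)


serde = DelimitedSerDe([("id", int), ("name", str), ("score", float)])

row = serde.deserialize("7,hive,4.5\n")  # input processing
row["score"] += 1.0                      # an operator manipulates the record
line_out = serde.serialize(row)          # output processing
```

Swapping in a different SerDe changes how bytes map to rows without changing the operators that work on the rows.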
