In computing, the distribution of network traffic has a major impact on a system's overall effectiveness and reliability. As application usage grows, there is more traffic to handle, and the problem becomes harder to solve. Load balancing addresses it by spreading incoming traffic across a group of servers rather than letting it queue at a single one to await processing. Among the various load balancers, Katran, developed by Facebook, is notable as an L4 load balancer aimed at high-throughput, low-latency applications. In contrast to traditional load balancers that operate at higher layers of the OSI model, Katran is a transport-layer (TCP and UDP) load balancer that forwards packets based on IP address and port alone.

This blog will examine Katran's architecture, including its use cases, key features, implementation steps, strengths and weaknesses, and its relevance to modern distributed systems and cloud-native applications.

Importance of Load Balancing

With the current pace of digitization, organizations are finding it difficult to manage large, complex systems, whether global or regional. Whether it is a worldwide e-commerce engine processing millions of transactions per second, a media-on-demand service that must deliver content with little or no latency, or an online multiplayer game with live interactions, a service must always be available, fast, and elastic enough to adapt as traffic increases.

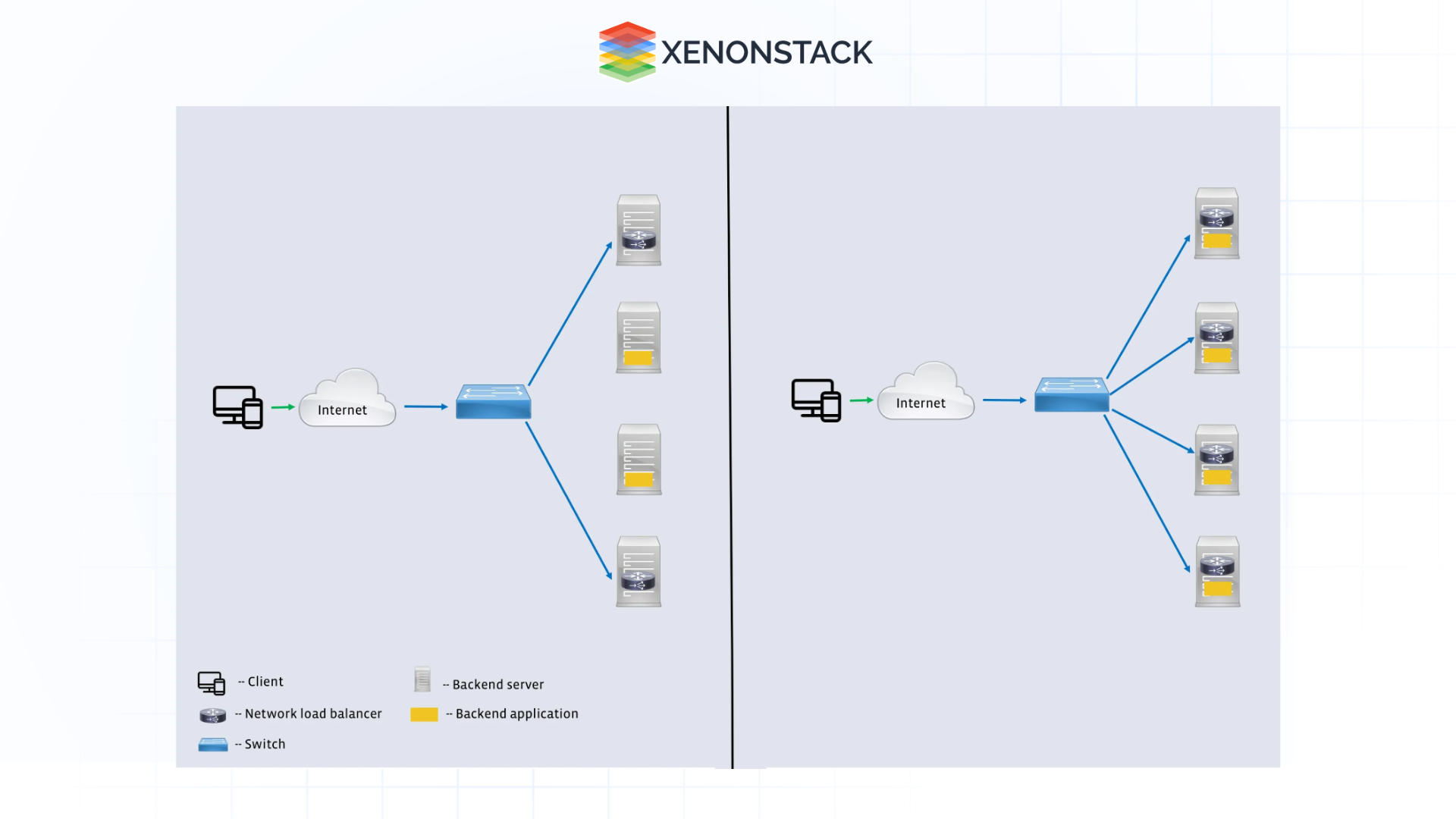

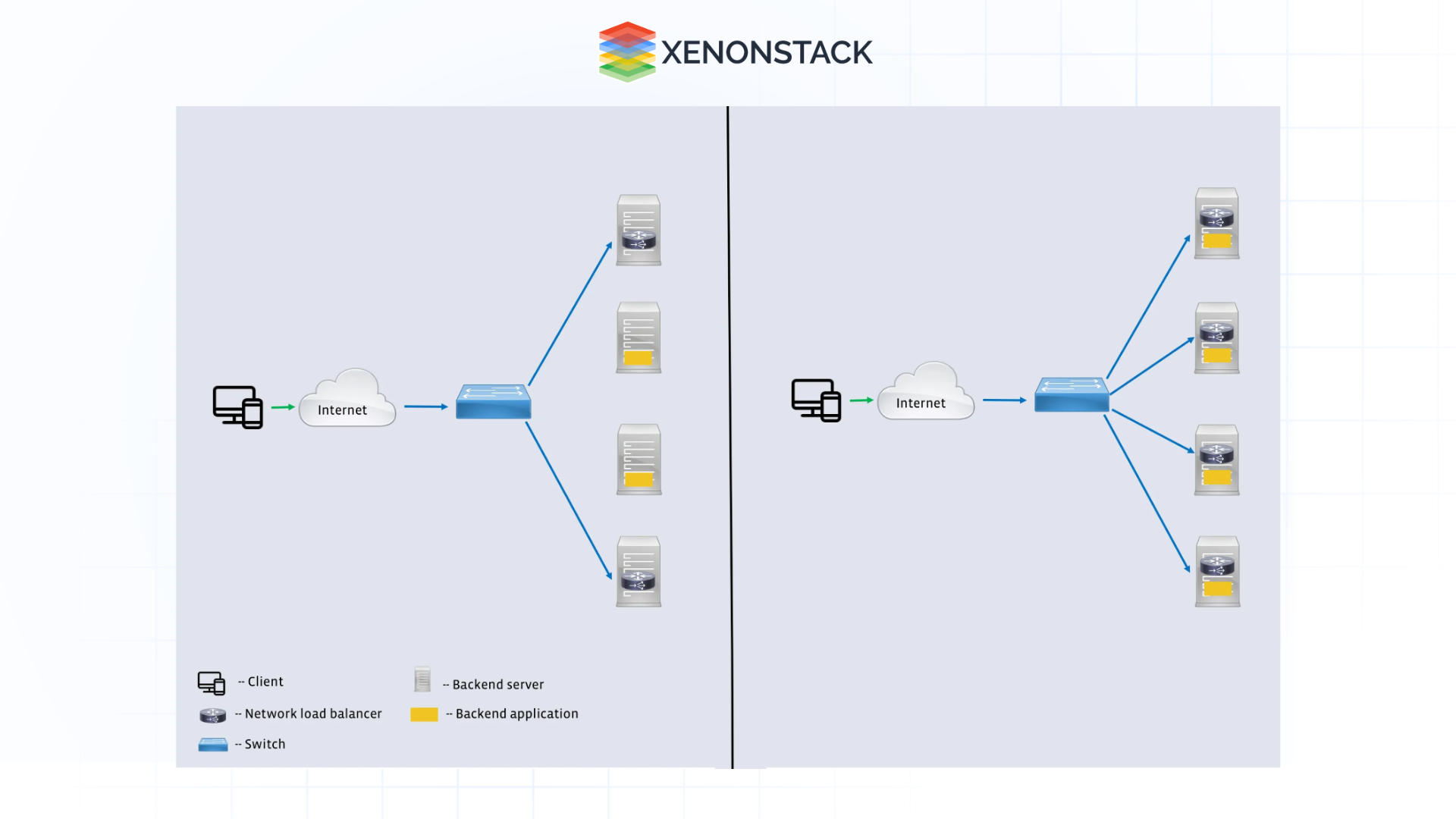

In this context, load balancing is essential. Load balancers take incoming network traffic and spread it over several servers, improving system availability by avoiding traffic bottlenecks and ensuring that end users do not suffer high latency.

Challenges with Traditional Load Balancers

Conventional Layer 7 (L7) load balancers operate at the application layer and inspect HTTP traffic in depth, including headers, URLs, cookies, and other application-level attributes, to make routing decisions. They also offer useful features such as SSL termination and content-based routing, but these make them more complex and add computational overhead under high throughput. This is where Katran, as an L4 load balancer, offers a simpler and more efficient solution.

Layer 4 Load Balancing

L4 load balancers, by contrast, operate at the transport layer, where traffic is routed based purely on IP addresses, ports, and protocols (TCP/UDP). In environments where deep packet inspection is not necessary, L4 load balancers like Katran excel because they can forward traffic with minimal processing, reducing latency and allowing the system to handle far more concurrent connections. For businesses dealing with high-volume traffic, where performance is paramount, L4 load balancing is ideal. Katran, in particular, is designed to handle high-performance systems with millions of packets per second while offering the scalability required to maintain high availability even in massive environments such as data centres and cloud infrastructures.
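To make the idea concrete, here is a minimal Python sketch of a forwarding decision that uses only transport-layer fields. It is an illustration under stated assumptions, not Katran's actual code: the `FiveTuple` type, the `pick_backend` helper, the SHA-256 hash, and the example addresses are all hypothetical choices made for this example.

```python
import hashlib
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    """Fields an L4 balancer can read without looking at the payload."""
    protocol: str   # "tcp" or "udp"
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def pick_backend(flow: FiveTuple, backends: Sequence[str]) -> str:
    """Map a flow to a backend using only its 5-tuple (hypothetical helper)."""
    if not backends:
        raise ValueError("backend pool is empty")
    # Hash the 5-tuple so every packet of the same flow gets the same answer.
    key = "|".join(str(value) for value in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


# Example addresses are placeholders from documentation ranges.
backends = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
flow = FiveTuple("tcp", "198.51.100.7", 40312, "203.0.113.10", 443)
chosen = pick_backend(flow, backends)
print(f"{flow.src_ip}:{flow.src_port} -> {chosen}")
```

No HTTP header, cookie, or URL is read at any point, which is why the per-packet cost stays low. A plain modulo like this reshuffles most flows when the pool size changes, so it should be read as the simplest possible form of the idea.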

Features of Katran

Katran deserves a closer look, as this edge load balancer is rapidly gaining momentum. Its features explain why it is emerging as a strong option for companies that need horizontally scalable, low-latency traffic management.

- High-Performance Routing

High-performance routing is one of Katran's strongest value propositions. Because it works at the transport layer, Katran can process and forward millions of packets per second with very little added delay. This matters for real-time games, live video conferencing, and systems such as financial exchanges, where transport-layer latency or failure can cause significant losses.

- Scalability

Katran was developed with scalability in mind. Its architecture can accommodate peak traffic, making it suitable for high-volume systems with hundreds or thousands of back-end servers. As traffic demand increases, Katran can scale out incrementally to absorb the load, allowing enterprises to grow without fear of performance bottlenecks in the load-balancing layer.

- Support of TCP/UDP Traffic

Katran supports both TCP and UDP traffic. This is especially important because it covers different kinds of network applications:

- Applications that use TCP, such as web services (HTTP, HTTPS), relational databases, or file transfer services.
- Applications that use UDP (User Datagram Protocol), such as DNS, VoIP telephone services, social games, or video-on-demand services.

This versatility is why Katran can be used in a wide range of scenarios, from regular websites to more specialized areas such as online gaming and voice over IP.
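One reason a single L4 balancer can serve both protocols is that TCP and UDP headers both begin with the source and destination ports, so the same few bytes identify a flow in either case. The sketch below parses them from a raw IPv4 packet. It is a simplified illustration: `parse_ipv4_flow` and `build_test_packet` are hypothetical helpers, and checksums, IP fragments, and IPv6 are deliberately ignored.

```python
import socket
import struct

IPPROTO_TCP = 6    # IANA-assigned protocol numbers
IPPROTO_UDP = 17


def parse_ipv4_flow(packet: bytes):
    """Return (protocol, src_ip, src_port, dst_ip, dst_port) for TCP or UDP."""
    if len(packet) < 20:
        raise ValueError("truncated IPv4 header")
    if packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    header_len = (packet[0] & 0x0F) * 4   # IHL is counted in 32-bit words
    if header_len < 20:
        raise ValueError("invalid IPv4 header length")
    protocol = packet[9]
    if protocol not in (IPPROTO_TCP, IPPROTO_UDP):
        raise ValueError("only TCP and UDP are handled")
    if len(packet) < header_len + 4:
        raise ValueError("truncated transport header")
    src_ip = socket.inet_ntoa(packet[12:16])
    dst_ip = socket.inet_ntoa(packet[16:20])
    # Both TCP and UDP start with source port, then destination port.
    src_port, dst_port = struct.unpack_from("!HH", packet, header_len)
    return protocol, src_ip, src_port, dst_ip, dst_port


def build_test_packet(protocol, src_ip, src_port, dst_ip, dst_port) -> bytes:
    """Build a minimal packet for the demo (checksum left at zero)."""
    ip_header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 28, 0, 0, 64, protocol, 0,
        socket.inet_aton(src_ip), socket.inet_aton(dst_ip),
    )
    transport = struct.pack("!HH", src_port, dst_port) + b"\x00" * 4
    return ip_header + transport


dns_query = build_test_packet(IPPROTO_UDP, "192.0.2.1", 5353, "198.51.100.2", 53)
print(parse_ipv4_flow(dns_query))
```

The same function handles a TCP packet without any protocol-specific branch for the ports, which is the point of the example.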

- Connection Persistence

Many applications need session persistence. Katran supports this with sticky sessions, so that requests from the same client are always directed to the same backend server.
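One common way to get this kind of stickiness without storing per-client state is to derive the backend from a hash of the client address. Katran's published design describes a Maglev-style consistent hash for this purpose; the sketch below uses rendezvous (highest-random-weight) hashing instead, purely because it is shorter to show. The function names and addresses are hypothetical.

```python
import hashlib
from typing import Sequence


def _score(client_ip: str, backend: str) -> int:
    """Deterministic pseudo-random weight for a (client, backend) pair."""
    digest = hashlib.sha256(f"{client_ip}|{backend}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def sticky_backend(client_ip: str, backends: Sequence[str]) -> str:
    """Pick the backend with the highest score for this client."""
    if not backends:
        raise ValueError("backend pool is empty")
    return max(backends, key=lambda backend: _score(client_ip, backend))


pool = ["10.0.0.11", "10.0.0.12", "10.0.0.13", "10.0.0.14"]
client = "198.51.100.7"
first = sticky_backend(client, pool)
second = sticky_backend(client, pool)
print(first == second)  # the same client maps to the same backend every time
```

A useful property of this scheme is that removing a backend only moves the clients that were assigned to it; every other client keeps its existing server.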

Implementation of Katran


Deploying Katran involves integrating it into an existing network topology, most often alongside conventional L7 load balancers rather than in place of them, and then configuring how it distributes traffic. Because Katran works at Layer 4 rather than Layer 7, installation is generally straightforward: it is concerned only with distributing traffic by IP address and port. The procedure for deploying Katran within a network is as follows:

- Hardware Requirement

Katran's high throughput and low latency are best realized on capable hardware. It needs a server with sufficient CPU and memory resources to process large-scale, intensive network traffic efficiently. The system is also typically configured with more than one network interface so that it can handle high bandwidth without becoming a bottleneck.

- Configuration

Katran is configured from user space through a control tool that defines how traffic will be distributed. Such a tool enables one to:

- Define backend server pools.
- Specify load-balancing policies (for example, round-robin or least connections).
- Define persistence requirements (e.g. sticky sessions).
- Configure health checks for backend servers so that only healthy servers receive traffic.

Katran's design leaves room to adjust routing policies depending on whether the goal is to absorb surges in demand or to restore fairness among different kinds of traffic, including during extreme events.
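The configuration steps above can be sketched as a small data model. This is a generic illustration, not Katran's configuration interface: the `BackendPool` class, its method names, and the round-robin policy are assumptions chosen to mirror the list, and backends with no health report yet are treated as unhealthy.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class BackendPool:
    """Hypothetical pool: a set of backends plus their last health result."""
    name: str
    addresses: List[str]
    health: Dict[str, bool] = field(default_factory=dict)
    _cursor: int = field(default=0, repr=False)

    def report_health(self, address: str, healthy: bool) -> None:
        """Record the outcome of a health check for one backend."""
        if address not in self.addresses:
            raise KeyError(address)
        self.health[address] = healthy

    def healthy_backends(self) -> List[str]:
        """Backends whose most recent health check passed."""
        return [a for a in self.addresses if self.health.get(a, False)]

    def next_backend(self) -> str:
        """Round-robin over healthy backends only."""
        candidates = self.healthy_backends()
        if not candidates:
            raise RuntimeError("no healthy backends in pool")
        choice = candidates[self._cursor % len(candidates)]
        self._cursor += 1
        return choice


pool = BackendPool("web", ["10.0.0.11", "10.0.0.12", "10.0.0.13"])
for address in pool.addresses:
    pool.report_health(address, True)
pool.report_health("10.0.0.12", False)   # simulate a failed health check
picks = [pool.next_backend() for _ in range(4)]
print(picks)
```

After the failed check, `10.0.0.12` stops receiving traffic until a later health report marks it healthy again.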

- Integration with Networking Infrastructure

Katran builds on existing Linux networking components and uses modern technologies such as eBPF (extended Berkeley Packet Filter) for fast packet processing and forwarding. This makes Katran well suited to cloud-based systems, particularly microservices architectures, where workloads and networks change frequently. Although Katran was first built for internal use at Facebook, it has since been released as open source.

Advantages and Disadvantages

Advantages

- Performance: Katran handles millions of packets per second (pps) at low latency, making it well suited to applications that require high performance, such as gaming, video streaming, or financial services.

- Simplicity: Katran sticks to Layer 4 and avoids the overhead that Layer 7 load balancing entails. This results in more efficient traffic forwarding and less processing time.

- Scalability: Katran's architecture favours horizontal scaling, which makes it suitable for applications that are growing rapidly, or are expected to, as well as for absorbing traffic spikes.

- Flexibility: Katran, being open source, can be customized and extended to fit a business's needs. This lets businesses avoid vendor lock-in and design their systems around their own requirements.

- Cost-Effective: Katran's open-source, lightweight design could provide a cheaper alternative to proprietary load-balancing solutions that incur licensing costs.

Disadvantages

- Limited Protocol Support: Katran operates at Layer 4 of the OSI model. Consequently, it does not provide the advanced application-layer features of L7 load balancers, such as SSL termination, cookie-based load balancing, or rewriting of HTTP headers.

- Complexity in Setup: Although the architecture is straightforward, initial installation and tuning can be complicated, especially in organizations without dedicated network personnel. Adapting and scaling Katran to a global, multi-region environment is not easy.

- Limited Ecosystem: Katran is a newer technology than established alternatives (like HAProxy, Nginx, or AWS Elastic Load Balancer). This means less support for integrations, which may make adoption harder for many organizations.

- Facebook-Specific Design: Katran was developed to meet Facebook's needs, which means it was probably designed with a data centre like Facebook's in mind. Companies whose infrastructure differs may run into integration issues.
