Overview of Kubernetes-Based Event-Driven Autoscaling

This article shows how to implement event-driven processing on Kubernetes using Kubernetes-Based Event-Driven Autoscaling (KEDA). The IT industry is moving towards event-driven computing, which has become popular because it keeps applications responsive to their users. Popular games such as PUBG and COD use this approach to give players quick and accurate responses, which results in a better user experience. But what is event-driven computing, and what role does serverless architecture play in it?

Event-driven computing is a computing model in which programs do their work in response to events such as user actions (a mouse click, a keypress), sensor output, and messages from other processes or threads. Because the load follows the rate of incoming events, such systems need autoscaling that is driven by those events, and serverless platforms are a common way to get it.

Serverless does not mean running code without a server; the name “serverless” is used because users don’t have to rent or buy a server for the backend code to run. The servers that run this code are managed entirely by a third party (the cloud provider).

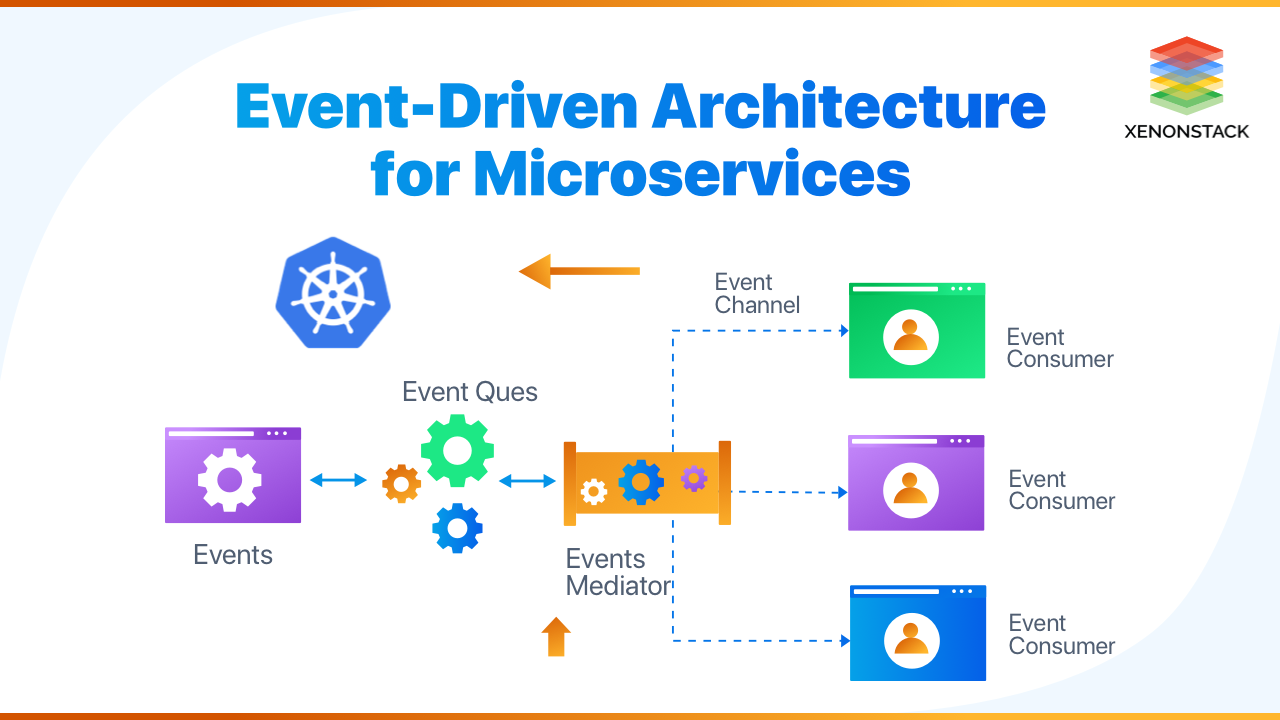

Event-driven and serverless architectures are defining a new generation of apps and microservices, and containers are no exception: containerized workloads and services are commonly managed with Kubernetes, an open-source orchestration tool. Autoscaling is an integral part of event-driven and serverless architecture. Although Kubernetes provides autoscaling, it does not natively support serverless-style, event-driven scaling. To let users build event-driven apps on top of Kubernetes, Red Hat and Microsoft joined forces and developed a project called KEDA (Kubernetes-Based Event-Driven Autoscaling). It is a step towards serverless Kubernetes and serverless on Kubernetes.

KEDA is a vital part of our serverless strategy. Together with the Azure Functions runtime, which is available as open source, it provides a serverless runtime and scaling functionality without vendor lock-in. It enables organizations to build hybrid serverless applications that span fully managed clouds and the edge, which was not possible earlier.

KEDA handles triggers that respond to events in other services and scales workloads according to those events. With KEDA, containers do not rely on HTTP routing to receive events; instead, they consume events directly from the source.

KEDA lets a Kubernetes cluster manage event-driven workloads effectively, and it natively provides scale-out and scale-to-zero capabilities. You can configure how often KEDA polls an event source for new messages and how it scales up and down in response to them.
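To make the polling and scale-to-zero behaviour concrete, here is a minimal Python sketch. It is not KEDA's actual implementation. It assumes a fixed polling interval and a cooldown period, loosely modelled on the `pollingInterval` and `cooldownPeriod` settings of a ScaledObject; the `ScalePolicy` class and the `simulate` function are hypothetical names introduced only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class ScalePolicy:
    """Hypothetical knobs, loosely modelled on KEDA's polling and cooldown settings."""
    polling_interval_s: int = 30   # how often the event source is checked
    cooldown_period_s: int = 300   # idle time required before dropping to zero


def simulate(queue_lengths, policy):
    """Replay one queue-length sample per poll.

    Returns a list of (elapsed_seconds, running) tuples, where `running`
    says whether the workload has at least one replica after that poll.
    """
    running = False
    idle_for = 0
    timeline = []
    for poll, pending in enumerate(queue_lengths):
        if pending > 0:
            running = True       # any pending event activates the workload
            idle_for = 0
        elif running:
            idle_for += policy.polling_interval_s
            if idle_for >= policy.cooldown_period_s:
                running = False  # idle long enough: scale back to zero
        timeline.append((poll * policy.polling_interval_s, running))
    return timeline


if __name__ == "__main__":
    policy = ScalePolicy(polling_interval_s=30, cooldown_period_s=60)
    for elapsed, running in simulate([0, 4, 0, 0, 0], policy):
        print(f"t={elapsed:>3}s running={running}")
```

In this toy model, a shorter polling interval reacts to new messages sooner, while a longer cooldown keeps the workload alive through brief lulls instead of scaling to zero and back.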

Example of Kubernetes Event-Driven Autoscaling

During the festive season, the number of users of shopping apps such as Amazon and Flipkart increases drastically, and thousands of users may be adding items to their shopping carts at the same time. KEDA continuously monitors the events these apps generate and scales them up according to the number of users adding items to their carts. When users finish shopping and the carts are empty, it scales the app’s resources back down.

KEDA contains a set of components that fetch metrics from a data source; these metrics determine how the environment is scaled. Supported sources include Kafka, RabbitMQ, Redis, Azure Service Bus, Azure IoT Hub, and Cosmos DB.
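The idea of turning a fetched metric into a replica count can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not KEDA's code: the `Scaler` protocol, the `InMemoryQueueScaler` class, and the `desired_replicas` function are hypothetical names, and the sizing rule (backlog divided by a per-replica target, rounded up and clamped) is a common way to reason about queue-based scaling rather than a description of KEDA internals.

```python
import math
from typing import Protocol


class Scaler(Protocol):
    """Hypothetical interface: one scaler per event source."""

    def metric_value(self) -> int:
        """Return the current backlog reported by the source."""
        ...


class InMemoryQueueScaler:
    """Stand-in for a real source such as Kafka or RabbitMQ."""

    def __init__(self, pending_messages: int) -> None:
        self.pending_messages = pending_messages

    def metric_value(self) -> int:
        return self.pending_messages


def desired_replicas(scaler: Scaler, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Size the workload so each replica handles about target_per_replica events."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(scaler.metric_value() / target_per_replica)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    for backlog in (0, 12, 1000):
        scaler = InMemoryQueueScaler(backlog)
        print(backlog, "pending ->", desired_replicas(scaler, target_per_replica=5), "replicas")
```

Swapping `InMemoryQueueScaler` for a class that queries a real broker would leave `desired_replicas` unchanged, which is the point of keeping the source-specific part behind a small interface.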

Kubernetes-Based Event-Driven Autoscaling Architecture

Scaler

A scaler is the KEDA component that connects to a specific event source and reads its metrics; the source can be any supported system, such as RabbitMQ or Kafka.

Metrics Adapter

The metrics adapter forwards the data read by the scaler to the Horizontal Pod Autoscaler (HPA), which provides horizontal autoscaling by automatically adjusting the number of Pods.

Controller

The controller is the last, crucial element of KEDA. It is often called the heart of KEDA because it handles scaling from 0 to 1 in Kubernetes. Scaling from 1 to n is done by the HPA, using the data read by the scaler. The controller also watches for new ScaledObject resources appearing in the cluster.

Custom Resources (CRD)

Installing KEDA creates two custom resource definitions in the Kubernetes cluster: scaledobjects.keda.k8s.io and triggerauthentications.keda.k8s.io (these are the names used by KEDA 1.x; later releases moved to the keda.sh API group). These two resources let us map an event source to a deployment or a job for scaling.

ScaledObject

A ScaledObject defines how KEDA should scale an application, and it also describes the triggers that drive the scaling. It is written as a YAML manifest.

Trigger Authentication

A TriggerAuthentication contains the authentication secrets or configuration needed to monitor an event source such as Kafka or RabbitMQ.

Deploying KEDA

Deploying with Helm

Add the KEDA Helm repository:

    helm repo add kedacore https://kedacore.github.io/charts

Update the Helm repositories:

    helm repo update

Install the KEDA Helm chart.

Helm 2:

    helm install kedacore/keda --namespace keda --name keda

Helm 3:

    kubectl create namespace keda
    helm install keda kedacore/keda --namespace keda

Uninstalling KEDA

By using Helm 3:

    helm uninstall -n keda keda
    kubectl delete -f https://raw.githubusercontent.com/kedacore/keda/master/deploy/crds/keda.k8s.io_scaledobjects_crd.yaml
    kubectl delete -f https://raw.githubusercontent.com/kedacore/keda/master/deploy/crds/keda.k8s.io_triggerauthentications_crd.yaml

By using Helm 2:

    helm delete --purge keda
    kubectl delete -f https://raw.githubusercontent.com/kedacore/keda/master/deploy/crds/keda.k8s.io_scaledobjects_crd.yaml
    kubectl delete -f https://raw.githubusercontent.com/kedacore/keda/master/deploy/crds/keda.k8s.io_triggerauthentications_crd.yaml

Kubernetes-Based Event-Driven Autoscaling in AWS SQS

Trigger specification

This section describes the aws-sqs-queue trigger, which scales based on an AWS SQS queue.

queueURL: the full URL of the SQS queue.

queueLength: the target value for ApproximateNumberOfMessages in the SQS queue.

awsRegion: the AWS region of the SQS queue.

Authentication parameters: the TriggerAuthentication CRD can be used to configure authentication by providing either a role ARN or a set of IAM credentials.
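As a rough illustration of how these parameters fit together, the Python sketch below builds a ScaledObject with an aws-sqs-queue trigger and a credential-based TriggerAuthentication as plain dictionaries, then prints them as JSON, a format kubectl also accepts. The field layout is an assumption based on the KEDA 1.x API group keda.k8s.io/v1alpha1; the resource names, the Secret name and keys, the deployment name, and the queue URL are hypothetical placeholders. Check the field names against the documentation for the KEDA version you run before applying anything.

```python
import json

API_VERSION = "keda.k8s.io/v1alpha1"  # assumption: KEDA 1.x API group


def sqs_trigger_auth(name, secret_name):
    """TriggerAuthentication that reads IAM credentials from a Kubernetes Secret."""
    return {
        "apiVersion": API_VERSION,
        "kind": "TriggerAuthentication",
        "metadata": {"name": name},
        "spec": {
            "secretTargetRef": [
                # The Secret name and keys are placeholders for illustration.
                {"parameter": "awsAccessKeyID", "name": secret_name,
                 "key": "AWS_ACCESS_KEY_ID"},
                {"parameter": "awsSecretAccessKey", "name": secret_name,
                 "key": "AWS_SECRET_ACCESS_KEY"},
            ]
        },
    }


def sqs_scaled_object(name, deployment, queue_url, queue_length, region, auth_name):
    """ScaledObject that scales `deployment` on the length of an SQS queue."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ScaledObject",
        "metadata": {"name": name, "labels": {"deploymentName": deployment}},
        "spec": {
            "scaleTargetRef": {"deploymentName": deployment},
            "triggers": [
                {
                    "type": "aws-sqs-queue",
                    "authenticationRef": {"name": auth_name},
                    "metadata": {
                        "queueURL": queue_url,
                        # Trigger metadata values are passed as strings.
                        "queueLength": str(queue_length),
                        "awsRegion": region,
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    auth = sqs_trigger_auth("example-aws-auth", "example-aws-secret")
    scaled = sqs_scaled_object(
        name="example-sqs-scaledobject",
        deployment="example-consumer",
        queue_url="https://sqs.eu-west-1.amazonaws.com/ACCOUNT_ID/QUEUE_NAME",
        queue_length=5,
        region="eu-west-1",
        auth_name="example-aws-auth",
    )
    print(json.dumps([auth, scaled], indent=2))
```

Building the manifests in code makes it easy to generate one ScaledObject per queue; for a single queue, a hand-written YAML file with the same fields is usually simpler.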

Role-based authentication

awsRoleArn: the Amazon Resource Name (ARN) that uniquely identifies the AWS role to use.

Credential-based authentication

awsAccessKeyID: the access key ID of the user.

awsSecretAccessKey: the secret access key used to authenticate the user.

Example of AWS SQS

KEDA on AWS Cloudwatch

Trigger Specification

This section describes the aws-cloudwatch trigger, which scales based on AWS CloudWatch metrics.

Authentication Parameter

The TriggerAuthentication CRD can be used to configure authentication by providing either a role ARN or a set of IAM credentials.

Role-based authentication

awsRoleArn: the Amazon Resource Name (ARN) that uniquely identifies the AWS role to use.

Credential-based authentication

awsAccessKeyID: the access key ID of the user.

awsSecretAccessKey: the secret access key used to authenticate the user.

Summary

KEDA is still in an early development phase, but it has the potential to be the next big thing in the Kubernetes world. One can already run Spark, Hadoop, and HDFS on Kubernetes. The future of Kubernetes is serverless, and KEDA is a considerable step towards serverless Kubernetes and serverless on Kubernetes: it enables any container to scale from zero to thousands of instances on the basis of event metrics. KEDA is an open-source project on GitHub and still needs time to mature. Its use is limited for now, but it is likely to grow as new scalers and triggers, such as Cosmos DB and Azure IoT Hub, continue to be added.