Introduction to MLOps

MLOps helps teams build and run models that are complete, accurate, and reliable. Using correct data and models is not enough for an AI system; it is equally essential to deploy and monitor models in a timely way. Akira AI provides an MLOps Roadmap with the capability to deploy, monitor, and govern a variety of ML models. With Akira AI, an ML model can be deployed in one click. Akira AI also gives users insights into their models, so developers can monitor them and take the necessary action when a problem such as feature drift, a fairness issue, or an accuracy drop is found.

MLOps is a set of processes that aim to deliver machine learning models to production and maintain them there reliably and efficiently. Taken from the article Top 7 Layers of MLOps Security

A Brief Explanation of MLOps Roadmap

Model Deployment: Developers can quickly deploy their system in one click using the Akira AI MLOps Roadmap. Several parameters need to be tracked during deployment, such as approval, the model version, and the model's target feature, and any deployment errors must be troubleshot. Deploying a model does not finish the developer's task. Organizations may run many deployments for many end customers, so it is equally essential for the developer to analyze those deployments and their health. Akira AI offers insights that make deployments easier to understand.

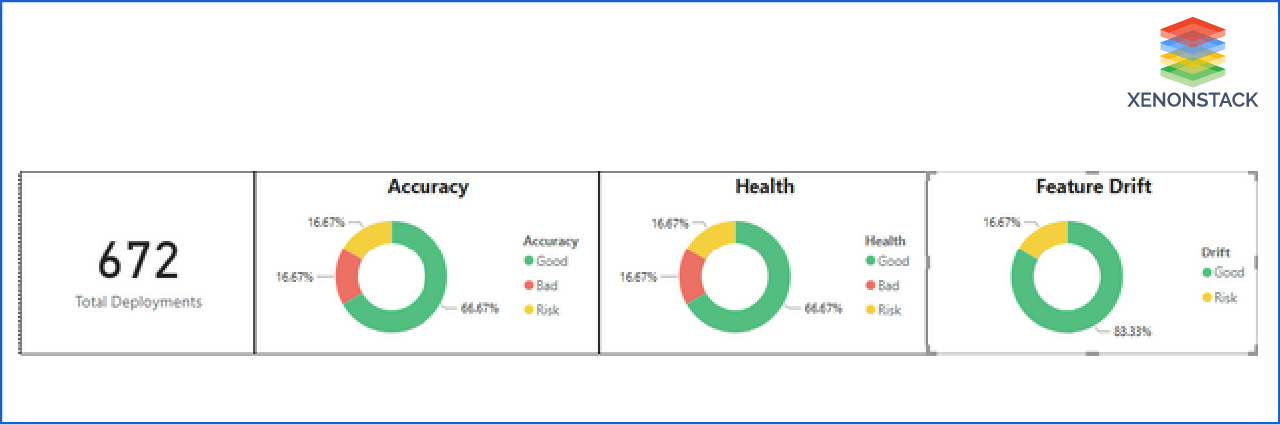

Figure 1.1

Figure 1.1 gives users insights into all their deployments together, in a single place.

- It provides the total number of deployments that they have.

- The second insight analyzes accuracy across all of the user's deployments: approximately 17% of the deployments have bad accuracy, the same percentage are at risk, and approximately 66.67% have good accuracy.

- Similarly to the second insight, the third insight represents the health of the models.

- The fourth insight shows feature drift: approximately 83% of the deployments have accurate data or features, and the remaining approximately 17% are at risk.
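The overview percentages are simple shares of deployments per status. The sketch below reproduces that arithmetic; the six deployment records and the status labels `good`, `at_risk`, and `bad` are hypothetical, chosen only because they yield the quoted figures (1/6 ≈ 16.67%, 4/6 ≈ 66.67%, 5/6 ≈ 83.33%), and are not Akira AI data.

```python
from collections import Counter

# Hypothetical deployment records. The article does not publish its data; these
# six rows are invented only because they reproduce the quoted percentages.
deployments = [
    {"name": "deployment-1", "accuracy": "good", "drift": "good"},
    {"name": "deployment-2", "accuracy": "good", "drift": "good"},
    {"name": "deployment-3", "accuracy": "good", "drift": "good"},
    {"name": "deployment-4", "accuracy": "good", "drift": "good"},
    {"name": "deployment-5", "accuracy": "at_risk", "drift": "good"},
    {"name": "deployment-6", "accuracy": "bad", "drift": "at_risk"},
]


def status_shares(records, field):
    """Return the percentage of deployments in each status for one field."""
    counts = Counter(record[field] for record in records)
    total = len(records)
    return {status: round(100 * n / total, 2) for status, n in counts.items()}


print("Total deployments:", len(deployments))
print("Accuracy:", status_shares(deployments, "accuracy"))
print("Feature drift:", status_shares(deployments, "drift"))
```

With these rows, accuracy comes out as 66.67% good and 16.67% each for bad and at risk, while feature drift is 83.33% good and 16.67% at risk.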

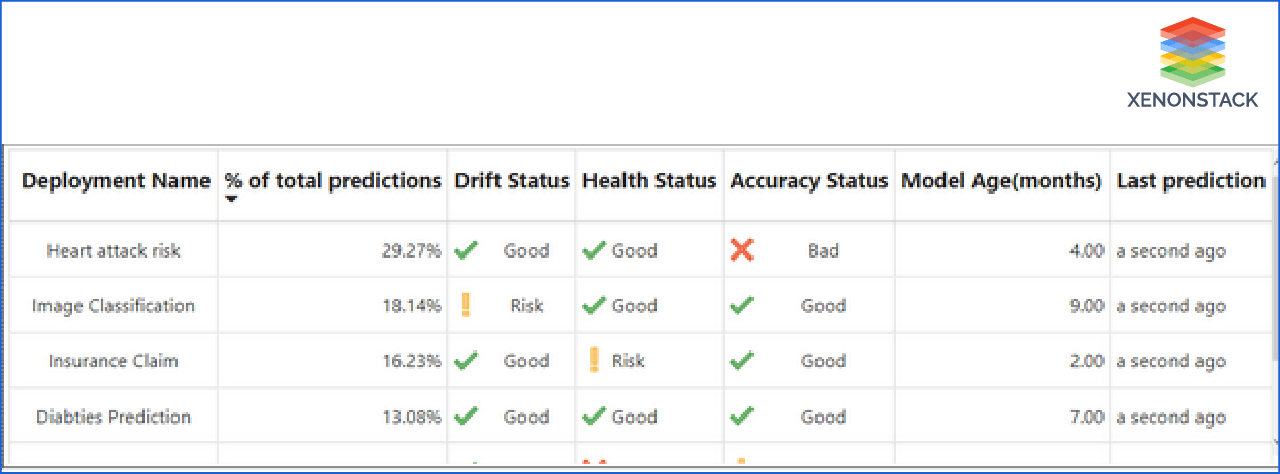

Figure 1.2

Figure 1.1 provides insights into deployments overall. However, overall information is not enough when users want to track down and solve specific problems. Therefore, Akira AI provides a dashboard for analyzing individual deployments, shown in Figure 1.2.

- It provides information about an individual deployment in tabular form.

- It sorts the deployments by usage, so users can see which deployments are used most and which are used least. It also shows each deployment's share of predictions, accuracy, drift, health, age, and the time since the model's last prediction.

- As the dashboard shows, Heart attack risk prediction is one of the deployments customers use most, accounting for approximately 29% of all predictions. Its health and drift are good, but its accuracy has a problem, so the user can now check why the system is not providing accurate results.
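The tabular view can be thought of as sorting deployment rows by usage and deriving a few columns from them. This is a minimal sketch under stated assumptions: the `DeploymentRow` fields, the three deployments, their prediction counts (picked so Heart attack risk prediction lands at 29%), and the helper `needs_attention` are all hypothetical, not Akira AI's schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class DeploymentRow:
    name: str
    predictions: int          # predictions served in the reporting window
    accuracy: str             # "good", "at_risk" or "bad"
    drift: str
    health: str
    deployed_at: datetime
    last_prediction_at: datetime


def build_table(rows, now):
    """Sort deployments by usage and derive the columns shown in the table."""
    total = sum(row.predictions for row in rows)
    table = []
    for row in sorted(rows, key=lambda r: r.predictions, reverse=True):
        table.append({
            "name": row.name,
            "prediction_share_pct": round(100 * row.predictions / total, 1),
            "accuracy": row.accuracy,
            "drift": row.drift,
            "health": row.health,
            "age_days": (now - row.deployed_at).days,
            "since_last_prediction": now - row.last_prediction_at,
        })
    return table


def needs_attention(table):
    """Map each deployment to the status columns that are not 'good'."""
    flagged = {}
    for entry in table:
        problems = [col for col in ("accuracy", "drift", "health") if entry[col] != "good"]
        if problems:
            flagged[entry["name"]] = problems
    return flagged


now = datetime(2024, 1, 31, 12, 0)
rows = [  # hypothetical deployments
    DeploymentRow("Heart attack risk prediction", 290, "bad", "good", "good",
                  datetime(2023, 11, 1), datetime(2024, 1, 31, 11, 55)),
    DeploymentRow("Customer churn model", 410, "good", "good", "good",
                  datetime(2023, 12, 15), datetime(2024, 1, 31, 11, 59)),
    DeploymentRow("Loan default model", 300, "good", "at_risk", "good",
                  datetime(2024, 1, 10), datetime(2024, 1, 30, 9, 0)),
]
table = build_table(rows, now)
for entry in table:
    print(entry["name"], entry["prediction_share_pct"], entry["age_days"])
print(needs_attention(table))
```

Flagging only the columns that are not `good` mirrors the reading in the example above: a heavily used deployment whose health and drift are fine but whose accuracy needs investigation.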

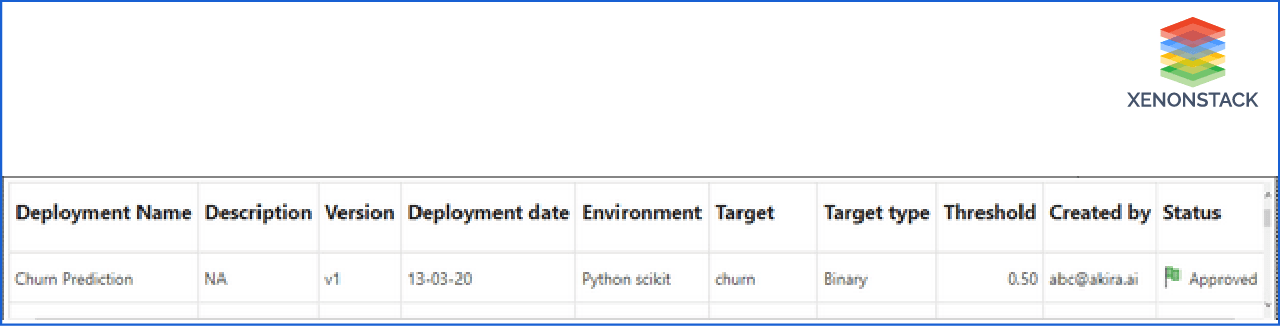

Figure 1.3

So far, we have discussed overviews of deployments that are already running. Some parameters, however, need further explanation, and the user may want to check whether a deployment is correct, what its purpose is, and who made it. For this purpose, Akira AI provides in-depth details of each deployment: users can click any deployment in the tabular view to see its in-depth analysis.

- Figure 1.3 shows detailed information about the deployment: its description, version, deployment date, environment, target column details, threshold, status, and the ID of the person who deployed the model.
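The detail view maps naturally onto a record with the fields listed above. The sketch below is hypothetical: the field names, the allowed status values, the example values, and the assumption that `threshold` is a classification probability cut-off are illustrative only. The validation in `__post_init__` shows one way to check whether a deployment is configured correctly.

```python
from dataclasses import dataclass
from datetime import date

ALLOWED_STATUSES = {"active", "inactive", "failed"}  # hypothetical status values


@dataclass(frozen=True)
class DeploymentDetails:
    description: str
    version: str
    deployment_date: date
    environment: str          # e.g. "staging" or "production"
    target_column: str
    threshold: float          # assumed to be a classification probability cut-off
    status: str
    deployed_by: str          # ID of the person who deployed the model

    def __post_init__(self):
        # Basic sanity checks a reviewer might run before trusting a deployment.
        if not 0.0 <= self.threshold <= 1.0:
            raise ValueError(f"threshold must be in [0, 1], got {self.threshold}")
        if self.status not in ALLOWED_STATUSES:
            raise ValueError(f"unknown status {self.status!r}")


details = DeploymentDetails(
    description="Predicts heart attack risk from patient records",
    version="1.2.0",
    deployment_date=date(2023, 11, 1),
    environment="production",
    target_column="heart_attack_risk",
    threshold=0.5,
    status="active",
    deployed_by="user-042",
)
print(details)
```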

Model platforms are services that solve the above problem, so enterprises can save the resources they would otherwise spend on building and monitoring their models. ModelOps Platform for Operationalizing ML

ML Model Monitoring and Management

Timely monitoring keeps an AI system healthy and productive. Suppose a user never checks their model after deployment. The system might work well for some months and then start giving wrong results. There can be various reasons behind wrong predictions, such as changes in the data or feature drift, and they show up as problems like falling accuracy. If the AI system is used for disease prediction, such failures could put a patient's life at risk, while both the user and the developer remain entirely unaware of it. Monitoring and managing models after deployment is therefore essential to prevent this. For this reason, Akira AI provides interactive dashboards that let users monitor models and track and solve their issues.

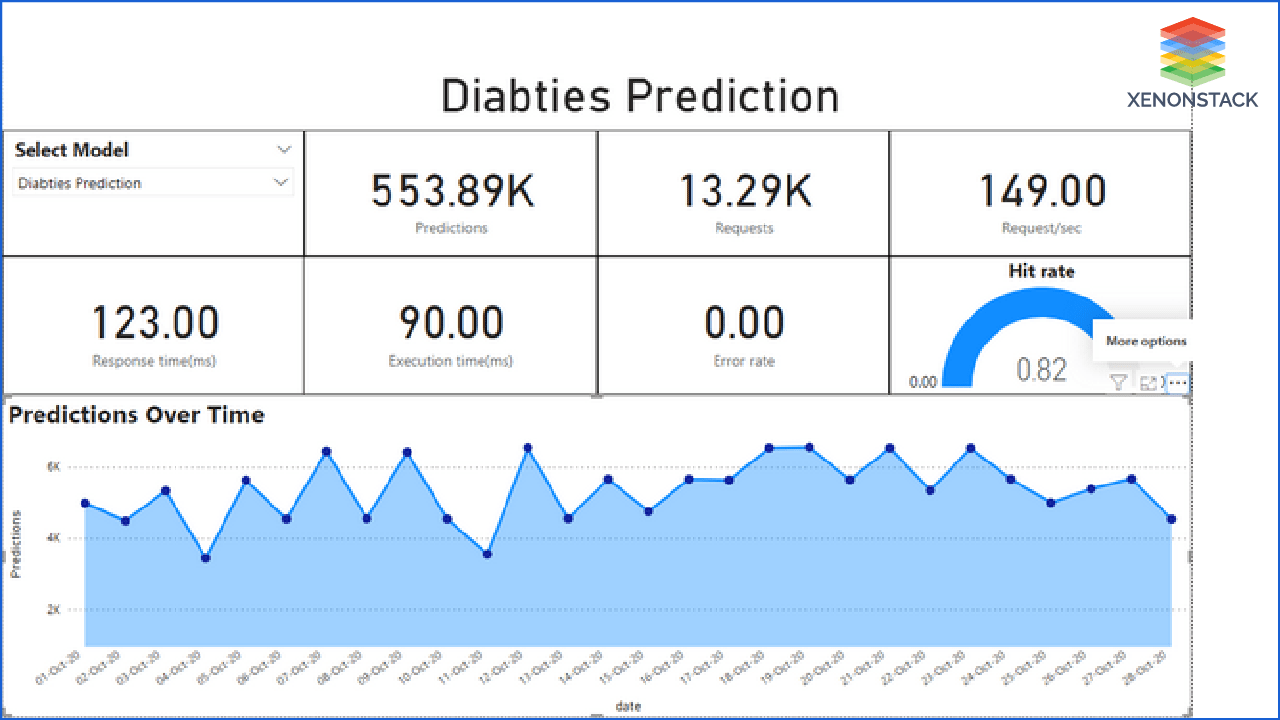

Figure 1.4

The dashboard shown in Figure 1.4 gives users the essential parameters needed to check the model's performance.

Users can select the model they want to monitor from the slicer. The dashboard then shows the number of requests and predictions the model has served, which indicates how heavily the model is used.

Next, the dashboard shows parameters that reveal the model's performance. It provides the number of requests per second, the response time, and the execution time of the model, which show whether the model can keep up with incoming prediction requests.

It also provides the model's hit rate and error rate, which help check whether the model is working correctly.
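The operational numbers above can be derived from a window of request logs. This is a minimal sketch that assumes each log entry records a response time, a model execution time, and a success flag; `RequestRecord`, the nearest-rank p95, and the ten sample requests are hypothetical. The article does not define hit rate precisely, so the sketch computes only the error rate.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    response_ms: float    # end-to-end time seen by the caller
    execution_ms: float   # time spent inside the model itself
    ok: bool              # False if the request ended in an error


def percentile(values, q):
    """Nearest-rank percentile (q in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def operational_metrics(records, window_seconds):
    """Summarize a window of request logs into dashboard-style numbers."""
    n = len(records)
    if n == 0 or window_seconds <= 0:
        raise ValueError("need at least one record and a positive window")
    response = [r.response_ms for r in records]
    return {
        "requests_per_second": n / window_seconds,
        "avg_response_ms": sum(response) / n,
        "p95_response_ms": percentile(response, 95),
        "avg_execution_ms": sum(r.execution_ms for r in records) / n,
        "error_rate": sum(1 for r in records if not r.ok) / n,
    }


# Ten hypothetical requests observed over a 10-second window; requests 3 and 7 fail.
records = [
    RequestRecord(response_ms=10 * i, execution_ms=5 * i, ok=(i not in (3, 7)))
    for i in range(1, 11)
]
print(operational_metrics(records, window_seconds=10))
```

Comparing response time with execution time separates time spent in the model from time spent elsewhere (queuing, network), which helps decide whether a slow model or an overloaded service is the bottleneck.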

The dashboard also shows the model's prediction trend. Monitoring the day-wise trend tells users whether the model is working well: if the model stops working, or serves fewer predictions than usual on some day, users can spot it and check the reason.
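A day-wise trend check can be sketched as counting predictions per day (including days with none) and flagging days well below a typical day. The 50%-of-median rule, the function names, and the sample log are hypothetical choices for illustration, not Akira AI's logic.

```python
from collections import Counter
from datetime import date, timedelta
from statistics import median


def daily_counts(prediction_dates, start, end):
    """Count predictions per day, including days with zero predictions."""
    counts = Counter(prediction_dates)
    result = {}
    day = start
    while day <= end:
        result[day] = counts.get(day, 0)
        day += timedelta(days=1)
    return result


def low_activity_days(counts, ratio=0.5):
    """Days whose count falls below `ratio` times the median daily count."""
    typical = median(counts.values())
    return [day for day, n in counts.items() if n < ratio * typical]


# Hypothetical prediction log: one date per prediction served.
log = ([date(2024, 1, 1)] * 10 + [date(2024, 1, 2)] * 12
       + [date(2024, 1, 4)] * 3 + [date(2024, 1, 5)] * 11)
counts = daily_counts(log, date(2024, 1, 1), date(2024, 1, 5))
print(counts)
print(low_activity_days(counts))
```

Filling in zero-count days matters: a day on which the model served nothing would otherwise be missing from the trend instead of standing out as an outage. Using the median keeps one unusually busy or quiet day from distorting the baseline.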

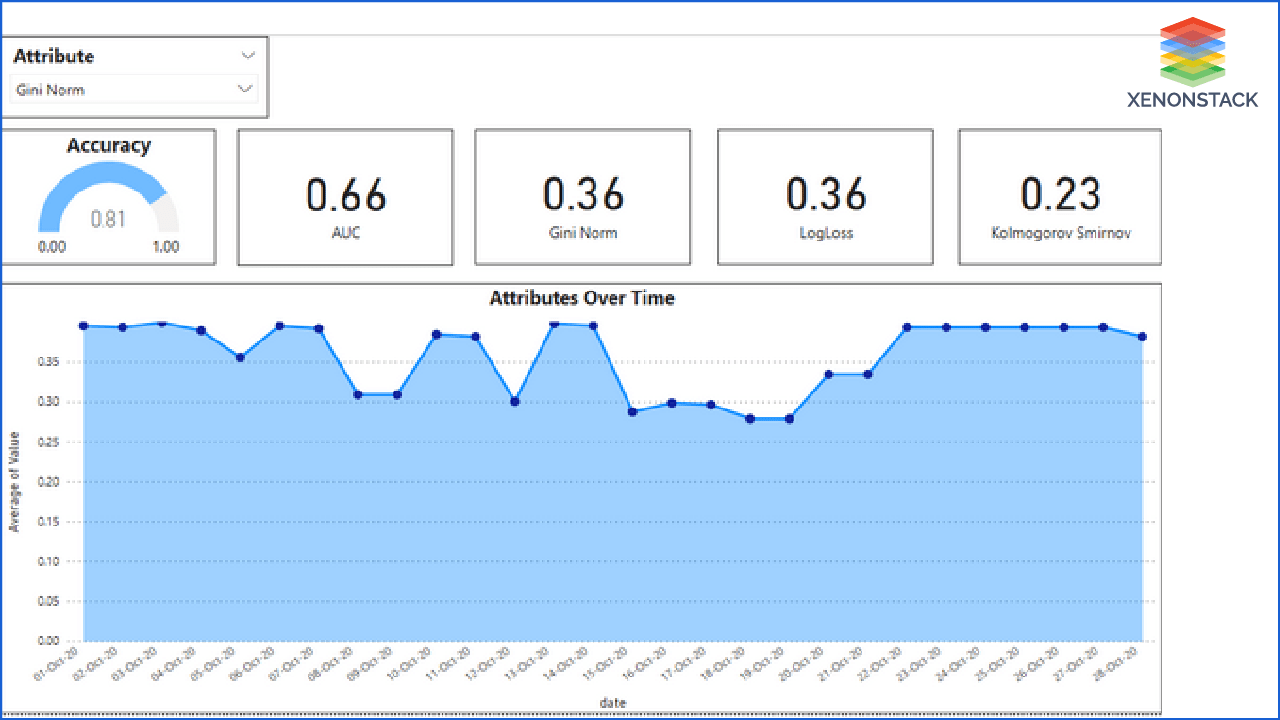

Figure 1.5

The previous dashboard provides the first level of information, or explainability, about models. The second dashboard, illustrated in Figure 1.5, shows more parameters.

The dashboard shows accuracy, AUC, Gini Norm, LogLoss, and Kolmogorov–Smirnov (KS) values, because these metrics indicate the performance and success of the model.
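For a binary classifier, these metrics can be computed from true labels and predicted probabilities. The sketch below uses common textbook definitions, which may differ in detail from Akira AI's: accuracy at an assumed 0.5 threshold, AUC as the probability that a positive outranks a negative (ties count half), Gini Norm as 2·AUC − 1, log loss with clipped probabilities, and KS as the largest gap between true-positive and false-positive rates over all thresholds. The O(n²) AUC loop is chosen for clarity, not speed.

```python
import math


def accuracy(y_true, y_prob, threshold=0.5):
    preds = [1 if p >= threshold else 0 for p in y_prob]
    return sum(p == y for p, y in zip(preds, y_true)) / len(y_true)


def auc(y_true, y_prob):
    """Probability that a random positive scores above a random negative."""
    pos = [p for p, y in zip(y_prob, y_true) if y == 1]
    neg = [p for p, y in zip(y_prob, y_true) if y == 0]
    wins = 0.0
    for pp in pos:
        for pn in neg:
            if pp > pn:
                wins += 1.0
            elif pp == pn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def gini_norm(y_true, y_prob):
    return 2 * auc(y_true, y_prob) - 1


def log_loss(y_true, y_prob, eps=1e-15):
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


def ks_statistic(y_true, y_prob):
    """Maximum |TPR - FPR| over every threshold present in the scores."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    best = 0.0
    for t in sorted(set(y_prob)):
        tpr = sum(1 for p, y in zip(y_prob, y_true) if y == 1 and p >= t) / n_pos
        fpr = sum(1 for p, y in zip(y_prob, y_true) if y == 0 and p >= t) / n_neg
        best = max(best, abs(tpr - fpr))
    return best


y_true = [0, 0, 1, 1]
y_prob = [0.1, 0.4, 0.35, 0.8]
for metric in (accuracy, auc, gini_norm, log_loss, ks_statistic):
    print(metric.__name__, round(metric(y_true, y_prob), 4))
```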

A line chart in the dashboard shows how metrics such as accuracy change over time. Users can select any metric from the slicer and analyze it over days, which lets them track fluctuations in any metric. Hence, from a single dashboard, users can monitor a whole month of data.
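Spotting fluctuations in the selected metric can be sketched as a day-over-day comparison. The 0.05 tolerance, the function name `fluctuations`, and the daily accuracy values are hypothetical; a real dashboard could use a different rule.

```python
from datetime import date


def fluctuations(daily_values, tolerance=0.05):
    """Return (day, change) pairs where a metric moved more than `tolerance`
    compared with the previous day."""
    days = sorted(daily_values)
    flagged = []
    for previous, current in zip(days, days[1:]):
        change = daily_values[current] - daily_values[previous]
        if abs(change) > tolerance:
            flagged.append((current, round(change, 4)))
    return flagged


# Hypothetical daily accuracy values selected from the slicer.
accuracy_by_day = {
    date(2024, 1, 1): 0.91,
    date(2024, 1, 2): 0.90,
    date(2024, 1, 3): 0.82,
    date(2024, 1, 4): 0.83,
}
print(fluctuations(accuracy_by_day))
```

Here only January 3 is flagged, because accuracy fell by 0.08 from the previous day while the other day-to-day changes stay within the tolerance.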

Figure 1.6

In the previous two dashboards, we monitored the model and analyzed its algorithmic performance. Next, Akira AI provides dashboards to monitor the data used by the model.

Figure 1.6 represents the feature drift of the data together with feature importance, showing both feature drift and feature importance on the y-axis. Analyzing feature drift is very important for understanding the health of the data used by the model. The chart also marks the highest acceptable drift with a red line; any feature that crosses this line has drifted beyond the acceptable limit.

Only one feature crosses that line. Users can see its details by hovering over it, as shown in Figure 1.7.
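The article does not say which drift measure Akira AI uses. As one common, swapped-in option, the sketch below scores each feature with the Population Stability Index (PSI) between training and live values and treats a PSI above 0.2 (a widely cited rule of thumb, assumed here) as crossing the "red line". The feature names, importance values, and data are hypothetical.

```python
import math


def psi(expected, actual, bins=10, eps=1e-4):
    """Population Stability Index between training (expected) and live (actual)
    values, using equal-width bins over the training range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0   # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1   # clip out-of-range values
        return [max(c / len(values), eps) for c in counts]  # avoid log(0)

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def features_over_limit(train, live, importance, limit=0.2):
    """Return features whose drift crosses the limit (the 'red line'),
    most important first."""
    rows = [
        {"feature": name, "drift": psi(train[name], live[name]),
         "importance": importance[name]}
        for name in train
    ]
    over = [row for row in rows if row["drift"] > limit]
    return sorted(over, key=lambda row: row["importance"], reverse=True)


# Hypothetical data: "income" has shifted upward in production, "age" has not.
train = {"age": list(range(20, 70)), "income": list(range(100))}
live = {"age": list(range(20, 70)), "income": [v + 50 for v in range(100)]}
importance = {"age": 0.6, "income": 0.4}
for row in features_over_limit(train, live, importance):
    print(row["feature"], round(row["drift"], 2))
```

Sorting the flagged features by importance reflects why the chart pairs the two values: drift in a highly important feature is more likely to hurt predictions than drift in a minor one.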

Figure 1.7

Figure 1.8

This dashboard shows the composition of the training data and the predicted data. From this chart and the previous one in Figure 1.7, the user can estimate whether the model is performing well or needs to be retrained.
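Comparing the composition of training data with data seen at prediction time can be sketched for a categorical column as per-category share changes plus the total variation distance (TVD), half the sum of absolute share differences. TVD is a swapped-in measure chosen for illustration, and the 0.1 "consider retraining" limit, the function names, and the sample data are hypothetical.

```python
from collections import Counter


def composition(values):
    """Share of each category in a list of values."""
    counts = Counter(values)
    return {category: n / len(values) for category, n in counts.items()}


def compare_composition(train_values, predicted_values, limit=0.1):
    """Per-category share change plus total variation distance (TVD)
    between training data and data seen at prediction time."""
    train = composition(train_values)
    predicted = composition(predicted_values)
    categories = sorted(set(train) | set(predicted))
    gaps = {c: predicted.get(c, 0.0) - train.get(c, 0.0) for c in categories}
    tvd = 0.5 * sum(abs(g) for g in gaps.values())
    return gaps, tvd, tvd > limit   # last value: hypothetical "consider retraining" flag


train_values = ["low"] * 70 + ["high"] * 30
predicted_values = ["low"] * 40 + ["high"] * 60
gaps, tvd, retrain = compare_composition(train_values, predicted_values)
print({c: round(g, 2) for c, g in gaps.items()}, round(tvd, 2), retrain)
```

In this example the share of "high" rises from 30% to 60%, giving a TVD of 0.3, so the sketch suggests the model may need retraining.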

Automate the Machine Learning process, from model building to model deployment in production. Machine Learning Framework for Continuous Delivery

What are the Features of the MLOps Roadmap?

- Model versioning: Akira AI is a fully managed MLOps platform that can create a new version of a model as required, notify the model's users about version changes, and maintain the model's version history.

- Model governance: Akira AI provides an effective MLOps platform on which users can establish cross-functional governance and gain the ability to audit and manage access control in real time. Akira AI allows enterprises to streamline machine learning cycles efficiently with automated deployment and administration of machine learning models.

- End-to-End Machine Learning orchestration: Minimize the complexity of the Artificial Intelligence lifecycle, enable Automated Pipeline Orchestration, and build AutoML capabilities.

- Monitoring and Management: Monitoring lets MLOps solutions continuously track and manage the model and its metrics, including infrastructure monitoring.

- ML Model Lifecycle Management: Akira AI offers MLOps-enabled solutions for building, maintaining, and deploying Machine Learning applications at scale.
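The model versioning feature described above (new versions on demand, notifications to the model's users, and a maintained history) can be sketched as a small in-memory registry. Everything here is hypothetical: `ModelRegistry`, its methods, the callback signature, and the artifact paths are illustrative and are not Akira AI's API.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    version: int
    artifact_uri: str
    created_at: datetime
    note: str = ""


# A subscriber receives (model name, previous version or None, new version).
Subscriber = Callable[[str, Optional[ModelVersion], ModelVersion], None]


@dataclass
class ModelRegistry:
    name: str
    history: List[ModelVersion] = field(default_factory=list)
    subscribers: List[Subscriber] = field(default_factory=list)

    def subscribe(self, callback: Subscriber) -> None:
        self.subscribers.append(callback)

    @property
    def current(self) -> Optional[ModelVersion]:
        return self.history[-1] if self.history else None

    def register(self, artifact_uri: str, note: str = "",
                 created_at: Optional[datetime] = None) -> ModelVersion:
        """Append a new version to the history and notify every subscriber."""
        previous = self.current
        new = ModelVersion(len(self.history) + 1, artifact_uri,
                           created_at or datetime.now(), note)
        self.history.append(new)
        for notify in self.subscribers:
            notify(self.name, previous, new)
        return new


events = []
registry = ModelRegistry("heart-attack-risk")
registry.subscribe(lambda name, old, new: events.append(
    (name, old.version if old else None, new.version)))
registry.register("models/heart-attack-risk/v1.pkl", note="initial release")
registry.register("models/heart-attack-risk/v2.pkl", note="retrained on new data")
print([v.version for v in registry.history], events)
```

Keeping every version in an append-only history makes rollback and auditing straightforward, and passing the previous version to subscribers lets each consumer decide how to react to the change.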

Conclusion

MLOps, a close relative of DevOps, combines philosophies and practices designed to enable IT and data science teams to rapidly develop, train, deploy, maintain, and scale machine learning models. Akira AI can support organizations as they begin to adopt MLOps practices across their AI/ML workflows, and will also work with them to plan an MLOps roadmap.

- Explore more about ML Pipeline Deployment and Architecture

- Learn more about Data Preparation Roadmap
