Overview of Edge AI in Automotive

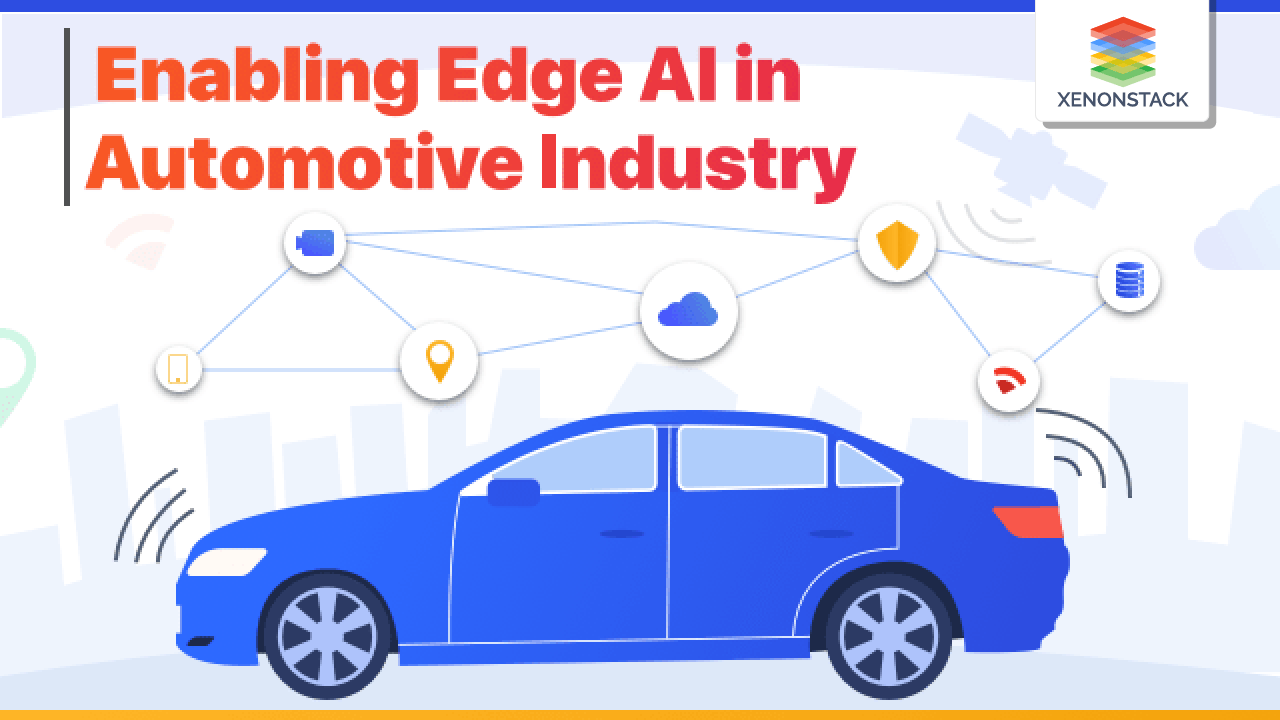

When we think about Edge AI in the automotive industry, most of us picture car functions that can be controlled remotely from smart devices such as phones, watches, computers, and tablets. The ability to lock a car remotely, find parking in busy areas, track a car if it is stolen, or receive maintenance reminders for various systems is already seen as a modern-day advancement. The main goals of self-driving (automated) vehicles are a better user experience and improved safety in line with rules and regulations. Automated cars can connect to smart devices and receive information about the world around them.

What is the Architecture of Edge AI in Automotive?

At a high level, automated vehicles have four major components: Sensors, Perception, Planning, and Control. These components work together to understand the environment around the vehicle, plan routes to the destination, predict vehicle and pedestrian behaviour, and finally move according to instructions, driving smoothly and safely.
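To make the data flow between the four components concrete, here is a minimal Python sketch of one pass through the loop. The types, field names, command values, and the 20 m braking threshold are hypothetical simplifications for illustration, not part of any real vehicle stack.

```python
from dataclasses import dataclass, field


@dataclass
class SensorFrame:
    # Hypothetical: detected objects as (label, distance in metres) pairs.
    objects: list
    # Hypothetical: 1-D position along the road from GPS, in metres.
    gps_position_m: float


@dataclass
class WorldModel:
    position_m: float
    obstacle_distances_m: list = field(default_factory=list)


@dataclass
class Command:
    throttle: float      # 0.0 to 1.0
    brake: float         # 0.0 to 1.0
    steering_deg: float


def perceive(frame: SensorFrame) -> WorldModel:
    # Perception: turn raw sensor output into a structured world model.
    return WorldModel(
        position_m=frame.gps_position_m,
        obstacle_distances_m=[dist for _, dist in frame.objects],
    )


def plan(world: WorldModel, safe_gap_m: float = 20.0) -> str:
    # Planning: pick a behaviour based on the world model.
    if any(d < safe_gap_m for d in world.obstacle_distances_m):
        return "brake"
    return "cruise"


def control(behaviour: str) -> Command:
    # Control: translate the chosen behaviour into actuator commands.
    if behaviour == "brake":
        return Command(throttle=0.0, brake=0.6, steering_deg=0.0)
    return Command(throttle=0.3, brake=0.0, steering_deg=0.0)


def step(frame: SensorFrame) -> Command:
    # One pass of sensors -> perception -> planning -> control.
    return control(plan(perceive(frame)))


print(step(SensorFrame(objects=[("car", 12.0)], gps_position_m=100.0)))
# Command(throttle=0.0, brake=0.6, steering_deg=0.0)
```

In a real vehicle this loop runs continuously at a fixed rate, and each stage is far richer; the sketch only shows how the output of one stage becomes the input of the next.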

Technologies Involved in Automated Vehicles

Self-driving cars contain a significant amount of technology. Their hardware has stayed fairly consistent, but their software is continuously changing and being updated. Some of the leading technologies are:

Sensors

- Cameras

  - Elon Musk has claimed that cameras are the key sensor technology for self-driving cars. Algorithms are needed to fully interpret the images they capture.

  - Together, the cameras capture every angle required to drive a car.

  - New systems are still being designed to process visual data and translate it into actionable 3D data. Tesla, for example, equips its cars with eight external-facing cameras to help them understand the world around them.

- Radar

  - Radar is one of the primary sensors for self-driving cars and complements LiDAR, cameras, and computer vision.

  - Radar has the lowest resolution of these sensors, but unlike light-based LiDAR, it can still see through adverse weather conditions.

  - Because radar uses radio waves, its signals can propagate through rain and snow.

- LiDAR

  - As mentioned earlier, LiDAR is a light-based sensor, typically a spinning unit mounted on the roof of a self-driving car.

  - By shooting out pulses of light and measuring their reflections, it generates a highly detailed 3D map of its surroundings.

  - Because it is light-based, LiDAR has a much higher resolution than radar, but it is limited in low-visibility weather.

- Other Sensors

  - Self-driving cars also use ultrasonic sensors, inertial sensors, GPS, and 802.11p radios to build a full picture of what is happening around them and what the car itself is doing.

  - The more accurate the data these sensors collect, the better the machine learning and self-driving solutions that can be built on it.
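LiDAR measures distance from the round-trip time of each emitted light pulse. The sketch below, assuming a pulsed time-of-flight LiDAR that reports an azimuth and elevation angle for every return, shows how one return becomes a point in the 3D map. The function names and the axis convention (x forward, y left, z up) are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    # The pulse travels out and back, so the target is at half the round-trip distance.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    # Spherical (range, azimuth, elevation) -> Cartesian (x forward, y left, z up).
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        range_m * math.cos(el) * math.cos(az),
        range_m * math.cos(el) * math.sin(az),
        range_m * math.sin(el),
    )


r = tof_range_m(200e-9)  # a 200 ns round trip
print(round(r, 2))  # 29.98 (metres)
print(to_point(r, azimuth_deg=90.0, elevation_deg=0.0))  # a point about 30 m to the left
```

Repeating this for every return as the sensor spins produces the point cloud that perception works with.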

Perception

The perception subsystem mainly contains software components that collect all sensor data, merge it into meaningful, structured information through sensor fusion, and build an understanding of the autonomous vehicle's environment.

Perception is broadly divided into two parts, Localization and Detection:

- Localization: This system uses data from GPS (Global Positioning System) and maps to determine the vehicle's precise location. It forms the basis for functions used later in the pipeline.

- Detection: This system uses data from other sensors, such as radar and LiDAR, to perform functions such as lane detection, traffic light detection and classification, object detection and tracking, and free space detection.
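Localization usually fuses several sources rather than trusting GPS alone. As one common technique (not necessarily what any particular vehicle uses), a Kalman filter blends a motion prediction with each GPS fix, weighting each by its uncertainty. A minimal one-dimensional sketch, assuming the vehicle's velocity is known from odometry; all variances are illustrative:

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    position_m: float
    variance: float  # uncertainty of the position estimate, in m^2


def predict(est: Estimate, velocity_m_s: float, dt_s: float, process_var: float) -> Estimate:
    # Motion update: move by the odometry velocity; uncertainty grows.
    return Estimate(est.position_m + velocity_m_s * dt_s, est.variance + process_var)


def update(est: Estimate, gps_m: float, gps_var: float) -> Estimate:
    # Measurement update: the Kalman gain says how much to trust GPS vs. the prediction.
    k = est.variance / (est.variance + gps_var)
    return Estimate(est.position_m + k * (gps_m - est.position_m), (1 - k) * est.variance)


est = Estimate(position_m=0.0, variance=1.0)
est = predict(est, velocity_m_s=10.0, dt_s=1.0, process_var=1.0)  # 10.0 m, variance 2.0
est = update(est, gps_m=12.0, gps_var=2.0)                       # 11.0 m, variance 1.0
print(est)  # Estimate(position_m=11.0, variance=1.0)
```

Because the prediction and the GPS fix are equally uncertain here, the fused position lands halfway between them, and the fused uncertainty is lower than either source alone.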

Planning

The planning subsystem takes its input from the perception subsystem and uses it for long-range planning (e.g., which roads to take) and short-range planning (e.g., which turns to make). It has four main components.

Route Planning

- The route planner maps out a high-level, rough plan of the path the car will follow between two points on the map, e.g., which highways and roads to take.

- This is similar to the navigation system in most vehicles. The route planner mainly takes its information from the map and GPS.
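Route planners commonly treat the road network as a weighted graph and search for the cheapest path. The source does not name an algorithm; the sketch below uses Dijkstra's algorithm with Python's `heapq`, and the road names and travel-time costs are hypothetical.

```python
import heapq


def shortest_route(graph, start, goal):
    # graph maps each node to a list of (neighbour, cost) pairs, e.g. travel time in minutes.
    queue = [(0.0, start, [start])]  # (cost so far, node, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, edge_cost in graph.get(node, []):
            if neighbour not in visited:
                heapq.heappush(queue, (cost + edge_cost, neighbour, path + [neighbour]))
    return float("inf"), []  # goal unreachable


roads = {
    "home": [("highway_on_ramp", 5), ("city_centre", 2)],
    "highway_on_ramp": [("office", 4)],
    "city_centre": [("office", 10)],
}
print(shortest_route(roads, "home", "office"))  # (9.0, ['home', 'highway_on_ramp', 'office'])
```

The city route starts cheaper but ends up costlier overall, which is exactly the trade-off a route planner resolves before the lower-level planners take over.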

Prediction

- The prediction component forecasts the actions of other cars, obstacles, and pedestrians in the autonomous vehicle's vicinity.

- It uses probabilistic simulations to predict their next positions and possible trajectories.

- These predictions allow the autonomous vehicle to navigate safely around them.

- The prediction component takes input from components such as a lane detector, a traffic light and signal detector/classifier, and an object detector/tracker.
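The source does not say which probabilistic model is used. As one simple possibility, the sketch below runs a Monte Carlo simulation: it samples many random accelerations for a road user moving along a lane and reports the mean and spread of where that road user may be after a short horizon. The constant-velocity-plus-noise model, the function name, and the 1 m/s² acceleration spread are assumptions.

```python
import random
import statistics


def predict_along_lane(x_m, v_m_s, horizon_s, n_samples=1000, accel_std=1.0, seed=0):
    # Each sample assumes an unknown, random but constant acceleration over the horizon.
    rng = random.Random(seed)
    futures = []
    for _ in range(n_samples):
        a = rng.gauss(0.0, accel_std)  # m/s^2
        futures.append(x_m + v_m_s * horizon_s + 0.5 * a * horizon_s ** 2)
    return statistics.mean(futures), statistics.stdev(futures)


mean, spread = predict_along_lane(x_m=0.0, v_m_s=10.0, horizon_s=2.0)
print(mean, spread)  # mean close to 20 m, spread close to 2 m
```

The spread grows with the square of the horizon, which is why predictions further into the future are treated as less certain.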

Behavior Planning

-

The behaviour planner then uses data from the predictor and the fusion sensor to schedule its behaviour, such as staying in the current lane, shifting lanes (right/left), braking at the traffic light, or accelerating as required.

-

The behaviour designer incorporates input from components such as a lane tracker, a traffic light and a signal detector/classifier, a free space detector, and a localized.
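Behaviour planners are often described as rule-based state machines, though production systems vary widely. A minimal hypothetical sketch of priority-ordered rules covering the behaviours listed above (the function name, inputs, behaviour labels, and 30 m gap threshold are all illustrative):

```python
def choose_behaviour(light: str, lead_gap_m: float, left_lane_free: bool,
                     speed_m_s: float, speed_limit_m_s: float,
                     min_gap_m: float = 30.0) -> str:
    # Rules are checked in priority order: safety first, then progress.
    if light == "red":
        return "stop_at_light"
    if lead_gap_m < min_gap_m:
        # Too close to the vehicle ahead: overtake if the left lane is free, else slow down.
        return "change_lane_left" if left_lane_free else "slow_down"
    if speed_m_s < speed_limit_m_s:
        return "accelerate"
    return "keep_lane"


print(choose_behaviour("green", lead_gap_m=12.0, left_lane_free=True,
                       speed_m_s=15.0, speed_limit_m_s=25.0))  # change_lane_left
```

The output is a high-level decision only; turning it into a concrete path and speed profile is the trajectory planner's job.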


Trajectory Planning

- The trajectory planner takes the behaviour just chosen by the behaviour planner and generates multiple trajectories, accounting for passenger comfort (e.g., smooth acceleration/deceleration), road rules (e.g., speed limits), and vehicle dynamics (e.g., body weight, load), before determining the exact trajectory to follow.

- This trajectory is passed to the control subsystem to be executed as a series of commands.

- The trajectory planner gathers information from the lane detector, object detector/tracker, free space detector, and behaviour planner, and feeds information back to the behaviour planner.

- Taking Waymo as an example, the prediction and planning components help answer two questions: What will happen next? And what should I do?
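To illustrate "generate multiple trajectories, then pick one", the sketch below samples candidate target speeds, rejects any that exceed the speed limit or an acceleration limit standing in for comfort and vehicle dynamics, and scores the rest with a cost that trades comfort against progress. It is a hypothetical one-dimensional simplification: real planners also sample lateral paths, and the names, weights, and limits here are assumptions.

```python
from dataclasses import dataclass


@dataclass
class SpeedProfile:
    target_m_s: float
    accel_m_s2: float
    cost: float


def plan_speed(v0_m_s, speed_limit_m_s, horizon_s=4.0, max_accel_m_s2=2.0,
               w_comfort=1.0, w_progress=1.0, step_m_s=1.0):
    best = None
    n_steps = int(speed_limit_m_s / step_m_s)
    for i in range(n_steps + 1):
        target = i * step_m_s                      # candidates never exceed the speed limit
        accel = (target - v0_m_s) / horizon_s      # constant acceleration to reach the target
        if abs(accel) > max_accel_m_s2:            # too harsh for comfort / vehicle dynamics
            continue
        # Penalise harsh acceleration (comfort) and slow target speeds (progress).
        cost = w_comfort * accel ** 2 + w_progress * (speed_limit_m_s - target)
        if best is None or cost < best.cost:
            best = SpeedProfile(target, accel, cost)
    return best  # None if no candidate satisfies the limits


print(plan_speed(20.0, 25.0))  # SpeedProfile(target_m_s=25.0, accel_m_s2=1.25, cost=1.5625)
```

Changing the weights shifts the balance: a larger `w_comfort` favours gentler speed changes at the expense of reaching the limit quickly.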

Control

- The control subsystem is the final stage: it takes instructions from the planner and executes them through acceleration (throttle), deceleration (braking), and steering.

- It ensures that the vehicle follows the trajectory it receives from the planning subsystem.

- The control subsystem usually relies on well-known controllers such as PID controllers and Model Predictive Controllers (MPC).

- The controllers send commands to the throttle, brake, and steering actuators to move the vehicle.

- This completes the flow of information from sensors to actuators, which repeats continuously while the car is driving autonomously.
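Since PID controllers are mentioned above, here is a minimal textbook PID in Python. In a speed controller, the setpoint would be the speed requested by the trajectory planner and the output an acceleration command for the throttle or brake; the gains shown are hypothetical and untuned, and real controllers add output limits and anti-windup.

```python
class PID:
    """Textbook PID: output = kp * error + ki * integral(error) + kd * d(error)/dt."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Example: requested speed 10 m/s, measured speed 8 m/s, 100 ms control period.
pid = PID(kp=1.0, ki=0.5, kd=0.1)
print(pid.update(setpoint=10.0, measurement=8.0, dt=0.1))  # 2.1
```

The proportional term reacts to the current error, the integral term removes steady-state offset, and the derivative term damps rapid changes in the error.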

Levels of Autonomous Vehicles

The levels of vehicle automation are laid out below.

- Level 0: At this level, the driver is always in full control of the vehicle.

- Level 1: Vehicles feature automated controls, such as automatic braking and electronic stability control, but the driver remains in control.

- Level 2: Significant controls, including steering, acceleration, and braking, are automated, though the driver must still be engaged.

- Level 3: About 75% of vehicle controls are automated. The car can monitor the road, steer, accelerate, and brake independently, but the driver must be ready to take over if needed.

- Level 4: The driver relies almost entirely on the car for safety functions and does not need to control the vehicle actively.

- Level 5: The vehicle is fully autonomous, with all functions managed by the car, and humans are merely passengers.


Applications of Edge AI in the Automotive Industry

There are various Edge AI applications in the automotive industry. A few of them are described below.

Sensor Data

Cameras are a key sensor technology for self-driving cars, capturing every angle required to drive a car.

Electric Vehicles

In electric vehicles and driverless cars, Edge AI processes data on the vehicle itself, so actions can be performed within milliseconds.

Smart Traffic Management

In real life, busy four-way intersections are controlled by traffic lights, and vehicles often have to wait. An Edge AI-enabled vehicle can estimate its interactions with other vehicles and pedestrians at the intersection and help avoid a collision.
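One common way to quantify collision risk is time-to-collision (TTC): the current gap divided by the closing speed. The sketch below assumes both road users move along the same line at constant speed, which is a strong simplification of a real intersection, and uses a hypothetical 2-second warning threshold.

```python
def time_to_collision_s(gap_m: float, own_speed_m_s: float, other_speed_m_s: float) -> float:
    # Closing speed along the same line; positive means the gap is shrinking.
    closing_m_s = own_speed_m_s - other_speed_m_s
    if closing_m_s <= 0:
        return float("inf")  # not closing in: no collision on the current course
    return gap_m / closing_m_s


def should_warn(gap_m: float, own_speed_m_s: float, other_speed_m_s: float,
                threshold_s: float = 2.0) -> bool:
    return time_to_collision_s(gap_m, own_speed_m_s, other_speed_m_s) < threshold_s


# A pedestrian standing 20 m ahead while the car travels at 15 m/s.
print(time_to_collision_s(20.0, 15.0, 0.0))  # about 1.33 s
print(should_warn(20.0, 15.0, 0.0))          # True
```

Running a check like this on the vehicle itself avoids waiting on a network round trip before reacting.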

Vehicle Security

Applying Edge AI to automobiles involves a significant amount of technology. On-board hardware such as cameras, radar, LiDAR, and other sensors has stayed fairly consistent and helps provide a high level of security for vehicles.

Predictive Maintenance

Edge AI continuously monitors parameters such as braking, tyre inflation, and acceleration. Analytical models help predict component failures and alert the owner.
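The source does not specify the analytical models. As a simple stand-in, the sketch below flags a tyre-pressure reading whose z-score against a rolling window of recent normal readings exceeds a threshold. The class name, window size, thresholds, and kPa values are hypothetical; a production system would use richer models and more signals.

```python
import statistics
from collections import deque


class TyrePressureMonitor:
    def __init__(self, window: int = 20, z_threshold: float = 3.0,
                 min_std_kpa: float = 1.0, min_history: int = 5):
        self.history = deque(maxlen=window)  # recent readings considered normal
        self.z_threshold = z_threshold
        self.min_std_kpa = min_std_kpa       # floor so a very steady history does not over-alert
        self.min_history = min_history

    def add(self, pressure_kpa: float) -> bool:
        """Return True if this reading looks anomalous compared with recent history."""
        if len(self.history) >= self.min_history:
            mean = statistics.mean(self.history)
            std = max(statistics.pstdev(self.history), self.min_std_kpa)
            if abs(pressure_kpa - mean) / std > self.z_threshold:
                return True  # alert, and keep the anomaly out of the baseline
        self.history.append(pressure_kpa)
        return False


monitor = TyrePressureMonitor()
readings = [230, 231, 229, 230, 231, 229, 230, 200]
print([monitor.add(r) for r in readings])
# [False, False, False, False, False, False, False, True]
```

Because the check runs locally, an alert can be raised immediately, even without connectivity.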

Benefits of Edge AI in Automotive

Listed below are the benefits of Edge AI in the Automotive Industry.

High Processing Speed

Edge AI brings high-performance computing power to the edge, where the sensors are located. There is no need to send data to the cloud, which takes much longer than processing it at the edge.
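A quick calculation shows why this latency matters. The 100 ms cloud round trip and 10 ms on-board processing time below are illustrative assumptions, not measurements; the point is how far a car travels while it waits for a decision.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    # Convert km/h to m/s, then multiply by the waiting time in seconds.
    speed_m_s = speed_kmh / 3.6
    return speed_m_s * latency_ms / 1000.0


# Hypothetical latencies at 100 km/h.
print(round(distance_during_latency_m(100, 100), 2))  # 2.78 m while waiting on the cloud
print(round(distance_during_latency_m(100, 10), 2))   # 0.28 m with on-board processing
```

At highway speed, every 100 ms of delay corresponds to almost three metres of travel before the car can react.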

High Security

Another benefit of using Edge AI in automobiles is privacy, which is a significant concern in every industry. With Edge AI, data does not have to be sent to the cloud for a decision; the decision is made on the edge device itself, which reduces the risk of data being mishandled.

Reduction in Internet Bandwidth

Edge AI processes data locally, so less data is transferred over the internet. Less bandwidth is required, which saves both time and money.
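To put rough numbers on the bandwidth saving, compare streaming raw camera frames with sending only a compact list of detected objects. Eight cameras matches the Tesla example earlier; the resolution, frame rate, object count, and 32-byte object record are hypothetical.

```python
def raw_camera_bytes_per_s(cameras: int, width: int, height: int,
                           bytes_per_pixel: int, fps: int) -> int:
    # Uncompressed video: every pixel of every frame from every camera.
    return cameras * width * height * bytes_per_pixel * fps


def detections_bytes_per_s(objects: int, bytes_per_object: int, rate_hz: int) -> int:
    # Only a small record (class, position, velocity, ...) per detected object.
    return objects * bytes_per_object * rate_hz


raw = raw_camera_bytes_per_s(cameras=8, width=1280, height=960, bytes_per_pixel=3, fps=30)
summary = detections_bytes_per_s(objects=50, bytes_per_object=32, rate_hz=30)
print(f"{raw:,} B/s")      # 884,736,000 B/s
print(f"{summary:,} B/s")  # 48,000 B/s
print(raw / summary)       # 18432.0
```

Real systems compress video, so the actual ratio is smaller, but processing at the edge still means only a tiny fraction of the raw data ever needs to leave the vehicle.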

Less Power

Because data is processed locally, a lot of energy is saved: the vehicle does not need to stay connected to the cloud and continuously transfer data between the edge device and the cloud.

Conclusion

AI is one of the technology sector's fundamental engines and is growing in importance in every scenario. Artificial intelligence in the automotive industry goes beyond the concept of self-driving cars: it can connect us and keep us safe while driving. This creates significant commercial opportunities in many areas. The estimated value of AI in manufacturing and cloud services by 2024 is $10 billion.
