The emergence of powerful AI models like DeepSeek R1 is game-changing for businesses and opens up new frontiers in cybersecurity. As organizations race to adopt and implement advanced AI, they need equally sophisticated security solutions to protect these valuable but vulnerable assets.

Microsoft provides a comprehensive set of tools to secure AI systems at every layer - from development to deployment to data protection. This post will explore how Microsoft's offerings can help safeguard AI initiatives, focusing on capabilities relevant to DeepSeek R1 and the DeepSeek consumer app.

Secure AI Development in Azure

A Trusted Foundation for DeepSeek R1 and Beyond

Any secure AI project must be built on a hardened foundation. Azure provides a robust platform for developing AI applications with strict, built-in safeguards. When organizations leverage DeepSeek R1 in Azure, they inherit the benefits of Microsoft's rigorous pre-release security vetting. Before making DeepSeek R1 available to customers, Microsoft subjected the model to extensive testing and validation, including:

-

Automated evaluations to uncover potentially unsafe behaviors

-

Thorough manual reviews by Microsoft's AI security experts

-

Mitigation of any identified risks before release

This intensive screening process gives DeepSeek R1 on Azure users a significant head start on security. They can be confident that the fundamental building blocks of their AI applications have been battle-tested against a wide range of threats.

Isolated Environments for Customer-Specific Assets

Azure's hosting architecture for AI is designed to isolate each customer's workload and data strictly. When businesses build applications with DeepSeek R1, their unique training data, model parameters, and user inputs are entirely segregated from other customers' assets.

This rigid separation prevents unauthorized access to a company's proprietary AI ingredients and ensures that sensitive data doesn't inadvertently leak or mix across customers' AI systems. With Azure, each organization maintains complete control and confidentiality over the unique components that power their DeepSeek R1 solutions.

Automatic Filtering to Align AI with Enterprise Standards

By default, Azure applies a layer of content filtering to DeepSeek R1 and other hosted models. This filtering capability dynamically scans the AI outputs and removes any content flagged as inappropriate or misaligned by company standards.

The filters automatically detect and block outputs that are:

-

False, misleading, or unsupported by evidence

-

Explicit, offensive, or adult in nature

-

Discriminatory, demeaning, or hateful toward protected groups

-

Encouraging illegal acts or violence

-

Otherwise, it is problematic according to Microsoft's AI principles

This first line of defence helps keep a company's live DeepSeek R1 applications operating within common-sense safety and accuracy boundaries. While the filters can be tuned based on an organization's needs, they provide immediate protection against common risks.

Pre-Deployment Stress Testing to Anticipate Edge Cases

Before releasing a DeepSeek R1-powered application to end-users, Azure equips developers with tools to vet the system's responses across various scenarios thoroughly. This pre-deployment safety testing allows teams to:

-

Simulate real-world user inputs, including adversarial or accidental edge cases

-

Analyze the AI's outputs for any concerning, inaccurate, or inconsistent content

-

Identify weak points in the model's performance or areas prone to misuse

-

Make targeted improvements and corrections before moving to production

Businesses can proactively surface and resolve potential blindspots by stress-testing DeepSeek R1 applications in a controlled environment. Catching issues early before they impact real users is preferable to discovering problems in the wild.

Runtime Monitoring and Response for Live DeepSeek R1 Apps

Always-On Detection of Anomalous Activity

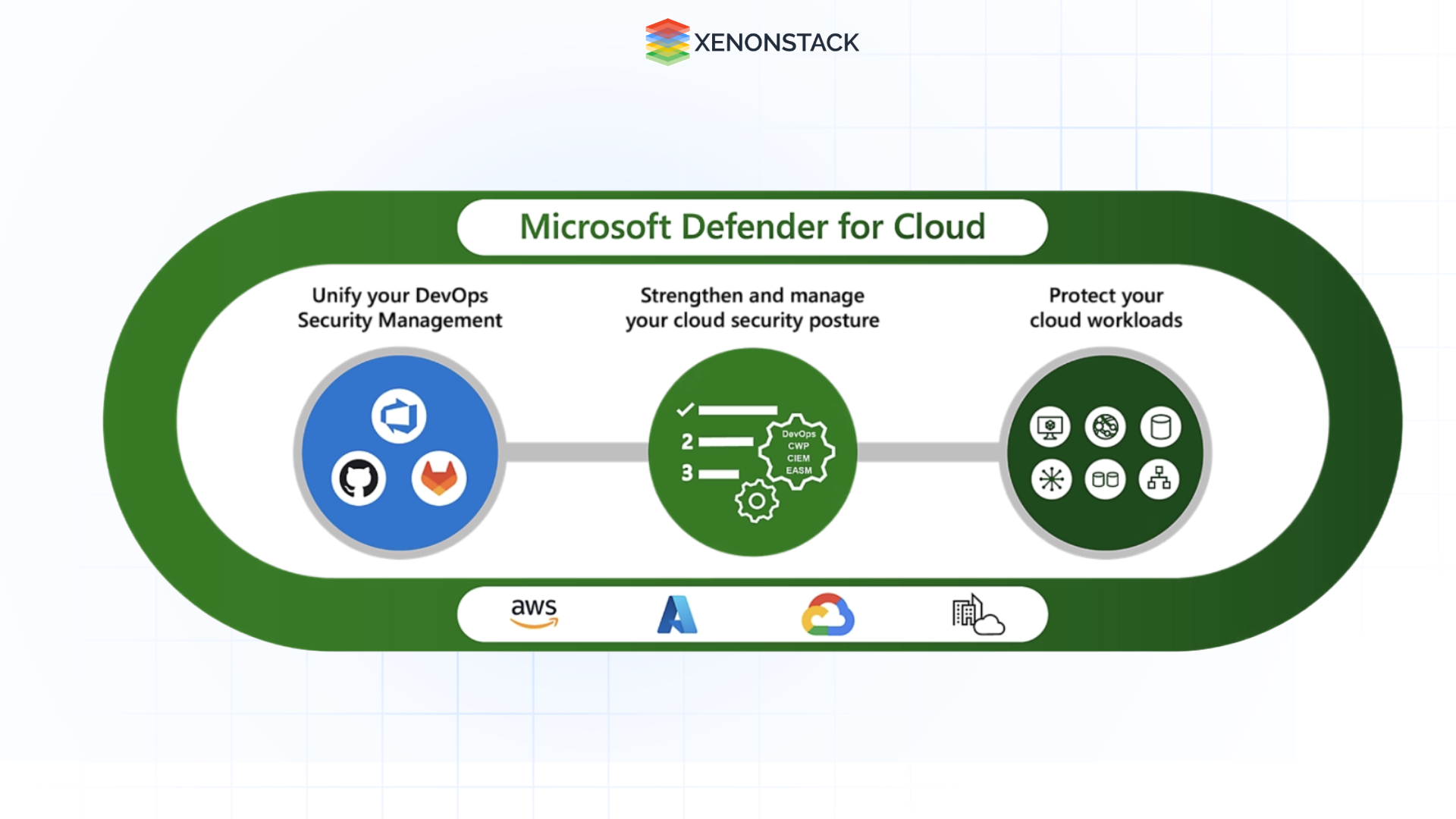

Once a business deploys its DeepSeek R1 system to production, Microsoft Defender for Cloud provides 24/7 monitoring for suspicious behaviour and emerging threats. Defender uses advanced AI to analyze all user interactions with the live DeepSeek application continuously.

Fig 2.0 Microsoft Defender for Cloud

Fig 2.0 Microsoft Defender for Cloud Over time, Defender constructs a dynamic behavioural profile of normal, expected usage. With this evolving baseline in place, it can then detect patterns that deviate from the norm and that may signal an attack, such as:

-

Attempts to bypass or manipulate the DeepSeek model's content filters and safety checks

-

Efforts to reverse engineer or extract the proprietary data used to train the model

-

Abnormally frequent requests or systematic probing of the model's full response surface

Defender functions as an always-on guardian for the live DeepSeek R1 system by intelligently scrutinising user activity in real time.

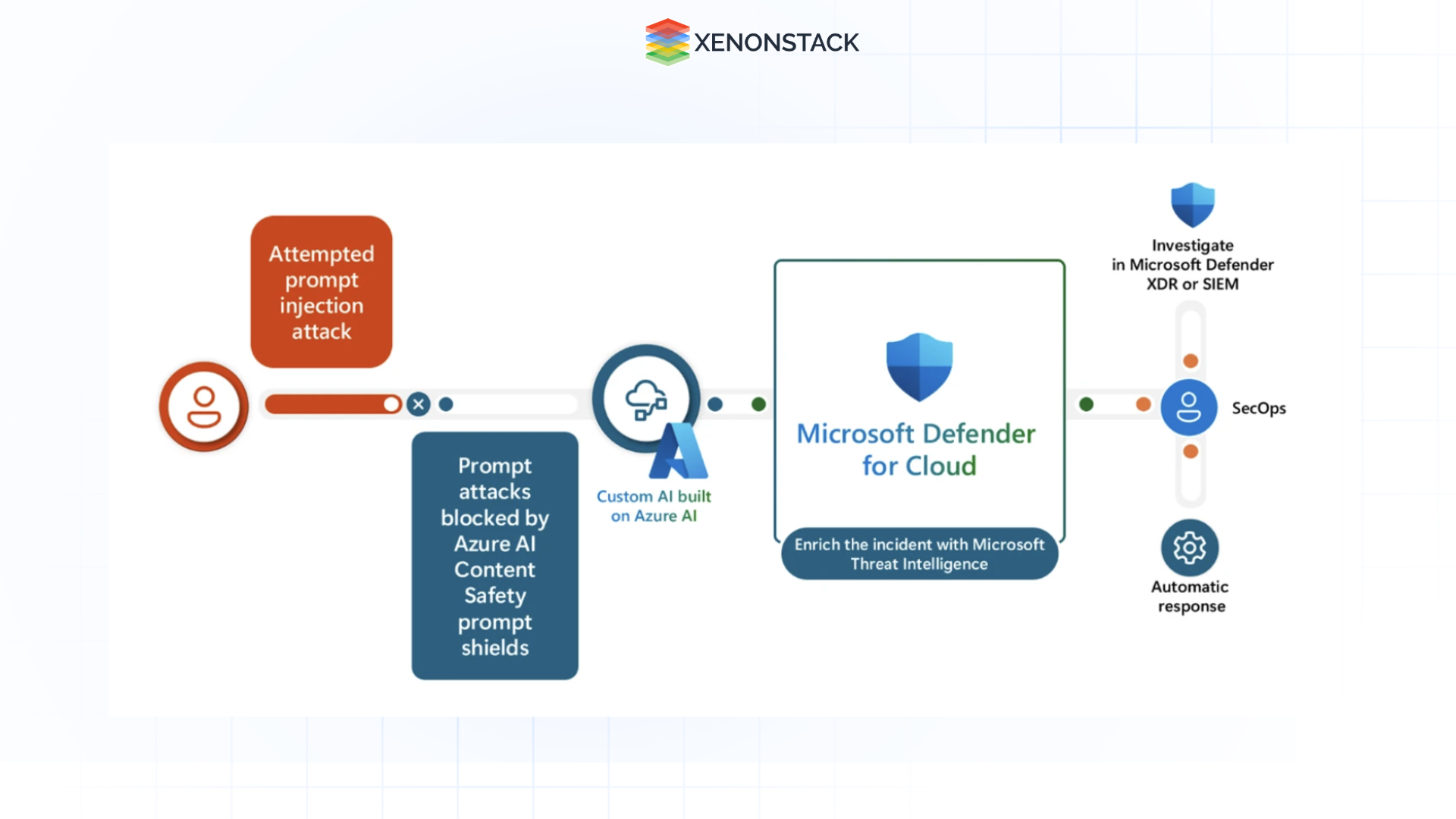

Fig 3.0 Microsoft Defender for injection cyberattacks

Fig 3.0 Microsoft Defender for injection cyberattacks It vigilantly watches for any signs of misuse or attack, no matter how novel the approach is.

Instant Intervention to Disrupt Attacks in Progress

When Defender identifies activity that crosses its risk threshold, the platform can immediately step in and take action to neutralize the threat. Depending on the specific scenario, this could entail:

-

Blocking the suspicious user inputs before they even reach the DeepSeek model for processing

-

Truncating the AI's response to remove any unsafe or inappropriate content

-

Temporarily limiting the speed or volume of requests from a flagged user or IP address

-

Severing the connection between the user and the DeepSeek application entirely

By taking decisive action in real-time, Defender contains potential damage from attacks in progress. Critically, this buys time for the company's security personnel to investigate and respond without the pressure of active exploitation.

Security Alerts Enriched with Actionable Context

When Defender confirms a legitimate threat to a live DeepSeek R1 system, it instantly alerts the company's designated security team. To facilitate a rapid and effective response, the alert includes rich details such as:

-

The specific DeepSeek model and endpoint targeted

-

Characteristics of the attacker (e.g. IP address, device fingerprint, authentication token)

-

The exact text of the malicious prompt or query and the AI's response

-

Timeline and pattern of the anomalous behaviour that triggered the alert

Microsoft Threat Intelligence automatically correlates the incident with any broader campaign activity across Microsoft's global ecosystem where possible. This delivers crucial context about the attacker's objectives, methods, and infrastructure.

Armed with this actionable information, the company's security team can quickly investigate the incident and take necessary steps to prevent recurrence. They can confidently update firewall rules, refine content filters, revoke compromised credentials, and patch vulnerabilities.

Explore more insights on Microsoft Security and how it protects AI systems—check out our latest blogs for in-depth analysis!

Connecting DeepSeek Incidents to the Broader Threat Landscape

For a bigger picture view, Microsoft Defender for Cloud Apps can connect alerts from the DeepSeek application with signals from the full breadth of a company's technology stack - email systems, identity providers, endpoint devices, and more. This comprehensive threat visibility, powered by Microsoft Defender XDR (extended detection and response), is a game changer for identifying sophisticated, multi-stage attacks that could otherwise slip through the cracks. By stitching together disparate indicators from across domains, Defender XDR enables security teams to track the end-to-end lifecycle of an attack that may use DeepSeek R1 as just one of many infiltration points.

For example, Defender XDR could connect the dots between a user who fell for a phishing email, had their credentials stolen, and used their account to abuse the DeepSeek model. Each of those steps may be logged in separate systems, but only when combined, do they reveal the true nature and scope of the incident.

With this unified situational awareness, security personnel can respond more strategically. Rather than treating the DeepSeek exploitation as an isolated event, they can coordinate a comprehensive mitigation plan that addresses root causes and locks down the entire attack chain. This maximizes the efficiency and effectiveness of the company's security resources.

Next Steps with Securing DeepSeek with Microsoft Security

Talk to our experts about securing DeepSeek and other AI systems with Microsoft Security and how industries and different departments can leverage Agentic Workflows and Decision Intelligence to enhance cybersecurity. Utilize AI-driven security solutions to automate threat detection, optimize risk management, and improve system resilience, ensuring robust protection for AI-powered infrastructures.