Introduction to Augmented Data Quality

Today's world is increasingly digital, so data is critical, and the quality of that data matters as much as the data itself. Biometrics is a good example: if a biometric machine records wrong or incomplete information, the stored data cannot be used to identify or authenticate anyone. Language translators likewise depend on data; a translator built on incorrect data will show us wrong information.

What is Data Quality?

If data is wrong or incomplete, we say that it has poor quality. Data quality is measured by how usable the data is and whether it is correct. For example, if we enter the wrong information in a login form, that data is useless, so its quality is poor. Likewise, if the data behind a face recognition feature is stored incorrectly, the feature will either fail to work or produce the wrong output.

Data quality is a measurement of the scope of data for the required purpose. It shows the reliability of a given dataset. Taken from the article Data Quality Management.

Why do we need Data Quality?

With good data quality, we can make better decisions for the growth of our organization; with incorrect data, we cannot plan its development reliably. Good-quality data must meet the following criteria:

- It is fit for use.

- It passes all the validations we apply when building the form.

- It is complete.

- It is easy to understand and follows a proper structure.

How to improve Data Quality?

There are many ways to improve data quality. Some of them are:

- Add proper validation at the source, where the source is wherever we collect customer data (whether through Google Forms, login forms, or other channels).

- Use a proper data structure when storing the data in the database.

- Add appropriate, clearly labeled fields so the customer understands them easily.

- Check that data is correct before saving it anywhere.

- Do not create confusing forms for customers. If the customer does not understand what data we want, the customer will enter the wrong data into the form.
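As a minimal sketch of validation at the source, the function below checks a submitted form record before it is saved. The field names (`name`, `email`, `age`), the email pattern, and the age range are assumptions made for illustration; a real form would define its own rules.

```python
import re

# Hypothetical required fields for a sign-up form.
REQUIRED_FIELDS = ("name", "email", "age")

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_signup(record):
    """Return a list of problems found in the record (empty list means valid)."""
    errors = []
    cleaned = {}

    # Completeness: every required field must be present and non-blank.
    for field in REQUIRED_FIELDS:
        raw = record.get(field)
        cleaned[field] = "" if raw is None else str(raw).strip()
        if not cleaned[field]:
            errors.append(f"{field}: missing")

    # Format check: only run when a value was actually supplied.
    if cleaned["email"] and not EMAIL_RE.match(cleaned["email"]):
        errors.append("email: invalid format")

    # Range check: age must be a whole number inside an assumed plausible range.
    if cleaned["age"]:
        if not cleaned["age"].isdecimal():
            errors.append("age: not a whole number")
        elif not 0 < int(cleaned["age"]) < 130:
            errors.append("age: out of range")

    return errors


print(validate_signup({"name": "Ada", "email": "ada@example.com", "age": "36"}))
print(validate_signup({"name": "", "email": "not-an-email", "age": "abc"}))
```

Returning a list of messages rather than a single true or false value lets the form tell the customer exactly which field to correct.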

What are Convolutional Neural Networks?

First, convolution is an operation applied to two functions, A and B, that produces a third function, C, describing how the shape of one is modified by the other. Second, the term neural network comes from the brain, which has many connected neurons that help us learn things. For example, when we see a person's face a second time, our brain recognizes it. AI applies a similar concept to data.

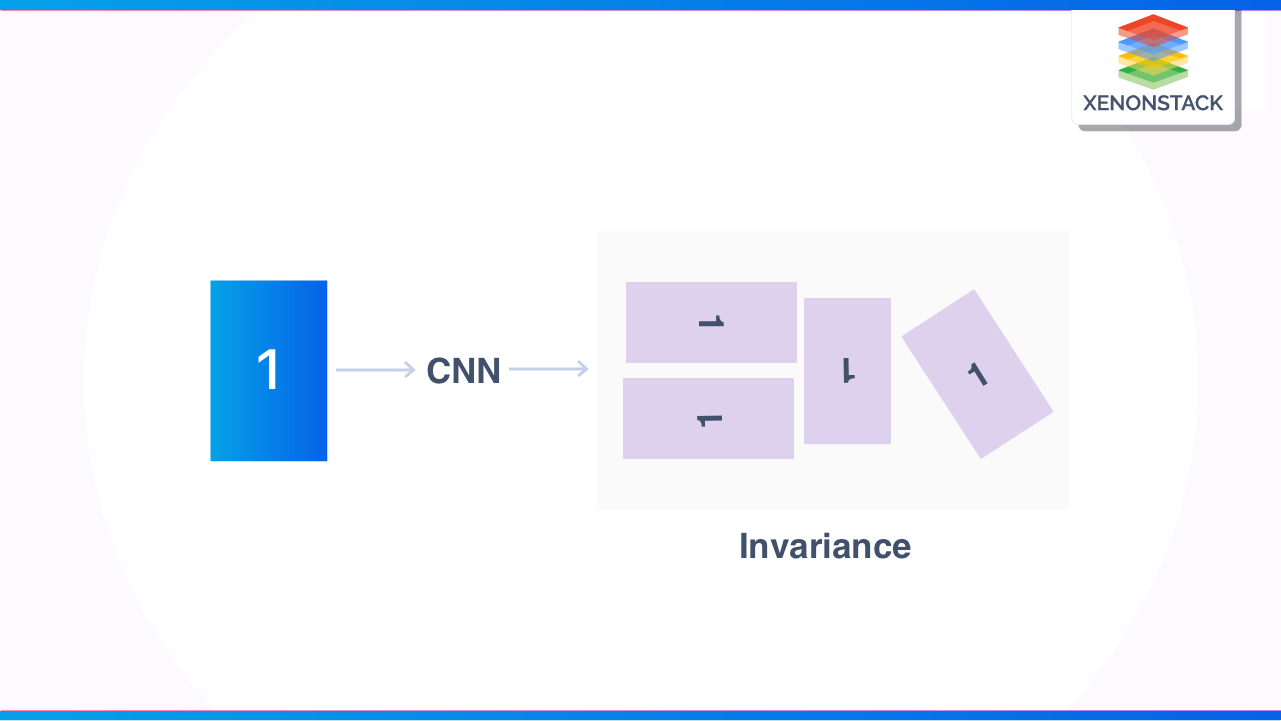

A CNN is a technique used for image recognition. In simple words, recognizing an image means looking at the objects, animals, or anything else in the image and then detecting their edges, for example:

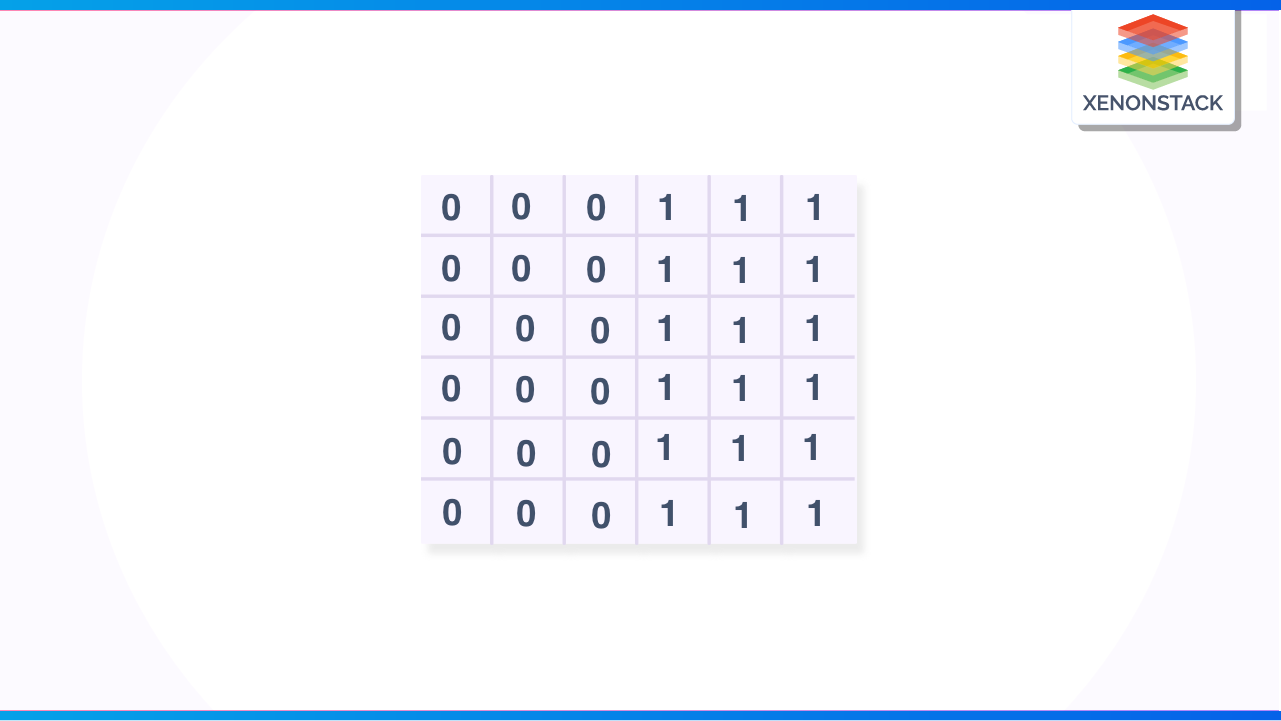

Images are either grayscale (each pixel has an intensity in the range 0 to 255, from black to white) or colour images (a combination of red, green, and blue channels). We can also simplify the image to 0s and 1s.

Here, 0s represent white and 1s represent black, and the vertical edge lies where the 0s meet the 1s. This image is 6x6 pixels, and when we apply a 3x3 filter to it, we get a 4x4 output.

On the 4x4 output, we can apply min-max scaling, which maps the minimum value to 0 and the maximum value to 255, the largest grayscale value. In the final result, the two middle columns form the white side, and the first and last columns are the dark sides.
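As a rough illustration of the steps above, the following sketch slides a 3x3 vertical-edge filter over a 6x6 image and then applies min-max scaling to the 4x4 result. The pixel values, the filter weights, and the function names are assumptions chosen for illustration, since the original figure is not reproduced here. The sliding-window sum is the operation CNN libraries commonly call convolution.

```python
def convolve2d_valid(image, kernel):
    """Slide the kernel over the image without padding and sum the products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1   # 6 - 3 + 1 = 4
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kernel_h)
                for dj in range(kernel_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def min_max_scale(matrix, new_min=0, new_max=255):
    """Map the smallest value to new_min and the largest to new_max."""
    flat = [value for row in matrix for value in row]
    low, high = min(flat), max(flat)
    if high == low:  # constant input: avoid division by zero
        return [[new_min for _ in row] for row in matrix]
    scale = (new_max - new_min) / (high - low)
    return [[round((value - low) * scale + new_min) for value in row] for row in matrix]


# Assumed 6x6 image: left half 1s, right half 0s, so the edge is in the middle.
image = [[1, 1, 1, 0, 0, 0] for _ in range(6)]

# Assumed 3x3 vertical-edge filter.
kernel = [[1, 0, -1] for _ in range(3)]

feature_map = convolve2d_valid(image, kernel)
scaled = min_max_scale(feature_map)

print(feature_map[0])  # [0, 3, 3, 0]
print(scaled[0])       # [0, 255, 255, 0]
```

Every row of the 4x4 output is the same here: the two middle columns respond strongly to the edge and scale to 255, while the first and last columns scale to 0.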

What is Augmented Data Quality?

Augmented Data Quality (ADQ) automates the data quality process by applying ADQ techniques or functions to data. Its main aim is to reduce the manual tasks related to data quality, saving time and resources.

Example: Suppose we have a single image of a rectangle and pass it to a CNN. Different types of invariance are applied to that image, giving us many images in various styles. For example, in the diagram, we apply different rotations to the image, and we zoom in and out as well.

Why do we need Augmented Data Quality?

With Augmented Data Quality, we analyze information daily to identify patterns in data quality. As the volume of data increases every day, organizations evolve into data-driven organizations; to speed up this process, they augment their data to improve its quality.

Suppose we have very few images and want many. In that case, we apply different invariances or transformations to the images and get many images from a few pictures. For example, if the input is X(A), the output is Y(A); if the input is X(B), the output is Y(B).

Automate the data quality task: Profiling, data matching, poor-quality warnings, and merging are data quality tasks that Augmented Data Quality functions automate to improve data quality.

How does Augmented Data Quality work?

We create new augmented data by applying reasonable filters to data or images. We can augment text, images, audio, and other data. For image augmentation, we can use TensorFlow or Keras; Keras provides various layers that apply augmentation to data.
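To make the idea concrete without depending on any library, here is a small pure-Python sketch that produces several variants of one image using rotations and horizontal flips. The tiny 2x3 image and the function names are assumptions for illustration. In practice, Keras preprocessing layers such as `RandomFlip`, `RandomRotation`, and `RandomZoom` apply comparable transformations at random during training.

```python
def rotate90(image):
    """Rotate a 2D list-of-lists image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def augment(image):
    """Return the distinct variants reachable by 90-degree rotations and flips."""
    variants = []
    current = [list(row) for row in image]  # work on a copy
    for _ in range(4):
        for candidate in (current, flip_horizontal(current)):
            if candidate not in variants:   # skip duplicates of symmetric images
                variants.append(candidate)
        current = rotate90(current)
    return variants


# Assumed toy image: 2 rows by 3 columns with distinct pixel values.
rectangle = [
    [1, 2, 3],
    [4, 5, 6],
]

augmented = augment(rectangle)
print(len(augmented))  # 8
for variant in augmented:
    print(variant)
```

One input image yields eight training examples here; a symmetric image would yield fewer because identical variants are dropped.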


What are the common Challenges of Augmented Data Quality?

The ever-increasing amount of data has created various challenges regarding data quality. Accurate information has become more essential as businesses use data-driven decision-making. Here are some of the main challenges related to data quality:

- Data Duplication: Data duplication can occur when multiple sources store and provide similar data, but each source might contain different interpretations of the same facts. This can make it difficult to identify true duplicates.

- Wrong Data Representations: Inaccuracies can also occur due to errors in the data representation. For example, if a customer's address is entered incorrectly or incompletely, this could prevent them from receiving goods or services or finding their way back to the company.

- Poor Data Formatting: This can lead to a lack of clarity, especially with larger datasets. Data that is not formatted uniformly or consistently can create issues when creating reports or analyzing the data.

- Fragmented Data Sources: Data silos can form when data is trapped in disparate systems. This can make gaining comprehensive insights across multiple departments or business entities challenging.

- Outdated Information: Stale and obsolete information can linger in databases without proper governance, creating inconsistencies and miscommunications.

- Establishing a process for data cleaning.

- Creating criteria for determining accurate data.

- Creating methods for validating data accuracy.

- Automating data transformation and cleansing processes.

- Fighting contamination from external sources.

- Managing security and privacy requirements.

- Establishing data governance infrastructure.

What are the features of Augmented Data Quality?

The features of Augmented Data Quality are listed below:

Data Integration

Traditional tools generally replace and move data to achieve data quality, whereas Augmented Data Quality combines data from all sources into a well-structured form, and organizations use it to make data ready for real-time analytics. Reducing complexity is critical for any organization to achieve its business objectives.

Unstructured Data

Most of the time, data is not stored in a perfect framework or a particular structure. Augmented data quality helps a great deal here as well, and ADQ can also fix some missing or corrupted data.

Accuracy

We can check data accuracy by the percentage of records that fall between the lower and upper limits we choose. For example, suppose we measure the percentage of completed products out of the total produced, with a lower limit of 93 and an upper limit of 97. A rate that falls outside this range on the first day will significantly impact data accuracy.
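The limit-based check can be sketched in a few lines. The limits 93 and 97 come from the example above; the daily rates and the function name are made-up values for illustration only.

```python
def share_within_limits(values, lower, upper):
    """Return the percentage of values that fall inside [lower, upper]."""
    if not values:
        raise ValueError("values must not be empty")
    inside = sum(1 for value in values if lower <= value <= upper)
    return 100.0 * inside / len(values)


# Hypothetical daily completion rates (percent of products completed).
daily_rates = [94.0, 95.5, 92.0, 96.0, 98.1]

accuracy = share_within_limits(daily_rates, lower=93, upper=97)
print(accuracy)  # 60.0
```

Three of the five assumed rates lie between 93 and 97, so the accuracy score is 60 percent; the two outliers (92.0 and 98.1) are the records worth investigating.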

Data Quality Rules and Patterns

Augmented Data Quality will suggest a data quality rule for improving the quality of data. It suggests requirements based on datasets and a pattern for cleansing and merging the data.


What are the standard methods of Augmented Data Quality Management?

According to Gartner, we will apply Augmented Data Quality in the following areas:

Discovery

This feature is developed by using reference data in distributed environments with many data assets and active metadata. It enables us to discover where data resides, for instance, sensitive data for privacy purposes.

Suggestion

Augmented Data Quality (ADQ) will suggest a data quality rule for improving data quality. ADQ suggests requirements based on datasets and a pattern for cleansing and merging the data.

What are the standard Data Quality checks?

The standard data quality checks are described below:

Uniqueness

If many items are repeated in our data, that is, if there are numerous duplicate values, we consider the data to be of poor quality. ADQ uses SQL rules to filter duplicate items. Once a filter is defined, it is applied to our data automatically.
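One way such an SQL rule might look is a `GROUP BY` query that reports every value occurring more than once. The sketch below runs it with Python's built-in `sqlite3` module against an in-memory database; the `customers` table, the `email` column, and the sample rows are hypothetical.

```python
import sqlite3

# Uniqueness rule: list every email that appears more than once.
DUPLICATE_RULE = """
    SELECT email, COUNT(*) AS occurrences
    FROM customers
    GROUP BY email
    HAVING COUNT(*) > 1
    ORDER BY occurrences DESC, email
"""


def build_demo_db():
    """Create an in-memory database with a few deliberately repeated rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany(
        "INSERT INTO customers (email) VALUES (?)",
        [
            ("a@example.com",),
            ("b@example.com",),
            ("a@example.com",),
            ("c@example.com",),
            ("b@example.com",),
            ("a@example.com",),
        ],
    )
    return conn


def find_duplicates(conn):
    """Run the uniqueness rule and return (email, occurrences) pairs."""
    return conn.execute(DUPLICATE_RULE).fetchall()


conn = build_demo_db()
duplicates = find_duplicates(conn)
print(duplicates)  # [('a@example.com', 3), ('b@example.com', 2)]
```

Because the rule is plain SQL, it can be scheduled to run after every load, which is the automatic part the text describes.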

Completeness

We consider our data complete when nothing is missing. We can set a search rule on null values to identify missing values in crucial data.
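A null-value rule can be sketched as a per-field completeness score. The records, the field names, and the choice to treat empty strings as missing are assumptions for illustration.

```python
def completeness(records, required_fields):
    """Return, per field, the percentage of records with a non-missing value."""
    total = len(records)
    report = {}
    for field in required_fields:
        # None and the empty string both count as missing in this sketch.
        filled = sum(1 for record in records if record.get(field) not in (None, ""))
        report[field] = 100.0 * filled / total if total else 0.0
    return report


# Hypothetical customer records; one email is null.
records = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Bob", "email": None},
    {"name": "Cy", "email": "cy@example.com"},
    {"name": "Di", "email": "di@example.com"},
]

report = completeness(records, ["name", "email"])
print(report)  # {'name': 100.0, 'email': 75.0}
```

A field scoring below an agreed threshold can then trigger a poor-quality warning.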


What are the Best Practices for Augmented Data Quality?

Best practices of Augmented Data Quality are given below:

By Automating the Data Quality Task

Data quality tasks such as profiling, merging, cleansing, and monitoring are automated by linking entities automatically.

Start Small and Align KPIs (Key Performance Indicators)

KPIs are measurable values used to evaluate how successful a person or organization is at reaching a target; here, we use them to check data quality by performance. In the beginning, our data does not have to be used directly in data science and artificial intelligence.

First, we choose a use case that aligns with our KPIs; once we succeed, we move on to larger projects.

Focus on Data Quality and Reduce Duplicates

Removing repeated or duplicate values from data increases data quality. This is an essential best practice of augmented DQ.

Create a Data Recovery or Backup Policy

If we lose our data for any reason, having such a policy in place lets us handle the problem easily.

By Applying Rules to Specific Data Types

By Data Quality profiling, we can scan any data in real time.

Using the Governing Data Approach

In this approach, we define conditions so that we get only the data we need, on time, based on those conditions.

What is the flow of Augmented Data Quality?

To add augmentation to a data set, we follow these steps:

- First, define the transform, then copy that statement and use it as the transform_train statement. The next step applies an alteration or invariance to the data set.

- The alteration or invariance depends on what we need, such as rotation, scaling, or zooming. Next, change the data set according to the chosen transformation.
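The flow above can be sketched without any framework: start from a base transform, copy its steps into a training transform, and add the augmentation step only there. The helper names (`compose`, `to_unit_range`, `random_horizontal_flip`) and the toy image are hypothetical; image libraries offer their own equivalents, which this sketch does not try to reproduce.

```python
import random


def compose(steps):
    """Chain transformation steps into one callable, applied left to right."""
    def run(image):
        for step in steps:
            image = step(image)
        return image
    return run


def to_unit_range(image):
    """Scale 0-255 pixel values to the 0-1 range."""
    return [[pixel / 255 for pixel in row] for row in image]


def random_horizontal_flip(probability, rng):
    """Return a step that mirrors the image with the given probability."""
    def step(image):
        if rng.random() < probability:
            return [row[::-1] for row in image]
        return image
    return step


rng = random.Random(0)  # seeded so runs are repeatable

# Base transform: the steps every image goes through.
base_steps = [to_unit_range]
transform = compose(base_steps)

# Training transform: a copy of the base steps plus the augmentation step.
transform_train = compose([random_horizontal_flip(0.5, rng)] + base_steps)

image = [[0, 255], [255, 0]]
print(transform(image))        # always the same scaled image
print(transform_train(image))  # scaled, and mirrored about half the time
```

Keeping the augmentation out of the base transform means evaluation data is never altered, while training data gets a different variant on each pass.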

Data is an organization's pillar, so we must follow good, simple rules when storing it. Profiling, data matching, poor-quality warnings, and merging are the data quality tasks that ADQ functions automate to improve data quality. The quantity of data matters less than how correctly we use it.

