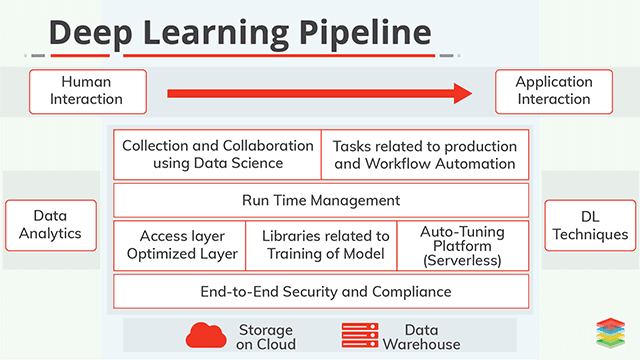

Deep Learning Pipeline

Deep learning is a subset of machine learning: a set of techniques for training deep architectures such as deep neural networks and deep graphical models. The structure of the human brain inspires these architectures. The main tasks deep learning is used for include object recognition in images and video and speech-related tasks such as speech understanding. If machine learning, itself inspired by the functioning of the human brain, already exists in the arsenal of artificial intelligence, why is deep learning gaining so much popularity? The reason is simple: deep learning handles features according to the needs of the problem at hand. In 1958, the psychologist Frank Rosenblatt built the Perceptron, an artificial machine intended to memorize, think, and respond like the human brain. In 2016, Lee Sedol, one of the most influential Go players, was defeated by AlphaGo, a computer program developed by Google DeepMind. That result illustrates the power of deep learning within artificial intelligence. A pipeline is an arrangement of the sub-parts of a technique, and a pipeline that deals with deep learning models and techniques is a Deep Learning pipeline.

How Deep Learning Pipeline Works?

Neural networks are the elementary building blocks of deep learning. A neural network is an artificial model of the human brain's network of neurons, implemented in hardware and software. Many types of neural network are used in deep learning, including the multilayer perceptron (the simplest), the convolutional neural network (used for digital image processing), and the recurrent neural network (used for sequential data). A multilayer perceptron is a network built from hidden layers of neurons in which each layer takes the output of the previous layer as its input. Convolutional neural networks, popularized by Yann LeCun, reshaped digital image processing by abstracting features in a way inspired by the functioning of the human brain. They are used successfully for image classification and segmentation, and for other tasks such as face recognition and object recognition. The simplest recurrent neural networks appeared in the 1980s; they are used primarily for sequential data such as time series.

Why Deep Learning Pipeline Matters?

First, deep learning gives an edge to feature abstraction: in a way inspired by the functioning of the human brain, it abstracts features directly from the content (images, music, video). Second, it handles mixed data comfortably. Third, it allows models to operate in real time. Two things matter most in the modern era of technology: accuracy and efficiency, whether efficiency is measured in time or in cost. Deep learning can perform well on both. Its power is evident from the fact that it surpasses human performance on some tasks. The enormous computational power of the human brain comes not merely from its considerable number of neurons but from how precisely they are arranged, and the same goes for deep learning. What matters is not how many layers or artificial neurons a deep learning model uses but whether they are placed correctly.

Benefits of Deep Learning Pipeline

- Aerospace and Defense - Detecting objects in images or video from sources such as satellites. This supports tasks such as identifying safe and unsafe zones for military troops.

- Automated Driving - Deep learning is increasingly used in driving vehicles, where it gives an automation edge. Examples include the automation of traffic signals and the detection of objects to avoid accidents.

- Industrial Automation - Wherever industrial work needs automating, deep learning can be applied.

- Medical Research - Deep learning has emerged as a boon for medical research, whether for detecting cancer in medical images or for automating prescriptions for diseases (much like a robot doctor).

- Electronics - Current examples of deep learning in electronic devices include Amazon Alexa, Apple's Siri, and Google Home. These devices not only process human speech but also reply in speech.

How to Adopt a Deep Learning Pipeline?

The main challenge in bringing any technology to life is bridging the gap between theory and practice. In deep learning, the architecture can be of different types, but the pipeline stays the same. Some deep learning architectures are:

- Perceptrons

- Recurrent Neural Networks

- Convolutional Neural Networks

- Long Short-Term Memory (LSTM) Networks

- Boltzmann Machine Networks

- Hopfield Networks

- Deep Auto-encoders

- Deep Belief Network
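The idea that the architecture can change while the pipeline stays the same can be sketched in a few lines of plain Python. This is a minimal illustration, not a prescribed implementation: the `Model` interface, the `run_pipeline` function, and the `MajorityClass` baseline are hypothetical names introduced here, and the perceptron stands in for any architecture from the list above. For brevity the sketch evaluates on the training data; a real pipeline would hold out separate data for evaluation.

```python
from collections import Counter
from typing import List, Protocol, Sequence

Sample = Sequence[float]


class Model(Protocol):
    """Hypothetical interface: anything with fit/predict fits the pipeline."""

    def fit(self, xs: Sequence[Sample], ys: Sequence[int]) -> None: ...

    def predict(self, x: Sample) -> int: ...


class Perceptron:
    """Single-layer perceptron trained with the classic perceptron update rule."""

    def __init__(self, n_inputs: int, epochs: int = 20, lr: float = 1.0) -> None:
        self.weights: List[float] = [0.0] * n_inputs
        self.bias = 0.0
        self.epochs = epochs
        self.lr = lr

    def predict(self, x: Sample) -> int:
        activation = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return 1 if activation > 0 else 0

    def fit(self, xs: Sequence[Sample], ys: Sequence[int]) -> None:
        for _ in range(self.epochs):
            for x, y in zip(xs, ys):
                error = y - self.predict(x)  # -1, 0, or +1
                self.weights = [
                    w + self.lr * error * xi for w, xi in zip(self.weights, x)
                ]
                self.bias += self.lr * error


class MajorityClass:
    """Trivial baseline: always predicts the most frequent training label."""

    def __init__(self) -> None:
        self.label = 0

    def fit(self, xs: Sequence[Sample], ys: Sequence[int]) -> None:
        self.label = Counter(ys).most_common(1)[0][0]

    def predict(self, x: Sample) -> int:
        return self.label


def run_pipeline(model: Model, xs: Sequence[Sample], ys: Sequence[int]) -> float:
    """Validate -> train -> evaluate. The steps never change; only `model` does."""
    if not xs or len(xs) != len(ys):
        raise ValueError("inputs and labels must be non-empty and the same length")
    model.fit(xs, ys)
    correct = sum(model.predict(x) == y for x, y in zip(xs, ys))
    return correct / len(xs)  # accuracy on the data that was passed in


# Logical AND is linearly separable, so a perceptron can learn it.
XS = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
YS = [0, 0, 0, 1]

perceptron_accuracy = run_pipeline(Perceptron(n_inputs=2), XS, YS)
baseline_accuracy = run_pipeline(MajorityClass(), XS, YS)
```

Swapping `Perceptron` for `MajorityClass` changes nothing in `run_pipeline`, which is the point: a recurrent or convolutional network could be dropped in the same way as long as it exposes the same two methods.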

Best Practices of Deep Learning Pipeline

- Manage data properly - Data management includes data analysis, data validation, and data transformation.

- Handle the training of the model - This includes model building, model specification, and model training.

- Evaluate the models - This includes the techniques of model evaluation and validation.

- Deploy, predict, and monitor - This serves the model to its use case.

Tools for Building Deep Learning Pipeline

| Components of Pipeline | Tools |
| --- | --- |
| Collect | Programming languages: Scala, Go, Python, R. Tools: Pentaho Data Integration, WEKA |
| Store | DynamoDB, MongoDB, SQL, HDFS, Amazon S3, Redis, Cassandra, Apache Kafka, Elasticsearch |
| Enrich | Apache Spark, Apache Storm, Apache NiFi, Apache Airflow, Amazon Elastic MapReduce (EMR) |
| Train | TensorFlow, PyTorch, Kafka, MXNet |
| Visualize | Kibana, Grafana, Amazon Athena (in the case of S3), D3.js, Flask |
| Infrastructure of Pipeline | Container technologies: Docker, Amazon Elastic Container Service. Servers: Amazon EC2, Datacenter |
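Independent of which tools are chosen, the components in the table can be pictured as functions that hand data from one stage to the next. The sketch below is a tool-agnostic illustration using only the Python standard library. Every function name, the in-memory `STORE` dictionary, and the sample records are assumptions made for this example, and the "train" step fits a single slope as a stand-in for training a real deep learning model.

```python
import math
from typing import Dict, List

Record = Dict[str, float]

# Stand-in for a database or object store such as those in the Store row.
STORE: Dict[str, List[Record]] = {}


def collect() -> List[Record]:
    """Collect: gather raw records (hard-coded here instead of an ingestion job)."""
    return [
        {"x": 1.0, "y": 2.0},
        {"x": 2.0, "y": 4.0},
        {"x": float("nan"), "y": 1.0},  # deliberately broken record
        {"x": 3.0, "y": 6.0},
    ]


def store(name: str, records: List[Record]) -> List[Record]:
    """Store: persist a named copy of the records and return it."""
    STORE[name] = list(records)
    return STORE[name]


def enrich(records: List[Record]) -> List[Record]:
    """Enrich: drop records with missing values (real jobs also join and transform)."""
    return [r for r in records if not any(math.isnan(v) for v in r.values())]


def train(records: List[Record]) -> float:
    """Train: least-squares slope through the origin, a placeholder for model fitting."""
    numerator = sum(r["x"] * r["y"] for r in records)
    denominator = sum(r["x"] ** 2 for r in records)
    return numerator / denominator


def visualize(slope: float, n_records: int) -> str:
    """Visualize: a one-line text report in place of a dashboard."""
    return f"trained on {n_records} records, slope={slope:.2f}"


# Chain the stages in the order the table lists them.
raw = store("raw", collect())
clean = store("clean", enrich(raw))
slope = train(clean)
report = visualize(slope, len(clean))
```

Keeping each stage behind its own function makes it possible to replace one tool, for example moving storage from one system to another, without touching the other stages.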

Concluding Deep Learning Pipeline

Deep Learning Pipelines is a high-level deep learning framework that facilitates simple deep learning workflows via the Spark MLlib Pipelines API and scales out deep learning on big data using Spark. It is a high-level API that calls into lower-level deep learning libraries. To learn more about building a deep learning pipeline architecture, you can explore our related content:

- Learn more about "Machine Learning Pipeline" Architecture

- Explore our use case based on "AWS DevOps Pipeline" on Laravel PHP

- Read our blog based on "Continuous Delivery Pipeline" for Python