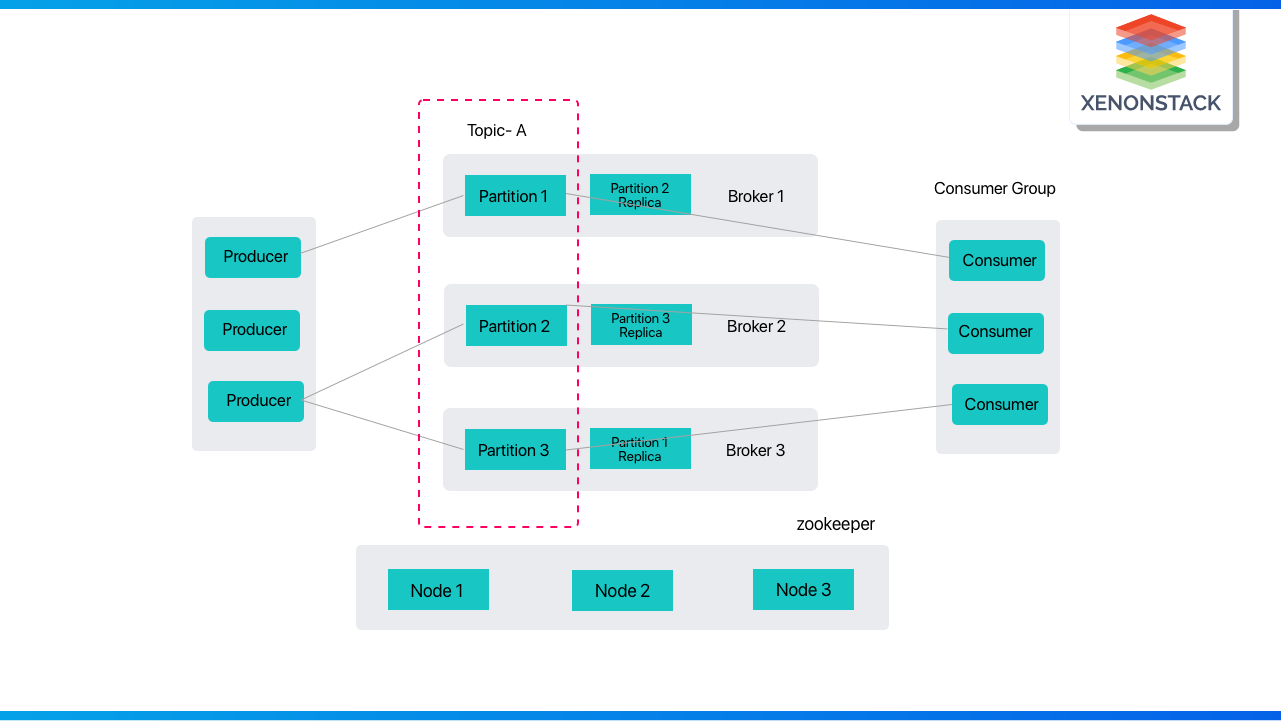

As a result, the degree of parallelism within a consumer group is limited by the number of partitions being consumed. In general, the more partitions a Kafka cluster has, the higher the throughput it can achieve, but a very large number of partitions can also have several negative impacts, such as:

- More partitions require more open file handles.

- More partitions may increase unavailability.

- More partitions may increase end-to-end latency.

- More partitions may require more memory in the client.

A rough way to choose the number of partitions is to work from throughput. Measure the throughput you can achieve on a single partition for production (call it x) and for consumption (call it y). If your target throughput is t, you need at least max(t/x, t/y) partitions. The per-partition throughput achievable on the producer depends on batch size, compression codec, acknowledgment type, replication factor, and so on.
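The max(t/x, t/y) rule can be written out as a small calculation. The following is a minimal sketch; the function name and the throughput figures are made up for illustration, and the result is rounded up because a fractional partition is not possible.

```python
import math


def min_partitions(target: float, produce_per_partition: float,
                   consume_per_partition: float) -> int:
    """Smallest partition count that meets target throughput t,
    given per-partition production (x) and consumption (y) throughput."""
    if min(target, produce_per_partition, consume_per_partition) <= 0:
        raise ValueError("all throughput values must be positive")
    needed_for_producers = math.ceil(target / produce_per_partition)
    needed_for_consumers = math.ceil(target / consume_per_partition)
    # The slower side (producer or consumer) dictates the minimum.
    return max(needed_for_producers, needed_for_consumers)


# Hypothetical figures in MB/s: t = 200, x = 10, y = 25.
print(min_partitions(target=200, produce_per_partition=10,
                     consume_per_partition=25))
```

With these assumed numbers the producer side is the bottleneck, so it determines the partition count. Measured values of x and y from your own cluster should replace the placeholders.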

In general, a Kafka cluster with more partitions has greater throughput. However, the impact of having too many partitions in total or per broker on factors like availability and latency must be considered.

What is the Architecture of ZooKeeper?

Apache ZooKeeper is a free and open-source distributed coordination service that helps manage a large number of servers. Management and coordination are hard in a distributed environment. ZooKeeper automates this work, letting developers focus on application features rather than on how the application is distributed.

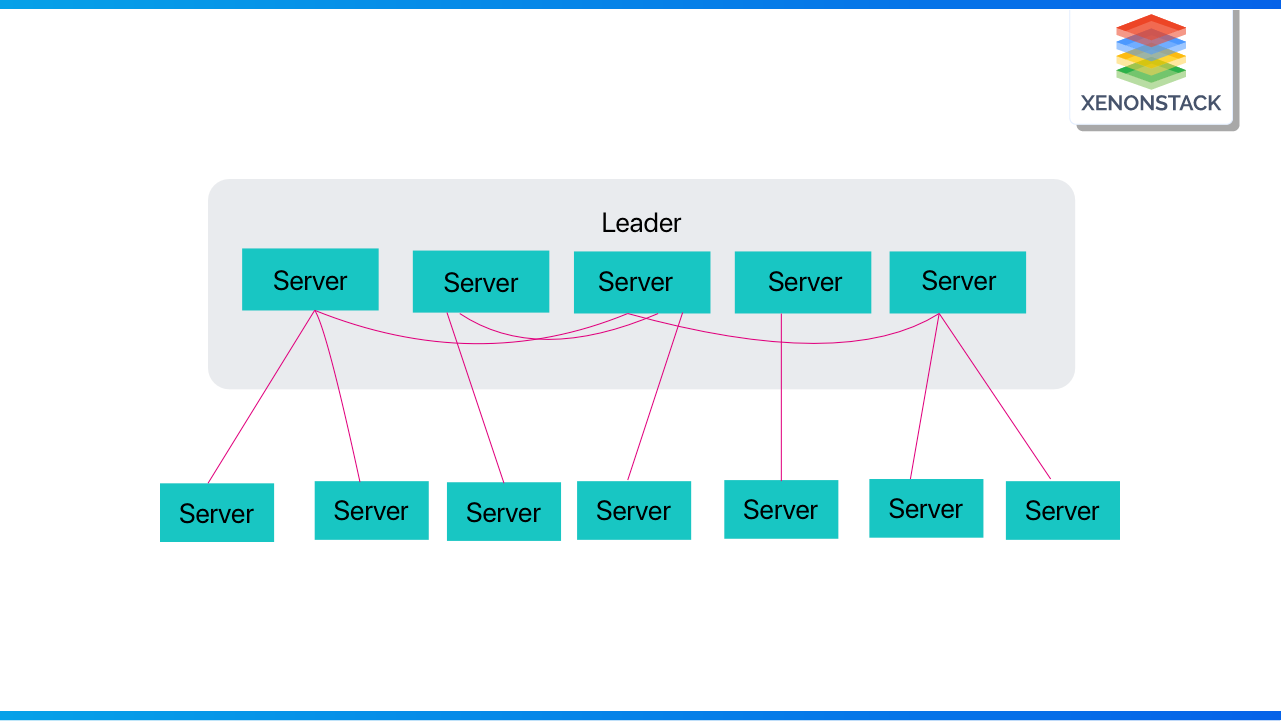

Apache ZooKeeper uses a client-server architecture in which clients are the machine nodes of the application and servers are the ZooKeeper nodes. The diagram depicts the relationship between the servers and their clients: each client uses the client library and can communicate with any of the ZooKeeper nodes.

Server

When a client connects, the server sends an acknowledgment. If the connected server does not respond, the client will immediately transfer the message to another server.

Client

The client is a node in a distributed application cluster. It enables you to obtain information from a server. Every client sends a message to the server at regular intervals, which allows the server to determine whether or not the client is alive.

Leader

A server is designated as the Leader. It provides all of the information to the clients and an acknowledgment that the server is still operational. If any of the connected nodes fails, it will undertake automated recovery.

Follower

A follower is a server node that obeys the instructions of the leader. Client read requests are handled by the ZooKeeper server connected to the client. The ZooKeeper leader is in charge of handling client write requests.

Cluster/Ensemble

An ensemble, or cluster, is a collection of ZooKeeper servers. When running Apache ZooKeeper, you can deploy it in cluster mode to keep the system working at its best.
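The failover behaviour described for servers and clients above can be illustrated with a short sketch. This is not the ZooKeeper client library: `query_with_failover`, `fake_send`, and the host names are hypothetical stand-ins that only model "try the next server when one does not respond".

```python
from typing import Callable, Optional, Sequence


def query_with_failover(servers: Sequence[str],
                        send: Callable[[str], str]) -> str:
    """Try each server in order and return the first successful response."""
    last_error: Optional[Exception] = None
    for server in servers:
        try:
            return send(server)
        except ConnectionError as exc:
            last_error = exc  # this server did not answer; try the next one
    raise ConnectionError("no server in the ensemble responded") from last_error


def fake_send(server: str) -> str:
    """Hypothetical transport in which the first server is down."""
    if server == "zk1:2181":
        raise ConnectionError(f"{server} unreachable")
    return f"ok from {server}"


print(query_with_failover(["zk1:2181", "zk2:2181", "zk3:2181"], fake_send))
```

In this sketch the request lands on the second server because the first one raises a connection error. A real client also has to re-establish its session on the new server, which is omitted here.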

Role of ZooKeeper in Kafka and Backup

ZooKeeper achieves high availability and consistency by replicating its data across the nodes of an ensemble. If a node fails, ZooKeeper can fail over immediately: for example, if the leader node fails, the ensemble elects a new leader in real time by voting. A client whose server fails to answer can query a different node.

ZooKeeper plays a small but crucial coordinating role in newer versions of Kafka (>= 0.10.x). When new Kafka brokers join the cluster, they use ZooKeeper to discover and connect to the other brokers. The cluster also uses ZooKeeper to pick the controller and track the controller epoch. Finally, and arguably most significantly, ZooKeeper stores the mapping of topic partitions to Kafka brokers, which keeps track of the data held on each broker. Without these mappings the data would still exist, but it would be neither accessible nor replicated.
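To make the topic partition mapping more concrete, here is a minimal sketch that inverts such a mapping into a per-broker view. The JSON payload is hand-written for illustration and only resembles what a ZooKeeper-based Kafka cluster stores under a topic znode (for example `/brokers/topics/orders`, where `orders` is a hypothetical topic); the exact fields vary by Kafka version, and no ZooKeeper connection is made.

```python
import json
from typing import Dict, List

# Hand-written sample payload: partition id -> list of replica broker ids.
SAMPLE_TOPIC_ZNODE = (
    '{"version": 1, "partitions": {"0": [1, 2], "1": [2, 3], "2": [3, 1]}}'
)


def partitions_by_broker(znode_payload: str) -> Dict[int, List[int]]:
    """Invert a partition -> replicas mapping into broker -> partitions."""
    assignment = json.loads(znode_payload)["partitions"]
    by_broker: Dict[int, List[int]] = {}
    for partition, replicas in assignment.items():
        for broker_id in replicas:
            by_broker.setdefault(broker_id, []).append(int(partition))
    # Sort brokers and partitions for a stable, readable result.
    return {broker: sorted(parts) for broker, parts in sorted(by_broker.items())}


print(partitions_by_broker(SAMPLE_TOPIC_ZNODE))
```

The per-broker view shows why losing the mapping is serious: the log files remain on disk, but nothing records which broker holds which partition.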

How is the heartbeat managed for Kafka Brokers?

- The heartbeat is the mechanism by which Kafka knows whether the business application that contains the consumer is still up and running.

- It lets Kafka expel an inactive consumer from the group and redistribute its partitions to the remaining consumers.

- The consumer sends periodic status updates over the network to say that it is still alive, and the Kafka broker evicts consumers whose updates stop arriving before a timeout. In older versions of Kafka, the heartbeat was only triggered by calling the poll method. This had a problem: if your processing takes a long time, you need to set a long timeout, which results in a system that takes minutes to notice and recover from a failure.

- Newer versions of Kafka, following KIP-62, handle this mechanism in a background thread. Instead of waiting for the application to call poll, this thread tells Kafka that everything is fine.

- If your entire process dies, the heartbeat thread dies with it and Kafka notices quickly. Detection no longer depends on how long you spend processing data, because the heartbeat is sent asynchronously and more frequently.
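As a rough illustration of the points above, the sketch below models the two independent limits that apply once heartbeats come from a background thread: one on the time since the last heartbeat and one on the time between polls. The field names mirror the consumer settings `session.timeout.ms` and `max.poll.interval.ms`; the class, the function, and the numbers are assumptions made for the example, not Kafka client code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConsumerTimeouts:
    session_timeout_ms: int    # stands in for session.timeout.ms
    max_poll_interval_ms: int  # stands in for max.poll.interval.ms


def eviction_reason(cfg: ConsumerTimeouts, ms_since_heartbeat: int,
                    ms_since_poll: int) -> Optional[str]:
    """Return why the consumer would drop out of the group, or None."""
    if ms_since_heartbeat > cfg.session_timeout_ms:
        return "session_timeout"  # heartbeats stopped: process likely dead
    if ms_since_poll > cfg.max_poll_interval_ms:
        return "poll_timeout"     # alive, but processing is stuck or too slow
    return None


# Hypothetical settings: 10 s for heartbeats, 5 min between polls.
cfg = ConsumerTimeouts(session_timeout_ms=10_000, max_poll_interval_ms=300_000)

# Slow processing with a healthy heartbeat thread: still a group member.
print(eviction_reason(cfg, ms_since_heartbeat=3_000, ms_since_poll=120_000))
# Whole process died, so heartbeats stopped: detected within seconds.
print(eviction_reason(cfg, ms_since_heartbeat=15_000, ms_since_poll=1_000))
```

Separating the two limits is what allows a short failure-detection window without forcing a short processing window.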

Conclusion

In this look at the role of ZooKeeper in Kafka, we've seen that a Kafka cluster relies heavily on ZooKeeper to function effectively. We also saw that Kafka broker settings are saved in ZooKeeper nodes, and that older Kafka consumers relied on ZooKeeper to track the most recent message consumed. We also looked into ZooKeeper server monitoring and ZooKeeper server hardware.

