Introduction to Serverless Computing

Serverless computing is often referred to as the FaaS (Function-as-a-Service) model of cloud computing. Developers write one or more functions and attach each one to an event. Consumers do not have to worry about resources, because resources are allocated when a function starts and released when it ends, and the platform itself provides them. Because functions run only in response to events, each execution is short-lived, which is why cloud providers describe serverless as an ephemeral computing service.


This means the cloud consumer is charged according to two things: the execution time of the provided function, measured per millisecond, and the number of times the code is executed. While clients are active and the functions are in use, the consumer is charged. If there are no clients, no events occur, the functions do not run, and the consumer is not charged. Serverless computing also makes scaling effectively unlimited from the developer's point of view. There is no load management to do, because code simply runs on each request or event, and when requests arrive in parallel, a separate function instance runs for each one.
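The billing model described above can be sketched as a small calculation: total billed milliseconds multiplied by a per-millisecond rate, plus a fee for each invocation. The rates below are hypothetical placeholders and not any provider's real prices. Actual price sheets differ, and some providers also weight duration by the memory allocated to the function.

```python
from decimal import Decimal
from typing import Sequence


def serverless_cost(durations_ms: Sequence[int],
                    price_per_ms: Decimal,
                    price_per_invocation: Decimal) -> Decimal:
    """Estimate a pay-per-use bill from per-invocation execution times.

    durations_ms: execution time of each invocation, in whole milliseconds.
    price_per_ms, price_per_invocation: hypothetical rates, not real prices.
    """
    total_ms = sum(durations_ms)     # execution time billed per millisecond
    invocations = len(durations_ms)  # number of times the code ran
    return total_ms * price_per_ms + invocations * price_per_invocation


# Three invocations (100 + 250 + 50 = 400 ms) at made-up rates.
print(serverless_cost([100, 250, 50], Decimal("0.00001"), Decimal("0.0002")))
```

Decimal is used instead of float so that small per-millisecond rates add up without rounding noise. An empty list of invocations (no events at all) yields a zero bill.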

What is Serverless Computing?

Serverless computing is not actually server-less. There are servers, but the consumers of cloud computing who build and offer a service do not have to plan or manage the servers and their properties, that is, the infrastructure. The cloud service provider does that. The developers of the service only supply the functionality, which the provider runs either on request or on an event. In other words, developers supply the actual business logic, and the cloud provider handles all server management and runs that logic on demand.

Think of it like this. Suppose a trained, national-level player has to play on a team made up entirely of beginners. He has to manage his own game while keeping everyone else in mind. He has to move the other players into position, keep an eye on each of them, and help them improve, all on top of developing himself. That is what the older forms of cloud computing were like. The developer of the service was the player, and he had to take care of both the infrastructure and the business model.


Serverless computing, by contrast, is like the situation of a player whose teammates are professionals, or whose weaker teammates have a trainer. He still has to contribute to the team. Beyond that, he can concentrate on his own play and skills, without worrying about how his teammates play. The same holds in serverless computing. The player is again the developer and the teammates are again the infrastructure. The difference is that there are now experienced teammates or an experienced coach, and these stand for the cloud providers. Developers do not have to worry much about the infrastructure because the providers handle the foundation. A developer still needs to understand how serverless works and what can be done with it, but otherwise only has to focus on the business model or logic.

Importance of Serverless Computing

The importance of Serverless Computing is listed below:

A lot less work for a service developer

- Server scaling, planning, and every other kind of server management are no longer the cloud consumer's job.

- Just provide the logic in a single function, set the event or request that triggers it, and the work is done.

- Cloud providers also offer logging and monitoring systems to consumers.

- With less work to do, deployment time is short.
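As a rough illustration of "provide the logic in a single function", here is a handler written in the `handler(event, context)` style that AWS Lambda's Python runtime uses. The event shape is an assumption: an HTTP request forwarded by API Gateway, with its query string under `queryStringParameters`. The greeting logic is a hypothetical example. Wiring the function to its event or request is done in the provider's trigger configuration, not in the code.

```python
import json
import logging

# On platforms such as AWS Lambda, log output is captured by the provider's
# logging service, so the function does not run its own log infrastructure.
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


def handler(event, context):
    """Hypothetical business logic: greet the caller named in the query string."""
    # The query-string field may be missing or None, so fall back to an empty dict.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    logger.info("greeting %s", name)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test with a hand-written event; the context object is unused here.
print(handler({"queryStringParameters": {"name": "Ada"}}, None))
```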

Works Automatically

- Server management is the work of cloud providers.

- Functions are executed on either request or event.

- Monitoring and logging are the work of providers.

- Largely automatic from the cloud consumer's point of view.

Infinite Scalability

- The higher the load, the more requests arrive, and the more function instances run.

- Scaling happens automatically, in an elastic fashion.
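The "one running function per request" behavior can be sketched as a toy scheduler. Each in-flight request occupies one function instance, and finished instances are reused. A new instance starts only when all existing ones are busy, so the number of instances tracks peak concurrency. This is a simplified model, not how any specific provider schedules work. Real platforms also enforce concurrency limits, so "infinite" is best read as "very large".

```python
import heapq
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Request:
    arrival_ms: int   # when the request or event arrives
    duration_ms: int  # how long the function runs for it


def instances_needed(requests: List[Request]) -> int:
    """Count the function instances started under this toy scaling model."""
    busy_until: List[int] = []  # min-heap of finish times of busy instances
    idle = 0                    # warm instances waiting for work
    started = 0
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        # Instances whose work has finished by this arrival become idle again.
        while busy_until and busy_until[0] <= req.arrival_ms:
            heapq.heappop(busy_until)
            idle += 1
        if idle:
            idle -= 1      # reuse a warm instance
        else:
            started += 1   # scale out: start a new instance
        heapq.heappush(busy_until, req.arrival_ms + req.duration_ms)
    return started


# A burst of 3 simultaneous requests needs 3 instances; a later request reuses one.
burst = [Request(0, 100)] * 3 + [Request(150, 100)]
print(instances_needed(burst))
```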

Pay-per-use

- As the name says, cloud consumers pay only for what they use. The charge is calculated from the execution time of the functions they provide and the number of times those functions run.

- No charges if there is no use.
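To make "no charges if there is no use" concrete, the sketch below compares a pay-per-use bill with a hypothetical fixed monthly fee for an always-on server or container. It also finds the monthly request volume at which the two cost the same. All prices are made-up assumptions. What matters is the shape of the trade-off: below the break-even volume, paying per use is cheaper, and above it a fixed-price option may be cheaper.

```python
from decimal import Decimal


def cost_per_request(avg_duration_ms: int, price_per_ms: Decimal,
                     price_per_invocation: Decimal) -> Decimal:
    """Pay-per-use cost of one request at hypothetical rates."""
    return avg_duration_ms * price_per_ms + price_per_invocation


def monthly_serverless_cost(requests: int, avg_duration_ms: int,
                            price_per_ms: Decimal, price_per_invocation: Decimal) -> Decimal:
    # Zero requests means zero cost: nothing runs, so nothing is billed.
    return requests * cost_per_request(avg_duration_ms, price_per_ms, price_per_invocation)


def break_even_requests(fixed_monthly_cost: Decimal, avg_duration_ms: int,
                        price_per_ms: Decimal, price_per_invocation: Decimal) -> Decimal:
    """Monthly request count at which pay-per-use equals the fixed fee."""
    return fixed_monthly_cost / cost_per_request(avg_duration_ms, price_per_ms, price_per_invocation)


# Made-up numbers: 100 ms per request, and a fixed server costing 40 per month.
RATE_MS, RATE_CALL = Decimal("0.000001"), Decimal("0.0001")
print(int(break_even_requests(Decimal("40"), 100, RATE_MS, RATE_CALL)))
```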


Serverless vs. Traditional Architecture

The differences between serverless and traditional architectures are described below:

With TPM (Transaction Processing Monitor)

Looking back in time, working with serverless has many similarities with how development teams used to work with mainframes. There was a class of technology called TPM (Transaction Processing Monitor), with IBM's CICS (Customer Information Control System) as one example. Its job was to handle non-functional concerns such as load management, security, monitoring, and deployment. In that sense, a TPM is very similar to serverless.

With IaaS (Infrastructure as a Service)

IaaS offers complete infrastructure on demand, delivered through virtualization. Developers first have to set up a virtual machine and then deploy the app on it. Everything has to be managed by the developer team, that is, by the cloud consumers.

With CaaS (Container as a Service)

CaaS (Container as a Service) was, and still is, used where maximum flexibility is needed. It delivers an environment that the developer fully controls. Developers can deploy the app however they want and run it with few or no dependencies on the host environment. They have to manage traffic themselves, and the capacity is typically rented for a fixed period, such as six months or a year.

With PaaS (Platform as a Service)

Then PaaS (Platform as a Service) came into focus. It was a step closer to serverless than CaaS, because the developer does not have to worry about the operating system and how it works. Developers only build the app or service and deploy it to the platform. However, PaaS also has to be paid for over a fixed period. In serverless, scaling is automatic and happens instantly without any pre-planning. PaaS can scale too, but not always automatically, and developers may have to prepare their app before scaling.

Serverless vs. Containers

A container runs on the machine it was initially assigned to, although it can be relocated. In serverless, assigning a server is the provider's responsibility, and a server is attached only when an event triggers the function. As a result, there is no guarantee that the same function will run on the same machine, even on two consecutive invocations. Containers have to be scaled by the developers, which is not easy but can be done. Scaling in serverless is automatic, like electricity in our houses: it is used according to need.

With containers, charges are fixed, because the containers have to run continuously whether there is traffic or not, for a month, a few months, or a year. With serverless, again as with electricity, we pay only for what we use, based on the execution time of our functions. With containers, management is the cloud consumer's responsibility, from reducing failure risks to handling the connections between containers and other resources. With serverless, cloud consumers submit their business logic, or part of it, as functions, and the cloud provider manages everything else.

Components of Serverless Computing Architecture

Serverless computing needs three main components to work at full pace:

- API Gateway

- FaaS (Function-as-a-Service)

- BaaS(Backend-as-a-Service)
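The way the three components cooperate can be sketched in-process. The API gateway maps an incoming HTTP method and path to a function, and the function holds only business logic. Persistent data lives in a backend service. Here a plain dictionary stands in for the BaaS datastore. The route table, paths, and function names are all hypothetical.

```python
from typing import Callable, Dict, Tuple

# Stand-in for a managed backend service (BaaS), such as a hosted key-value store.
backend_store: Dict[str, str] = {}

Function = Callable[[dict], dict]


def create_note(request: dict) -> dict:
    """FaaS function: business logic only; storage is delegated to the backend."""
    backend_store[request["id"]] = request["text"]
    return {"status": 201}


def read_note(request: dict) -> dict:
    """FaaS function: look a note up in the backend service."""
    text = backend_store.get(request["id"])
    return {"status": 200, "text": text} if text is not None else {"status": 404}


# Hypothetical API gateway route table: (method, path) -> function.
routes: Dict[Tuple[str, str], Function] = {
    ("POST", "/notes"): create_note,
    ("GET", "/notes"): read_note,
}


def gateway(method: str, path: str, request: dict) -> dict:
    """Route a request to its function, or answer 404 if no route matches."""
    function = routes.get((method, path))
    if function is None:
        return {"status": 404}
    return function(request)


print(gateway("POST", "/notes", {"id": "1", "text": "hello"}))
print(gateway("GET", "/notes", {"id": "1"}))
```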

Classification

- Based on the method of triggering:

  - Request-based Serverless Computing

  - Event-driven Serverless Computing

Request-based Serverless Computing

Request-based serverless computing is the approach followed by services that act on a request. When a client sends a request, the function performs an action according to the type of application and sends a response back to the client (if applicable).

Event-driven Serverless Computing

Event-driven serverless computing is the approach followed by services that act on an event. An event can be of any kind, such as a file being uploaded, a file being deleted, or a new client being added. There is a huge range of possible events, and each event can trigger one or more functions. Functions can act on the event itself, on its properties, or on any data passed with it.
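Event-driven triggering can be sketched as a small in-process event bus. Functions subscribe to event types, and publishing one event invokes every function subscribed to that type with the event's data. The event names (`file.uploaded`, `file.deleted`) and the handler functions are hypothetical. On a real platform, the provider does this routing based on trigger configuration.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], str]
subscriptions: DefaultDict[str, List[Handler]] = defaultdict(list)


def on(event_type: str):
    """Decorator that registers a function as a trigger target for event_type."""
    def register(fn: Handler) -> Handler:
        subscriptions[event_type].append(fn)
        return fn
    return register


# Hypothetical functions: one event type can trigger more than one function.
@on("file.uploaded")
def make_thumbnail(event: dict) -> str:
    return f"thumbnail for {event['name']}"


@on("file.uploaded")
def index_metadata(event: dict) -> str:
    return f"indexed {event['name']} ({event['size']} bytes)"


@on("file.deleted")
def remove_from_index(event: dict) -> str:
    return f"removed {event['name']}"


def publish(event_type: str, event: dict) -> List[str]:
    """Invoke every function subscribed to this event type, passing the event data."""
    return [fn(event) for fn in subscriptions.get(event_type, [])]


print(publish("file.uploaded", {"name": "cat.png", "size": 2048}))
```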

Best Practices for Serverless Computing

- Functions should preferably be stateless, with any state saved in a database. The function then fetches the state, works on it, and stores it again (if needed).

- Serverless computing is a highly evolved form of cloud computing, but it is not the best choice for every kind of service. It is most beneficial for services with uneven or unpredictable traffic patterns. Serverless is not the best option for services with very high compute or heavy processing requirements, or for services that need file-system or operating-system access.

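- Relational databases are often a poor fit because they limit the number of connections that can be open at the same time. Many function instances opening connections at once can therefore lead to scalability and performance issues. Cold starts (the delay while a new function instance is initialized) can be reduced by managing resources accordingly.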

- It also helps to do as much computation as possible on the client side, so that less computation is needed on the server side.
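Two of the practices above can be combined in one sketch: keeping functions stateless, and taking care with relational-database connections and cold starts. The handler keeps no state of its own. It fetches a visit count from the database, increments it, and stores it again. The connection is opened once per function instance, at module level, and reused by later warm invocations. This means only the first (cold) invocation pays the setup cost, and each instance holds one connection rather than one per request.

The sketch rests on a few assumptions:

- The platform reuses an instance's module state between invocations. AWS Lambda may reuse execution environments, but this is not guaranteed.
- In-memory SQLite stands in for a real relational database.
- The `visits` table and its columns are hypothetical.

```python
import sqlite3
from typing import Optional

# Created once per function instance and reused by later (warm) invocations,
# so each instance holds one database connection instead of one per request.
_connection: Optional[sqlite3.Connection] = None
connections_opened = 0  # for illustration only


def get_connection() -> sqlite3.Connection:
    """Open the connection on the first (cold) invocation only, then reuse it."""
    global _connection, connections_opened
    if _connection is None:
        # In-memory SQLite stands in for a real relational database.
        _connection = sqlite3.connect(":memory:")
        _connection.execute(
            "CREATE TABLE visits (user_id TEXT PRIMARY KEY, count INTEGER NOT NULL)")
        connections_opened += 1
    return _connection


def handler(event, context):
    """Stateless handler: all state lives in the database, none in this instance."""
    conn = get_connection()
    user_id = event["user_id"]
    with conn:  # commits on success, rolls back on error
        # Fetch the state...
        row = conn.execute(
            "SELECT count FROM visits WHERE user_id = ?", (user_id,)).fetchone()
        # ...work on it...
        count = (row[0] if row else 0) + 1
        # ...and store it again.
        conn.execute(
            "INSERT OR REPLACE INTO visits (user_id, count) VALUES (?, ?)",
            (user_id, count))
    return {"user_id": user_id, "visits": count}


for _ in range(3):
    print(handler({"user_id": "ada"}, None))
print("connections opened:", connections_opened)
```

Even with reuse, many concurrent instances still mean many connections, so connection pooling in front of the database is often needed. Under concurrent invocations, the read-modify-write above should also become a single atomic update to avoid lost updates.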

Conclusion

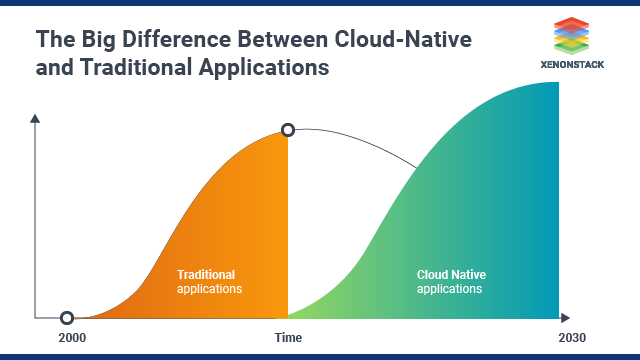

Serverless does have issues that count as disadvantages, but it is still a strong approach compared with the alternatives. Its tools and standards are not yet mature, yet it looks set to be the future of cloud computing. It is a very efficient approach, both in cost and for developers. Even so, it is not the best approach for every kind of service, at least not yet. A service or app should be analyzed properly before it is implemented this way.
