What is Linkerd?

Linkerd is an open-source, lightweight Service Mesh developed mainly for Kubernetes. It is used in production by companies such as PayPal and Expedia. It adds reliability, security, and visibility to Cloud-Native applications, and it provides observability for all Microservices running in the cluster without requiring any code changes in those Microservices.

Users can monitor the success rate, request volume, and latency of each individual service. Linkerd also provides live traffic analysis, which helps in diagnosing failures. The best part about Linkerd is that it works out of the box without complicated configuration. It can handle tens of thousands of requests per second, and it is designed to work with Kubernetes.
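To make the three metrics above concrete, here is a minimal sketch of how success rate, request rate, and latency percentiles can be derived from a batch of observed requests. The `Request` record and the function names are illustrative assumptions, not Linkerd's own data model; Linkerd's proxies compute and export these figures for you.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """One observed request. Field names are illustrative, not Linkerd's schema."""
    status: int        # HTTP status code returned to the caller
    latency_ms: float  # time taken to serve the request, in milliseconds


def success_rate(requests):
    """Fraction of requests that did not end in a 5xx server error."""
    if not requests:
        return 0.0
    ok = sum(1 for r in requests if r.status < 500)
    return ok / len(requests)


def requests_per_second(requests, window_seconds):
    """Average request rate over the observation window."""
    return len(requests) / window_seconds


def latency_percentile(requests, pct):
    """Nearest-rank percentile of latency, in milliseconds."""
    if not requests:
        raise ValueError("no requests observed")
    ordered = sorted(r.latency_ms for r in requests)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Four requests observed over a two-second window (made-up sample data).
sample = [
    Request(200, 12.0),
    Request(200, 15.0),
    Request(503, 250.0),
    Request(200, 18.0),
]

print(success_rate(sample))            # 3 of 4 requests succeeded
print(requests_per_second(sample, 2))  # 4 requests / 2 seconds
print(latency_percentile(sample, 50))  # median latency
print(latency_percentile(sample, 99))  # tail latency
```

The single failing request barely moves the median but dominates the 99th percentile, which is why tail latency is usually watched alongside success rate.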


Why Does Linkerd Matter?

Following are the reasons why Linkerd matters:

- It's completely open-source and has a very active community.

- Adding a Service Mesh gives a cluster of Microservices reliability, security, and visibility without code changes.

- It helps both developers and DevOps teams find bottlenecks in applications.

- It is an excellent choice of Service Mesh for monitoring Service-to-Service communication across multiple applications running in a cluster.

- It is battle-tested in production by many large enterprises.

- It's one of the most widely used Service Meshes, along with Istio.

- It works independently; it is not dependent on any particular library or language.

How Does Linkerd Work?

Configuring Linkerd in a cluster is a somewhat complex task, but with the right approach and by following all steps in sequence, it can be configured cluster-wide across all deployments.

Control Plane

The Control Plane comprises a collection of services deployed in a dedicated Kubernetes namespace. These services collect telemetry data, provide a front-facing API, and supply control data to all Data Plane proxies. The Control Plane has four components -

- Controller - It consists of several containers (public API, proxy API, destination) that provide most of the Control Plane’s functionality.

- Web - Serves the Linkerd dashboard.

- Prometheus - Scrapes all of the metrics exposed by Linkerd and stores them. Linkerd comes with a customized instance of Prometheus.

- Grafana - Renders the dashboards. Links to these dashboards are provided in the Linkerd dashboard itself.

Data Plane

The Data Plane consists of multiple lightweight proxies that run as Sidecar Containers alongside each instance of the application. To add an application to the Linkerd Service Mesh, the pods for that application must be redeployed so that a Data Plane proxy is added to each pod. (The linkerd inject command is used for this; it takes a single CLI command.) The proxies transparently intercept requests and responses between pods and add features such as TLS encryption, while also allowing or rejecting requests according to the defined policy. The behavior of these proxies is controlled entirely by the Control Plane.

Dashboard

The Linkerd dashboard provides a high-level view of what is happening with applications in near real time. It is used to view metrics (success rate, requests per second, etc.), visualize application dependencies, and check the health of the specific routes an application follows. Simply run linkerd dashboard from the terminal to open the dashboard in the browser.

Proxy

The proxy receives all incoming traffic for a pod and intercepts all outgoing traffic as well. It can also be added at run time. The proxy requires no code changes in applications, which allows it to be deployed with any kind of application.
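As an illustration of why no application code changes are needed, the sketch below shows the kind of manifest change that marks a workload for proxy injection. It assumes Linkerd 2.x, where the pod template carries the `linkerd.io/inject: enabled` annotation and the proxy is added when the pods are recreated. The `mark_for_injection` helper and the sample Deployment are hypothetical; in practice the inject command performs this step.

```python
import copy

# Annotation read by Linkerd 2.x when pods are created (assumes Linkerd 2.x).
INJECT_ANNOTATION = "linkerd.io/inject"


def mark_for_injection(deployment):
    """Return a copy of a Deployment manifest whose pod template requests a proxy.

    Only metadata changes; the application containers are left untouched.
    """
    patched = copy.deepcopy(deployment)
    template_meta = patched["spec"]["template"].setdefault("metadata", {})
    annotations = template_meta.setdefault("annotations", {})
    annotations[INJECT_ANNOTATION] = "enabled"
    return patched


# A made-up Deployment, already parsed from YAML into a dict.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "example/web:1.0"}]},
        }
    },
}

patched = mark_for_injection(deployment)
print(patched["spec"]["template"]["metadata"]["annotations"])
```

The container list is identical before and after, which is the point: the mesh is attached through pod metadata rather than through the application image.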

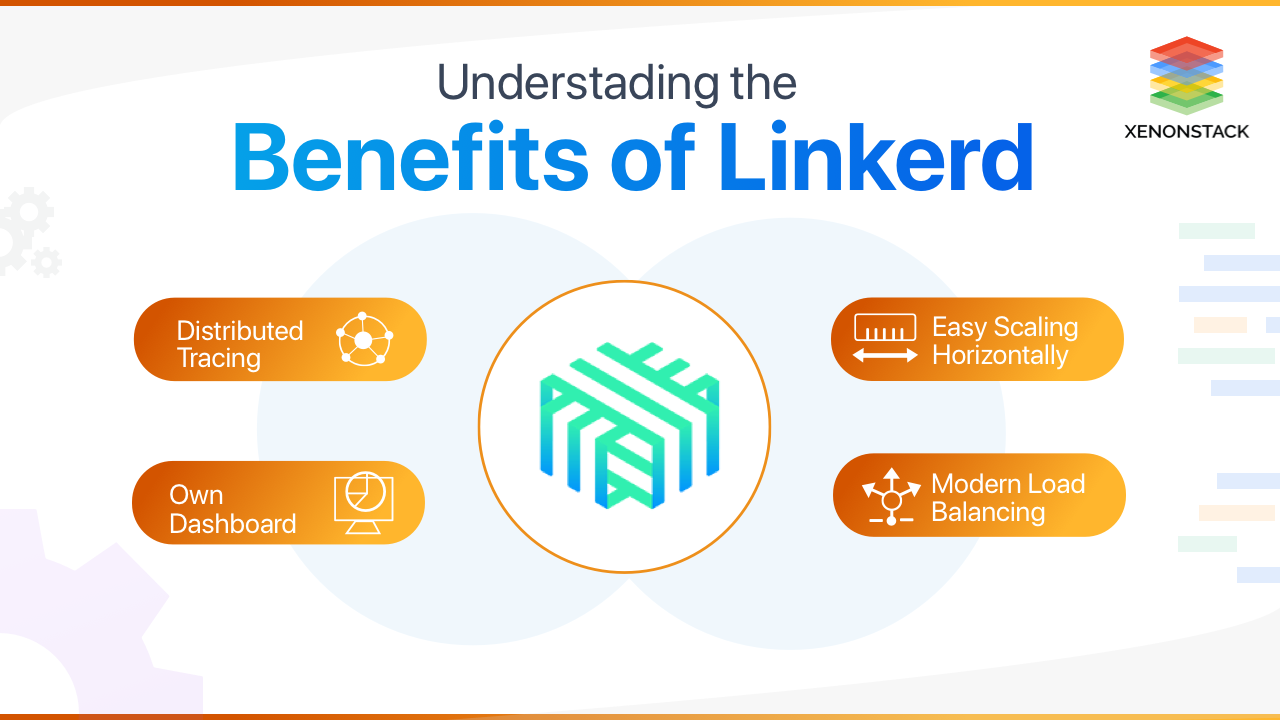

What are the benefits of Linkerd?

- While Istio is quite difficult to set up in a cluster, Linkerd requires no configuration; it works out of the box.

- It allows for easy horizontal scaling.

- It supports HTTP, HTTP/2, gRPC, and most other commonly used protocols.

- It allows TLS application-wide.

- It distributes traffic intelligently using modern load-balancing algorithms.

- It allows dynamic request routing and shifts traffic accordingly.

- It provides distributed tracing to trace the root cause of issues.

- It aligns well with the modern Microservices world.

- It provides features such as resilience, observability, and load balancing.

- It provides Prometheus and Grafana out of the box.

- It also has its own dashboard, which is great for observing what’s happening in real time.
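To give a feel for what latency-aware load balancing means, here is a simplified sketch that combines an exponentially weighted moving average (EWMA) of observed latency with a "power of two choices" pick. It is an illustration of the general technique, not Linkerd's implementation; the `Endpoint` class, the decay factor, and the latencies are all assumptions made for the example.

```python
import random


class Endpoint:
    """A backend instance with a smoothed latency estimate (illustrative only)."""

    def __init__(self, name, true_latency_ms, decay=0.3):
        self.name = name
        self.true_latency_ms = true_latency_ms  # what a request to it really costs
        self.decay = decay                      # weight given to the newest sample
        self.ewma_ms = 0.0                      # smoothed latency seen so far

    def observe(self, latency_ms):
        """Blend a new latency sample into the moving average."""
        self.ewma_ms = self.decay * latency_ms + (1 - self.decay) * self.ewma_ms


def pick(endpoints, rng):
    """Power of two choices: sample two endpoints, keep the one with lower EWMA."""
    a, b = rng.sample(endpoints, 2)
    return a if a.ewma_ms <= b.ewma_ms else b


def simulate(endpoints, rounds, seed=42):
    """Send `rounds` requests and count how many each endpoint received."""
    rng = random.Random(seed)
    counts = {e.name: 0 for e in endpoints}
    for _ in range(rounds):
        chosen = pick(endpoints, rng)
        chosen.observe(chosen.true_latency_ms)
        counts[chosen.name] += 1
    return counts


endpoints = [
    Endpoint("fast", 10.0),
    Endpoint("medium", 50.0),
    Endpoint("slow", 200.0),
]
counts = simulate(endpoints, rounds=1000)
print(counts)
```

In this toy version a slow endpoint's estimate never recovers, because it stops receiving traffic once it looks slow. Production balancers typically decay estimates over time so that a recovered endpoint gets another chance.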

How to adopt Linkerd?

The most basic requirement is that the application be composed of small, independent Microservices deployed in Docker Containers and managed by a Kubernetes cluster. RBAC needs to be enabled in the cluster; it is enabled by default from v1.9. With these requirements met, adopting Linkerd is fairly simple and straightforward.

Install Linkerd proxies per node (to minimize resource usage), or deploy the proxy as a sidecar alongside the application. A sensible path is to first inject Linkerd as a sidecar for a couple of Microservices and test everything between them before deploying it cluster-wide for all Microservices running in Kubernetes. Once Linkerd is adopted and a Service Mesh is created, its features add value to the management of all Microservices.
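The resource trade-off between the two deployment models comes down to how many proxy instances run. The following back-of-the-envelope sketch compares them; the pod count, node count, and per-proxy memory figures are hypothetical placeholders, so substitute measurements from your own cluster.

```python
def proxy_count(pods, nodes, per_node):
    """Number of proxy instances: one per node, or one per pod for sidecars."""
    return nodes if per_node else pods


def proxy_memory_mb(pods, nodes, mb_per_proxy, per_node):
    """Total memory consumed by proxies under the chosen deployment model."""
    return proxy_count(pods, nodes, per_node) * mb_per_proxy


# Hypothetical cluster: 120 application pods spread over 6 nodes.
PODS, NODES = 120, 6

# Hypothetical footprints: a small sidecar versus a larger shared per-node proxy.
sidecar_total = proxy_memory_mb(PODS, NODES, mb_per_proxy=20, per_node=False)
per_node_total = proxy_memory_mb(PODS, NODES, mb_per_proxy=150, per_node=True)

print(f"sidecar model:  {sidecar_total} MB across {proxy_count(PODS, NODES, False)} proxies")
print(f"per-node model: {per_node_total} MB across {proxy_count(PODS, NODES, True)} proxies")
```

With these made-up numbers the per-node model uses less memory overall, but the answer flips as the per-proxy footprint or the pods-per-node ratio changes, so it is worth running the arithmetic with real figures.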


Best Practices to Implement Linkerd Service Mesh for Microservices

Best Practices to follow while implementing Linkerd atop Microservices running in the cluster -

- The default way is to run Linkerd using the Sidecar pattern, alongside every pod, but this pattern has downsides such as excessive resource usage.

- Hence, it is best to run it per node, which keeps resource usage considerably lower.

- Every node should have a Linkerd pod alongside one or more instances of the services. Each node should form a separate neighborhood that allows communication within it.

- There should be no direct Service-to-Service communication across neighboring nodes; only Linkerd proxies should talk to other Linkerd proxies.

- Create alerts based on the Prometheus or Grafana metrics that the proxies provide for running applications. Test Linkerd on a few Microservices first before injecting the proxy for all of the Microservices.
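For the alerting practice in the last point, a common pattern is to fire only when a metric stays below its threshold for several consecutive readings, so that a single bad scrape does not page anyone. The sketch below shows that logic in isolation; the function name, the 99% threshold, and the three-sample window are assumptions, and in a real setup the same rule would normally be expressed in your Prometheus or Grafana alerting configuration.

```python
def should_alert(success_rates, threshold=0.99, sustained_samples=3):
    """Fire only when the most recent readings are all below the threshold.

    success_rates: success-rate readings ordered oldest to newest,
                   each a fraction between 0.0 and 1.0.
    """
    if len(success_rates) < sustained_samples:
        return False  # not enough history to judge yet
    recent = success_rates[-sustained_samples:]
    return all(rate < threshold for rate in recent)


# Made-up readings for two services.
degraded = [1.0, 0.98, 0.97, 0.95]  # below threshold for the last three readings
blip = [0.98, 1.0, 0.97, 0.95]      # recovered to 1.0 within the last three readings

print(should_alert(degraded))
print(should_alert(blip))
```

Running the same check against the handful of Microservices meshed first gives a simple gate for deciding whether to extend the proxy to the rest.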

What are the best tools for enabling Linkerd?

The following are the best tools for enabling Linkerd:

- Proxy - Built into Linkerd.

- Deployment Tools - Container and orchestration tools such as Docker and Kubernetes.

- Service Discovery - Built-in proxy feature.

- Monitoring - Prometheus and Grafana.

Concluding Linkerd

Linkerd is installed as a separate, standalone proxy component. Applications use Linkerd by running Linkerd instances in defined locations and using them to proxy calls. Instead of connecting to destination applications directly, each service connects to its corresponding Linkerd instance and treats that instance as if it were the destination service.
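The indirection described above can be sketched with a toy model in which a caller talks only to its local proxy, and the proxies relay the call to the destination. The `Service` and `Proxy` classes and the names L1 and L2 (caller-side and destination-side instances) are purely illustrative and do not reflect Linkerd's internals.

```python
class Service:
    """A destination application that handles a message."""

    def __init__(self, name):
        self.name = name

    def handle(self, message, trace):
        trace.append(self.name)
        return f"{self.name} handled {message!r}"


class Proxy:
    """Stand-in for a Linkerd instance that forwards calls to its next hop."""

    def __init__(self, name, next_hop):
        self.name = name
        self.next_hop = next_hop

    def handle(self, message, trace):
        trace.append(self.name)  # a real proxy would also record metrics here
        return self.next_hop.handle(message, trace)


service_b = Service("service-b")
l2 = Proxy("L2", service_b)  # sits next to service B
l1 = Proxy("L1", l2)         # sits next to service A

# Service A addresses its local proxy exactly as if it were service B.
trace = ["service-a"]
reply = l1.handle("ping", trace)

print(" -> ".join(trace))
print(reply)
```

Because `Proxy` and `Service` expose the same `handle` method, the caller cannot tell whether it is talking to the destination or to a proxy, which mirrors how a service treats its Linkerd instance as the destination.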

For example, take two Linkerd instances, L1 and L2. L1 receives calls from its neighboring service (service A), while L2 finally delivers the messages to its neighboring service (service B). In modern applications, some communication happens over HTTP/1.x or HTTP/2 and some over gRPC; Linkerd works well with all of these protocols. Hence, it is best suited to deployments with more than 15-20 Microservices running inside the Kubernetes cluster. More can also be achieved by creating a Service Mesh infrastructure layer. To learn more, see the following:

- Discover more about Data Mesh and its Benefits

- Explore Istio Service Mesh Architecture and Service Discovery
