Among the technologies now established in the fast-evolving automotive industry, computer vision plays a crucial role in vehicle safety and monitoring systems.

Using a range of algorithms and machine learning models, computer vision systems interpret visual data from the vehicle’s environment and enable several advanced safety features, improving the driving experience and reducing accidents. This blog reviews the current state of computer vision in automotive safety, covering its role, underlying technologies, use cases, opportunities, and limitations.

Introduction to Computer Vision in Automotive Safety

Computer vision is a branch of AI in which information is extracted, processed, and interpreted from images and videos. In automotive safety, computer vision systems draw on data from different sensing devices, chiefly cameras, to continuously observe the environment, driver behaviour, and vehicle state. These systems form the core of advanced driver assistance systems (ADAS) and are critical to the evolution of autonomous vehicles.

Key Applications of Computer Vision in Vehicle Safety

Fig 1.0: Key Applications of Computer Vision in Vehicle Safety


- Advanced Driver Assistance Systems (ADAS)

ADAS covers a spectrum of tools that help the driver with specific driving tasks and make the vehicle safer and more comfortable.

Key ADAS features enabled by computer vision include:

- Collision Avoidance Systems

Collision avoidance systems rely on computer vision to identify potential obstacles on the road. Techniques such as object detection, tracking, and trajectory prediction are fundamental:

- Object detection methods such as YOLO (You Only Look Once) and Faster R-CNN detect and recognise objects (vehicles, pedestrians, and cyclists) in real time.
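To illustrate how tracking feeds a collision warning, here is a minimal sketch that estimates time to collision (TTC) from two successive range measurements of a tracked object. It assumes a constant closing speed, and the `TrackedObject` fields and the 2.5 s warning threshold are hypothetical values chosen for illustration, not taken from any particular ADAS product.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    """Hypothetical tracker output: range to one object at two frames."""
    distance_prev_m: float  # range at the earlier frame, in metres
    distance_now_m: float   # range at the current frame, in metres
    dt_s: float             # time between the two frames, in seconds


def time_to_collision(obj: TrackedObject) -> float:
    """Constant-velocity TTC in seconds; infinity if the gap is not closing."""
    if obj.dt_s <= 0:
        raise ValueError("dt_s must be positive")
    closing_speed = (obj.distance_prev_m - obj.distance_now_m) / obj.dt_s
    if closing_speed <= 0:
        return float("inf")
    return obj.distance_now_m / closing_speed


def should_warn(obj: TrackedObject, threshold_s: float = 2.5) -> bool:
    """Raise a warning when the estimated TTC drops below the threshold."""
    return time_to_collision(obj) < threshold_s
```

A production system would smooth the range estimates over many frames and predict full trajectories rather than rely on two samples.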

- Driver Monitoring Systems (DMS)

Keeping the driver alert throughout the journey is essential for vehicle safety. Computer vision-based DMS employs facial recognition and gaze tracking to monitor the driver’s state.
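One common way to turn per-frame eye measurements into a drowsiness signal is PERCLOS, the proportion of time the eyes are closed over a window. The sketch below assumes a hypothetical upstream model that outputs an eye-openness score between 0 and 1 for each frame; the closure threshold and drowsiness limit are illustrative assumptions, not calibrated values.

```python
def perclos(eye_openness, closed_below=0.2):
    """Fraction of frames in the window where the eye counts as closed.

    eye_openness: per-frame openness scores in [0, 1] from a hypothetical
    facial-landmark model. closed_below: assumed threshold for "closed".
    """
    if not eye_openness:
        raise ValueError("need at least one frame")
    closed_frames = sum(1 for score in eye_openness if score < closed_below)
    return closed_frames / len(eye_openness)


def is_drowsy(eye_openness, perclos_limit=0.3):
    """Flag drowsiness when the eyes are closed for too much of the window."""
    return perclos(eye_openness) >= perclos_limit
```

In practice the window would cover tens of seconds of video, and the result would be combined with gaze direction and head pose before alerting the driver.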

- Traffic Sign and Signal Recognition

Drivers need to read signs and signals correctly at all times on the road. Computer vision systems recognize and interpret road signs, traffic lights, and signals:

- Traffic Sign Recognition (TSR) uses deep learning, specifically convolutional neural networks (CNNs), to identify and interpret road signs and tell the driver when to slow down, stop, or follow other regulatory instructions.

- Traffic Light Detection identifies whether a traffic light is red, yellow, or green, which helps the vehicle decide, for example, whether to stop at a red light or prepare to move on green.
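As a rough illustration of the colour decision step, the sketch below classifies the mean RGB colour of an already-detected lamp region by its hue. The hue bands and the saturation and brightness thresholds are assumptions made for this example; real systems typically use a trained classifier and must cope with glare, LED flicker, and camera white balance.

```python
import colorsys


def classify_light(rgb, min_saturation=0.4, min_value=0.4):
    """Map the mean (R, G, B) of a lamp region, 0..255 each, to a colour name."""
    r, g, b = (channel / 255.0 for channel in rgb)
    hue, saturation, value = colorsys.rgb_to_hsv(r, g, b)
    # Dim or washed-out regions are treated as "no active lamp".
    if saturation < min_saturation or value < min_value:
        return "unknown"
    hue_deg = hue * 360.0
    if hue_deg < 20 or hue_deg >= 340:   # red wraps around 0 degrees
        return "red"
    if 40 <= hue_deg < 75:
        return "yellow"
    if 90 <= hue_deg < 180:
        return "green"
    return "unknown"
```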

- Surround View and Parking Assistance

Computer vision enhances low-speed manoeuvres through surround view systems and parking assistance.

Underlying Technologies and Algorithms

Fig 2.0: Machine Vision-based Autonomous Road Hazard Avoidance System for Self-driving Vehicles

- Image Processing and Feature Extraction

Image processing, a critical part of computer vision, involves operations such as noise removal, scaling, and contrast stretching. Edge detectors (Sobel, Canny), corner detectors (Harris), and keypoint descriptors (SIFT, SURF) are applied to extract important features from the visual data.
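For readers who want to see what an edge detector does, here is a minimal pure-Python sketch of the Sobel operator on a grayscale image stored as a list of rows. It is written for clarity rather than speed, leaves border pixels at zero as a simplifying assumption, and is not how a production pipeline would implement it (those typically use optimized libraries or dedicated hardware).

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude for each interior pixel of a 2D image."""
    height, width = len(image), len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for ky in range(3):
                for kx in range(3):
                    pixel = image[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * pixel
                    gy += SOBEL_Y[ky][kx] * pixel
            result[y][x] = math.hypot(gx, gy)
    return result
```

Large magnitudes mark pixels where brightness changes sharply, such as lane markings against asphalt.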

-

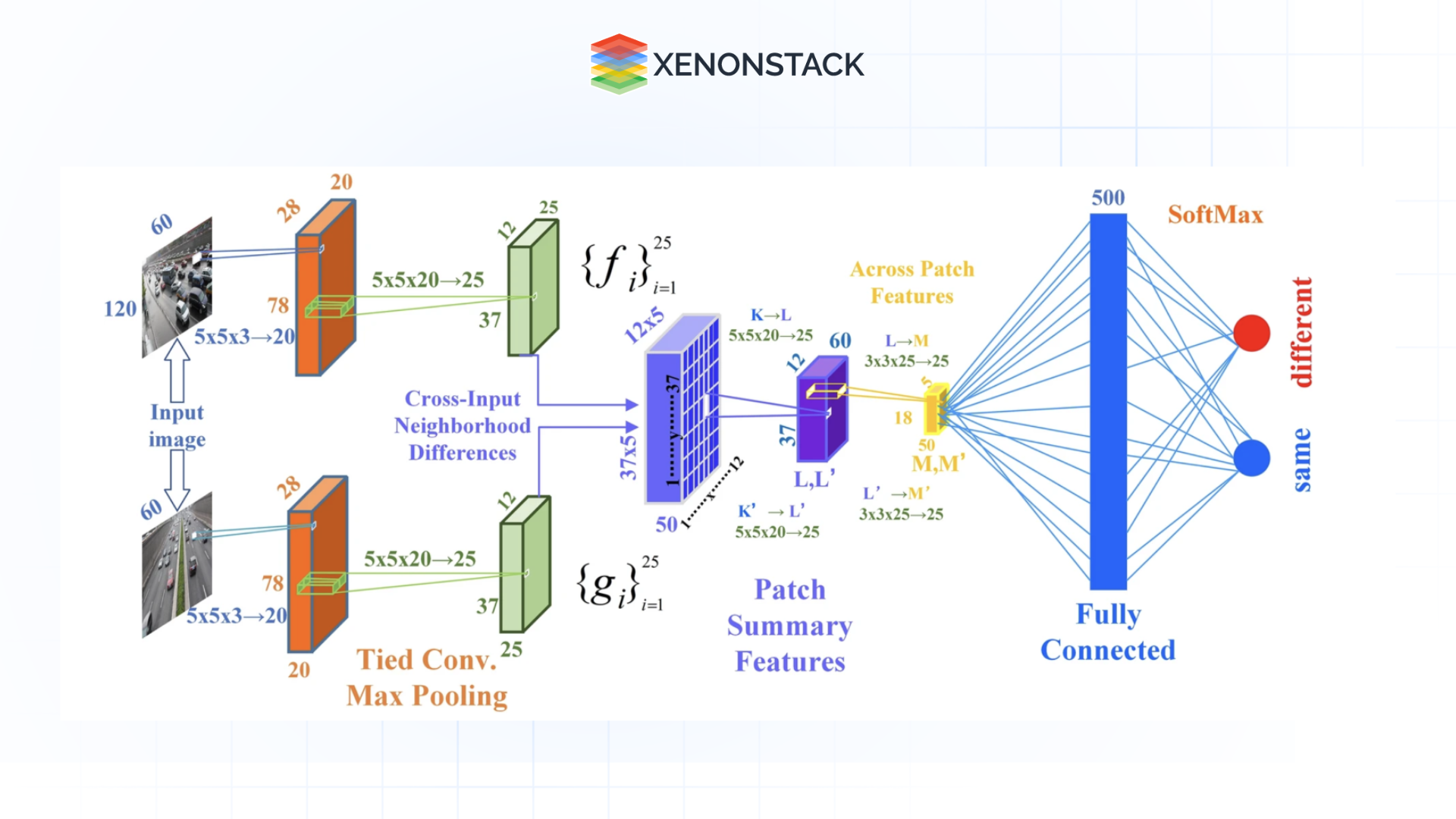

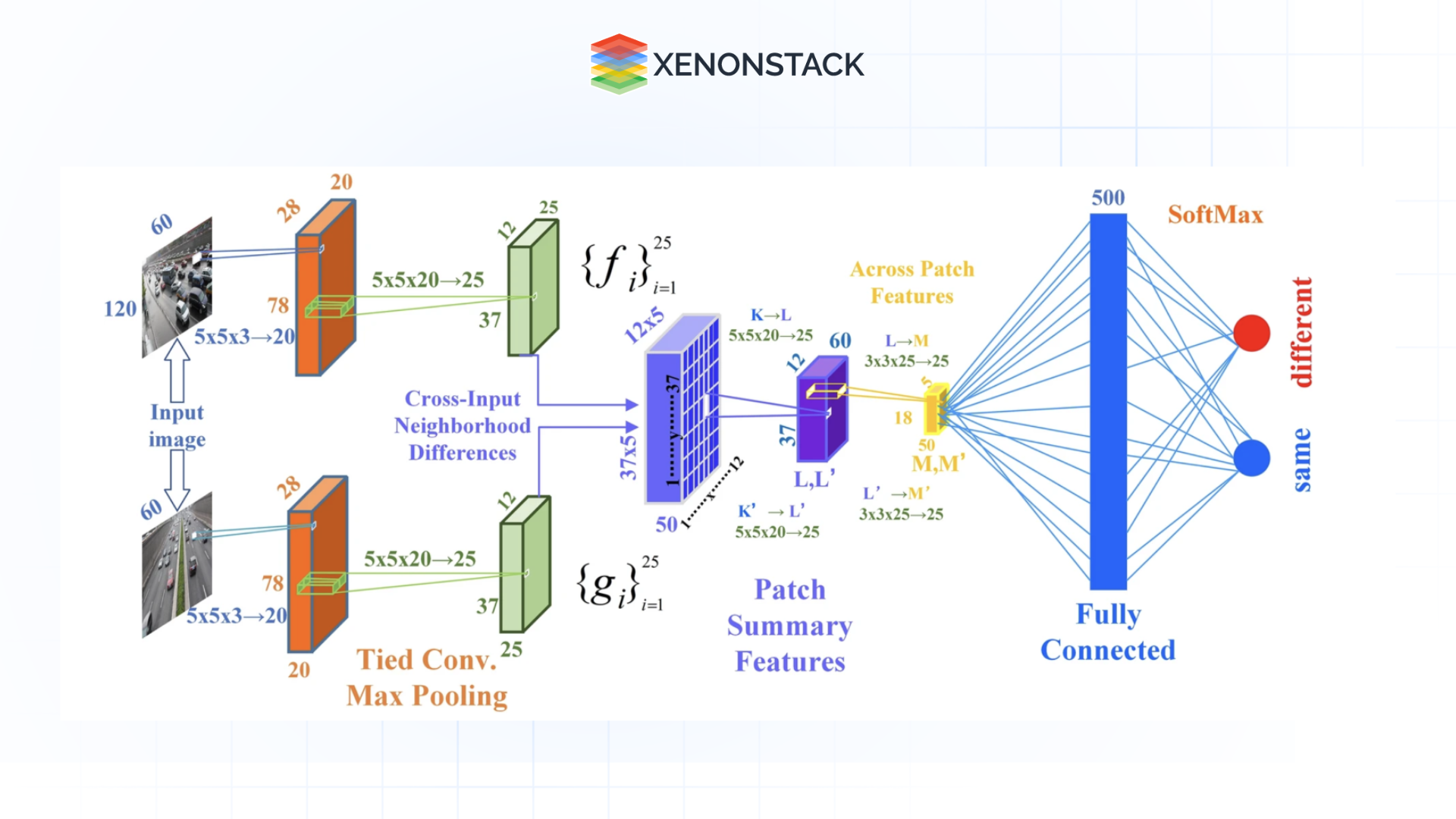

Machine Learning and Deep Learning

Machine learning algorithms, particularly deep learning models like CNNs, have revolutionized computer vision in automotive applications:

- Sensor Fusion

Combining camera images with laser (lidar) and radio (radar) data in the vehicle’s perception pipeline makes it more precise. Data fusion compensates for individual sensors' weaknesses and offers an improved representation of the vehicle's surrounding environment.
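A simple way to see why fusion helps is inverse-variance weighting, where each sensor's estimate of the same quantity is weighted by how certain it is. The sketch below is one textbook approach under the assumption of independent, unbiased measurements; the sensor values in the usage note are made up for illustration, and real perception stacks usually run a Kalman filter or a similar tracker over time.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) estimates of the same quantity.

    Returns (fused_value, fused_variance). Lower-variance sensors get more
    weight, and the fused variance is smaller than any single input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(variance <= 0 for _, variance in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight
```

For example, fusing a camera range of 20.0 m (variance 4.0) with a radar range of 22.0 m (variance 1.0) pulls the result towards the more certain radar reading.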

- Real-Time Processing and Edge Computing

Because driving is safety-critical, automotive applications need to process and communicate data in real time. On-board accelerators such as GPUs and TPUs enable edge computing, so that algorithms run directly on the vehicle with minimal latency.
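A quick back-of-the-envelope calculation shows why latency matters. The helper functions below are illustrative, and the speeds and frame rates used with them are example inputs rather than figures from any specific vehicle platform.

```python
def distance_during_latency_m(speed_kmh, latency_ms):
    """Distance in metres the vehicle covers while one result is being computed."""
    speed_m_per_s = speed_kmh / 3.6
    return speed_m_per_s * (latency_ms / 1000.0)


def frame_budget_ms(frames_per_second):
    """Time available to process each frame without falling behind the camera."""
    return 1000.0 / frames_per_second
```

At 108 km/h, a perception pipeline that takes 100 ms per frame has let the car travel about 3 metres before its output is available, and a 30 fps camera leaves roughly 33 ms per frame to keep up.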

Challenges in Implementing Computer Vision for Vehicle Safety

- Environmental Variability

Camera-based systems are affected by lighting changes, weather conditions, and cluttered backgrounds, all of which are hard for computer vision to handle. Models must therefore be trained on diverse data to sustain high performance across conditions.
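One inexpensive way to diversify training data is to synthesize lighting variations of existing images. The sketch below applies a random gain and bias to a grayscale image stored as rows of 0..255 values; the function names and the gain and bias ranges are assumptions chosen for illustration, and this kind of augmentation complements rather than replaces data collected in real rain, fog, and night conditions.

```python
import random


def adjust_brightness_contrast(pixels, gain, bias):
    """Apply out = gain * p + bias to each pixel, clipped to the 0..255 range."""
    return [
        [min(255, max(0, round(gain * p + bias))) for p in row]
        for row in pixels
    ]


def random_lighting_variants(pixels, count, seed=0):
    """Generate `count` variants with random contrast (gain) and brightness (bias)."""
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    variants = []
    for _ in range(count):
        gain = rng.uniform(0.6, 1.4)
        bias = rng.uniform(-40, 40)
        variants.append(adjust_brightness_contrast(pixels, gain, bias))
    return variants
```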

- Computational Constraints

Meeting high computational demands on vehicles with comparatively limited computing power and tight power budgets remains a challenge. Efficient algorithm design and efficient hardware implementation are crucial to hitting real-time processing targets without high power consumption.

- Data Privacy and Security

Gathering and analyzing visual information raises concerns about driver privacy and information security. Protecting user data and complying with privacy laws are crucial for building trust and securing the system.

- Regulatory and Standardization Issues

The automotive industry has stringent safety requirements and legal obligations that must always be met. Achieving and demonstrating compliance with these standards for computer vision systems requires extensive testing, validation, and certification programs.

- Adversarial Robustness

Robustness against adversarial attacks is a major issue: in such an attack, a small, deliberately crafted modification to the input data causes the model to produce an incorrect output. Improving the models' ability to resist such attacks is therefore important for system dependability and safety.
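To make the idea of a small, crafted modification concrete, the sketch below applies the fast gradient sign method (FGSM), one well-known attack, to a toy logistic-regression classifier. The two-feature model and its weights are hypothetical stand-ins for a real vision network; the point is only that nudging each input by at most `epsilon` in the direction that increases the loss can flip the prediction.

```python
import math


def predict(weights, bias, features):
    """Probability of the positive class under a logistic-regression model."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_perturb(weights, bias, features, label, epsilon):
    """Shift each feature by +/- epsilon in the direction that raises the loss.

    For cross-entropy loss the gradient with respect to the input is
    (prediction - label) * weights, so only its sign is needed here.
    """
    error = predict(weights, bias, features) - label
    gradient = [error * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in gradient]
    return [x + epsilon * s for x, s in zip(features, signs)]
```

Defences such as adversarial training reuse the same idea by adding perturbed examples like these to the training set.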

Future Directions and Innovations


- Integration with Autonomous Driving

As the industry moves towards greater autonomy, computer vision will become even more important for perception, decision-making, and control. Safe autonomous driving requires sophisticated models that can interpret complex driving environments and make correct decisions.

- Enhanced 3D Perception

Advances in depth estimation and point cloud processing in 3D computer vision will improve perception of the vehicle’s environment. This will make obstacle detection more accurate, navigation more efficient, and interaction with dynamic environments more effective.
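As a small example of depth estimation, a calibrated stereo camera pair recovers depth from the pixel offset (disparity) of the same point in the left and right images using Z = f * B / d. The sketch below assumes rectified images and uses a hypothetical focal length and baseline in the usage note; learned monocular depth and lidar point clouds are other routes to the same information.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by a rectified stereo pair.

    focal_length_px: focal length in pixels; baseline_m: distance between
    the two cameras in metres; disparity_px: horizontal pixel offset of the
    point between the left and right images.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px
```

With a 700-pixel focal length and a 0.5 m baseline, a disparity of 35 pixels corresponds to a depth of 10 m, and halving the disparity doubles the estimated depth, which is why distant obstacles are harder to range precisely.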

- AI-Powered Predictive Maintenance

Computer vision can move beyond safety and monitoring to predicting vehicle health from visual cues captured by the system. Recognising signs of wear and tear and impending failures allows maintenance to be scheduled in time to prevent breakdowns.

- Personalized Driver Assistance

Future systems might provide tailored assistance by learning the behaviour and preferences of a specific driver. Customizing safety features and alerts to the individual driver can make the driving experience more intuitive.

- Collaborative Vehicle Networks

Connected vehicles equipped with computer vision can exchange information about road conditions, traffic flow, and potential hazards. This collective intelligence makes it easier to manage traffic flow, reduce congestion, and make roads safer.

Conclusion

Computer vision is changing how cars are driven and controlled by enabling sophisticated vehicle safety and monitoring solutions. Self-driving technology builds on sophisticated algorithms, real-time processing, and sensor fusion, which together let a vehicle accurately perceive the environment in which it operates. Challenges that remain include environmental variability, computational limitations, and security concerns.

Ongoing research and new developments nevertheless continue to redefine what is feasible. As computer vision systems are integrated more widely into vehicles, they will become increasingly important in making roads safer and driving more secure and efficient.
