In the rapidly evolving field of artificial intelligence, one of the most exciting developments is the multi-modal agent: an AI agent that processes and integrates different kinds of data, such as text, images, audio, and video, to support more sophisticated and responsive interactions.

Unlike traditional AI systems, which typically operate on a single modality, multi-modal AI combines several forms of data to build richer understanding and interaction, bringing it closer to human perception and response. Integrating AI TRiSM (Trust, Risk, and Security Management) helps keep these systems ethically sound, transparent, and aligned with enterprise requirements.

How Databricks Powers Multi-Modal Agents

Multi-modal technology marks a major advance for AI systems. Systems built on compositional AI frameworks can adapt dynamically to complex data structures. By combining several input types, they process, analyze, and produce outputs across multiple modalities, which makes their decision-making more robust.

For example, a multi-modal AI system might combine three input types: text for communication, images for object identification, and voice commands recognized by artificial neural networks for audio processing. These capabilities add value in areas such as Artificial Intelligence in Requirement Management, where AI-driven insights refine business needs and streamline decision-making.

Comprehensive Definition of Multi-Modal Agents

Multi-modal agents are AI systems that handle multiple types of data at once. They analyze, process, and produce diverse outputs, which lets them interact with users and their environments more effectively. This ability is crucial for tasks that require combining several data inputs to make strategic decisions or execute complex workflows. Following Artificial Intelligence Best Practices helps keep these models reliable, scalable, and efficient across industries.

Breaking Down the Concept of Intelligent Multi-Data Processing

Intelligent multi-data processing involves using AI to analyze and integrate different types of data to gain deeper insights or perform complex tasks. This process involves several key steps:

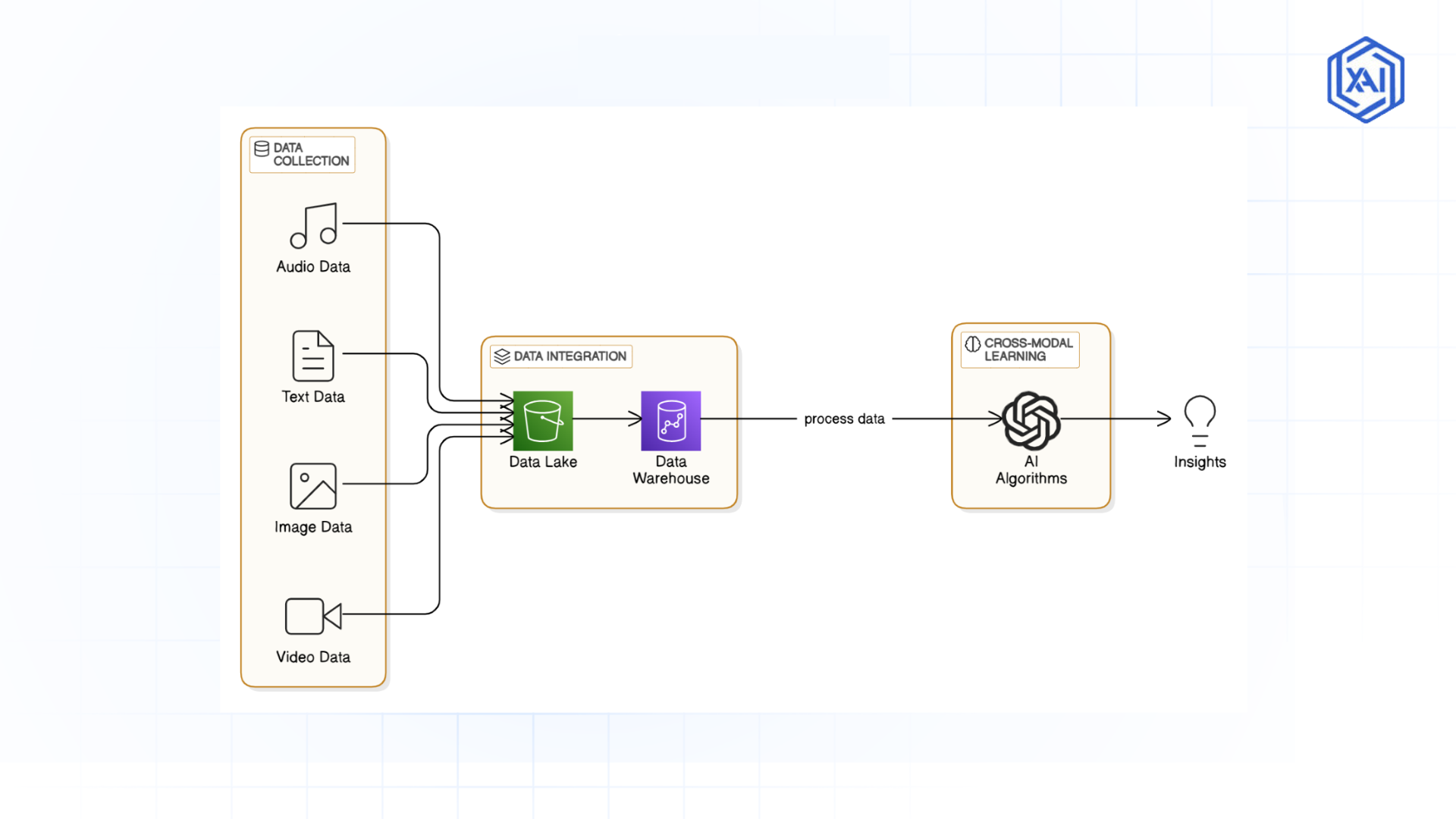

Fig 1: Cross-modal AI-driven Data Insights

- Data Collection: AI gathers information from multiple modalities, including text documents, images, audio recordings, and video streams. Cloud services such as Google AI Services and AWS AI services can support this phase with data ingestion and preprocessing.

- Data Integration: AI consolidates diverse data sources into a unified analytical structure. Platforms like Databricks, along with Azure AI services, support efficient data management through data lakes and warehouses.

- Cross-Modal Learning: AI models use artificial neural networks to learn relationships between different data types, which improves overall decision-making. AI Ethics considerations are crucial at this stage to keep models operating transparently and fairly.
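The collection and integration steps can be sketched in plain Python. This is a minimal illustration under stated assumptions: the `Record` and `UnifiedAsset` types, the shared `asset_id` join key, and the sample payloads are all hypothetical, and no Google, AWS, Azure, or Databricks API is involved. The sketch stops short of the learning itself; it only selects assets that have every modality a cross-modal model would need.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Iterator, List


@dataclass
class Record:
    """One raw input from a single modality (hypothetical schema)."""
    asset_id: str  # shared key used to join modalities
    modality: str  # e.g. "text", "image", "audio"
    payload: str   # raw content or a storage path (illustrative only)


@dataclass
class UnifiedAsset:
    """All modalities known for one asset, keyed by modality name."""
    asset_id: str
    modalities: Dict[str, str] = field(default_factory=dict)


def collect(*sources: Iterable[Record]) -> Iterator[Record]:
    """Data Collection: merge records from every source into one stream."""
    for source in sources:
        yield from source


def integrate(records: Iterable[Record]) -> Dict[str, UnifiedAsset]:
    """Data Integration: group records by asset_id into one view per asset."""
    unified: Dict[str, UnifiedAsset] = {}
    for rec in records:
        asset = unified.setdefault(rec.asset_id, UnifiedAsset(rec.asset_id))
        asset.modalities[rec.modality] = rec.payload
    return unified


def aligned_for_training(unified: Dict[str, UnifiedAsset],
                         required=("text", "image")) -> List[UnifiedAsset]:
    """Keep assets that have every modality a cross-modal model would consume."""
    return [a for a in unified.values()
            if all(m in a.modalities for m in required)]


texts = [Record("a1", "text", "red running shoe"), Record("a2", "text", "blue mug")]
images = [Record("a1", "image", "images/a1.png")]
unified = integrate(collect(texts, images))
print([a.asset_id for a in aligned_for_training(unified)])  # ['a1']
```

Asset `a2` is dropped because it has text but no image, so it cannot serve as an aligned text-image training pair.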

Multi-Modal AI vs. Traditional AI Comparison

| Category | Aspect | Multi-Modal AI | Traditional AI |
|---|---|---|---|
| Performance Benchmarks | Data Processing Capability | Integrates and analyzes multiple data types (text, images, audio, video) simultaneously for deeper insights. | Limited to single-modality data, reducing its ability to generate comprehensive insights. |
| Performance Benchmarks | Task Complexity Handling | Excels at handling complex, multi-faceted tasks requiring diverse data sources. | Best suited for simpler, structured data-driven tasks. |
| Performance Benchmarks | Decision-Making Accuracy | Generates more informed and context-aware decisions by leveraging multi-source data fusion. | Relies on a single data stream, leading to potential gaps in decision-making. |
| Competitive Advantages | User Experience | Enhances interaction by allowing more natural, human-like engagement using various data inputs (voice, text, visuals). | Restricted interaction due to dependence on a single type of input. |
| Competitive Advantages | Adaptability | Highly adaptable to diverse use cases across industries due to its cross-modal capabilities. | Limited flexibility as it requires structured, domain-specific data. |
| Competitive Advantages | Real-World Application Suitability | Ideal for dynamic, data-rich environments such as healthcare, robotics, and finance. | Best suited for static, rule-based applications with well-defined data inputs. |

How Databricks Multi-Modal AI Enhances Data Interaction

Multi-modal intelligence changes how people interact with data by letting AI systems engage with users in more natural and intuitive ways. For example, a multi-modal AI system can:

Understand Voice Commands

The system processes audio input to carry out tasks or retrieve information, making the interaction more conversational.

Recognize Visual Cues

The system uses images or video to identify objects and understand visual context, improving its ability to analyze and respond to visual data.

Generate Text Responses

The system analyzes text data and produces written responses or summaries that communicate clearly with users.

Supporting multiple interaction methods improves the AI user experience and opens new opportunities for AI across business sectors.
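One simple way to organize these capabilities is to route each input to a handler for its modality. The sketch below assumes a dispatch-table design; the handler names are hypothetical, and each returns a placeholder string where a real system would call a speech, vision, or language model.

```python
from typing import Callable, Dict


# Hypothetical handlers. A real system would call a speech-to-text,
# vision, or language model here; these return placeholder strings.
def handle_audio(payload: bytes) -> str:
    return f"transcribed {len(payload)} bytes of audio"


def handle_image(payload: bytes) -> str:
    return f"analyzed image of {len(payload)} bytes"


def handle_text(payload: str) -> str:
    return f"summary: {payload[:40]}"


# Dispatch table mapping each modality name to its handler.
HANDLERS: Dict[str, Callable] = {
    "audio": handle_audio,
    "image": handle_image,
    "text": handle_text,
}


def route(modality: str, payload):
    """Send an input to the handler registered for its modality."""
    try:
        handler = HANDLERS[modality]
    except KeyError:
        raise ValueError(f"unsupported modality: {modality!r}") from None
    return handler(payload)


print(route("text", "Quarterly revenue grew in all regions."))
print(route("audio", b"\x00" * 16000))
```

A dispatch table keeps each modality's logic isolated, so adding video support would mean registering one more handler rather than changing the routing code.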

Mechanisms of Integrating Diverse Data Types

Integrating diverse data types involves several mechanisms:

- Unified Data Platforms: Tools like Databricks' Unity Catalog help manage and govern data across different modalities, ensuring consistency and accessibility. These platforms are crucial for organizing and integrating diverse data sources.

- Cross-Modal Models: AI models designed to process and correlate data from multiple sources, such as text and images. These models let AI systems understand inputs and generate outputs across different modalities.

- Advanced Neural Networks: Architectures like transformers and convolutional neural networks (CNNs) are crucial for handling complex multi-modal data. They provide the modeling capacity needed to process and integrate diverse data types effectively.
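A common transformer mechanism for correlating modalities is cross-attention, where queries from one modality (for example, text tokens) attend over keys and values from another (for example, image patches) via softmax(QKᵀ/√d)V. The sketch below is a dependency-free illustration with hand-picked toy vectors; real models learn the query, key, and value projections, and this is not a description of how any Databricks model is implemented.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys: List[Vector],
                    values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V.

    queries come from one modality (e.g. text tokens); keys and values come
    from another (e.g. image patches). Returns one output vector per query.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Toy example: one text-token query attending over two image-patch vectors.
text_tokens = [[1.0, 0.0]]
patch_keys = [[1.0, 0.0], [0.0, 1.0]]
patch_values = [[10.0, 0.0], [0.0, 10.0]]
print(cross_attention(text_tokens, patch_keys, patch_values))
```

The output leans toward the first patch's value vector because the query is aligned with that patch's key; the attention weights always sum to one, so each output is a blend of the patch values.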

Breakthrough Approaches in Cross-Modal Learning

Cross-modal learning enables AI models to map information from one modality to another and to interpret each modality in the context of the others. Newer approaches include:

- Transfer Learning: Starting cross-modal tasks from pre-trained models rather than from scratch. This saves time and effort by reusing what a model learned on earlier tasks.

- Multimodal Fusion: Combining features from multiple modalities to improve model performance. Fusion lets a model draw on the strengths of each modality for better overall understanding and decision-making.
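Two widely used fusion strategies are early (feature-level) fusion and late (decision-level) fusion. The sketch below shows both with made-up feature vectors, scores, and weights; in practice the features would come from trained encoders and the fusion weights would be tuned or learned.

```python
import math
from typing import Dict, List


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def early_fusion(text_features: List[float], image_features: List[float]) -> List[float]:
    """Feature-level fusion: normalize each modality, then concatenate."""
    return l2_normalize(text_features) + l2_normalize(image_features)


def late_fusion(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Decision-level fusion: weighted average of per-modality model scores."""
    total_weight = sum(weights[m] for m in scores)
    return sum(weights[m] * s for m, s in scores.items()) / total_weight


fused_features = early_fusion([3.0, 4.0], [1.0, 0.0])  # -> [0.6, 0.8, 1.0, 0.0]
fused_score = late_fusion({"text": 0.9, "image": 0.6}, {"text": 2.0, "image": 1.0})
print(fused_features, round(fused_score, 2))
```

Early fusion lets a downstream model learn interactions between modalities, while late fusion keeps per-modality models independent and is easier to run when one modality is missing.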

Why Businesses Need Databricks Multimodal Agents

Databricks offers a comprehensive platform for developing and deploying multi-modal AI applications. Key features include:

- Mosaic AI: Covers the AI lifecycle, from data preparation to model deployment and monitoring, which simplifies building and deploying multi-modal AI solutions.

- Unity Catalog: Provides governance, discovery, and versioning for data and models. This helps organizations manage complex data ecosystems and maintain data quality.

- Mosaic AI Model Serving: Enables the deployment of large language models (LLMs) and other AI applications, allowing organizations to scale their AI operations efficiently.

Unique Databricks Methodologies in Multi-Modal Agent Development

Databricks employs several unique methodologies:

- Foundation Model Fine-tuning: Allows customization of pre-trained models for specific use cases, so organizations can adapt powerful AI models to their own needs.

- Mosaic AI Agent Framework: Supports the development of retrieval-augmented generation (RAG) applications, which retrieve relevant information at query time and generate responses grounded in it.
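The retrieve-then-generate pattern behind RAG can be sketched without any framework. This is a toy illustration, not the Mosaic AI Agent Framework API: the documents are hypothetical, retrieval uses simple word overlap as a stand-in for embedding-based search, and the final LLM call is left out, so the sketch ends at the grounded prompt that would be sent to a served model.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical knowledge base.
DOCS: Dict[str, str] = {
    "returns": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "Electronics carry a one-year limited warranty.",
}


def tokenize(text: str) -> List[str]:
    """Lowercase and split into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, docs: Dict[str, str], k: int = 1) -> List[str]:
    """Rank documents by word overlap with the query (toy stand-in for vector search)."""
    q = Counter(tokenize(query))
    scored = [(sum((q & Counter(tokenize(text))).values()), doc_id)
              for doc_id, text in docs.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query: str, doc_ids: List[str], docs: Dict[str, str]) -> str:
    """Ground the question in the retrieved passages."""
    context = "\n".join(f"- {docs[d]}" for d in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


question = "How many days does shipping take?"
prompt = build_prompt(question, retrieve(question, DOCS), DOCS)
print(prompt)  # this prompt would then be passed to an LLM
```

Keeping retrieval and prompt construction separate makes it straightforward to swap the toy scorer for a real vector index later.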

Cutting-Edge Architectural Approaches

Databricks incorporates cutting-edge architectural approaches, including:

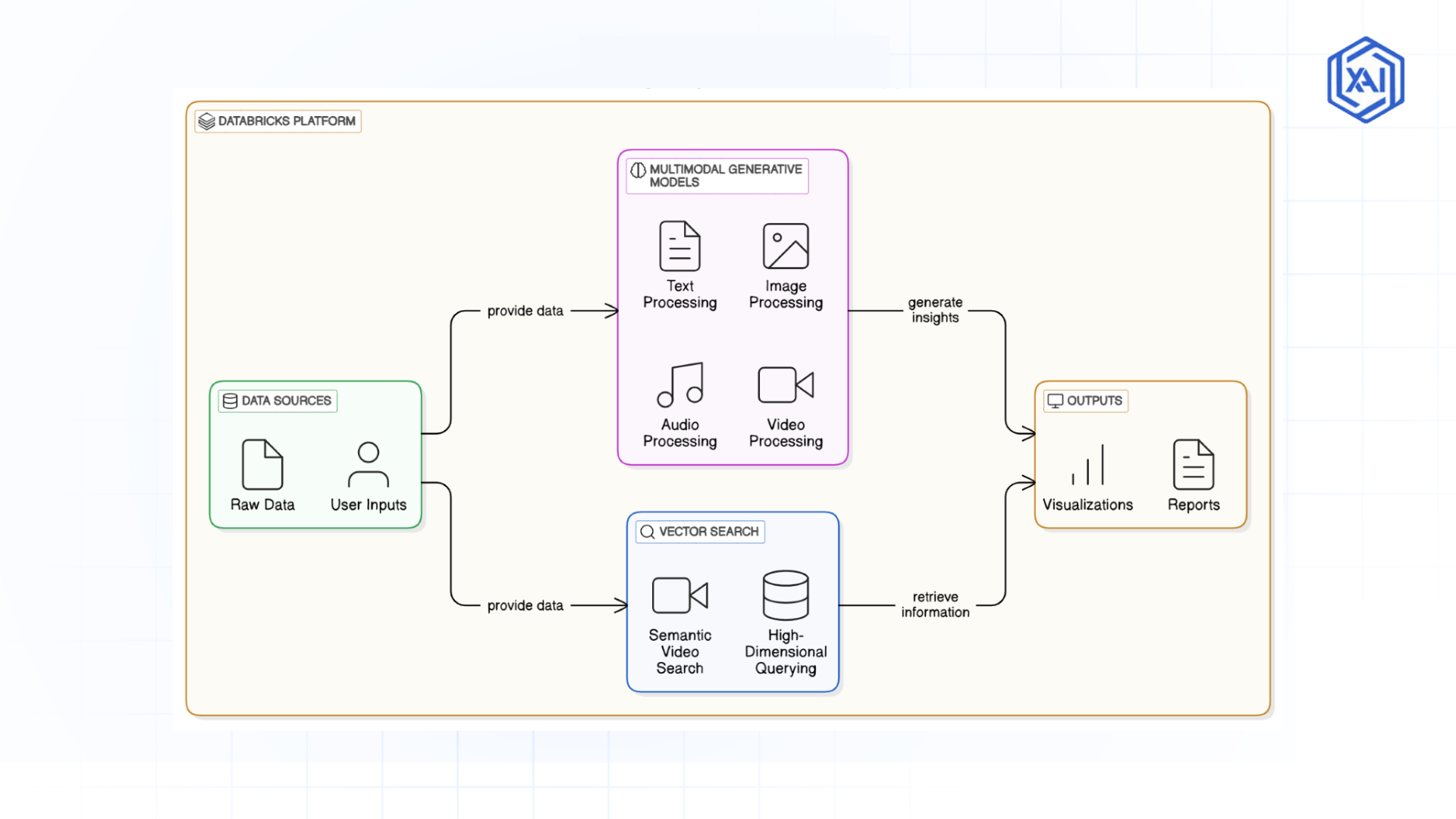

Fig 2: Databricks AI Architecture

- Multimodal Generative Models: Capable of processing and generating outputs across text, images, audio, and video. These models are at the forefront of AI innovation, enabling more sophisticated interactions.

- Vector Search: Enables efficient querying of high-dimensional vector representations for advanced applications like semantic video search. This technology allows for fast and accurate retrieval of relevant information from large datasets.
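At its core, vector search ranks stored embeddings by similarity to a query embedding. The sketch below uses brute-force cosine similarity over a tiny hypothetical index of video-clip embeddings; production vector search services typically use approximate nearest-neighbor indexes over model-generated embeddings with hundreds of dimensions, and nothing here reflects the Databricks Vector Search API.

```python
import heapq
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query_vec: List[float], index: Dict[str, List[float]],
          k: int = 2) -> List[Tuple[str, List[float]]]:
    """Exact (brute-force) nearest-neighbor search by cosine similarity."""
    return heapq.nlargest(k, index.items(),
                          key=lambda item: cosine(query_vec, item[1]))


# Hypothetical 3-d embeddings for video clips.
clip_index = {
    "clip_goal": [0.9, 0.1, 0.0],
    "clip_interview": [0.1, 0.9, 0.2],
    "clip_crowd": [0.7, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]  # e.g. the embedding of the text "a goal being scored"
for clip_id, vec in top_k(query, clip_index):
    print(clip_id, round(cosine(query, vec), 3))
```

For semantic video search, the query would be a text embedding and the index would hold embeddings of video frames or segments produced by a model trained to place both in the same space.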

Technologies Behind Databricks Multi-Modal Agents

Key technologies include:

- Advanced Neural Networks: Architectures such as transformers and CNNs, which underpin the processing and integration of complex multi-modal data.

Real-World Impact of Databricks Multi-Modal Agents

Multi-modal AI has numerous practical applications:

Impact of Databricks Multimodal Agents

The tangible impacts include:

- Enhanced User Experience: More intuitive and natural interactions with AI systems. Users can engage with AI in ways that feel more human-like, improving satisfaction and adoption.

- Increased Efficiency: Integrating multiple data types makes it possible to automate sophisticated operations, reducing manual effort and increasing output across many business sectors.

- Innovative Solutions: The technology makes possible new applications and services that were previously out of reach. By combining multiple AI capabilities, multi-modal systems open new opportunities for innovation and business growth.

Overcoming Challenges in Databricks Multi-Modal AI Adoption

Developing multi-modal agents involves several challenges:

- Technical Complexity: Integrating multiple data types and modeling systems requires sophisticated frameworks. This complexity is demanding, but it also creates opportunities for innovation.

- Ethical Considerations: Ensuring fairness and privacy in multi-modal data processing. As AI systems process more sensitive information, these concerns become more pressing.

Strategic measures for overcoming them include:

- Collaborative Development: Working across disciplines to integrate diverse expertise. Collaboration ensures that all aspects of multi-modal AI development are considered, from technical to ethical.

- Continuous Testing: Ensuring models are robust and fair through rigorous testing. This step is critical for maintaining trust in AI systems and ensuring they perform as intended.
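Fairness testing can be automated as part of continuous testing. The sketch below checks one simple criterion, the gap in accuracy between the best- and worst-performing groups; the 0.1 threshold and the sample data are hypothetical, and accuracy gap is only one of several fairness metrics a team might choose.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple


def accuracy_by_group(labels: List[int], predictions: List[int],
                      groups: List[Hashable]) -> Dict[Hashable, float]:
    """Accuracy computed separately for each group."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for y, y_hat, g in zip(labels, predictions, groups):
        total[g] += 1
        correct[g] += int(y == y_hat)
    return {g: correct[g] / total[g] for g in total}


def check_accuracy_gap(labels: List[int], predictions: List[int],
                       groups: List[Hashable],
                       max_gap: float = 0.1) -> Tuple[bool, Dict[Hashable, float]]:
    """Pass only if best and worst group accuracy differ by at most max_gap."""
    acc = accuracy_by_group(labels, predictions, groups)
    gap = max(acc.values()) - min(acc.values())
    return gap <= max_gap, acc


labels = [1, 0, 1, 1, 0, 1]
predictions = [1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "b", "b", "b"]
passed, per_group = check_accuracy_gap(labels, predictions, groups)
print(passed, per_group)
```

Here the model is perfect on group `a` but right only once in three tries on group `b`, so the check fails; wiring such a check into a test suite flags the regression before deployment.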


Databricks AI Roadmap for Multimodal Agents

- Advancements in Cross-Modal Learning

- Neural Network Architecture Innovations

- Improved Data Integration

- Real-Time AI Applications

- Enhanced Human-AI Interaction

- Smarter AI Systems

- Scalability and Adaptability Across Industries

- Next-Generation AI Solutions

Best Practices for Databricks Multimodal Agents

Best practices include:

- Comprehensive Implementation Guide: Document the development process so that every stakeholder understands it; successful execution depends on the whole team sharing that understanding.

- Performance Optimization Strategies: Regularly evaluating and refining model performance. Continuous improvement keeps AI systems effective and efficient over time.

- Cross-Functional Collaboration: Successful implementation requires collaboration across teams (data scientists, engineers, business leaders) to align on objectives, technical requirements, and outcomes. This ensures seamless integration of AI solutions with existing business processes.

- Ethical and Bias Considerations: Continuously evaluate AI models for bias and fairness, ensuring that all data used for training is representative and ethically sound. This helps maintain trust and aligns AI development with industry standards and regulations.

Databricks Multimodal Agents for Smarter Solutions

The transformative impact includes:

- Key Insights: Multi-modal AI enables more nuanced and context-aware applications, allowing AI systems to understand and respond to complex situations more effectively.

- Future Implications: Multi-modal AI has the potential to disrupt industries by delivering more advanced solutions. As it matures, we can expect significant changes in how technology interacts with people and the environment.

Databricks' vision for intelligent systems centers on the convergence of AI across modalities to build more powerful and capable applications. This vision aims to change how we engage with technology and use data to drive innovation.


Next Steps in Implementing Databricks Multimodal Agents

Talk to our experts about implementing Databricks Multi-Modal AI systems. Learn how industries and different departments use agentic workflows and decision intelligence to become data-driven. Utilize AI to automate and optimize IT support and operations, improving efficiency and responsiveness.