The Role of Snowflake in Scaling Multimodal AI Applications

In an era overflowing with diverse data formats, we encounter information through text, images, videos, and audio every day. Each data modality offers a unique perspective, painting a partial picture of reality when analyzed in isolation. This is where Multimodal AI Models step in, offering a transformative approach to data analysis.

Imagine AI models that can understand not just the words in a customer review, but also the sentiment conveyed through their voice and facial expressions in an accompanying video. That’s the power of multimodal AI – the ability to synthesize insights from diverse data types for a more holistic and accurate understanding.

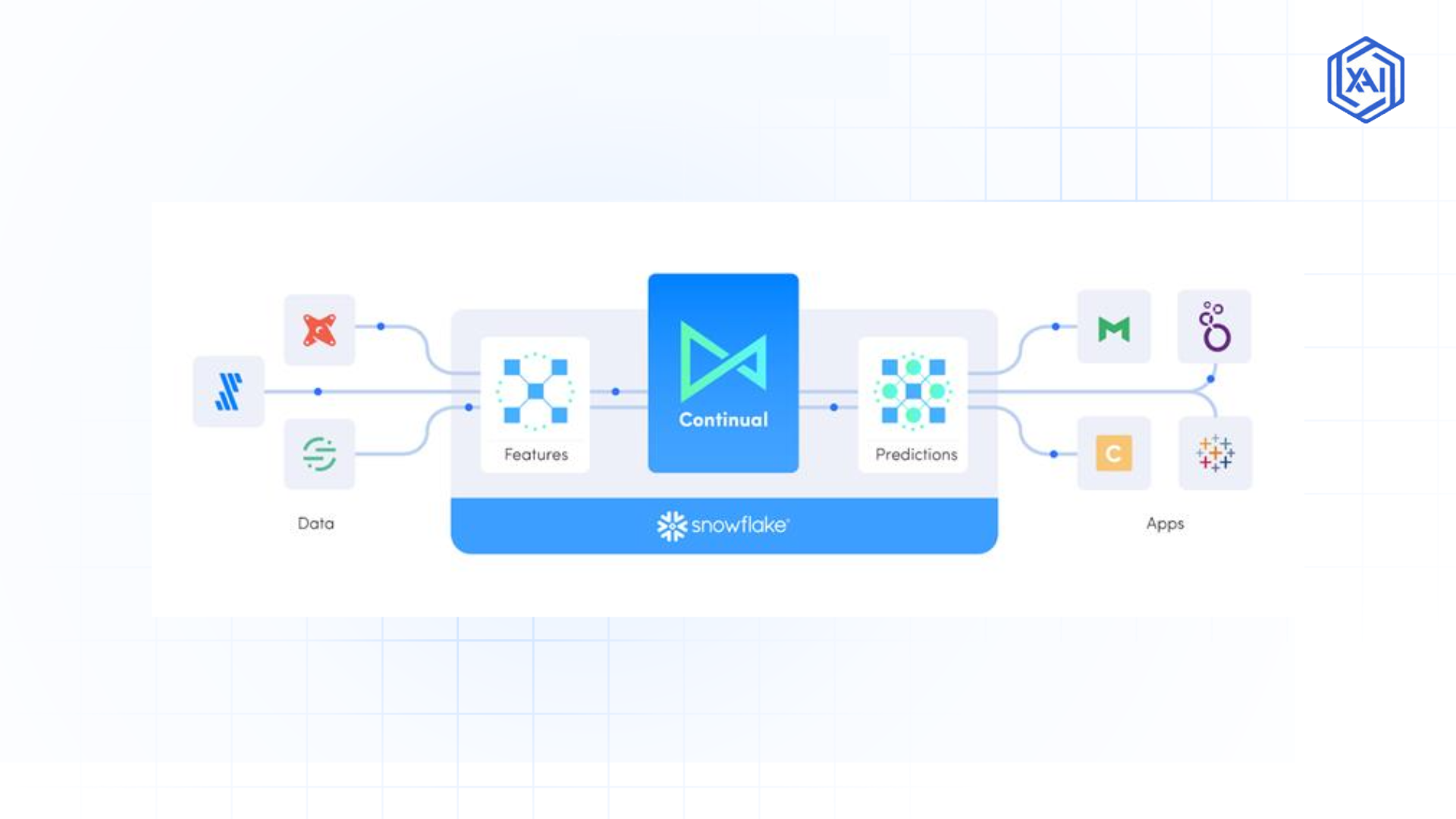

Fig 1: Seamless Multimodal AI Integration with Snowflake

Why is Multimodal AI important?

The real world is multimodal. Customers interact with a company through many channels and share feedback as text, images, and audio. Business operations, in turn, generate data from sensors, images, and transactional systems.

Because multimodal AI models integrate these sources, they are essential for understanding customer behavior, market trends, and operational efficiency. Their key advantage is that they go beyond stand-alone, single-modality models to surface richer and more complex insights.

Snowflake has significantly advanced data management and analytics. It positions itself not just as a cloud data warehouse but as a complete Data Cloud, able to handle a wide variety of data with elastic scalability. Its architecture democratizes data access, which makes it a strong foundation for building advanced AI applications, including multimodal AI models.

Snowflake's AI features build on its elastic compute resources and extensive data-handling capabilities. The platform's tooling and scalability let data scientists and engineers collaborate on, and deploy, complex AI solutions. This makes Snowflake a foundational technology for building and deploying multimodal AI systems for contemporary applications.

Key Benefits of Multimodal AI Models

Multimodal AI systems are compelling because they come closer to human perception and understanding, which draws on several senses at once. The approach offers the following main benefits over traditional single-modality models.

-

Enhanced Accuracy and Robustness: By assessing a problem from multiple viewpoints, multimodal AI models can achieve better accuracy and greater robustness. Consider a fraud detection system. Analyzing transaction history alone can miss important signals. Adding scanned check images, text records from support conversations, and phone call audio gives the model a much broader view, helping it reduce false positives while detecting more real fraud.

-

Richer, More Contextual Insights: A single-modality approach is limited by the narrow slice of data it sees. Multimodal AI breaks these boundaries. Customer sentiment, for example, cannot be fully captured by text alone, because emotions go beyond words. Combining text reviews with audio and video adds depth by revealing facial expressions, vocal intensity, and the setting in which a recording was made.

-

Improved Generalization and Adaptability: Models trained on multiple data formats tend to generalize better to previously unseen data. Because they are less likely to overfit to patterns in any single modality, they adapt better to changing real-world conditions. This matters most for systems that operate in evolving environments where data patterns shift over time.

-

More Human-like AI Interactions: Multimodal AI enables more natural, intuitive interaction with machines. Virtual assistants, for example, become smarter when they can recognize spoken commands together with facial expressions and hand gestures, allowing more personalized and empathetic interactions.

Challenges in Multimodal AI Development

Data Complexity and Heterogeneity

Multimodal data is inherently complex because it is heterogeneous. Each modality has its own structure, formats, and noise characteristics. Using such diverse data well requires sophisticated data engineering combined with careful preprocessing.

Data Integration Hurdles

Combining data from multiple sources is a major hurdle because those sources usually live in separate systems. Keeping data consistent, reconciling different sampling frequencies, and synchronizing timestamps across modalities all require careful planning and execution.
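
A common way to synchronize timestamps across modalities is an "as-of" alignment: each record in one stream is matched to the most recent record at or before its timestamp in another stream, within a tolerance. The following is a minimal Python sketch of that idea, assuming both streams are already in memory; the `Event` type, the `asof_align` function, and the sample data are hypothetical illustrations, not a Snowflake API.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Event:
    ts: float    # seconds since the start of the session (hypothetical unit)
    value: str   # payload, e.g. a transcript word or a video frame id


def asof_align(left: List[Event], right: List[Event],
               tolerance: float) -> List[Tuple[Event, Optional[Event]]]:
    """Pair each left event with the latest right event whose ts <= left.ts,
    if it is at most `tolerance` seconds older; otherwise pair it with None."""
    right_sorted = sorted(right, key=lambda e: e.ts)
    right_ts = [e.ts for e in right_sorted]
    aligned = []
    for ev in sorted(left, key=lambda e: e.ts):
        i = bisect_right(right_ts, ev.ts) - 1  # last index with right_ts[i] <= ev.ts
        match = None
        if i >= 0 and ev.ts - right_ts[i] <= tolerance:
            match = right_sorted[i]
        aligned.append((ev, match))
    return aligned


if __name__ == "__main__":
    frames = [Event(0.0, "frame-0"), Event(1.0, "frame-1"), Event(5.0, "frame-5")]
    words = [Event(0.4, "hello"), Event(0.9, "there"), Event(2.5, "thanks")]
    for frame, word in asof_align(frames, words, tolerance=1.0):
        print(frame.value, "->", word.value if word else None)
```

Here the frame at 5.0 s gets no transcript match because the nearest earlier word is 2.5 s old, beyond the 1-second tolerance. In Snowflake the same logic would be expressed in SQL or Snowpark over timestamped tables; the tolerance is a design choice that depends on each modality's sampling rate.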

Feature Engineering Complexity

Extracting useful features from multiple data types is substantially harder than from a single type. Feature engineering must capture both the individual features of each modality and the ways the modalities relate to one another, which often calls for new approaches to feature extraction.

Computational Demands

Training multimodal AI models is computationally intensive because it involves large datasets and complex architectures. These systems need elastic compute capacity and efficient training strategies to work well.

Model Interpretability and Explainability

As models grow more complex, keeping their decision-making transparent gets harder, and in regulated domains such as healthcare diagnostics it is often mandatory. Explaining how a multimodal system reaches its output is harder than for simpler models, so dedicated interpretability methods are needed to analyze its decisions.

Leveraging Snowflake's Data Cloud for Multimodal AI Development

Snowflake's Data Cloud addresses many of the challenges of multimodal AI development. The following features make it a compelling platform for working with diverse data types:

-

Semi-structured Data Handling: Snowflake excels at handling semi-structured data formats like JSON, XML, and Avro natively. This is crucial for multimodal AI as image metadata, audio transcripts, and video descriptions often come in these formats. You can directly load and query this data without complex transformations, streamlining the data ingestion process.

-

Scalable Storage and Compute: Multimodal datasets can be massive, encompassing high-resolution images, lengthy videos, and extensive text corpora. Snowflake's architecture separates storage from compute and runs queries on massively parallel processing (MPP) virtual warehouses, so each can scale independently. This helps you handle growing data volumes and compute-intensive work such as large-scale feature extraction without redesigning your infrastructure.

-

Data Sharing and Collaboration: The Data Cloud facilitates seamless data sharing both within and outside your organization. This is invaluable for multimodal AI projects, which often involve collaborating with external partners, accessing publicly available datasets (e.g., image or text corpora), or using data from the Snowflake Data Marketplace.

-

Secure Data Governance: Data governance and security are paramount, especially when dealing with sensitive multimodal data. Snowflake's robust security features, including role-based access control, data masking, and encryption, ensure that your multimodal AI projects are built on a foundation of trust and compliance.

-

Data Marketplace Access: The Snowflake Data Marketplace offers access to a vast array of external datasets, including demographic data, weather information, and financial data, which can be incredibly valuable for enriching your multimodal AI models and improving their performance.

How Snowflake's Data Cloud Supports Multimodal AI Development

Step-by-Step Guide to Creating Multimodal AI Models with Snowflake

Here is a step-by-step guide to building multimodal AI models with Snowflake. Note that while Snowflake excels at data handling and compute, the core model training is often done in specialized ML frameworks outside Snowflake, with Snowflake handling data preparation and feature engineering.

Data Ingestion and Preparation

-

Identify Data Sources: Determine the various data modalities relevant to your AI problem (text, images, audio, video, sensor data, etc.) and locate their sources (databases, cloud storage, APIs, etc.).

-

Use Snowflake's Ingestion Tools: Use Snowpipe for continuous ingestion, COPY INTO for bulk loading, and connectors such as the Snowflake Connector for Kafka to efficiently bring data from diverse sources into Snowflake.

-

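Data Staging and Landing: Land the raw data in Snowflake, ideally with a separate staging area for each modality. Large binary files such as images, audio, and video are typically kept as files in internal or external stages (which directory tables can catalog), while their metadata and derived features are loaded into tables.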

-

Data Cleaning and Transformation: Use Snowflake's SQL capabilities and Snowpark (for Python/Scala transformations) to clean and standardize the data within each modality. Handle missing values, inconsistencies, and noise.

-

Data Validation: Implement data quality checks and validation rules in Snowflake to ensure data integrity before proceeding to feature engineering.
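
Data quality checks can be expressed as per-modality rules. In Snowflake these would typically be SQL predicates or Snowpark code run against each staging table; the sketch below shows the same logic in plain Python. The rule names, field names, and allowed values (`RULES`, `validate`, the sample rates and formats) are illustrative assumptions.

```python
from typing import Any, Callable, Dict, List

# Hypothetical per-modality rules: rule name -> predicate that must hold.
RULES: Dict[str, Dict[str, Callable[[Dict[str, Any]], bool]]] = {
    "text": {
        "non_empty_body": lambda r: bool(str(r.get("body", "")).strip()),
    },
    "image": {
        "positive_dimensions": lambda r: (r.get("width") or 0) > 0
        and (r.get("height") or 0) > 0,
        "allowed_format": lambda r: r.get("format") in {"jpeg", "png"},
    },
    "audio": {
        "positive_duration": lambda r: (r.get("duration_s") or 0) > 0,
        "supported_sample_rate": lambda r: r.get("sample_rate") in {16000, 44100, 48000},
    },
}


def validate(record: Dict[str, Any]) -> List[str]:
    """Return the names of failed rules for one record (empty list = valid)."""
    rules = RULES.get(record.get("modality"), {})
    if not rules:
        return ["unknown_modality"]
    return [name for name, check in rules.items() if not check(record)]


if __name__ == "__main__":
    records = [
        {"id": 1, "modality": "image", "width": 640, "height": 480, "format": "png"},
        {"id": 2, "modality": "audio", "duration_s": 0, "sample_rate": 8000},
        {"id": 3, "modality": "text", "body": "  "},
    ]
    for r in records:
        print(r["id"], validate(r))
```

Returning the list of failed rule names, rather than a single boolean, makes it easy to route bad records to a quarantine table with the reason attached.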

Feature Engineering for Multimodal Data

-

Modality-Specific Feature Extraction: Within Snowflake (using SQL, Snowpark, or UDFs calling external libraries), extract relevant features from each modality independently.

-

Text: Tokenization, TF-IDF, word embeddings (using pre-trained models or models trained on your text data and stored in Snowflake).

-

Images: Image resizing, normalization, and potentially extraction of basic image features (if computationally feasible within Snowflake; otherwise, pre-process externally and store the features in Snowflake).

-

Audio: Audio normalization, feature extraction like MFCCs (similarly, consider external pre-processing if complex).

-

Video: Frame extraction, potentially basic video features (again, complex video feature extraction might be better done externally).
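
As an example of text feature extraction, here is a minimal TF-IDF sketch in plain Python. It uses raw term frequency divided by document length and an unsmoothed inverse document frequency, log(N / df); libraries such as scikit-learn apply smoothing by default, so their weights will differ. The `tokenize` and `tf_idf` helpers are hypothetical and use a deliberately naive whitespace tokenizer.

```python
import math
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Naive lowercase whitespace tokenizer; real pipelines use a proper tokenizer.
    return text.lower().split()


def tf_idf(docs: List[str]) -> List[Dict[str, float]]:
    """Return one {term: weight} dict per document.
    tf = count / document length; idf = log(N / df), with no smoothing."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for toks in tokenized for term in set(toks))
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        total = len(toks)
        vectors.append({
            term: (c / total) * math.log(n_docs / df[term])
            for term, c in counts.items()
        })
    return vectors


if __name__ == "__main__":
    for vec in tf_idf(["great product fast delivery", "terrible product", "great support"]):
        print(vec)
```

A term that appears in every document (like "a" in `["a b", "a c"]`) gets weight zero, which is the intended effect of the IDF factor. The resulting sparse vectors could be stored in Snowflake, for example as OBJECT or VARIANT values keyed by record id.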

Feature Fusion in Snowflake

Explore techniques to combine features from different modalities within Snowflake.

-

Early Fusion: Concatenate modality-specific features directly. This can be done easily in Snowflake by joining feature tables.

-

Late Fusion: Train separate models for each modality and then combine their predictions. Snowflake can be used to store and manage predictions from individual models.

-

Intermediate Fusion: More complex fusion techniques might require external ML frameworks, but Snowflake can store and manage intermediate representations.
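
To make the difference between early and late fusion concrete, the sketch below uses Python dicts keyed by a shared record id as stand-ins for per-modality feature tables. `early_fusion` performs the equivalent of an inner join followed by concatenation; `late_fusion` takes a weighted average of per-modality predicted probabilities. The table contents, function names, and weights are hypothetical.

```python
from typing import Dict, List

# Hypothetical per-modality feature tables keyed by a shared record id,
# standing in for Snowflake tables joined on that id.
text_features: Dict[str, List[float]] = {"r1": [0.2, 0.7], "r2": [0.9, 0.1]}
image_features: Dict[str, List[float]] = {"r1": [0.5], "r2": [0.3], "r3": [0.8]}


def early_fusion(*tables: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Inner-join the tables on id and concatenate vectors in argument order."""
    common_ids = set(tables[0]).intersection(*tables[1:])
    return {rid: [x for t in tables for x in t[rid]] for rid in sorted(common_ids)}


def late_fusion(predictions: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-modality probabilities; weights are renormalized
    over the modalities actually present, so missing ones are skipped."""
    total = sum(weights[m] for m in predictions)
    return sum(weights[m] * p for m, p in predictions.items()) / total


if __name__ == "__main__":
    print(early_fusion(text_features, image_features))
    print(late_fusion({"text": 0.8, "audio": 0.4}, {"text": 0.75, "audio": 0.25}))
```

Note that early fusion with an inner join drops records missing any modality (`r3` above has no text features); a left join with imputed values is an alternative when coverage is uneven. Late fusion tolerates missing modalities more easily, since the average is taken over whichever predictions exist.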

Feature Selection and Dimensionality Reduction

-

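Use Snowflake's SQL capabilities and Snowpark to perform feature selection (for example, dropping near-constant or highly correlated features) and dimensionality reduction (for example, PCA on the combined feature set) to improve model efficiency and reduce noise. PCA is usually run through Snowpark Python (for example, with scikit-learn) rather than in plain SQL.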
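
The core of PCA is easy to sketch. The following pure-Python example computes the first principal component by power iteration on the sample covariance matrix and reports the share of total variance it explains. It is an illustrative sketch assuming a small, dense feature matrix; for real workloads an established library (for example, scikit-learn's `PCA` run through Snowpark) is the better choice. `first_principal_component` is a hypothetical helper name.

```python
import math
import random
from typing import List, Tuple


def first_principal_component(rows: List[List[float]], iters: int = 200,
                              seed: int = 0) -> Tuple[List[float], float]:
    """Return (unit direction, explained-variance ratio) of the top principal
    component, found by power iteration on the sample covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(d)]  # positive random start vector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # every feature is constant: nothing to explain
            return v, 0.0
        v = [x / norm for x in w]
    # Rayleigh quotient v^T C v gives the top eigenvalue for unit v.
    eigenvalue = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    total_variance = sum(cov[i][i] for i in range(d))
    return v, eigenvalue / total_variance


if __name__ == "__main__":
    direction, ratio = first_principal_component([[1, 2], [2, 4], [3, 6], [4, 8]])
    print(direction, ratio)
```

For perfectly collinear features such as `[[1, 2], [2, 4], [3, 6], [4, 8]]`, the returned direction is proportional to (1, 2) and the explained-variance ratio is 1.0, so one component captures everything. The sign of a principal direction is arbitrary.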

Training & Evaluation with Snowflake's Compute

-

Data Export for Model Training: While the core model training often happens outside Snowflake using dedicated ML frameworks (TensorFlow, PyTorch, scikit-learn), Snowflake facilitates efficient data export to these environments. Use Snowflake connectors or data unloading to transfer prepared and feature-engineered data.

-

External Model Training: Train your multimodal AI models using your preferred ML frameworks, leveraging the data exported from Snowflake.

-

Model Evaluation and Metrics in Snowflake: Bring model predictions and evaluation metrics back into Snowflake. Store these results alongside your original data for analysis and comparison. Use Snowflake’s SQL to calculate performance metrics across different data slices and modalities.

-

Iterative Refinement: Analyze model performance, identify areas for improvement, and iterate on feature engineering, model architecture, and training process. Snowflake’s scalable environment enables rapid experimentation and iteration cycles.
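
Slice-level evaluation is a grouped aggregation. In Snowflake it could be a query such as `SELECT slice_name, AVG(IFF(y_true = y_pred, 1, 0)) FROM predictions GROUP BY slice_name`, where the `predictions` table and its columns are hypothetical. The Python function below computes the same per-slice accuracy for readers who want to check the logic locally.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_slice(rows: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """rows are (slice_name, y_true, y_pred) tuples; returns accuracy per slice,
    equivalent to the GROUP BY query described above."""
    hits: Dict[str, int] = defaultdict(int)
    counts: Dict[str, int] = defaultdict(int)
    for slice_name, y_true, y_pred in rows:
        counts[slice_name] += 1
        hits[slice_name] += int(y_true == y_pred)
    return {s: hits[s] / counts[s] for s in sorted(counts)}


if __name__ == "__main__":
    print(accuracy_by_slice([
        ("text_only", 1, 1), ("text_only", 0, 1),
        ("text+video", 1, 1), ("text+video", 0, 0),
    ]))
```

Slicing by which modalities were available for each record is a quick way to see whether adding a modality actually helps.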

Snowflake AI: Real-World Applications of Multimodal AI

Let's explore some real-world applications where multimodal AI, powered by Snowflake, can deliver significant business value:

Customer Sentiment Analysis with Multimodal Data

Imagine a customer service platform that analyzes customer interactions across all channels: text-based chats, voice calls, and video support sessions. A multimodal AI pipeline built on Snowflake can ingest and process text transcripts, audio recordings, and video feeds to provide a holistic view of customer sentiment. By combining text sentiment with audio tone analysis and facial expression recognition, businesses can gain a deeper and more accurate understanding of customer satisfaction and pinpoint areas for service improvement in finer detail.

Fraud Detection Using Multimodal AI in Snowflake

Fraudsters are becoming increasingly sophisticated, and traditional rule-based fraud detection systems often struggle to keep pace. Multimodal AI offers a powerful countermeasure. Consider a financial institution using Snowflake to analyze transactional data (text descriptions, amounts), image data (scanned checks, documents), and potentially audio data (voice recordings from phone transactions). A multimodal model can detect subtle anomalies and patterns across these data types that single-modality systems are likely to miss, leading to more effective fraud prevention and reduced financial losses.

Personalized Recommendations with Snowflake AI

E-commerce businesses strive to deliver highly personalized product recommendations. Multimodal AI can take personalization to the next level. Using Snowflake, a retailer can combine customer profile data (text-based preferences, demographics), product image data, and even video reviews of products. A multimodal recommender system can then analyze user preferences, visual product features, and sentiment from video reviews to generate highly relevant and engaging product recommendations, leading to increased sales and customer loyalty.

Data Integration and Feature Engineering for Multimodal AI in Snowflake

Best practices for integrating multiple data sources in Snowflake for multimodal AI include:

-

Centralized Data Lake/Warehouse in Snowflake: Leverage Snowflake as the central repository for all your multimodal data. This eliminates data silos and provides a unified view of your diverse data landscape.

-

Schema-on-Read Approach: Snowflake's schema-on-read capability is ideal for handling the flexibility of semi-structured multimodal data. You can ingest data first and define schemas later, adapting to evolving data formats.

-

Data Virtualization: For data that cannot or should not be loaded into Snowflake, consider external tables, which let you query files in external cloud storage in place without moving them. For large image or video collections, external stages with directory tables let you catalog and reference the files where they already live.

-

Metadata Management: Implement robust metadata management practices within Snowflake. Catalog and document your data sources, modalities, features, and data pipelines to ensure data lineage, discoverability, and maintainability.
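
Schema-on-read means raw records are stored as-is and structure is applied only when they are queried. In Snowflake that typically means loading JSON into a VARIANT column and reading fields with path notation such as `raw:meta.width`, where a missing path yields NULL. The Python sketch below mimics that behavior with a dotted-path lookup that returns `None` for missing fields; `RAW`, `get_path`, and `project` are hypothetical names and the records are made up.

```python
import json
from typing import Any, List, Optional

# Raw records land unchanged, as they would in a VARIANT column.
RAW = [
    '{"id": 1, "modality": "image", "meta": {"width": 640, "height": 480}}',
    '{"id": 2, "modality": "audio", "meta": {"duration_s": 12.5, "lang": "en"}}',
    '{"id": 3, "modality": "image", "meta": {"width": 1024, "height": 768, '
    '"exif": {"camera": "X100"}}}',
]


def get_path(doc: Any, path: str) -> Optional[Any]:
    """Resolve a dotted path such as 'meta.exif.camera'; return None if any
    step is missing (the schema is applied at read time, not at load time)."""
    for key in path.split("."):
        if not isinstance(doc, dict) or key not in doc:
            return None
        doc = doc[key]
    return doc


def project(raw: List[str], paths: List[str]) -> List[List[Optional[Any]]]:
    """Parse each raw record and project the requested paths into columns."""
    parsed = [json.loads(r) for r in raw]
    return [[get_path(d, p) for p in paths] for d in parsed]


if __name__ == "__main__":
    for row in project(RAW, ["id", "meta.width", "meta.exif.camera"]):
        print(row)
```

New fields (like `exif` above) can appear in later records without any schema change at load time; queries simply start selecting them when needed.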

Advanced feature engineering techniques for multimodal data within Snowflake and its ecosystem encompass:

-

Cross-Modal Feature Interactions: Go beyond simply concatenating modality-specific features. Design features that explicitly capture the interactions and correlations between modalities. For example, in sentiment analysis, look for correlations between negative sentiment in text and specific facial expressions in video.

-

Attention Mechanisms: When training models externally, consider using attention mechanisms that allow the model to dynamically focus on the most relevant modalities and features for a given input.

-

Transformer Networks: Transformer architectures are proving highly effective for multimodal tasks. Explore using transformer-based models (trained outside Snowflake but leveraging Snowflake-prepared data) that can effectively process and fuse information from different modalities.

-

Pre-trained Multimodal Embeddings: Leverage pre-trained multimodal embeddings (if available for your specific modalities and domain) to jumpstart feature engineering and improve model performance, and store/access them efficiently within Snowflake.
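
Attention-based fusion can be sketched in a few lines. The example below applies scaled dot-product attention over one embedding per modality: each modality is scored by its dot product with a query vector divided by the square root of the dimension, and the fused representation is the softmax-weighted sum of the embeddings. This is a simplification under stated assumptions: in a trained model the query, keys, and values come from learned projections, whereas here the query is supplied directly and the embeddings act as both keys and values. `attention_fuse` is a hypothetical helper.

```python
import math
from typing import Dict, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(embeddings: Dict[str, List[float]],
                   query: List[float]) -> Tuple[List[float], Dict[str, float]]:
    """Scaled dot-product attention over modality embeddings of dimension d:
    score_m = <query, e_m> / sqrt(d); fused = sum_m softmax(scores)_m * e_m."""
    names = list(embeddings)
    d = len(query)
    scores = [sum(q * x for q, x in zip(query, embeddings[n])) / math.sqrt(d)
              for n in names]
    weights = softmax(scores)
    fused = [sum(w * embeddings[n][k] for w, n in zip(weights, names))
             for k in range(d)]
    return fused, dict(zip(names, weights))


if __name__ == "__main__":
    fused, weights = attention_fuse(
        {"text": [1.0, 0.0], "image": [0.0, 1.0]}, query=[2.0, 0.0])
    print(weights, fused)
```

The per-modality weights are also a useful diagnostic: logging them per prediction shows which modality the model relied on for a given input.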

Training Scalable AI Models with Snowflake's Data Platform

For multimodal AI, Snowflake's distributed compute speeds up model development mainly through efficient data preparation and management, rather than by training models directly inside Snowflake. Snowflake excels at:

-

Accelerated Data Preparation: Snowflake's scalable compute can dramatically speed up data ingestion, cleaning, transformation, and feature engineering, which are often the most time-consuming parts of the AI model development lifecycle.

-

Parallel Feature Extraction: Distribute feature extraction tasks across Snowflake's compute clusters to parallelize the process for large multimodal datasets.

-

Efficient Data Export for Training: Snowflake's efficient data unloading capabilities ensure fast data transfer to external ML training environments, minimizing bottlenecks.

Deploying and Serving AI Models Using Snowflake's Data Platform

Model Deployment outside Snowflake (typical approach)

For complex multimodal models, the most common approach is to deploy the trained model in a separate serving infrastructure (e.g., cloud-based ML platforms, containerized deployments). Snowflake then acts as the data provider and potentially the system for storing model predictions.

Snowflake User-Defined Functions (UDFs) for Model Integration (for simpler scenarios)

For less computationally intensive models, or for pre-processing steps within the serving pipeline, you can deploy models as UDFs within Snowflake. This allows you to run model inference directly on data residing in Snowflake.
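
A scalar Python UDF in Snowflake is backed by a handler function that is called once per row. The sketch below is such a handler for a hypothetical logistic-regression model trained externally on three fused features; the weights, bias, and feature names are made-up placeholders. Registering it (for example with `CREATE FUNCTION ... LANGUAGE PYTHON` and a `HANDLER` clause, or with Snowpark's `session.udf.register`) is not shown.

```python
import math
from typing import Optional

# Hypothetical coefficients exported from an externally trained
# logistic-regression model; placeholders, not real values.
WEIGHTS = (1.8, 0.9, 1.2)  # text_sentiment, audio_tone, face_score
BIAS = -1.5


def score(text_sentiment: Optional[float],
          audio_tone: Optional[float],
          face_score: Optional[float]) -> Optional[float]:
    """Per-row UDF handler: returns the model's predicted probability,
    or None (SQL NULL) if any input is NULL."""
    features = (text_sentiment, audio_tone, face_score)
    if any(f is None for f in features):  # SQL NULLs arrive as Python None
        return None
    z = BIAS + sum(w * f for w, f in zip(WEIGHTS, features))
    # Numerically stable logistic function: avoids overflow in math.exp.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


if __name__ == "__main__":
    print(score(0.2, 0.5, 0.9), score(None, 0.5, 0.9))
```

Keeping the handler free of heavy dependencies and handling NULLs explicitly makes it suitable for the lightweight in-database inference this section describes.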

Data Pipelines for Real-time Inference

Build robust pipelines in Snowflake (for example, with Snowpipe Streaming, streams, and tasks) to feed fresh data to your deployed models in near real time and to ingest model predictions back into Snowflake for downstream applications, dashboards, and business processes.

Snowflake Data Marketplace for Model Enrichment

Use the Snowflake Data Marketplace to access external datasets, such as weather or demographic data, that can enrich model inputs with additional context and improve prediction quality.

Best Practices for Enterprise AI Development with Snowflake

Ensuring data governance and security in Snowflake AI projects is critical for enterprise adoption:

Data Access Control

Implement granular role-based access control in Snowflake to restrict access to sensitive multimodal data based on user roles and responsibilities.

Data Masking and Anonymization

Use Snowflake’s data masking and anonymization features to protect sensitive data, especially when working with personally identifiable information (PII) within multimodal datasets.
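
Snowflake masking policies are written in SQL, typically as a `CASE` expression over `CURRENT_ROLE()`. The Python sketch below mirrors that decision logic and adds one common anonymization technique, keyed hashing (HMAC-SHA256), which produces stable pseudonyms so that records can still be joined without exposing the raw value. The role names, the `mask_value` function, and the hard-coded key are hypothetical; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

PRIVILEGED_ROLES = {"PII_ADMIN"}        # hypothetical role names
PSEUDONYM_ROLES = {"DATA_SCIENTIST"}
SECRET_KEY = b"replace-with-a-managed-secret"  # assumption: loaded from a secrets manager


def mask_value(value: str, role: str) -> str:
    """Role-dependent view of a PII value, mirroring a masking policy's CASE
    logic: raw for privileged roles, a keyed pseudonym for modeling roles
    (stable, so joins still work), and fully masked for everyone else."""
    if role in PRIVILEGED_ROLES:
        return value
    if role in PSEUDONYM_ROLES:
        digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
        return "anon_" + digest[:16]
    return "***MASKED***"


if __name__ == "__main__":
    for role in ("PII_ADMIN", "DATA_SCIENTIST", "ANALYST"):
        print(role, mask_value("jane@example.com", role))
```

Using a keyed hash rather than a plain hash matters: without the secret key, common values such as email addresses could be recovered by hashing candidate inputs.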

Data Lineage Tracking

Establish clear data lineage tracking within Snowflake to understand the origin and transformations applied to your multimodal data. This is crucial for auditability and compliance.

Data Encryption

Leverage Snowflake’s built-in encryption capabilities for data at rest and in transit to protect your multimodal data from unauthorized access.

Compliance Frameworks

Ensure your Snowflake AI projects comply with relevant industry regulations and data privacy frameworks (e.g., GDPR, HIPAA).

Model Interpretability & Explainability in Snowflake

-

Feature Importance Analysis: When training models externally, analyze feature importance to understand which modalities and features are most influential in model predictions. Store and analyze these importance scores in Snowflake.

-

Explainable AI (XAI) Techniques: Explore XAI techniques (e.g., SHAP values, LIME) to provide insights into model decisions. Snowflake can be used to store and analyze these explanations.

-

Model Monitoring and Drift Detection: Implement robust model monitoring within your Snowflake environment to detect model drift and degradation over time. This is crucial for maintaining model accuracy and reliability in production.

-

Human-in-the-Loop Validation: Incorporate human-in-the-loop validation processes, where domain experts can review model predictions and provide feedback, especially for high-stakes applications of multimodal AI.
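
One widely used drift measure is the Population Stability Index (PSI), which compares the binned distribution of a feature or model score in a recent window against a baseline: PSI = sum over bins i of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and recent proportions in bin i. The sketch below computes it in plain Python; the `psi` function, the bin edges, and the small `eps` floor for empty bins are illustrative choices. A common rule of thumb treats PSI above roughly 0.2 to 0.25 as a significant shift, but thresholds should be set per use case.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample (`expected`) and a
    recent sample (`actual`), binned with sorted `edges`."""
    def proportions(values: Sequence[float]) -> List[float]:
        n = len(values)
        if n == 0:
            raise ValueError("samples must be non-empty")
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # number of edges <= v = bin index
            counts[idx] += 1
        return [max(c / n, eps) for c in counts]  # floor avoids log(0)

    e_p = proportions(expected)
    a_p = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_p, a_p))


if __name__ == "__main__":
    baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
    print(psi(baseline, baseline, edges=[0.5]))
    print(psi(baseline, [0.6, 0.7, 0.8, 0.9], edges=[0.5]))
```

The baseline and recent samples would typically be pulled from a Snowflake table of logged features or scores, with the PSI result written back for monitoring dashboards.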

The Future of Multimodal AI and Snowflake's AI Roadmap

Emerging trends and research areas in multimodal AI are rapidly evolving:

-

Self-Supervised Learning for Multimodal Data: Self-supervised learning techniques are gaining traction in multimodal AI, enabling models to learn from unlabeled data across modalities, reducing reliance on expensive labeled datasets.

-

Multimodal Foundation Models: The trend towards large foundation models extends to the multimodal domain. We are seeing the emergence of powerful multimodal models pre-trained on massive datasets, capable of performing a wide range of tasks across modalities.

-

Integration of Knowledge Graphs and Multimodal AI: Combining knowledge graphs with multimodal AI is a promising direction, enabling models to reason about complex relationships and contextual information across different data types.

-

Edge Multimodal AI: Deploying multimodal AI models to edge devices (e.g., smartphones, IoT sensors) is becoming increasingly relevant, enabling real-time multimodal analysis closer to the data source.

Snowflake's Vision and Roadmap for Advancing AI Capabilities

Enhanced Snowpark for ML

Continued expansion of Snowpark capabilities to provide richer support for in-database ML, including potentially more direct integration with ML frameworks and libraries.

Pre-built ML functionalities within Snowflake

Future enhancements could include pre-built ML functionalities within Snowflake for common AI tasks, potentially including support for basic multimodal tasks or pre-processing modules.

Deeper Integration with ML Ecosystem

Strengthening integrations with external ML platforms and tools to create a seamless workflow for multimodal AI development, from data preparation in Snowflake to model training and deployment externally.

Data Marketplace Expansion for AI Datasets

Continued growth of the Snowflake Data Marketplace to offer more diverse and high-quality datasets specifically relevant for AI and multimodal AI applications.

Focus on Enterprise AI Governance

Further enhancements to data governance, security, interpretability, and explainability features within Snowflake, reflecting the growing importance of responsible AI in the enterprise.

Redefining Data Analysis with Multimodal AI

In conclusion, multimodal AI represents a paradigm shift in how we approach data analysis, moving beyond the limitations of single data perspectives to unlock richer, more human-like understanding. Snowflake's Data Platform, with its robust architecture, scalability, and commitment to data accessibility, provides an ideal foundation for developing and deploying the next generation of multimodal AI applications.
