Large Language Models (LLMs) are transforming industries by enabling advanced natural language processing (NLP) capabilities, but their complexity demands robust operational frameworks. LLMOps (Large Language Model Operations) extends traditional MLOps principles to the development, deployment, monitoring, and scaling of LLMs in production environments.

Organizations require efficient model lifecycle management strategies integrating automation, scalability, security, and compliance. XenonStack AI, a certified AI Managed Service Provider (MSP), specializes in LLMOps and FMOps (Foundation Model Operations) to facilitate scalable AI pipelines leveraging open-source tools. Our managed services streamline AI workflows, improve computational efficiency, and ensure regulatory compliance, enabling enterprises to maximize the value of their AI investments.

Understanding LLMOps and Its Strategic Significance

LLMOps is an essential discipline within AI engineering, addressing the unique challenges LLMs pose, such as their massive computational requirements, continual retraining needs, and complex inference pipelines. Implementing LLMOps ensures:

- Automated Model Lifecycle Management – Streamlining model training, fine-tuning, and monitoring processes.
- Advanced Data Engineering Pipelines – Handling large-scale data preprocessing, storage, and feature engineering.
- Infrastructure Optimization – Efficient allocation of GPUs, TPUs, and cloud resources.
- Governance, Security, and Compliance – Implementing best practices for ethical AI deployment and risk mitigation.
- Model Performance Monitoring – Ensuring continuous evaluation and improvement to prevent model degradation.
- Deployment Efficiency – Automating CI/CD pipelines for rapid and reliable model deployment.
- Scalability and Cost Efficiency – Leveraging distributed computing frameworks to optimize LLM workloads.

XenonStack AI's LLMOps Managed Services

XenonStack AI provides a comprehensive LLMOps framework that ensures reliability, performance, and security throughout the AI lifecycle. Our managed services include:

1. AI Model Lifecycle Management

- Automated Data Ingestion & Processing – Optimized ETL workflows integrated with feature stores.
- Adaptive Model Training & Fine-Tuning – Using TensorFlow, PyTorch, and JAX for customized domain-specific adaptations.
- Model Versioning & Experimentation – Enabling reproducibility through MLflow, DVC, and Weights & Biases.
- Hyperparameter Optimization & Pruning – Utilizing Bayesian optimization and reinforcement learning for improved efficiency.
- Domain-Specific Model Adaptation – Fine-tuning pre-trained models with specialized datasets for enhanced performance.
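To make the versioning and reproducibility idea concrete, here is a minimal standard-library sketch of what an experiment tracker records. It is not the MLflow, DVC, or Weights & Biases API; the names `RunRecord`, `ExperimentLog`, and `fingerprint_dataset` are hypothetical and exist only for illustration. The assumption is that a run is fully identified by its hyperparameters plus a content hash of its training data.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass, field


def fingerprint_dataset(rows: list[str]) -> str:
    """Return an order-sensitive content hash of the training examples."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(row.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


@dataclass
class RunRecord:
    """One fine-tuning run: configuration, data fingerprint, and metrics."""
    params: dict
    dataset_fingerprint: str
    metrics: dict = field(default_factory=dict)

    @property
    def run_id(self) -> str:
        # Serialize with sorted keys so the same configuration always
        # produces the same identifier, regardless of dict ordering.
        payload = json.dumps(
            {"params": self.params, "data": self.dataset_fingerprint},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


class ExperimentLog:
    """In-memory registry of runs keyed by run_id (hypothetical helper)."""

    def __init__(self) -> None:
        self._runs: dict[str, RunRecord] = {}

    def log(self, record: RunRecord) -> str:
        self._runs[record.run_id] = record
        return record.run_id

    def best(self, metric: str, higher_is_better: bool = True) -> RunRecord:
        # Only runs that actually reported the metric are comparable.
        candidates = [r for r in self._runs.values() if metric in r.metrics]
        if not candidates:
            raise ValueError(f"no run reported metric {metric!r}")
        chooser = max if higher_is_better else min
        return chooser(candidates, key=lambda r: r.metrics[metric])
```

Because the identifier is derived from the inputs rather than assigned at random, re-running an identical configuration on identical data maps to the same record, which is the property that makes a result reproducible and auditable. A production tracker would also persist artifacts and code versions, which this sketch leaves out.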

2. Scalable Infrastructure & Cloud Deployments

- Multi-Cloud and On-Prem Deployments – Kubernetes-based orchestration across AWS, Azure, and GCP.
- Serverless and Containerized Model Serving – Integrating ONNX, TensorRT, and Triton Inference Server.
- Federated Learning & Edge AI – Enhancing privacy-preserving AI with decentralized learning architectures.
- High-Performance Computing (HPC) Optimization – Leveraging AI accelerators (NVIDIA A100, TPU v4) for large-scale workloads.

3. Continuous Monitoring, Evaluation & Improvement

- Real-Time Model Observability – Implementing Prometheus, Grafana, and EvidentlyAI for performance tracking.
- Automated Model Retraining Pipelines – Closing the loop between real-world feedback and continuous learning.
- Ethical AI Auditing & Bias Mitigation – Ensuring fairness using AI Fairness 360, InterpretML, and SHAP.
- Anomaly Detection & Drift Prevention – Leveraging adversarial robustness techniques for resilient AI deployments.
- Explainable AI & Interpretability – Enhancing transparency with LIME, Captum, and Model Explainability Toolkits.
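As an illustration of what drift monitoring computes, the sketch below implements the Population Stability Index (PSI), one common drift statistic. PSI is chosen here as an example and is not named in the tooling above; the function name, the default of 10 bins, and the `eps` floor for empty bins are assumptions. It compares a monitored numeric signal (for example, prompt length or a confidence score) between a reference window and a current window.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Return the PSI between two numeric samples.

    Bin edges are taken from the reference sample; current values that
    fall outside that range are clamped into the first or last bin.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread to bin")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref = proportions(reference)
    cur = proportions(current)
    # Each term is non-negative; identical distributions give exactly 0.
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))
```

A monitoring job might compute this per feature on a schedule and raise an alert, or trigger a retraining pipeline, when the value crosses a threshold chosen for the workload. Values above roughly 0.25 are often treated as significant drift, but that is a rule of thumb rather than a fixed standard.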

4. Security, Governance, and Compliance

- Data Privacy and Regulatory Compliance – Adhering to GDPR, HIPAA, and ISO 27001 standards.
- Role-Based Access Control (RBAC) & Identity Management – Secure AI workflows with IAM policies and Vault integration.
- Adversarial Attack Mitigation – Implementing AI security frameworks to detect and neutralize threats.
- Comprehensive Audit Logging & Transparency – Enabling traceability of AI decision-making processes.
- Risk Management & Ethical AI Governance – Aligning AI strategies with responsible AI principles.
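The following sketch shows how role-based access control and audit logging fit together around a model operation. It is a simplified illustration, not an IAM or Vault integration: the role names, the permission strings, and the `authorize` and `AuditLog` helpers are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping. A real deployment would load
# this from an identity provider or policy store, not hard-code it.
ROLE_PERMISSIONS = {
    "ml_engineer": {"model:train", "model:deploy", "model:read"},
    "analyst": {"model:read"},
    "auditor": {"audit:read"},
}


@dataclass
class AuditLog:
    """Append-only record of every authorization decision."""
    entries: list = field(default_factory=list)

    def record(self, user: str, action: str, allowed: bool) -> None:
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "allowed": allowed,
        })


def authorize(user: str, roles: list, action: str, audit: AuditLog) -> bool:
    """Allow the action if any of the user's roles grants it; always log."""
    allowed = any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)
    # Denied attempts are logged too, since they matter most for traceability.
    audit.record(user, action, allowed)
    return allowed


audit = AuditLog()
authorize("dana", ["analyst"], "model:deploy", audit)     # denied
authorize("lee", ["ml_engineer"], "model:deploy", audit)  # allowed
```

Logging the decision inside `authorize`, rather than leaving it to each caller, means no code path can check a permission without leaving a trace.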

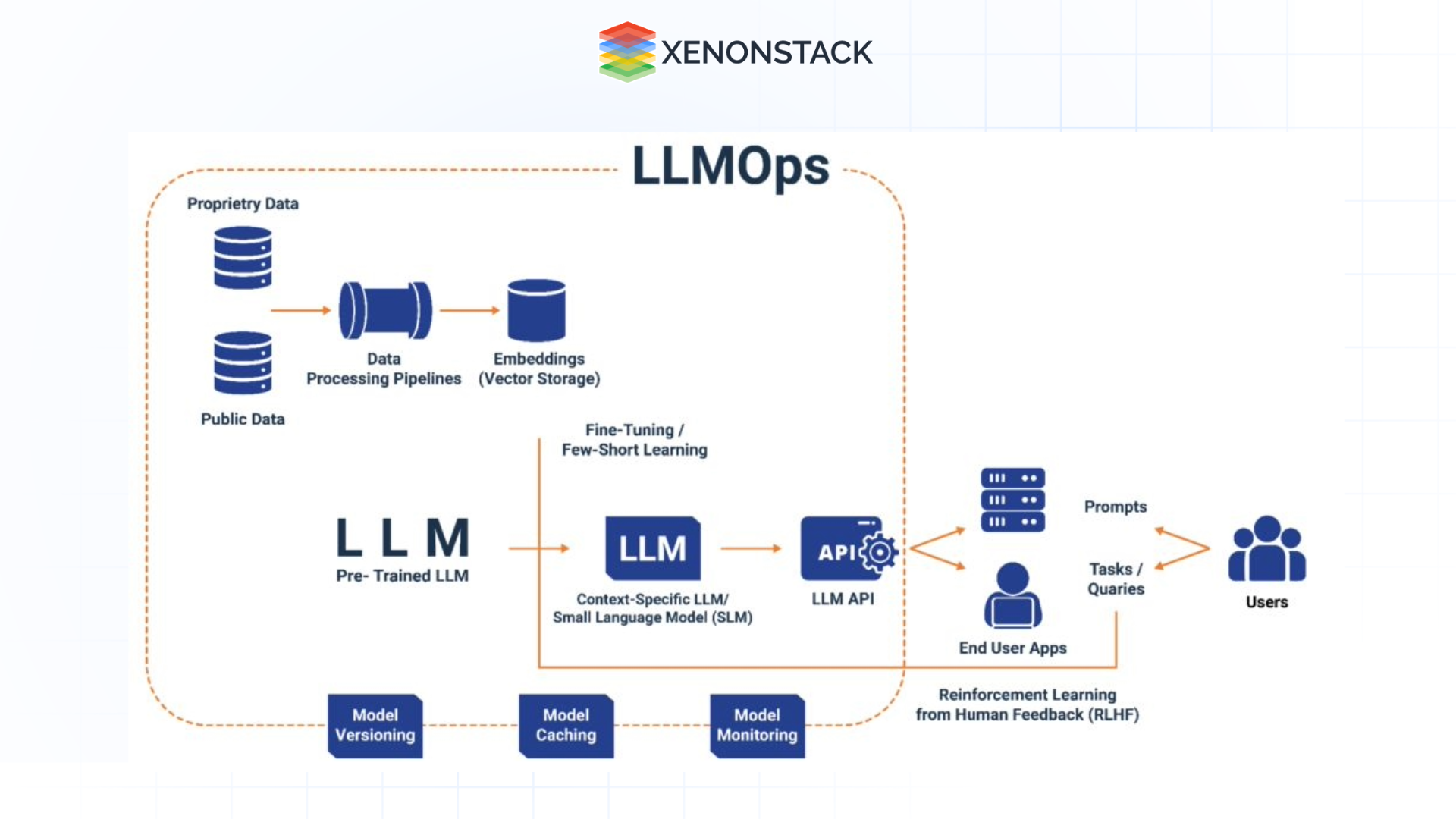

Fig 1: LLMOps Workflow diagram

Open-Source Tools for LLMOps Pipelines

XenonStack AI leverages a robust ecosystem of open-source tools to facilitate scalable and cost-effective LLM workflows. Key tools include:

1. Model Development & Experimentation

- Hugging Face Transformers – Fine-tuning pre-trained LLMs for diverse NLP tasks.
- TensorFlow, PyTorch, and JAX – Providing scalable deep learning model development.
- MLflow & DVC – Ensuring model tracking, versioning, and reproducibility.
- AutoML Frameworks – Utilizing H2O.ai, AutoGluon, and TPOT for automated hyperparameter tuning.

2. Data Engineering & Feature Processing

- Apache Spark & Ray – Accelerating distributed data processing.
- Feature Stores (Feast, Tecton) – Managing real-time and batch feature pipelines.
- Great Expectations & Data Validation – Automating data quality assurance.
- Delta Lake & Apache Iceberg – Optimized data lake solutions for AI workloads.
- Synthetic Data Generation – Using generative AI to augment training datasets.
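Automated data quality assurance usually comes down to declaring expectations about each record and reporting which records violate them. The sketch below shows that pattern in plain Python. It borrows the idea from tools like Great Expectations but does not use that library's API; the `Expectation` class, the `validate` function, the field names `prompt` and `completion`, and the 4000-character limit are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Expectation:
    """A named predicate that every record is expected to satisfy."""
    name: str
    check: Callable[[dict], bool]


def validate(rows, expectations):
    """Return {expectation name: [indices of failing rows]}, failures only."""
    failures = {}
    for index, row in enumerate(rows):
        for expectation in expectations:
            try:
                ok = expectation.check(row)
            except (KeyError, TypeError, AttributeError):
                # A missing or wrongly typed field counts as a failure.
                ok = False
            if not ok:
                failures.setdefault(expectation.name, []).append(index)
    return failures


# Hypothetical checks for a fine-tuning dataset of prompt/completion pairs.
EXPECTATIONS = [
    Expectation("prompt_not_empty", lambda r: bool(r["prompt"].strip())),
    Expectation("completion_is_str", lambda r: isinstance(r["completion"], str)),
    Expectation("prompt_under_4000_chars", lambda r: len(r["prompt"]) <= 4000),
]

rows = [
    {"prompt": "Summarize this ticket.", "completion": "Customer cannot log in."},
    {"prompt": "   ", "completion": "x"},
    {"completion": 3},
]
report = validate(rows, EXPECTATIONS)
```

In a pipeline, a non-empty report would typically block the downstream training step or route the failing rows to a quarantine location for review.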

3. Model Deployment & Inference Optimization

- Kubeflow & MLflow Pipelines – Enabling end-to-end model workflow automation.
- Triton Inference Server & Ray Serve – Scalable, high-performance model serving.
- FastAPI & Flask – Lightweight RESTful API frameworks for seamless model integration.
- Edge AI & On-Device Inference – Optimizing models for real-time, low-latency execution.
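To show the shape of a lightweight model-serving endpoint, here is a standard-library WSGI sketch that stands in for a FastAPI or Flask route. It deliberately avoids those frameworks so it runs without extra dependencies. The `/generate` path, the JSON field names, and `fake_model` (which only upper-cases the prompt) are placeholders, not part of any real service.

```python
import json
from io import BytesIO
from wsgiref.util import setup_testing_defaults

JSON_HEADERS = [("Content-Type", "application/json")]


def fake_model(prompt: str) -> str:
    # Placeholder for a real LLM call; it only upper-cases the prompt.
    return prompt.upper()


def app(environ, start_response):
    """WSGI application exposing POST /generate with a JSON body."""
    if environ["REQUEST_METHOD"] != "POST" or environ.get("PATH_INFO") != "/generate":
        start_response("404 Not Found", JSON_HEADERS)
        return [b'{"error": "not found"}']
    length = int(environ.get("CONTENT_LENGTH") or 0)
    try:
        payload = json.loads(environ["wsgi.input"].read(length))
        prompt = payload["prompt"]
        if not isinstance(prompt, str):
            raise TypeError("prompt must be a string")
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing field, or a wrong type is a client error.
        start_response("400 Bad Request", JSON_HEADERS)
        return [b'{"error": "expected a JSON body with a string prompt"}']
    body = json.dumps({"completion": fake_model(prompt)}).encode("utf-8")
    start_response("200 OK", JSON_HEADERS + [("Content-Length", str(len(body)))])
    return [body]


def call(wsgi_app, path: str, body: bytes, method: str = "POST"):
    """Invoke the app in-process, without opening a network socket."""
    environ = {}
    setup_testing_defaults(environ)
    environ.update({
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": BytesIO(body),
    })
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    chunks = wsgi_app(environ, start_response)
    return captured["status"], json.loads(b"".join(chunks))


status, data = call(app, "/generate", b'{"prompt": "hello"}')
```

Validating the request before touching the model keeps malformed input from consuming accelerator time. A production service would add authentication, timeouts, and batching on top of this shape.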

4. Model Monitoring & Governance

- Prometheus & Grafana – Advanced monitoring and logging solutions.
- EvidentlyAI – Detecting data and model drift in real-time.
- AI Fairness 360 & InterpretML – Enhancing explainability and mitigating bias.
- IBM Adversarial Robustness Toolbox & OpenAI Guardrails – Strengthening AI security.

Why Enterprises Choose XenonStack AI for LLMOps

XenonStack AI delivers a holistic LLMOps solution that ensures:

- Comprehensive AI Lifecycle Management – Automating the entire AI workflow, from training to monitoring.
- Vendor-Agnostic, Open-Source Implementations – Reducing reliance on proprietary solutions.
- Seamless Multi-Cloud and Hybrid Deployments – Optimized orchestration across cloud and on-prem environments.
- Enterprise-Grade Security & Compliance – Implementing industry-best AI governance standards.
- Performance & Cost Optimization – Efficient resource utilization for maximized ROI.
- Custom AI Model Engineering – Specializing in domain-adaptive LLM solutions for specific industry applications.

Future Trends of LLMOps Managed Services

Scaling and maintaining large language models in production requires a specialized approach integrating robust infrastructure, continuous monitoring, security, and compliance. XenonStack AI’s LLMOps Managed Services provide enterprises with the expertise and technology stack needed to develop, deploy, and scale AI workloads efficiently. Organizations can adopt automated AI pipelines, federated learning, and resilient governance frameworks to ensure their AI models remain performant, cost-efficient, and aligned with ethical AI standards.

Next Steps with LLMOps Managed Services

Talk to our experts about implementing compound AI systems and about how industries and departments use agentic workflows and decision intelligence to become decision-centric, including using AI to automate and optimize IT support and operations for greater efficiency and responsiveness.