Introduction to Data Processing in Healthcare

Data is the key to new advancements. Today, we generate vast amounts of data from almost every aspect of our lives. This data has grown so large and unmanageable that the term "Big Data" is used to describe it. As a result, we need new technologies to process and analyze big data and derive meaningful insights from it.

Healthcare is one of the many prominent sectors that big data has affected. Data, analytics, AI, and machine learning are among the most game-changing technologies for medical providers. New sources of patient data can provide deeper insights that help medical providers enhance care quality and streamline operations. Apart from healthcare, data analysis is used in various industries, including:

1. banking and securities

2. media and entertainment

3. education

4. insurance

5. transportation

What is Data Processing?

Raw data on its own is not helpful to any organization. Data processing refers to collecting raw data and converting it into usable information that can be presented in a readable format such as graphs, charts, and text. The raw data is gathered, filtered, sorted, analyzed, and stored before being presented in that readable format.
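The steps above can be illustrated with a minimal Python sketch. The records, field names, and the choice of a per-patient average as the "analysis" step are hypothetical assumptions for illustration; they are not taken from any particular system.

```python
import json
from statistics import mean

# Hypothetical raw readings as they might arrive from different sources;
# some are incomplete or malformed and must be filtered out.
RAW_RECORDS = [
    {"patient_id": "P2", "systolic": "128"},
    {"patient_id": "P1", "systolic": "141"},
    {"patient_id": "P1", "systolic": ""},        # missing value
    {"patient_id": "P3", "systolic": "abc"},     # malformed value
    {"patient_id": "P2", "systolic": "135"},
]


def parse_systolic(value):
    """Return the reading as an int, or None if it is missing or malformed."""
    try:
        return int(value)
    except (TypeError, ValueError):
        return None


def process(raw_records):
    # Filter: keep only records with a usable reading.
    cleaned = []
    for record in raw_records:
        systolic = parse_systolic(record.get("systolic"))
        if systolic is not None:
            cleaned.append({"patient_id": record["patient_id"], "systolic": systolic})

    # Sort: order by patient so related readings sit together.
    cleaned.sort(key=lambda r: r["patient_id"])

    # Analyze: average reading per patient.
    by_patient = {}
    for record in cleaned:
        by_patient.setdefault(record["patient_id"], []).append(record["systolic"])
    return {pid: mean(values) for pid, values in by_patient.items()}


if __name__ == "__main__":
    summary = process(RAW_RECORDS)
    # Store/present: serialize to JSON, a readable format for reports or dashboards.
    print(json.dumps(summary, indent=2))
```

Each stage is a separate, simple step, which mirrors the gathered, filtered, sorted, analyzed, and stored sequence described above.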

Why is Data Processing required in the Healthcare sector?

Breaking data silos to merge medical data from numerous sources and acquire a holistic view of your business from one source rather than multiple, divergent sources is one of the most challenging tasks in the industry.

A genuinely data-driven medical analytics solution should be able to integrate all data sources and analyze both structured and unstructured data in real time. Your insights are less effective than they could be unless you include all patient data, including diagnostic information, doctor observations, and real-time data from medical equipment.

There are numerous reasons why data processing is helpful in healthcare. A few are listed below.

1. Improve outcomes

By delivering real-time patient information and decreasing the risk of human error and incorrect diagnosis, it can improve the quality of patient care and safety levels.

2. Increase operational efficiency

You can cut costs, decrease waste, and streamline your operations by combining data from numerous sources. It will also provide a better understanding of resource use, inventory levels, and procurement.

3. Medical Equipment model development for recommendations

Big data analysis helps in developing new medical equipment. Healthcare specialists can detect potential strengths and weaknesses in medical equipment development trials by combining historical, real-time, and predictive information with a cohesive set of data visualization tools.

4. Enhanced Medical research on chronic diseases

The healthcare sector can perform intensive research on chronic diseases by drilling down into insights such as medication types, symptoms, transmission methods, and prevention methods from many patients' data.

5. Patient disease analysis over time

Patient data can be cleaned, aggregated, and stored in a database at every visit. The stored data can help analyze the patient's disease patterns and the medications taken over time, leading to better health care.
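A minimal sketch of per-visit storage and a chronological history query, using Python's built-in `sqlite3` module with an in-memory database. The `visits` table, its columns, the sample values, and the helper functions are hypothetical; a production system would use a proper EHR schema with access controls.

```python
import sqlite3


def create_store():
    """Create an in-memory database with a hypothetical visits table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE visits (
               patient_id TEXT NOT NULL,
               visit_date TEXT NOT NULL,  -- ISO 8601, so text order equals date order
               diagnosis  TEXT,
               medication TEXT
           )"""
    )
    return conn


def record_visit(conn, patient_id, visit_date, diagnosis, medication):
    # Light cleaning before storage: trim whitespace and normalize case.
    conn.execute(
        "INSERT INTO visits VALUES (?, ?, ?, ?)",
        (patient_id.strip(), visit_date,
         diagnosis.strip().lower(), medication.strip().lower()),
    )
    conn.commit()


def history(conn, patient_id):
    """Return (visit_date, diagnosis, medication) rows in chronological order."""
    rows = conn.execute(
        "SELECT visit_date, diagnosis, medication FROM visits "
        "WHERE patient_id = ? ORDER BY visit_date",
        (patient_id,),
    )
    return rows.fetchall()


if __name__ == "__main__":
    conn = create_store()
    # Visits are recorded out of order; the query restores chronology.
    record_visit(conn, "P1", "2023-03-02", " Hypertension ", "Drug A")
    record_visit(conn, "P1", "2023-01-15", "hypertension", " drug a ")
    record_visit(conn, "P1", "2023-06-20", "Hypertension", "Drug B")
    for row in history(conn, "P1"):
        print(row)
```

Because values are normalized on the way in, the history shows a consistent diagnosis and makes the medication change between visits easy to spot.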

6. Simplified finance and accounting

Big data analytics in healthcare generates insights that can help you swiftly discover and correct financial mistakes, allowing you to align your KPIs to achieve your financial objectives.

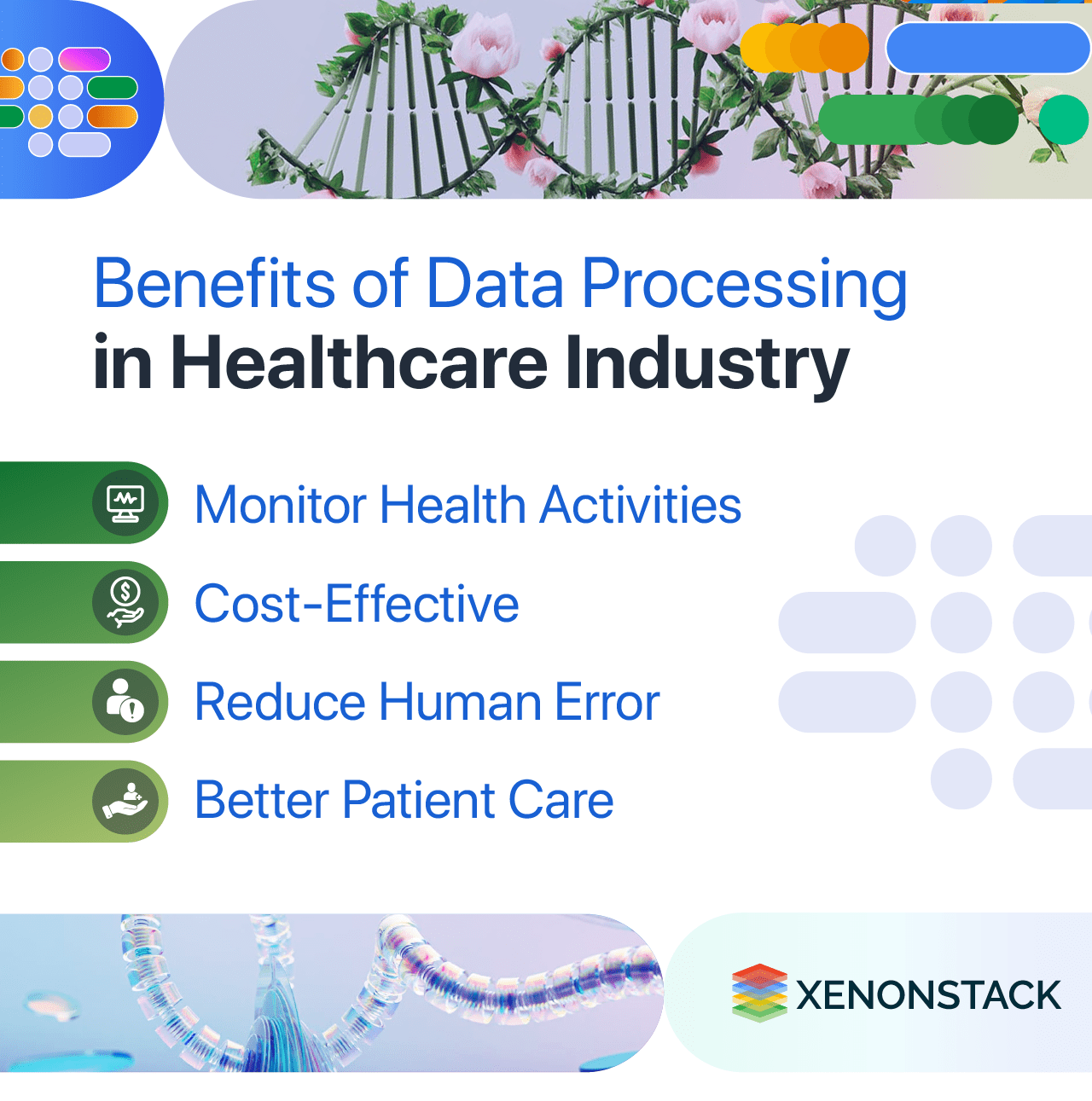

Benefits of Data Processing in the Healthcare Industry

The benefits of data processing in the Healthcare sector are listed below.

1. Monitor Health Activities

The goal of doctors is a healthy patient. With the help of sensor data collection, healthcare companies are increasingly focusing on continuous monitoring of patients' vitals. This allows hospitals to reduce patient visits by identifying potential health risks and providing treatment before the situation worsens. Basic wearables that track a patient's sleep, heart rate, activity, distance walked, and other activities can provide this data. Patients can also use data from newer medical devices such as blood pressure monitors, pulse oximeters, and glucose monitors to monitor and track their health and to help administer treatments proactively.
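Continuous monitoring ultimately comes down to comparing incoming readings against acceptable ranges. The sketch below assumes hypothetical metric names and alert ranges; these numbers are placeholders for illustration, not clinical guidance.

```python
from dataclasses import dataclass

# Hypothetical alert ranges for illustration only; real limits are set
# by clinicians and depend on the individual patient.
NORMAL_RANGES = {
    "heart_rate": (50, 110),   # beats per minute
    "spo2": (92, 100),         # % oxygen saturation
    "glucose": (70, 180),      # mg/dL
}


@dataclass
class Reading:
    patient_id: str
    metric: str
    value: float


def out_of_range(readings, ranges=NORMAL_RANGES):
    """Yield (reading, low, high) for each reading outside its range."""
    for r in readings:
        bounds = ranges.get(r.metric)
        if bounds is None:
            continue  # unknown metric: nothing to compare against
        low, high = bounds
        if not low <= r.value <= high:
            yield r, low, high


if __name__ == "__main__":
    readings = [
        Reading("P1", "heart_rate", 72),
        Reading("P1", "spo2", 89),
        Reading("P2", "glucose", 210),
        Reading("P2", "steps", 5400),  # activity data, no alert range defined
    ]
    for r, low, high in out_of_range(readings):
        print(f"{r.patient_id}: {r.metric}={r.value} outside [{low}, {high}]")
```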

2. Cost-Effective

Data processing opens up several cost-cutting possibilities for hospitals. By collecting and analyzing massive volumes of data, healthcare firms can find tangible ways to increase performance and efficiency without exceeding their budgets. One benefit of data processing is that hospitals and clinics can estimate staffing needs and allocate the appropriate staff to deal with patients.
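Staff estimation can start from something as simple as a moving-average forecast of admissions. This sketch assumes a naive seven-day moving average and a hypothetical ratio of four patients per nurse; real staffing models also account for patient acuity, shift patterns, and regulations.

```python
import math


def forecast_admissions(daily_admissions, window=7):
    """Forecast tomorrow's admissions as the mean of the last `window` days."""
    if not daily_admissions:
        raise ValueError("need at least one day of history")
    recent = daily_admissions[-window:]
    return sum(recent) / len(recent)


def nurses_needed(expected_patients, patients_per_nurse=4):
    """Round up so the (hypothetical) patients-per-nurse ratio is never exceeded."""
    return math.ceil(expected_patients / patients_per_nurse)


if __name__ == "__main__":
    # Hypothetical daily admission counts, oldest first.
    admissions = [38, 41, 35, 44, 40, 39, 43, 47]
    expected = forecast_admissions(admissions)
    print(f"expected patients: {expected:.1f}, nurses: {nurses_needed(expected)}")
```

Rounding up rather than to the nearest integer is the design choice that keeps the ratio from being exceeded on a busier-than-average day.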

3. Reduce Human Error

Data processing is used in healthcare to analyze patient data and support prescribing decisions. As a result, errors such as prescribing the wrong drug or accidentally dispensing a different medication become less frequent. Data analysis can detect and flag potentially inappropriate medications to prevent mistakes and save lives.
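The flagging step described above can be sketched as a lookup against known interactions and allergies. The drug names, interaction table, and allergy mapping below are hypothetical placeholders; a real system would query a maintained clinical drug-interaction database.

```python
# Hypothetical interaction table; frozenset keys make the lookup order-independent.
INTERACTIONS = {
    frozenset({"drug_a", "drug_b"}): "increased bleeding risk",
    frozenset({"drug_c", "drug_d"}): "reduced effectiveness of drug_d",
}

# Hypothetical mapping from a recorded allergy to drugs that conflict with it.
ALLERGY_CONFLICTS = {
    "penicillin": {"drug_e"},
}


def check_prescription(current_meds, new_drug, allergies=()):
    """Return warnings for adding `new_drug` to a patient's current medications."""
    warnings = []
    # Check the new drug against each existing medication.
    for med in sorted(set(current_meds)):
        note = INTERACTIONS.get(frozenset({med, new_drug}))
        if note:
            warnings.append(f"{new_drug} + {med}: {note}")
    # Check the new drug against the patient's recorded allergies.
    for allergy in allergies:
        if new_drug in ALLERGY_CONFLICTS.get(allergy.lower(), set()):
            warnings.append(f"{new_drug} conflicts with recorded allergy: {allergy}")
    return warnings


if __name__ == "__main__":
    for warning in check_prescription(["drug_a", "drug_c"], "drug_b"):
        print("WARNING:", warning)
```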

4. Better Patient Care

Processed healthcare data provides the insights needed to achieve a higher level of personalization in health systems. The healthcare system is moving toward a new benchmark of patient convenience and tailored care. For example, digitizing hospital records makes the right data accessible for understanding patterns across many patients. As a result, hospitals can provide better patient care and learn about corrective methods that reduce frequent visits.

How can real-time data improve patient care?

Real-time data can improve patient care by:

1. Assisting with clinical therapy choices

2. Providing real-time warnings to healthcare practitioners

3. Giving in-depth knowledge of a patient's health and treatment

4. Identifying disease risk in patients with greater speed and accuracy

By using data from the health sector, medical professionals and administrators can pinpoint areas of risk or improvement within current care pathways. With this knowledge, they can work to improve the overall standard of patient experience and address any shortcomings in patient care.
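Real-time warnings are often based on a short rolling window rather than single readings, so that one noisy sensor value does not trigger an alert on its own. A minimal sketch, assuming a hypothetical window size, heart-rate threshold, and reading stream:

```python
from collections import deque


class RollingMonitor:
    """Warn when the mean of the last `window` readings exceeds a threshold.

    Averaging a short window smooths out single noisy sensor readings,
    so one spurious spike does not raise an alert by itself.
    """

    def __init__(self, window=5, threshold=120.0):
        self.readings = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold

    def update(self, value):
        """Add a reading; return True if a warning should be raised."""
        self.readings.append(value)
        if len(self.readings) < self.readings.maxlen:
            return False  # not enough data yet to judge a trend
        return sum(self.readings) / len(self.readings) > self.threshold


if __name__ == "__main__":
    monitor = RollingMonitor(window=3, threshold=120)
    stream = [95, 150, 98, 118, 125, 131, 140]  # hypothetical heart rate, bpm
    for t, hr in enumerate(stream):
        if monitor.update(hr):
            print(f"t={t}: rolling mean above {monitor.threshold} bpm")
```

In this stream the isolated spike of 150 does not raise a warning when it arrives; warnings only appear once the surrounding readings keep the window's average high.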

Best practices of Data Processing in the Healthcare Industry

The best practices for data processing in healthcare are listed below:

1. Data Collection

The healthcare sector offers some of the best examples of how data tracking and analysis can improve lives. Health systems can use data collection to build holistic views of patients, tailor therapies, narrow the communication gap between doctors and patients, and advance treatment procedures.

2. Healthcare Data Collection Methods

Questionnaires, observations, and document reviews are examples of healthcare data collection approaches. Today, most information is gathered through digital channels and the numerous applications available on the market. The following are some of the most common medical data-gathering tools:

CRM - A Customer Relationship Management (CRM) system generates and manages general data and reports, and helps analyze various issues.

EHR - Electronic Health Record (EHR) systems collect and analyze personal patient data to generate deeper insights.

Mobile applications - Software running on mobile devices that connects doctors and patients so they can discuss issues quickly, for example in an emergency.

3. Data Aggregation

In healthcare, data aggregation refers to integrating multiple data types (health information, finances, test findings, and so on) into a single unified data asset.

Data aggregation is the basis for the shift from volume-based to value-based care.

Generate insights, patterns, and predictions - Aggregation lets an organization pull all its data together to identify things that would not be observable in a single patient chart.

Transform transactional data into informative data - Aggregation allows you to turn transactional data into informative data. A physician can then turn aggregated data into a forecast (if "A" occurs, "B" is likely to follow) and, most significantly, a prescription (if a patient is experiencing "A," perform "B" to achieve outcome "C"). While data from a single patient may be helpful at the time, it will not let you recognize this type of critical, transformational insight.
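The forecast idea ("if A occurs, B is likely to follow") can be made concrete by counting across aggregated patient histories. This is a minimal sketch under stated assumptions: the event codes and histories are hypothetical, and a simple frequency estimate stands in for the statistical models a real analytics platform would use.

```python
# Hypothetical per-patient event histories, already merged from several
# sources (diagnoses, lab results, billing). Each list is chronological.
PATIENT_EVENTS = {
    "P1": ["A", "B", "C"],
    "P2": ["A", "B"],
    "P3": ["A", "D"],
    "P4": ["B"],
    "P5": ["A", "B", "D"],
}


def followed_by_rate(histories, first, then):
    """Fraction of patients with event `first` who later have event `then`.

    This estimates how often `then` follows `first` across the aggregated
    records, something no single patient chart can reveal.
    """
    with_first = 0
    followed = 0
    for events in histories.values():
        if first in events:
            with_first += 1
            # Only look at events after the first occurrence of `first`.
            later = events[events.index(first) + 1:]
            if then in later:
                followed += 1
    return followed / with_first if with_first else 0.0


if __name__ == "__main__":
    rate = followed_by_rate(PATIENT_EVENTS, "A", "B")
    print(f"B followed A in {rate:.0%} of patients with A")
```

Note that patient P4, who has "B" without "A", does not affect the estimate; only patients who experienced "A" form the denominator.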

4. Data Analytics

Data analytics refers to analyzing raw datasets to gain insights, detect patterns and trends, and propose areas for improvement. Current and historical data are used to generate macro and micro insights and support decision-making at both the patient and organizational levels.

What are the Data Processing strategies in various clouds?

The data processing strategies in the cloud are:

1. AWS (Amazon Web Services)

Amazon EMR: A cloud big data platform that uses open-source analytics frameworks to run large-scale distributed data processing jobs, interactive SQL queries, and machine learning (ML) applications.

AWS Glue: A serverless data integration tool for finding, preparing, and combining data for analytics, machine learning, and application development.

Amazon Kinesis: Collects, processes, and analyzes real-time streaming data.

Amazon S3: An object storage service with industry-leading scalability, data availability, security, and performance.

2. GCP (Google Cloud Platform)

Dataproc: A fully managed, highly scalable service for running Apache Spark and Apache Hadoop clusters.

Cloud Data Fusion: A fully managed, cloud-native data integration service for building data pipelines at any scale.

Dataflow: A serverless service for unified stream and batch data processing.

Cloud Storage: An object storage service in Google Cloud.

3. Azure

HDInsight: A fully managed cloud service for open-source frameworks such as Hadoop and Spark, with a 99.9% SLA.

Data Factory: A data integration service for orchestrating and automating data movement and transformation.

Azure Synapse Analytics: An analytics service that brings together data integration, enterprise data warehousing, and big data analytics.

4. On-Premises

Hadoop: An open-source framework for distributed storage and processing of large datasets.

Spark: A distributed, open-source data processing engine that processes data in memory (RAM).

Data Lake: A centralized storage, processing, and security solution for massive amounts of structured, semi-structured, and unstructured data.

Hive: Open-source, distributed data warehouse software that runs on top of the Hadoop Distributed File System (HDFS).

Conclusion

The world is moving toward evidence-based medicine, which requires using all available clinical data and incorporating it into advanced analytics. Obtaining meaningful insights requires capturing and bringing together all the information about a particular patient. This helps eliminate costly testing and resource waste and supports proper prescribing of pharmaceuticals. It also contributes to the economy by using capital wisely. The possibilities of Big Data are vast, and new and innovative approaches to support the business will continue to emerge.