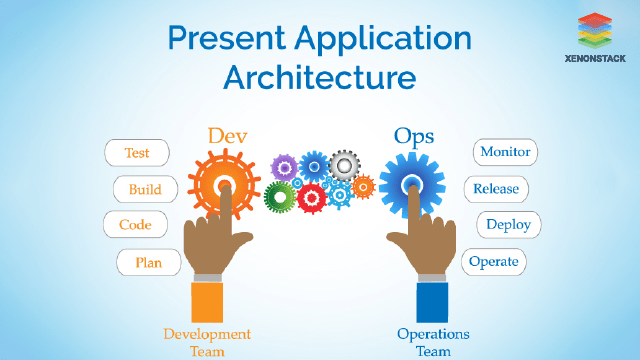

DevOps is a set of modern software engineering culture and practices in which the development and operations teams work hand in hand as one unit, rather than in separate silos as in traditional approaches. It builds on the Agile methodology and extends its collaborative approach from development into operations. Traditional methods were time-consuming, and the departments involved in software development lacked a shared understanding, so updates and bug fixes took longer, which ultimately led to customer dissatisfaction. Even a small change could require reworking the software from the beginning. DevOps processes address this by fostering a culture that enables fast, efficient, and reliable delivery of software to production.

What are the Business Benefits of DevOps?

Listed below are the benefits of DevOps:

- Maximised speed of product delivery.
- Enhanced customer experience.
- Reduced time to value.
- Fast flow of planned work into production.
- Automated tools at each stage.
- More stable operating environments.
- Improved communication and collaboration.
- More time to innovate.

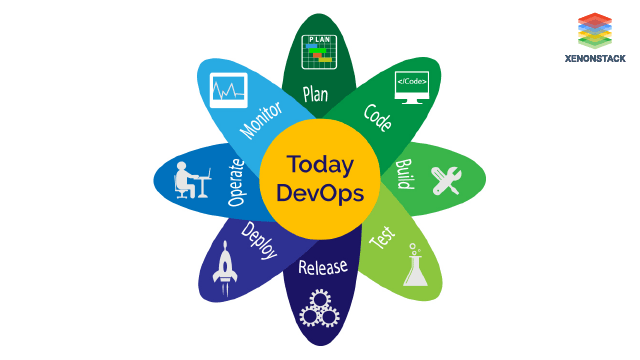

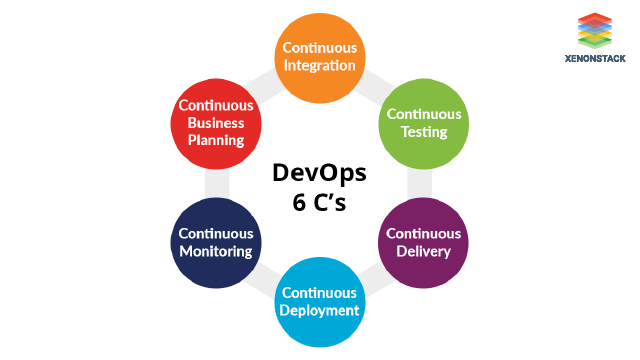

What are the 6 C's of DevOps Processes?

DevOps practices lead to high productivity, fewer bugs, improved communication, enhanced quality, faster problem resolution, greater reliability, and better, more timely software delivery.

Continuous Integration

In continuous integration, isolated changes are tested, and the results reported, as soon as they are added to the larger codebase. Continuous integration aims to give rapid feedback so that defects can be identified and corrected immediately. Developers commit changes to the shared repository frequently, often several times a day. Jenkins is a widely used CI server, and a typical pipeline follows three steps: build, test, and deploy. Other tools include BuildBot and Travis CI, but Jenkins is especially popular because it provides plugins for testing, reporting, notification, deployment, and more.
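Jenkins pipelines are normally written in a Jenkinsfile, so the sketch below is not Jenkins code. It is a minimal, tool-agnostic Python illustration of the build, test, deploy ordering, assuming each stage is a function that returns True on success; `run_pipeline` and the stage lambdas are hypothetical names. The key idea is fail-fast: a failed stage stops the pipeline, so broken code never reaches the deploy step.

```python
from typing import Callable

# A stage is a (name, action) pair; each action returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns the names of the stages that completed successfully.
    """
    completed: list[str] = []
    for name, action in stages:
        if not action():
            print(f"Stage '{name}' failed; later stages are skipped.")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Hypothetical stages: the test step fails, so deploy never runs.
    stages = [
        ("build", lambda: True),
        ("test", lambda: False),
        ("deploy", lambda: True),
    ]
    print(run_pipeline(stages))  # ['build']
```

In a real CI server, each action would run a build command or test suite and report its exit status instead of returning a constant.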

Continuous Testing

Continuous testing provides immediate feedback on the business risk associated with each software release. It is a challenging but essential part of software development, because the perceived quality of software depends heavily on testing. Testing helps developers balance quality and speed. Automated tools are used because it is easier to test small changes continuously than to test the complete software at the end. Selenium is a common tool for automating browser-based tests.
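Selenium drives tests through a real browser, which requires a browser and driver to be installed. As a lighter, self-contained illustration of the kind of automated check a pipeline runs on every change, here is a unit test written with Python's built-in `unittest` module. The `apply_discount` function is hypothetical business logic invented for this example.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        # 25% off 200.0 should give 150.0
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_invalid_percent_rejected(self):
        # Out-of-range input must fail loudly instead of returning a wrong price
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


if __name__ == "__main__":
    # exit=False returns control instead of calling sys.exit() after the report
    unittest.main(exit=False)
```

A CI server would run a suite like this on every commit and block the pipeline if any test fails.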

Continuous Delivery

Continuous delivery is the ability to get changes of all kinds, including new features, configuration changes, bug fixes, and experiments, into production. The motivation for continuous delivery is continuous, daily improvement: if there is an error in the production code, it can be fixed quickly. In other words, the application is developed and deployed rapidly, reliably, and repeatedly with minimal overhead.

Continuous Deployment

In continuous deployment, code is deployed to the production environment automatically once it passes all the test cases. Continuous versioning ensures that multiple code versions are available in the proper places. Every change that passes is released to production automatically, which can result in many deployments per day.
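The difference between continuous delivery and continuous deployment comes down to the release gate. The sketch below is a minimal model, assuming a build record with a `tests_passed` flag; `Build` and `should_release` are hypothetical names, not part of any CI tool. In both practices a failing build is blocked, but only continuous deployment releases a passing build without a manual approval step.

```python
from dataclasses import dataclass


@dataclass
class Build:
    version: str
    tests_passed: bool


def should_release(build: Build, continuous_deployment: bool, approved: bool = False) -> bool:
    """Decide whether a build is released to production.

    - A build that fails its tests is never released.
    - Continuous deployment: every passing build is released automatically.
    - Continuous delivery: a passing build is releasable, but someone
      must approve the actual release.
    """
    if not build.tests_passed:
        return False
    if continuous_deployment:
        return True
    return approved


if __name__ == "__main__":
    green = Build("1.2.0", tests_passed=True)
    print(should_release(green, continuous_deployment=True))                  # True
    print(should_release(green, continuous_deployment=False))                 # False
    print(should_release(green, continuous_deployment=False, approved=True))  # True
```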

Continuous Monitoring

Continuous monitoring is a form of reporting that helps developers and testers understand the performance and availability of their application, even before it is deployed to operations. The feedback it provides is essential for lowering the cost of errors and change. Nagios is one tool used for continuous monitoring.

Continuous Business Planning

Continuous business planning begins with determining the resources the application requires. Its goal is to define the application's results and capabilities.

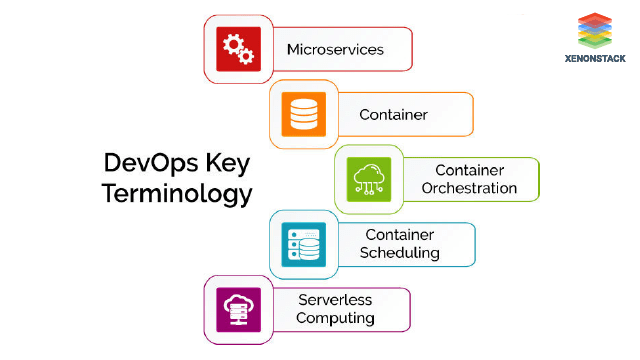

Key Technologies and Terminologies in its Processes

What are Microservices?

Microservices is an architectural style for developing a complex application by dividing it into smaller modules, or microservices. These microservices are loosely coupled, independently deployable, and each is owned by a small, focused team. With microservices, each team can decide how to design its service, which language to use, and which platform to run, deploy, and scale it on.

Advantages of Microservices Architecture

- Microservices can be developed in different programming languages.
- Errors in a module or microservice are easier to locate, which saves time.
- Smaller modules or microservices are easier to manage.
- When an update is required, it can be pushed to that particular microservice alone; in a monolithic application, the whole application would need to be updated.
- A particular microservice can be scaled up or down according to client needs without affecting the other microservices.
- It can also increase productivity.
- If one module goes down, the rest of the application can remain largely unaffected.

Disadvantages of Microservices Architecture

- When an application involves many microservices, managing them becomes difficult.
- Microservices tend to consume more memory, because each service runs in its own process.
- In some cases, testing microservices becomes difficult.
- In production, deploying and managing a system composed of many different services adds complexity.

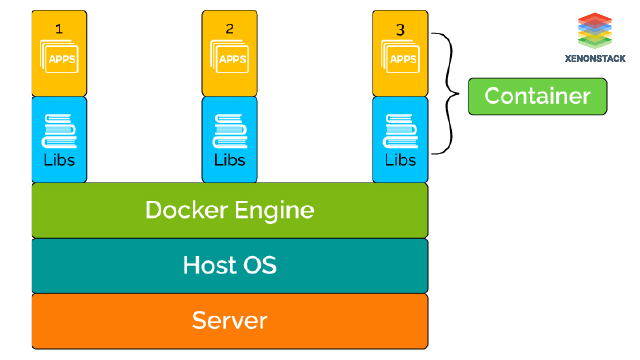

Overview of Containers and Docker

What are Containers?

Previously, code that ran fine in the developer's environment often failed in production because of dependency issues. Virtual machines helped, but they are heavyweight: each VM runs a full guest operating system, which wastes RAM and leaves the processor underutilized, so VMs are not a good fit when an application needs many microservices (say, more than 50). Containers solve this by packaging an application together with its dependencies while sharing the host operating system's kernel, which makes them much lighter than VMs.

What are the advantages of using Containers?

- Resource waste (RAM, processor, and disk space) is reduced, because these resources do not need to be pre-allocated; they are consumed according to the application's requirements.
- Containers are easy to share.
- Docker provides a platform to manage the lifecycle of containers.
- Containers provide a consistent computing environment.
- Separate applications can run in containers on a single shared operating system.

Container Orchestration

Container orchestration is the automated arrangement, coordination, and management of containers and the resources they consume when deploying a multi-container application.

Container Orchestration Features

There are various features of Orchestration. Some of them are given below:

- Cluster Management - The developer only launches a number of container instances and specifies what they need to run; the orchestrator administers all of the containers.
- Task Definitions - The developer defines a task, specifying the number of containers it requires and their dependencies. A single task definition can launch many tasks.
- Programmatic Control - With simple API calls, tasks can be registered and deregistered, and Docker containers can be launched and stopped.
- Scheduling - Container scheduling places containers on nodes in the cluster according to the resources they need and the resources available.
- Load Balancing - Distributes traffic across the containers in a deployment.
- Monitoring - Tracks CPU and memory utilization of running tasks and raises an alert when containers need scaling.
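The Scheduling feature in the list above can be illustrated with a simplified first-fit placement: each container requests CPU and memory, and it goes to the first node that still has room. This is an assumption-laden sketch, not how any particular orchestrator works; the Kubernetes scheduler, for instance, filters feasible nodes and then scores them instead of taking the first fit. `Node` and `schedule` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu: float   # CPU cores still available
    free_mem_mb: int  # memory still available, in MiB
    containers: list[str] = field(default_factory=list)


def schedule(container: str, cpu: float, mem_mb: int, nodes: list[Node]) -> Optional[str]:
    """Place a container on the first node with enough free CPU and memory.

    Returns the chosen node's name, or None if no node has room.
    """
    for node in nodes:
        if node.free_cpu >= cpu and node.free_mem_mb >= mem_mb:
            # Reserve the requested resources on this node.
            node.free_cpu -= cpu
            node.free_mem_mb -= mem_mb
            node.containers.append(container)
            return node.name
    return None  # a real orchestrator would keep the container pending


if __name__ == "__main__":
    cluster = [Node("node-a", free_cpu=1.0, free_mem_mb=512),
               Node("node-b", free_cpu=4.0, free_mem_mb=4096)]
    print(schedule("web", cpu=2.0, mem_mb=1024, nodes=cluster))   # node-b
    print(schedule("cache", cpu=0.5, mem_mb=256, nodes=cluster))  # node-a
    print(schedule("db", cpu=8.0, mem_mb=1024, nodes=cluster))    # None
```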

Containers give DevOps teams OS-level virtualization, so OS distributions and the underlying infrastructure are no longer their main concern.

What is Docker?

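Docker is a platform for building, shipping, and running lightweight containers, which occupy much less space than virtual machines. Linux containers run natively on a Linux host such as Ubuntu; on Windows and macOS, Docker Desktop runs them inside a lightweight Linux virtual machine.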

What is Docker Hub?

Docker Hub is a cloud-hosted registry service provided by Docker, where we can push our own images or pull images from public repositories.

- Docker Registry - The storage component for Docker images. Images can be stored in a public or private repository; a private registry lets us integrate image storage with our in-house development workflow and control where images are stored.
- Docker Images - The read-only template used to create containers. Images are built by Docker users and stored on Docker Hub or in a local registry.
- Docker Containers - A container is a runtime instance of a Docker image.

In short, Docker helps solve environment-related application issues, provides application isolation, and enables faster development.

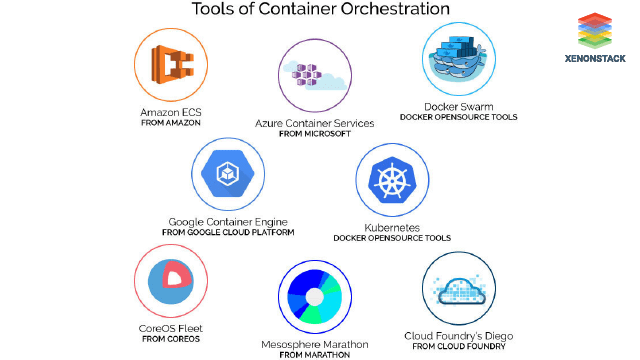

What are the best Container Orchestration Tools?

Various tools are used for container orchestration. Some are open source, such as Kubernetes and Docker Swarm, which can be run on private infrastructure; others are commercial managed services, such as Amazon ECS, Google Container Engine, and Microsoft's Azure Container Service. Some of these tools are briefly explained below.

Amazon ECS

Amazon ECS (Elastic Container Service) is an Amazon Web Services product that provides a runtime environment for Docker containers and orchestrates them. It allows Dockerized applications to run on top of Amazon's infrastructure.

Azure Container Services

Microsoft's Azure Container Service offers similar functionality and has excellent support for the .NET ecosystem.

Docker Swarm

Docker Swarm is Docker's own open-source container orchestration tool and part of the Docker ecosystem. It can run multiple Docker engines as a single virtual Docker host. A swarm consists of manager and worker nodes that run different orchestration services: managers distribute tasks across the cluster, and worker nodes run the containers that managers assign to them.

Google Container Engine

Google Container Engine (now called Google Kubernetes Engine, or GKE) lets us run Docker containers on Google Cloud Platform. It schedules containers into a cluster and manages them according to our requirements. It is built on top of Kubernetes, an open-source container orchestration tool.

Kubernetes

Kubernetes is one of the most mature orchestration systems for Docker containers. It is an open-source system for automating the deployment and management of containerized applications, and it scales applications according to the user's needs. In short, it provides the basic mechanisms for deploying, maintaining, and scaling applications.
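As an example of how Kubernetes scales an application, the Horizontal Pod Autoscaler documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that ratio and clamps the result between minimum and maximum replica counts. The default bounds of 1 and 10 are assumptions for this example, and the real controller also applies a tolerance and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count from the HPA ratio rule, clamped to [min_replicas, max_replicas].

    Sketch only: omits the HPA's tolerance band and stabilization windows.
    """
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 60% target -> ceil(4.5) = 5 pods
    print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
    # 4 pods at 20% CPU against a 60% target -> ceil(1.33...) = 2 pods
    print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```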

CoreOS Fleet

CoreOS Fleet is a container management tool that lets you deploy Docker containers on hosts in a cluster and distribute services across a cluster.

Cloud Foundry's Diego

Cloud Foundry's Diego is a container management system combining schedulers, runners, and health managers. It is a rewrite of the Cloud Foundry runtime.

Mesosphere Marathon

Mesosphere Marathon is a container orchestration framework for Apache Mesos designed to launch long-running applications. It offers vital features for running applications in a clustered environment.

DevOps encourages collaboration and communication between development and operations teams in all stages of the Software Development Life Cycle.

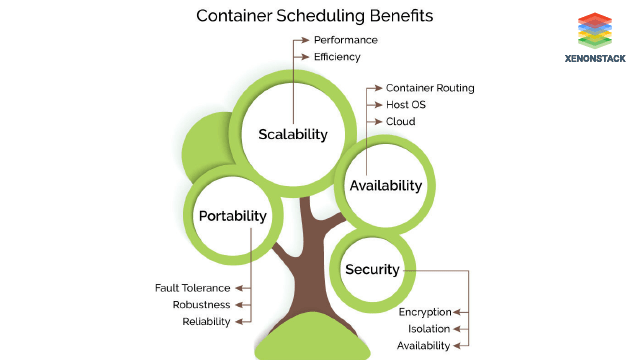

What is Container Scheduling?

Container scheduling is one of the key features of container orchestration. Scheduling means arranging, optimizing, and controlling tasks and resources. It covers upgrading and downgrading resources, rescheduling, placement, scaling, and replicating the resources needed to run a container. Container scheduling also helps with two important aspects:

- Auto-Recovery - Unhealthy containers that the application needs in order to work properly are detected and replaced automatically.
- Container Deployments - When a new version of a task definition is uploaded, the scheduler automatically stops the containers running the previous version and starts new containers as defined in the new version, which makes it easy to update containers to the latest version.
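Both aspects can be modeled as one reconciliation step: compare what is running with what is desired, and replace anything that is unhealthy or running an outdated image. This is a minimal sketch; `Container`, `start_container`, and `reconcile` are hypothetical stand-ins for a real orchestrator and container runtime, and real rollouts usually replace containers gradually (a rolling update) instead of all at once as this sketch does.

```python
from dataclasses import dataclass
from itertools import count

_next_id = count(1)


@dataclass
class Container:
    id: str
    image: str
    healthy: bool = True


def start_container(image: str) -> Container:
    """Hypothetical stand-in for asking a container runtime to start a container."""
    return Container(id=f"new-{next(_next_id)}", image=image)


def reconcile(running: list[Container], image: str, desired: int) -> list[Container]:
    """One pass of a reconciliation loop.

    Keeps containers that are healthy and run the desired image, replaces the
    rest (auto-recovery and version updates), and trims any surplus.
    """
    kept = [c for c in running if c.healthy and c.image == image]
    while len(kept) < desired:
        kept.append(start_container(image))
    return kept[:desired]


if __name__ == "__main__":
    pods = [Container("a", "app:1"), Container("b", "app:1", healthy=False), Container("c", "app:1")]
    pods = reconcile(pods, "app:1", desired=3)  # unhealthy "b" is replaced
    print([c.id for c in pods])                 # ['a', 'c', 'new-1']
    pods = reconcile(pods, "app:2", desired=3)  # new version: all three are replaced
    print([c.image for c in pods])              # ['app:2', 'app:2', 'app:2']
```

An orchestrator runs this kind of comparison repeatedly, so the cluster keeps converging on the desired state.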

Container orchestration and container scheduling are often assumed to be the same, but they are different: container scheduling is one feature of container orchestration. The scheduler's job is to assign work to containers, while orchestration ensures that the resources needed to perform that work are available when needed. For example, when the scheduler assigns work such as load balancing, failure recovery, or scaling, orchestration creates and maintains the environment in which those services are available.

Continuous Monitoring and Alerting

Monitoring means continuously analyzing resources and their metrics, such as CPU, host, memory, storage, and network, and making decisions accordingly. For example, if CPU utilization goes beyond its limit, we act on it; if a host stops working, we replace or troubleshoot it. Monitoring therefore works as feedback from the production environment. By monitoring the application, we can analyze its performance and usage patterns, detect errors, and correct them as soon as they are found.
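Monitoring becomes alerting once a metric is compared against a limit. The sketch below checks recent CPU utilization samples and returns an alert message when their average exceeds a threshold; the 80% threshold, the three-sample window, and the `check_cpu` name are assumptions for illustration. Tools such as Nagios express the same idea through their own check and threshold configuration.

```python
from statistics import mean
from typing import Optional

CPU_THRESHOLD = 80.0  # percent; an assumed limit, tune per service


def check_cpu(samples: list[float], threshold: float = CPU_THRESHOLD, window: int = 3) -> Optional[str]:
    """Return an alert message if average CPU over the last `window` samples exceeds `threshold`.

    Averaging a short window avoids alerting on a single momentary spike.
    """
    if len(samples) < window:
        return None  # not enough data yet
    recent = mean(samples[-window:])
    if recent > threshold:
        return f"ALERT: average CPU {recent:.1f}% over last {window} samples exceeds {threshold:.0f}%"
    return None


if __name__ == "__main__":
    print(check_cpu([50.0, 85.0, 90.0, 95.0]))  # prints an ALERT (average 90.0%)
    print(check_cpu([50.0, 95.0, 40.0, 45.0]))  # None (one spike, average 60.0%)
```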

What are the benefits of Continuous Monitoring?

Given below are the benefits of Continuous Monitoring:

- Effective monitoring lets DevOps teams deliver at speed, get feedback from production, and increase customer satisfaction, acquisition, and retention.
- Monitoring is not only for finding problems: analyzing usage data also surfaces new ideas and features that can add value to the application.
- Feedback from monitoring keeps the team focused on customer satisfaction, helping it deliver on time and aim to exceed customer expectations.
- An application can be monitored in several ways, including usage monitoring, availability monitoring, and performance monitoring.

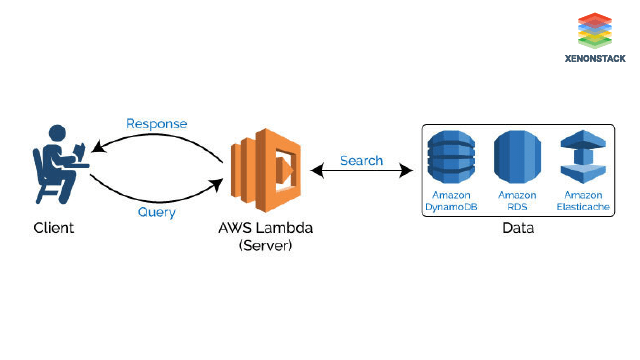

Serverless Computing Architecture

Serverless computing is a technology that allows developers to focus only on writing value-adding code instead of provisioning or managing servers. It relieves developers from worrying about infrastructure and operational details such as scalability, high availability, and security, and lets them do what they enjoy: writing code and creating the "next big thing", while someone else manages the servers and other infrastructure.

Serverless computing simply means building and running applications without thinking about servers. "Serverless" doesn't mean servers are no longer involved; it means they are hidden from developers. With serverless computing, developers shift their focus from the server level to the code level. A key benefit is that it encourages a microservices style, i.e., dividing complex problems into smaller modules and then solving each module.

Understanding AWS Lambda

AWS Lambda is one of the most widely used platforms for serverless computing. The developer has just one task, i.e., to provide the code; AWS Lambda takes care of the rest, including provisioning and managing servers.
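To make "just provide the code" concrete, here is a minimal Python handler. Lambda invokes a handler function with two arguments: the event that triggered it and a context object with runtime information. `lambda_handler` is the default handler name for Python functions created in the Lambda console, but the name is configurable; the `name` event field is a hypothetical input for this example.

```python
def lambda_handler(event, context):
    """Handler invoked by Lambda with the triggering event and a runtime context object.

    `event["name"]` is a hypothetical field; the context is unused here.
    """
    name = event.get("name", "world")
    return {"message": f"Hello, {name}!"}


if __name__ == "__main__":
    # Local smoke test: call the handler directly with a plain dict and no context.
    print(lambda_handler({"name": "DevOps"}, None))
```

Because the handler is an ordinary function, it can be unit tested locally with a plain dict and `None` as the context before it is deployed.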

AWS Lambda Features For Serverless Computing

Given below are the AWS Lambda features for serverless computing:

- It supports several languages, including Node.js, Java, C#, and Python.
- You pay only for the compute time your code consumes, not for the time when it is not running.
- Lambda allocates CPU power and other resources in proportion to the memory configured for the function.
- It scales the application automatically and continuously in response to incoming requests.

Holistic Approach Toward DevOps Processes

We started our transformation toward a DevOps strategy by integrating DevOps tools, processes, and data into our work culture. In parallel, we began adopting different infrastructure architectures, such as a private cloud, Docker, Apache Mesos, and Kubernetes. During the transformation toward Agile and DevOps, we realized that we needed a platform for defining workflows with different integrations.


