Overview of Computer Vision

As artificial intelligence makes breakthrough advances, computer vision has expanded tremendously across sensitive fields such as healthcare, the automotive industry and security. Capabilities such as object recognition are redefining the world by driving innovations that were once the stuff of science fiction. This progress brings a pressing concern: the efficiency, and therefore the energy consumption, of these complex systems. Computer vision models, especially deep learning models, can be very computationally costly. This blog covers the history and evolution of computer vision, the modern need for efficient models, current challenges and their solutions, and recent trends in the field.

The Historical Context of Computer Vision

Early Days: The Birth of Computer Vision

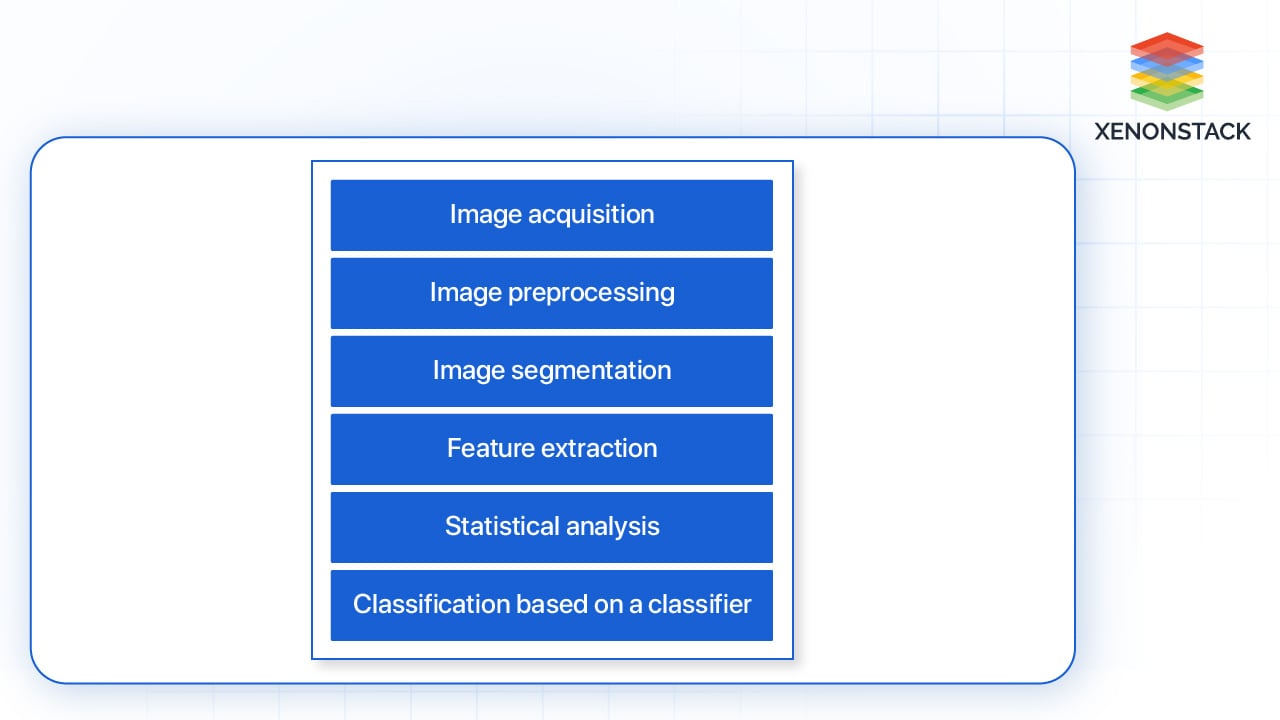

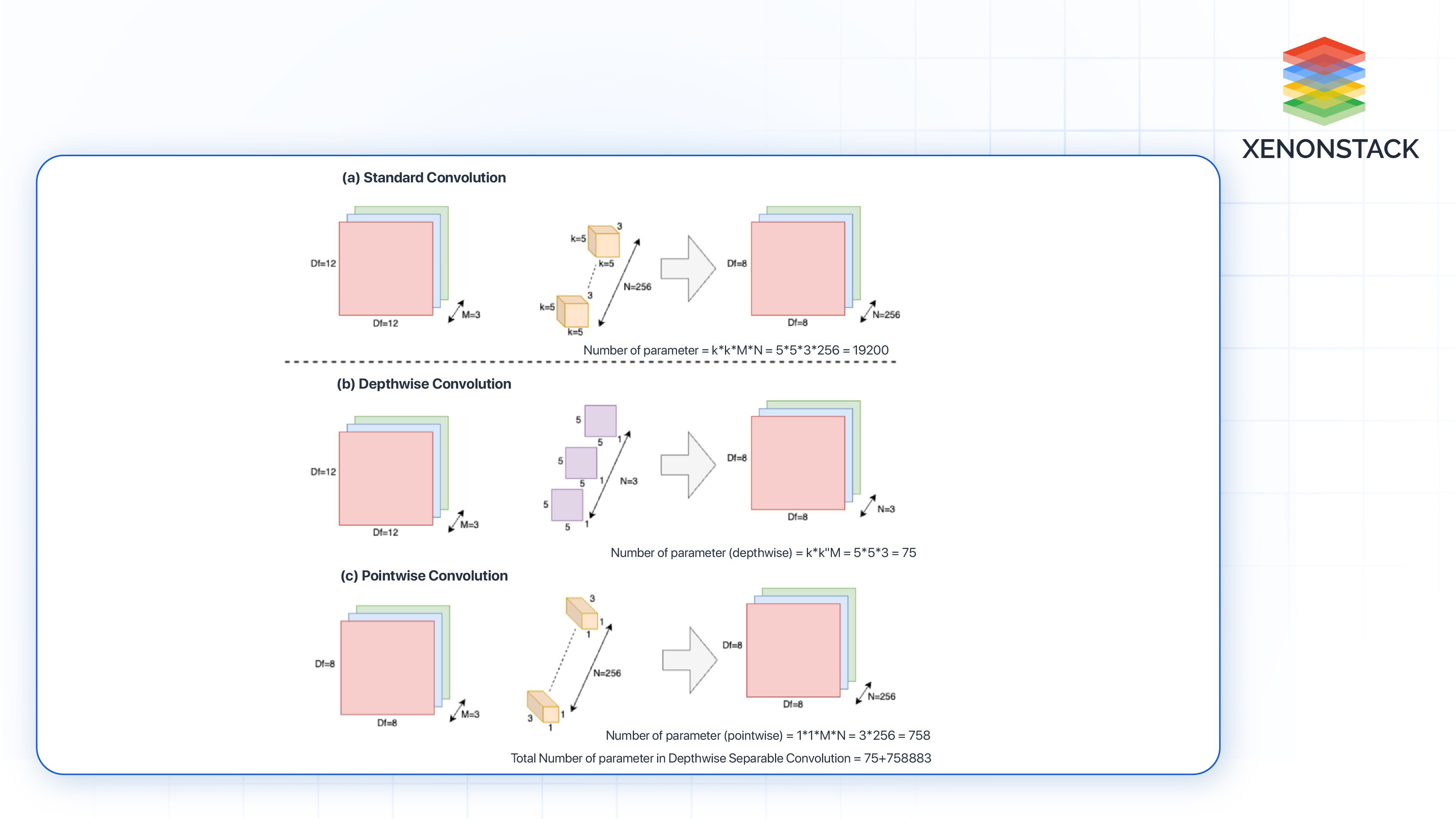

Figure 1: Different processes in Image Processing

Computer vision began to emerge as a field in the 1960s, with researchers such as Larry Roberts and, later, David Marr mapping out how a computer could understand its visual environment. The first problems researchers tackled were low-level tasks such as edge detection and pattern recognition. These pioneering efforts were constrained by the limited technology of the time.

The 1980s and 1990s: Neural Nets and New Generation Tools

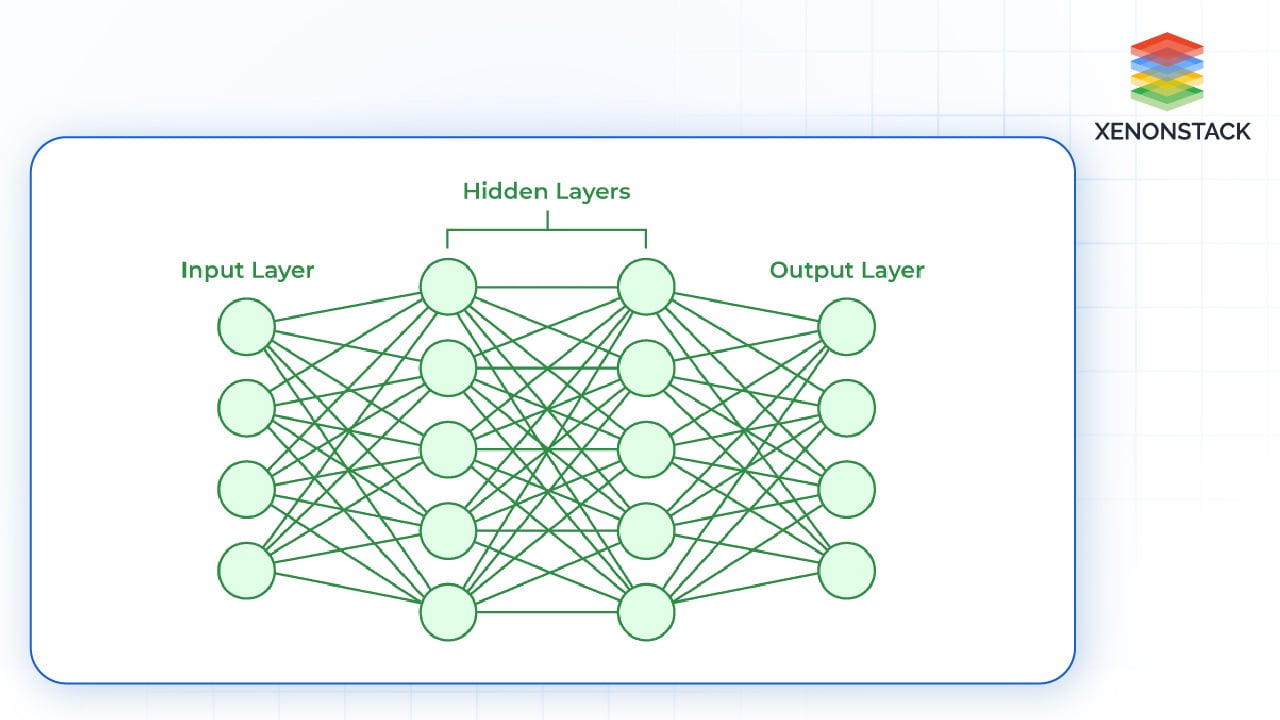

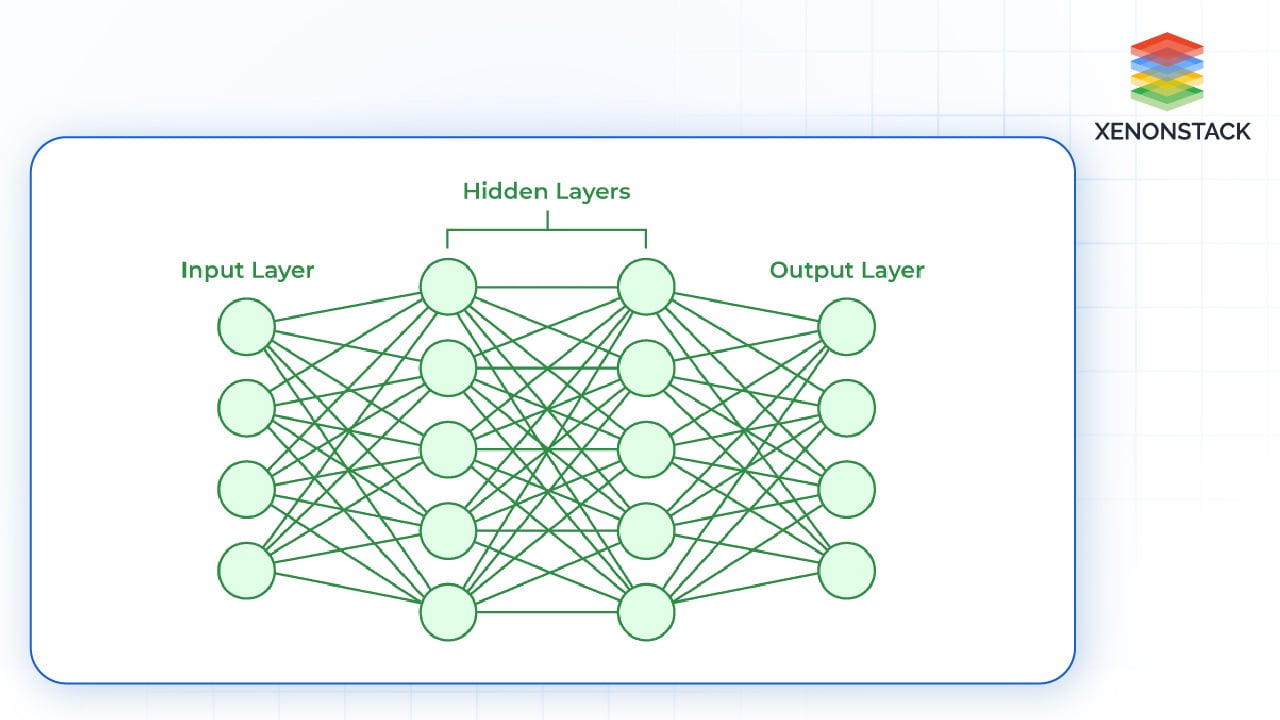

Figure 2: Diagram of a Basic Neural Network

The 1980s brought another breakthrough: the application of neural networks to computer vision, as researchers began using artificial neural networks (ANNs) to identify patterns in images. The 1990s added more elaborate techniques, including template matching and feature-based approaches, which improved the ability to detect objects in images. However, these models were still rather primitive and struggled with complex visual tasks.

The Deep Learning Revolution

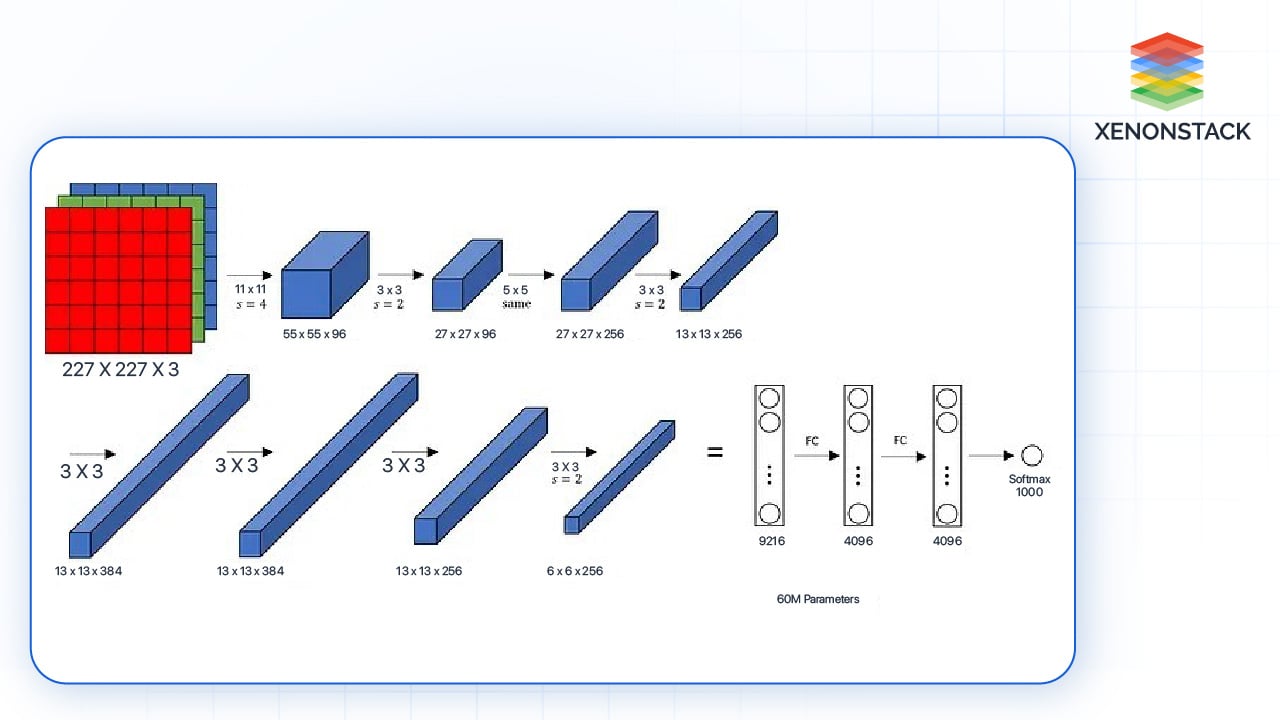

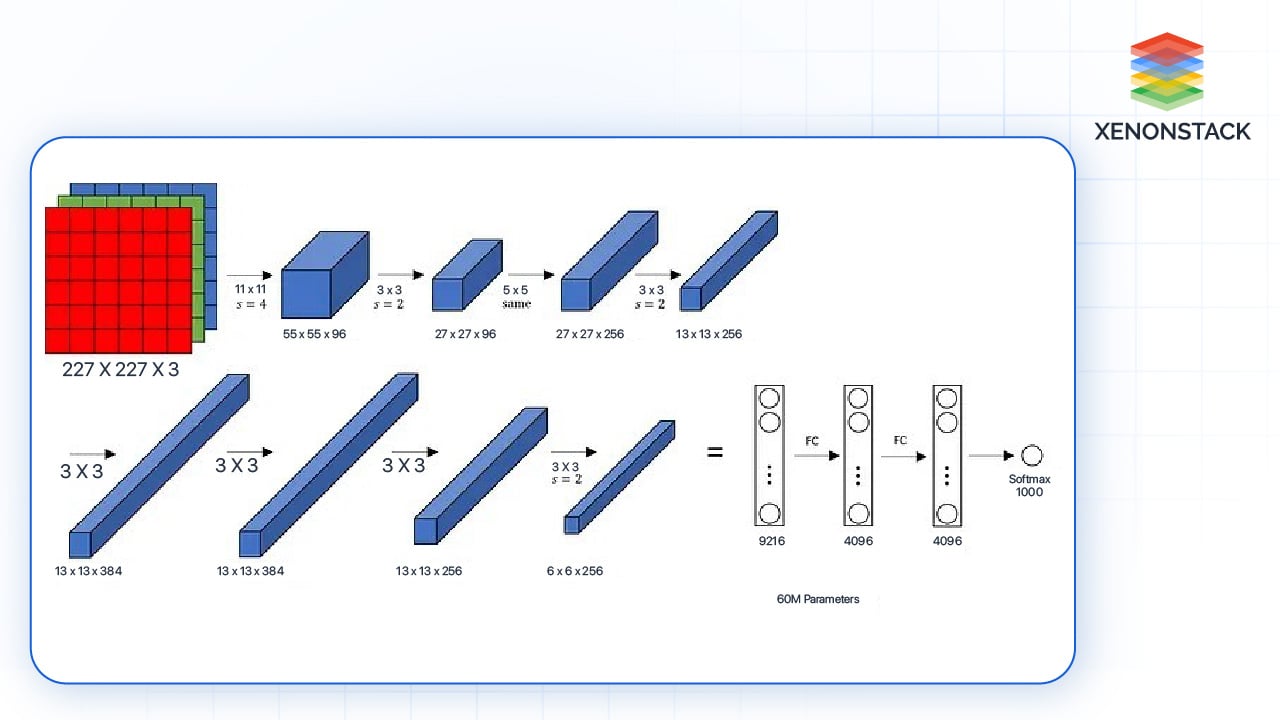

Figure 3: Architecture of AlexNet

Computer vision received another boost in the early 2010s with the resurgence of deep learning. In 2012, AlexNet, a deep convolutional neural network created by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) by a wide margin. The result demonstrated how much convolutional neural networks (CNNs) could expand the field's capabilities.

Several other deep learning architectures followed, such as VGGNet, GoogLeNet, and ResNet. These models improved the accuracy of image classification, object detection, and segmentation. Their performance came at a cost, however: the computational resources needed to train and run them increased dramatically, heightening concerns about energy use and environmental impact.

Why Energy-Efficient Models Are Essential

As more computer vision applications are deployed, many of them on mobile and edge devices, the need for energy-efficient models has never been greater. Several factors drive this demand:

- Environmental Concerns

Growing concern about our planet's environment has made the environmental impact of artificial intelligence a more prominent topic worldwide. Many deep learning models are hosted in data centres, which inevitably consume large amounts of energy. The International Energy Agency (IEA) estimated that data centres consumed about 1% of global electricity in 2020, a share that is set to rise. This growth places an obligation on the AI community to ensure that advances in computer vision do not contribute to environmental degradation.

- Economic Considerations

Excessive energy use harms the environment, and it also drives up operational costs for organizations. Executives deploying computer vision systems need to recognize that they are running heavy, power-hungry models, and that when margins are tight, power costs can balloon. Energy-efficient models consume less power and therefore cost less to run, which makes them attractive to a wide range of businesses.

- Device Limitations

Handheld and mobile devices, sensors in smart objects, and other embedded systems have limited power budgets, so running computationally demanding computer vision models on them is challenging. Energy-efficient models help address this, making it possible to perform complex visual analysis in real time on power-constrained devices.

- User Experience

In applications that require real-time performance, such as self-driving cars or augmented reality (AR), energy-efficient models improve the user experience because devices stay responsive instead of freezing while the application runs. Users expect high performance from their devices, and power consumption is one of the key factors that determines it.


Recent Advances in Energy-Efficient Computer Vision

Over the years, researchers and practitioners have developed a range of methods for building and deploying energy-efficient computer vision models. Here are some of the key advances:

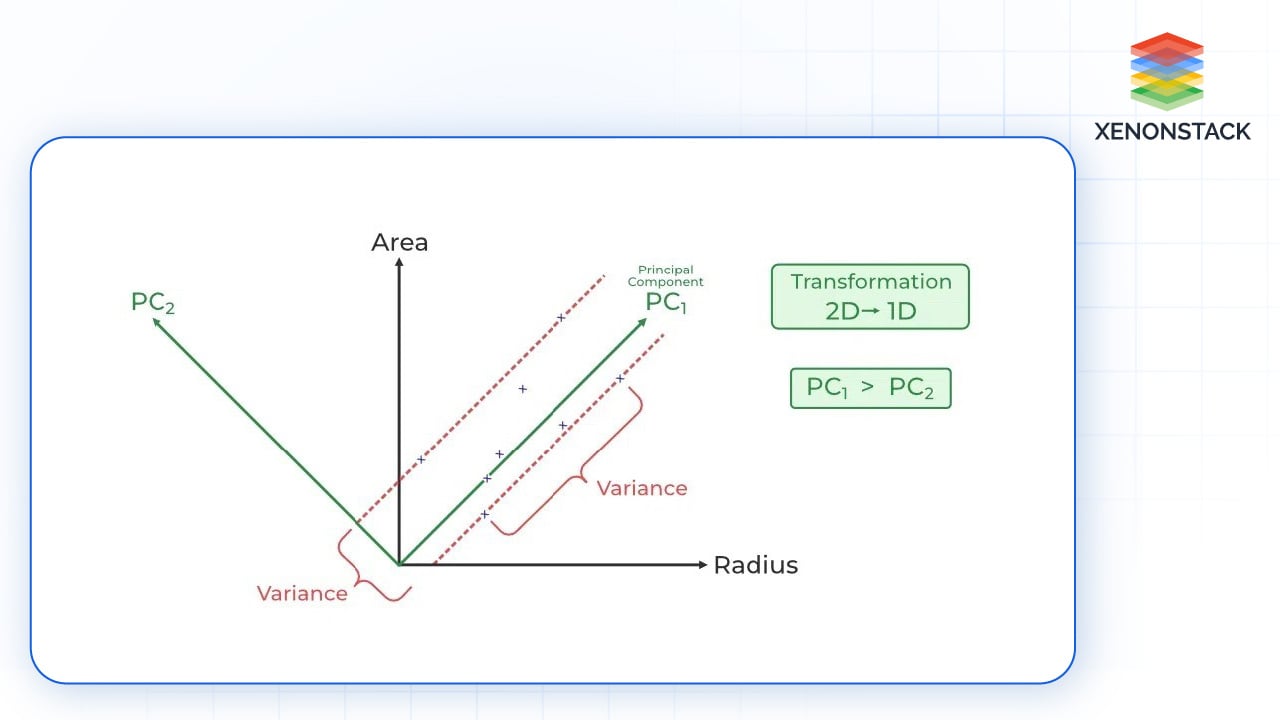

Optimization Techniques

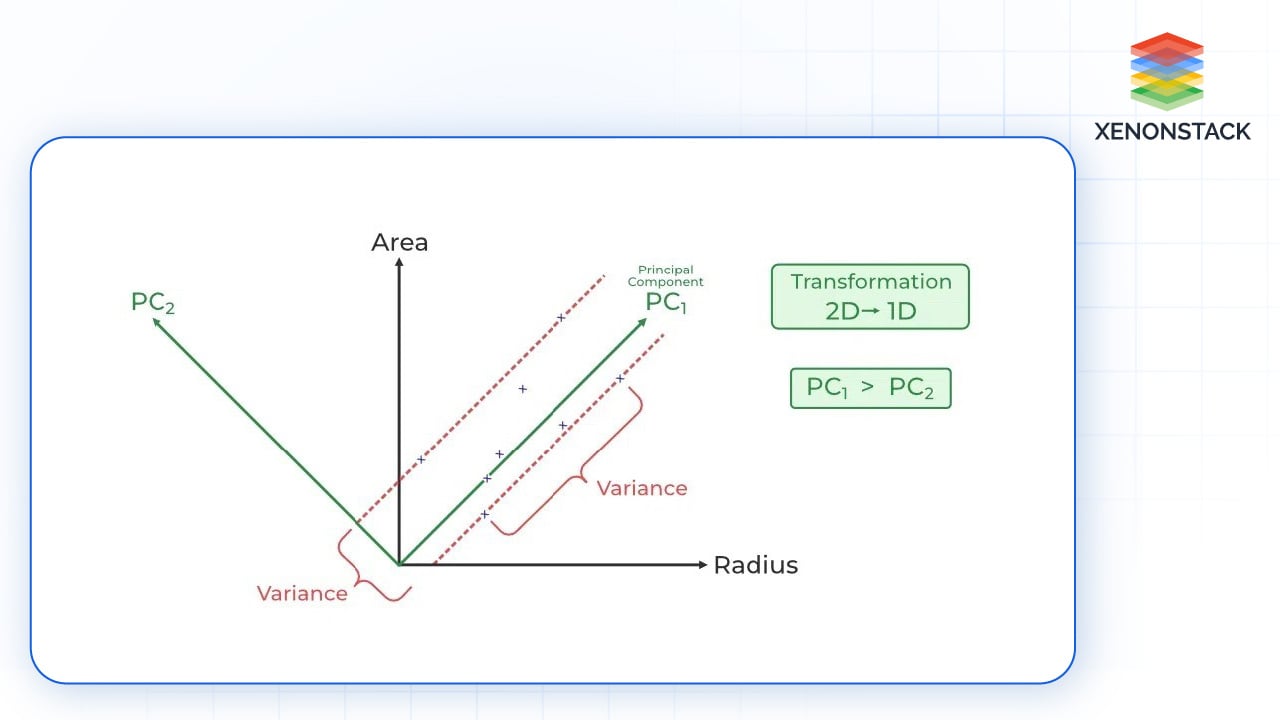

In the early days of machine learning and deep learning, the first efforts to improve energy efficiency focused on refining algorithms and model structures. Techniques such as feature selection and dimensionality reduction kept models from being overwhelmed by unnecessary inputs. For example, when a dataset has a large number of features, Principal Component Analysis (PCA) can project them onto a small set of components that capture most of the variance in the data; this reduces model complexity and thus saves power at inference time.

Figure 4: Principal Component Analysis
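
As a minimal sketch of the PCA step, assuming NumPy, the principal components can be read off the singular value decomposition of the centred data. The function name `pca_reduce` and the synthetic dataset are illustrative assumptions, not part of any particular library:

```python
import numpy as np

def pca_reduce(X: np.ndarray, k: int) -> tuple[np.ndarray, np.ndarray]:
    """Project X (n_samples x n_features) onto its top-k principal components.

    Returns the reduced data (n_samples x k) and the fraction of the total
    variance captured by each kept component.
    """
    # Centre each feature so the components describe variance around the mean.
    Xc = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = (S ** 2) / np.sum(S ** 2)
    return Xc @ Vt[:k].T, explained[:k]

rng = np.random.default_rng(0)
# Hypothetical data: 200 samples with 50 features that really vary along ~3 directions.
latent = rng.normal(size=(200, 3))
mixing = rng.normal(size=(3, 50))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 50))

Z, ratio = pca_reduce(X, k=3)
print(Z.shape)        # (200, 3)
print(ratio.sum())    # close to 1.0: three components keep almost all the variance
```

A downstream model that consumes `Z` instead of `X` works with 3 inputs per sample rather than 50, which is where the savings at inference time come from.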

Model Compression

The late 2010s brought a shift toward model compression. Researchers applied quantization and pruning to create smaller models that are nearly as accurate as the originals, and knowledge distillation to train compact “student” models from larger “teacher” models without a large loss in accuracy.
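
Pruning, mentioned above, can take many forms. The sketch below shows one common variant, unstructured magnitude pruning, assuming NumPy: the weights with the smallest absolute values are set to zero. The helper `magnitude_prune` and the random layer are hypothetical, and in practice the pruned network is usually fine-tuned afterwards to recover accuracy:

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, sparsity: float):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    Returns (pruned_weights, mask), where mask is True for the weights that are kept.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # number of weights to remove
    if k == 0:
        return weights.copy(), np.ones(weights.shape, dtype=bool)
    magnitudes = np.abs(weights).ravel()
    # k-th smallest magnitude; everything at or below it is pruned (ties included).
    threshold = np.partition(magnitudes, k - 1)[k - 1]
    mask = np.abs(weights) > threshold
    return weights * mask, mask

rng = np.random.default_rng(0)
W = rng.normal(size=(64, 128))  # hypothetical layer weights
W_pruned, mask = magnitude_prune(W, sparsity=0.9)
print(f"kept {mask.mean():.1%} of the weights")
```

Zeroed weights only save energy when they are stored in a sparse format or skipped by the hardware; structured pruning (removing whole channels or filters) is often preferred for that reason.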

- Quantization reduces the numerical precision of a model's weights and activations. For example, converting 32-bit floating-point numbers to 8-bit integers shrinks the stored weights roughly fourfold and speeds up inference, usually with an acceptable drop in accuracy.

Figure 5: Implementing Pruning on a neural network

- Knowledge distillation is a process in which a small model, termed the “student,” learns to replicate the behaviour of a large model known as the “teacher.” This transfers knowledge from a complex model to a simpler one, which can perform competitively with the teacher at a much lower computational cost.
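
The float32-to-int8 quantization described above can be sketched with a simple per-tensor affine scheme, assuming NumPy. The functions `quantize_int8` and `dequantize` are hypothetical illustrations; production toolchains add calibration, per-channel scales, and integer arithmetic kernels:

```python
import numpy as np

def quantize_int8(x: np.ndarray):
    """Per-tensor affine quantization of a float array to int8.

    Returns (q, scale, zero_point) such that x is approximately (q - zero_point) * scale.
    """
    qmin, qmax = -128, 127
    # Make sure the range contains 0 so that zero is represented exactly.
    x_min = min(float(x.min()), 0.0)
    x_max = max(float(x.max()), 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:  # all-zero tensor: any positive scale works
        scale = 1.0
    zero_point = int(round(qmin - x_min / scale))
    q = np.clip(np.round(x / scale) + zero_point, qmin, qmax).astype(np.int8)
    return q, scale, zero_point

def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Map int8 codes back to approximate float values."""
    return (q.astype(np.float32) - zero_point) * scale

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.05, size=(256, 256)).astype(np.float32)  # hypothetical weights
q, scale, zp = quantize_int8(w)
w_hat = dequantize(q, scale, zp)

print(w.nbytes // q.nbytes)        # 4: int8 storage is a quarter of float32
print(np.abs(w - w_hat).max())     # worst-case error, about half a quantization step
```

Each weight is rounded to the nearest of 256 evenly spaced levels, so the reconstruction error per weight is bounded by roughly `scale / 2`; whether that costs noticeable accuracy depends on the model and is usually checked on a validation set.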

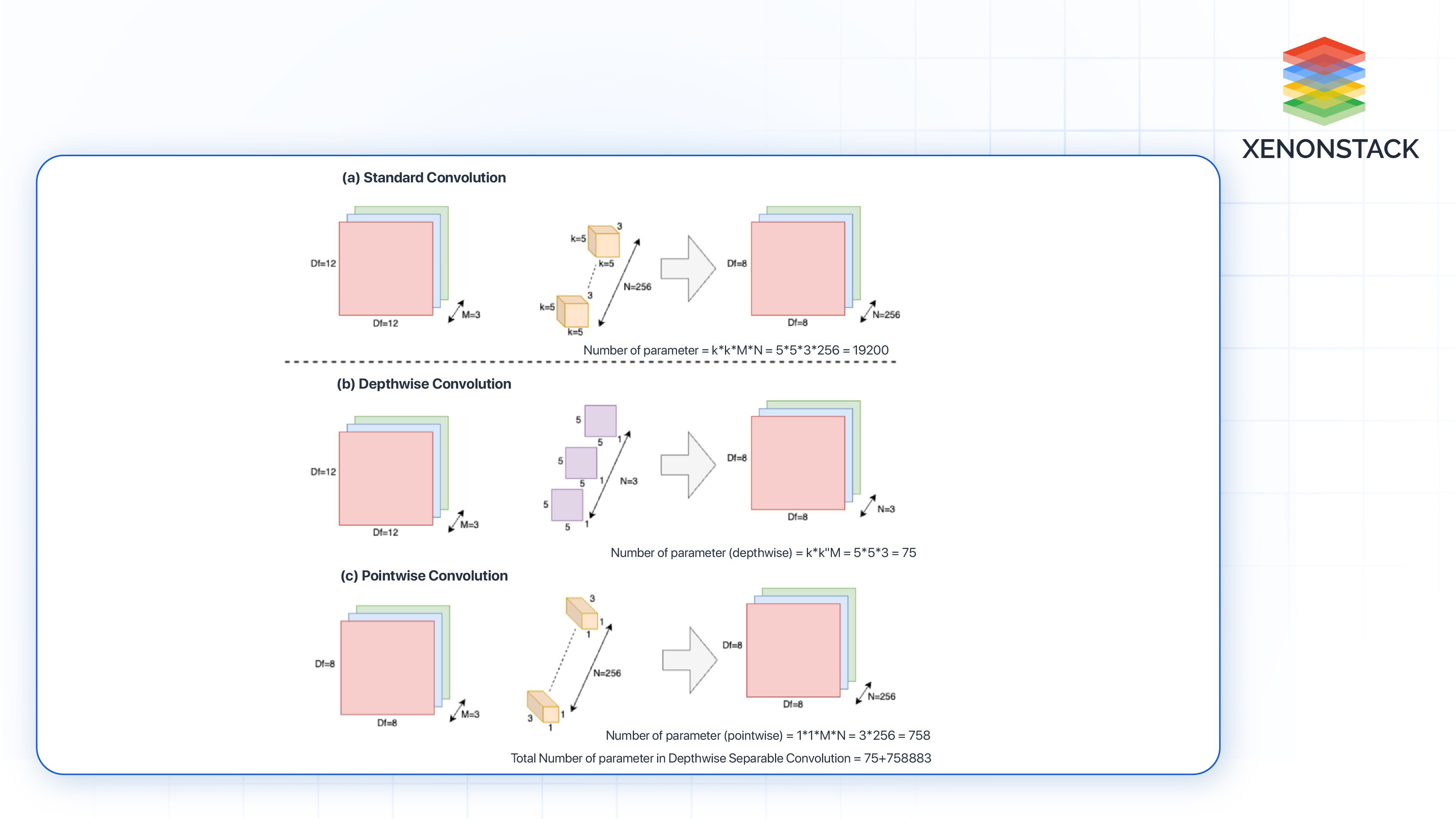

Efficient Network Architectures

Research into energy efficiency has also produced neural network architectures built for it from the ground up. Models such as MobileNet, SqueezeNet, and EfficientNet are designed to need far less computation while maintaining competitive accuracy. MobileNet, for example, replaces standard convolutions with depthwise separable convolutions (Figure 6).

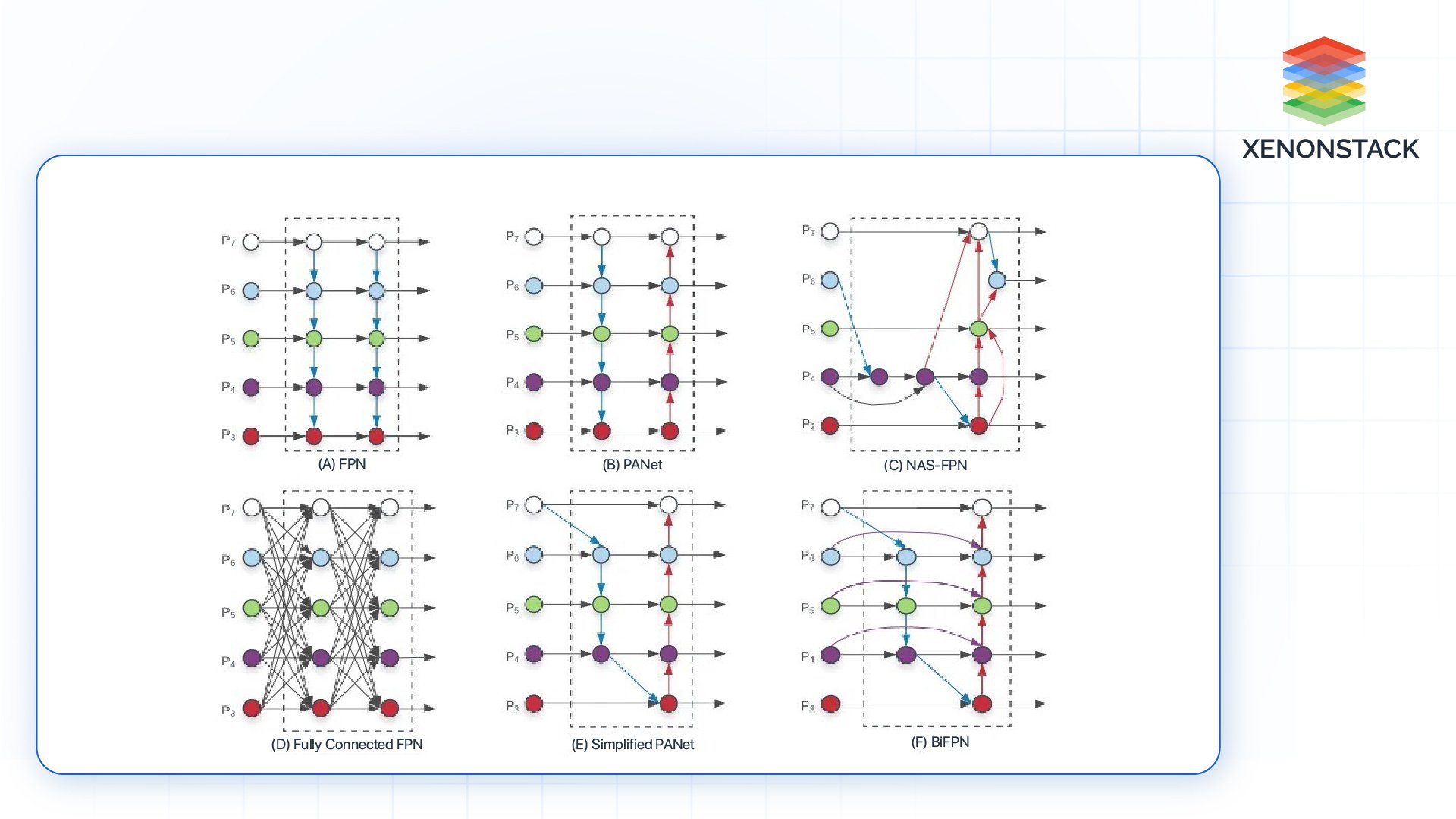

Figure 6: Explaining (a) Standard Convolution and (b)&(c) Depthwise Separable Convolutions
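
To see why depthwise separable convolutions save computation, it helps to count multiply-accumulate operations (MACs). The sketch below assumes stride 1 and "same" padding, and the layer dimensions are hypothetical:

```python
def standard_conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a k x k convolution producing an h x w x c_out output."""
    return h * w * c_out * c_in * k * k

def depthwise_separable_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1 x 1 convolution mixes the channels
    return depthwise + pointwise

# Hypothetical layer: 56 x 56 feature map, 128 -> 128 channels, 3 x 3 kernels.
std = standard_conv_macs(56, 56, 128, 128, 3)
sep = depthwise_separable_macs(56, 56, 128, 128, 3)
print(std, sep)                         # 462422016 54992896
print(f"{std / sep:.1f}x fewer MACs")   # 8.4x fewer MACs
```

Dividing the two expressions gives a reduction factor of 1 / (1/c_out + 1/k²), so with 3 x 3 kernels the saving approaches 9x as the number of output channels grows.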

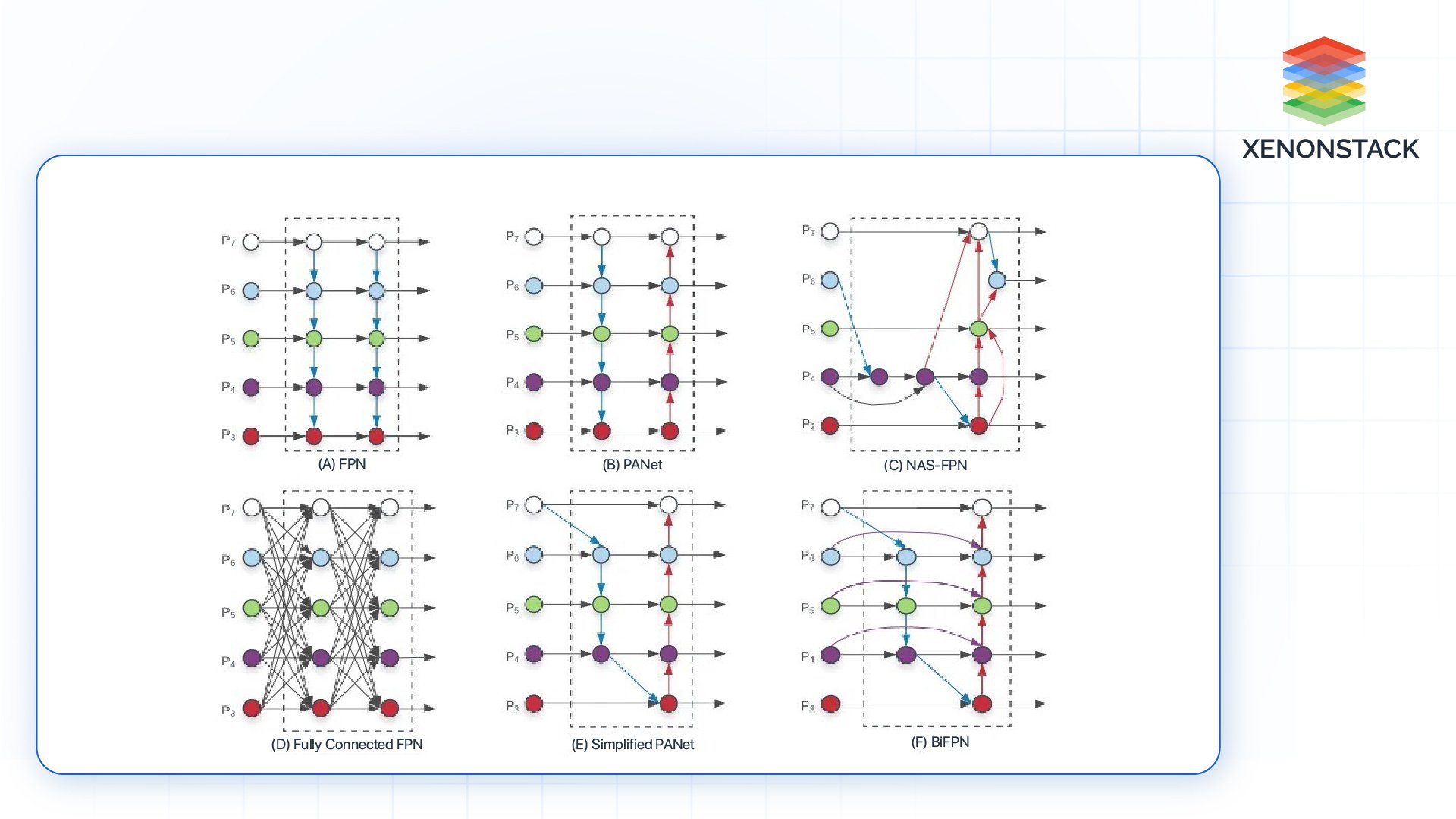

Figure 7: Architecture of (a) FPN, (b) PANet, (c) NAS-FPN, (d) FC-FPN, (e) Simplified PANet and (f) BiFPN models

Hardware Innovations

Software optimizations are valuable, but hardware improvements matter just as much. Specialized processors such as GPUs, TPUs, and FPGAs have significantly changed how deep learning workloads are run. This hardware is designed to accelerate deep learning relative to traditional CPUs while delivering more computation per watt.

Challenges in Energy Efficiency and Solutions

Although computer vision models have become considerably more energy-efficient, several challenges remain. Below are some of the main problems, along with possible solutions:

The Accuracy-Efficiency Trade-off

A critical aspect of developing energy-efficient models is balancing accuracy against efficiency. As models become less complex, they tend to become less accurate, a drawback that can seriously affect precision-driven applications such as medical imaging (for example, MRI analysis) or self-driving cars.

- Solution: One way to address this problem is knowledge distillation, in which a large model (the teacher) is used to train a smaller model (the student). The student learns to emulate the teacher's outputs and can approach its accuracy with far fewer parameters.
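
A common way to express the teacher-student objective is a weighted sum of two terms: a KL divergence between temperature-softened teacher and student distributions, and ordinary cross-entropy on the true labels. The NumPy sketch below computes that loss only; the temperature `T`, the weight `alpha`, and the random logits are illustrative assumptions, and in real training a framework would differentiate this loss with respect to the student's parameters:

```python
import numpy as np

def log_softmax(z: np.ndarray, T: float = 1.0) -> np.ndarray:
    """Numerically stable log-softmax over the last axis at temperature T."""
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """alpha * soft-target loss + (1 - alpha) * hard-label cross-entropy."""
    log_p_teacher = log_softmax(teacher_logits, T)
    log_p_student = log_softmax(student_logits, T)
    # KL(teacher || student) on the softened distributions; the T**2 factor keeps
    # its gradient scale comparable to the hard-label term.
    kl = np.sum(np.exp(log_p_teacher) * (log_p_teacher - log_p_student), axis=-1).mean()
    soft = kl * T ** 2
    # Ordinary cross-entropy against the ground-truth labels (T = 1).
    log_p = log_softmax(student_logits)
    hard = -log_p[np.arange(len(labels)), labels].mean()
    return alpha * soft + (1.0 - alpha) * hard

rng = np.random.default_rng(0)
teacher = rng.normal(size=(8, 10)) * 3.0   # hypothetical teacher logits
student = rng.normal(size=(8, 10))         # hypothetical student logits
labels = rng.integers(0, 10, size=8)
print(distillation_loss(student, teacher, labels))
```

The soft targets carry the teacher's relative confidence across all classes, which is more information per example than a single hard label and is what lets a small student close much of the accuracy gap.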

Managing Change and Continual Growth

Many computer vision applications operate in constantly changing environments. Models may need to adapt to new data, which is difficult for systems running on a tight energy budget.

- Solution: Models can be made adjustable so that their capacity, and thus their computational cost, is tuned automatically according to available resources or external conditions. For instance, such a model can scale down its processing when energy is low and use its full capacity for critical tasks.
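
One way to make capacity adjustable at run time is to let a layer use only the first fraction of its hidden units, in the spirit of MobileNet's width multiplier and slimmable networks. The sketch below, assuming NumPy, pairs a hypothetical `SlimmableMLP` with a hypothetical battery-based policy `choose_width`; neither comes from the original text. It only illustrates the mechanism: a real model must be trained at every width it will run at, or accuracy at reduced widths drops sharply.

```python
import numpy as np

class SlimmableMLP:
    """Two-layer MLP whose hidden width can be reduced at run time (hypothetical sketch)."""

    def __init__(self, d_in: int, d_hidden: int, d_out: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.1, (d_in, d_hidden))
        self.W2 = rng.normal(0.0, 0.1, (d_hidden, d_out))

    def _active_units(self, width: float) -> int:
        return max(1, int(round(width * self.W1.shape[1])))

    def forward(self, x: np.ndarray, width: float = 1.0) -> np.ndarray:
        h = self._active_units(width)
        hidden = np.maximum(x @ self.W1[:, :h], 0.0)  # ReLU over the active units only
        return hidden @ self.W2[:h, :]

    def macs(self, width: float = 1.0) -> int:
        """Multiply-accumulates per input vector at the given width."""
        h = self._active_units(width)
        return self.W1.shape[0] * h + h * self.W2.shape[1]

def choose_width(battery_fraction: float, critical: bool) -> float:
    """Hypothetical policy: full width for critical inputs or a healthy battery."""
    if critical or battery_fraction > 0.5:
        return 1.0
    return 0.5 if battery_fraction > 0.2 else 0.25

model = SlimmableMLP(d_in=64, d_hidden=256, d_out=10)
x = np.ones((4, 64))
for battery, critical in [(0.9, False), (0.3, False), (0.1, False), (0.1, True)]:
    width = choose_width(battery, critical)
    y = model.forward(x, width)
    print(battery, critical, width, model.macs(width), y.shape)
```

At a quarter of the hidden width this layer needs a quarter of the MACs (4,736 instead of 18,944), while a critical input still gets the full model.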

Data Availability and Quality

Training efficient models often requires large, high-quality datasets, which are frequently scarce. Small datasets can also limit a model's effectiveness and its ability to generalize.

- Solution: Several approaches can expand small datasets or make better use of them. Data augmentation techniques such as rotating, scaling, and flipping images diversify the training data and improve the model. In addition, transfer learning can build on models pretrained on large datasets, so that efficient models can perform acceptably with limited training data.
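
The augmentations listed above can be sketched in a few lines of NumPy. To stay dependency-free, this hypothetical `random_augment` helper restricts rotation to multiples of 90 degrees and uses nearest-neighbour resampling for scaling; arbitrary-angle rotation and smoother interpolation would normally come from an image-processing library:

```python
import numpy as np

def random_augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly flipped, rotated and rescaled copy of an H x W x C image."""
    out = img
    if rng.random() < 0.5:
        out = out[:, ::-1]                              # horizontal flip
    out = np.rot90(out, k=int(rng.integers(0, 4)))      # rotate by 0, 90, 180 or 270 degrees
    s = rng.uniform(0.8, 1.2)                           # random scale factor
    h, w = out.shape[:2]
    new_h, new_w = max(1, int(h * s)), max(1, int(w * s))
    # Nearest-neighbour resampling: pick the source row/column for each output pixel.
    rows = (np.arange(new_h) * h / new_h).astype(int)
    cols = (np.arange(new_w) * w / new_w).astype(int)
    return out[rows][:, cols]

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)  # hypothetical 32 x 32 RGB image
augmented = [random_augment(image, rng) for _ in range(8)]
print([a.shape for a in augmented])
```

Each call produces a slightly different view of the same image, so one labelled example can contribute many distinct training inputs without any new data collection.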

Benchmarking and Evaluation

As energy efficiency becomes more important, the field needs specific metrics for energy consumption and shared benchmarks for comparing AI models. The absence of standardized measures, however, makes it hard to assess how efficient different strategies really are.

- Solution: Initiatives such as MLPerf, which includes power-measurement benchmarks, and the broader Green AI movement aim to establish a common basis for measuring AI models’ energy consumption. With these, researchers and practitioners can evaluate models on both accuracy and energy use, encouraging more energy-conscious practices.
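
At its simplest, an energy figure combines measured run time with power draw, since energy in joules equals power in watts times time in seconds. The sketch below times a function with `time.perf_counter` and converts latency to energy using an assumed constant `avg_power_watts`; that constant, the `benchmark` helper, and the two matrix-multiply "models" are hypothetical stand-ins, whereas real benchmarks measure power directly with meters or hardware counters:

```python
import time
import numpy as np

def benchmark(fn, x, n_runs: int = 50, avg_power_watts: float = 5.0):
    """Return (mean latency in seconds, estimated energy in joules) per call of fn(x).

    avg_power_watts is an ASSUMED constant power draw used for illustration only.
    """
    fn(x)                                   # warm-up call, not timed
    start = time.perf_counter()
    for _ in range(n_runs):
        fn(x)
    latency_s = (time.perf_counter() - start) / n_runs
    energy_j = avg_power_watts * latency_s  # energy (J) = power (W) x time (s)
    return latency_s, energy_j

rng = np.random.default_rng(0)
x = rng.normal(size=(64, 512))
W_large = rng.normal(size=(512, 2048))      # hypothetical "large model"
W_small = rng.normal(size=(512, 256))       # hypothetical "compact model"

for name, W in [("large", W_large), ("small", W_small)]:
    lat, energy = benchmark(lambda v: v @ W, x)
    print(f"{name}: {lat * 1e3:.3f} ms, {energy * 1e3:.3f} mJ per inference")
```

Reporting energy per inference next to accuracy, rather than accuracy alone, is what lets two models be compared on efficiency as well as precision.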