Understanding Data Strategy

A Data Strategy is a comprehensive plan that outlines how organizations collect, manage, govern, and utilize their data to support business goals. It serves as a roadmap for aligning data initiatives with broader objectives, ensuring that data is treated as a strategic asset. Key components of an effective data strategy include data governance, which establishes the policies and standards for data management, and data management practices that ensure efficient handling of data throughout its lifecycle. The strategy emphasizes the importance of data literacy, enabling employees to understand and leverage data effectively in their roles.

To build a successful data strategy, organizations should focus on collaboration across departments, breaking down silos to enhance data sharing and usage. Standardization of processes and definitions is crucial for maintaining data quality and consistency. Additionally, defining clear metrics allows organizations to measure the success of their data initiatives. The strategy also encompasses data architecture, which refers to the technical framework that supports data flow and accessibility within the organization. By integrating these elements, a well-defined data strategy not only enhances operational efficiency but also drives innovation and competitive advantage in today's data-driven landscape.

The process of developing a data strategy requires business leaders to consider:

- What employees require to be empowered to use data.

- Processes that ensure data is accessible and of high quality.

- Technology that will enable the storage, sharing, and analysis of data.

The Necessity of a Data Strategy

A solid data strategy allows businesses to make the most of their data and business intelligence investments by achieving meaningful business benefits and identifying new revenue opportunities.

It helps business executives lay the foundation for a data-driven business and foster a data-driven culture. In fact, according to a PwC study, highly data-driven firms were three times more likely than others to report improvements in their decision-making. A data strategy enables businesses to:

- Recognize the kind of data being generated and how to store it effectively.

- Create a framework for analyzing the correct data and gaining valuable insights.

- Select the appropriate business intelligence and analytics software.

- Enhance decision-making to drive corporate development and value.

Core Components of Data Strategy

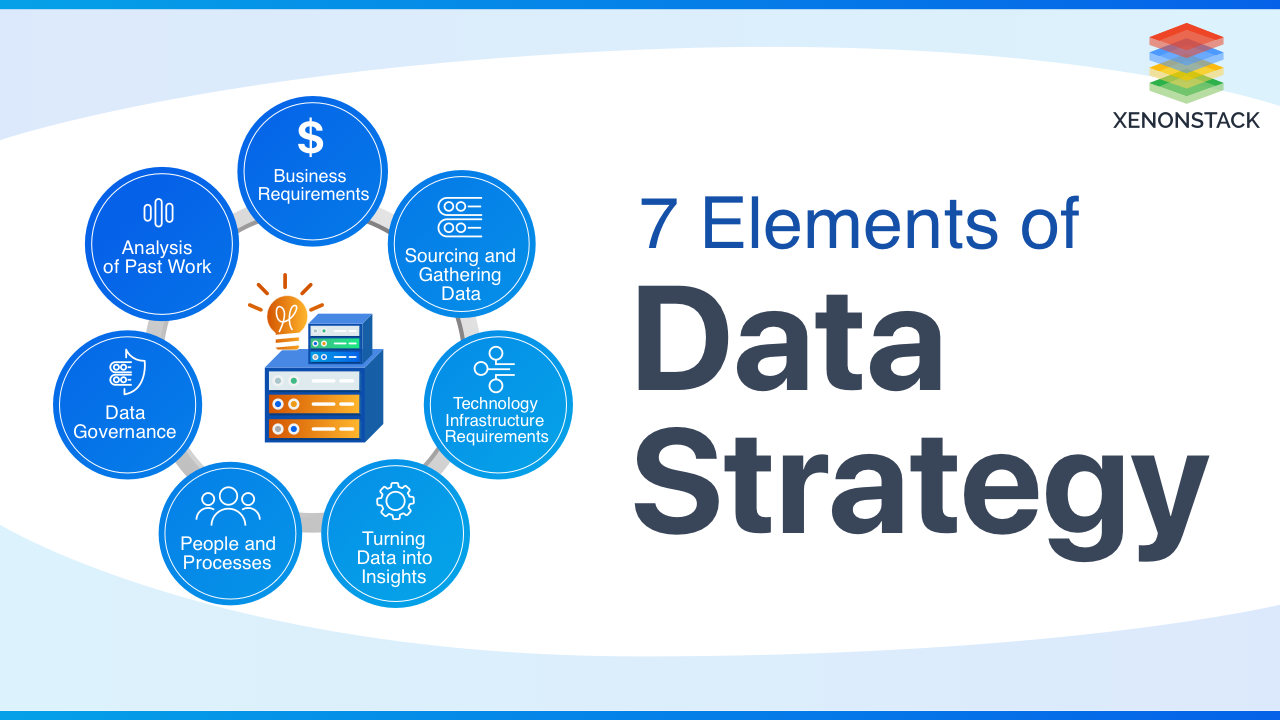

The seven elements of a data strategy are described below:

What are the Business Requirements for Data Strategy?

Data should address specific business needs in order to achieve strategic goals and generate real value. The first step in defining the business requirements is to identify a champion, all stakeholders, and subject matter experts (SMEs) within the organization. The champion of the data strategy is the executive leader who will rally support for the investment. Stakeholders and other SMEs will represent specific departments or functions within the business.

The next step is to define the strategic goals and tie department activities to business goals. It's natural for goals to exist at both the business and the department level. However, the stated goals at each level should sync up. These objectives are most effectively gathered through an interview process that starts at the executive level and continues down to department leaders. Through this process, we'll discover what leaders are trying to measure, what they are trying to improve, the questions they want answered, and ultimately, the KPIs that answer those questions.

How to Source and Gather Data for Data Strategy?

With a solid understanding of what questions the business is asking, we can turn to the next element: analyzing sources, how the data is gathered, and where the data exists. It's unlikely that all the data will be available within the organization and that it already exists in a place that's accessible. So, we need to work backward to find the source.

For data that can be found in-house, we note the source system and any roadblocks to accessing that data. We also need to determine whether the data has the right level of detail and is updated with the right frequency to answer the question effectively. Typical roadblocks involve privacy and licensing: is the data private (especially in light of GDPR and CCPA)? Is it guarded by restrictions imposed by a software license?

We'll note the data that isn't available here and pick it up in the next step. For example, a retail company may want to understand how its brand is perceived before and after a big product launch. Let's say the retailer has call center data, traffic counts for stores and online, and regular sales and return numbers. That doesn't measure how customers are feeling or what they're saying about the brand. The retailer may choose to pull in social media data to measure sentiment. Call center data, traffic, and sales would be noted in this step, along with the opportunity to pull in social media data.
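The source inventory described above can be kept as a simple structured list. The following is a minimal sketch rather than a prescribed method: the `DataSource` fields, the `find_gaps` helper, and the sample entries are all hypothetical, loosely based on the retail example. It reports which business questions cannot be answered from accessible in-house data.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    """One entry in a hypothetical data source inventory."""
    name: str
    in_house: bool
    answers: tuple = ()       # business questions this source can answer
    restricted: bool = False  # e.g. privacy rules or license terms block access


def find_gaps(questions, sources):
    """Return the questions that no accessible in-house source can answer."""
    covered = set()
    for source in sources:
        if source.in_house and not source.restricted:
            covered.update(source.answers)
    return [q for q in questions if q not in covered]


# Illustrative data only, modeled on the retail example.
questions = ["call volume", "store traffic", "sales and returns", "brand sentiment"]
sources = [
    DataSource("call center", True, ("call volume",)),
    DataSource("traffic counters", True, ("store traffic",)),
    DataSource("point of sale", True, ("sales and returns",)),
    DataSource("social media", False, ("brand sentiment",)),
]

gaps = find_gaps(questions, sources)
print(gaps)
```

In this sketch the only gap is brand sentiment, which matches the opportunity to pull in social media data noted above.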

What are the Technology Infrastructure Requirements for Data Strategy?

Our first piece of advice is: don't get caught up in the hype and the latest technologies; focus on the business reasons for your initiatives. Building a flexible and scalable data architecture is a complex topic for which there are many options and approaches, so here are some important things to consider:

- To what extent can an operational system support analytics needs? Likely very little. It is generally not a best practice to rely on an operational system to meet analytical needs, which means a central data repository will be useful.

- Does the organization have the skills and technical infrastructure to support an on-premises data warehouse, or would a cloud-based solution make more sense?

- Where the data doesn't exist today, how will the gaps be filled? Can the data be calculated or estimated? Can it be purchased as third-party market trend data or macroeconomic data? Or can a new source system be implemented to generate the data?

- Is there an integration tool to get the data from source systems into the central repository? Will this layer of the architecture also apply business logic so that the data is ready to use?

- How will you provide or provision access to the data? Will IT create reports, or will you enable self-service? Will the reports be pixel-perfect (printable), or will they allow a user to interact with the data? Will they be embedded into websites and provided to people outside the network?

Turning Data into Insights

Data visualization tools should make the data look good, but more importantly, they should make the data easier to understand and interpret. Some factors to consider when choosing a data visualization tool include:

- Visualizations: You should be able to quickly spot trends and outliers and avoid introducing confusion through poor presentation.

- Storytelling: The dashboard should present the context of metrics and anticipate the user's path of analysis and diagnosis.

- Democratization of Data: Who should have access to what data? Encourage sharing and widespread adoption, and establish common definitions and metrics across the organization.

- Data Granularity: Be able to provide the right level of detail for the right audience. An analyst may need more detailed information than an executive, and some people may need drill-down capabilities.
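To make the granularity point concrete, the sketch below rolls the same records up to two levels of detail. It is an illustration only: the sample records, the `roll_up` helper, and the two level names are assumptions, not part of any particular visualization tool.

```python
from collections import defaultdict

# Hypothetical daily sales records: (region, store, day, revenue)
sales = [
    ("North", "Store A", "2024-01-01", 1200.0),
    ("North", "Store A", "2024-01-02", 900.0),
    ("North", "Store B", "2024-01-01", 400.0),
    ("South", "Store C", "2024-01-01", 700.0),
]


def roll_up(records, level):
    """Sum revenue at the requested level of detail: 'region' or 'store'."""
    key_len = {"region": 1, "store": 2}[level]
    totals = defaultdict(float)
    for record in records:
        # The first key_len fields form the grouping key; revenue is last.
        totals[record[:key_len]] += record[-1]
    return dict(totals)


executive_view = roll_up(sales, "region")  # coarse summary
analyst_view = roll_up(sales, "store")     # one drill-down level deeper
print(executive_view)
print(analyst_view)
```

Both views come from the same underlying records, so the numbers stay consistent as a user drills down.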

Data Governance

Ultimately, data governance is what enables data to be shared across the organization, and it is the oil that lubricates the machinery of an analytics practice. A data governance program will ensure that:

- Calculations used across the organization are determined based on input from across the organization.

- The right people have the right access to the right data.

- Data lineage (where the data originated and how it has been transformed since then) is defined.
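Data lineage in particular can be recorded in a very simple form. The sketch below is one possible representation, not a standard: the `LINEAGE` registry, the dataset names, and the `trace_origins` helper are hypothetical, and the registry is assumed to contain no cycles.

```python
# Hypothetical lineage registry:
# dataset -> (upstream datasets, description of the transformation applied)
LINEAGE = {
    "pos_raw": ([], "extracted from the point-of-sale system"),
    "returns_raw": ([], "extracted from the returns system"),
    "net_sales": (["pos_raw", "returns_raw"], "sales minus returns, per store per day"),
    "regional_sales": (["net_sales"], "net sales summed by region"),
}


def trace_origins(dataset, lineage):
    """Return the set of original source datasets that feed `dataset`.

    Assumes the lineage registry is acyclic.
    """
    upstream, _ = lineage[dataset]
    if not upstream:
        return {dataset}  # no parents: this dataset is itself an origin
    origins = set()
    for parent in upstream:
        origins |= trace_origins(parent, lineage)
    return origins


print(sorted(trace_origins("regional_sales", LINEAGE)))
```

A record like this lets anyone reading a report trace a figure back to the systems it came from and the steps applied along the way.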

People and Processes

When it comes to process, many organizations have unintended roadblocks to using their data in decision-making. Business processes may need to be re-engineered to incorporate data analysis. This can be done by documenting the steps in a process where specific reports are used to make a decision. We can also mandate that specific data be provided as the rationale for a business decision. Recognition can also go a long way: when you achieve a win that is based on a new use of data, it should be celebrated and promoted to build internal momentum and encourage positive behaviors with data.

The Data Strategy Roadmap

The data strategy roadmap is the culmination of all the work done so far, and it is what makes that work actionable. We've identified everything needed to bring you from where you are to where you'd like to go; however, before starting any design, build, training, or re-engineering of a business process, it's important to prioritize the activities. For each recommendation that helps bridge the gap from the current state to the future state, define its feasibility and the business value it is expected to provide. The plan should prioritize activities that are easiest to implement yet still provide quick wins for the business.

Other factors to include in the data strategy roadmap are:

- Staff availability and whether outside assistance is required.

- A company's budgeting process, particularly if capital funding is needed.

- Competing initiatives that could prevent the right resources from participating.
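One way to make the prioritization concrete is to score each roadmap activity on feasibility and expected business value. The sketch below is only an illustration: the 1-to-5 scales, the multiplication of the two scores, and the sample activities are assumptions, and a real roadmap would also weigh staffing, budget, and competing initiatives.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """A candidate roadmap item with hypothetical 1-5 scores."""
    name: str
    business_value: int  # 1 (low) to 5 (high)
    feasibility: int     # 1 (hard to implement) to 5 (easy to implement)


def prioritize(activities):
    """Order activities so that easy, high-value items (quick wins) come first."""
    return sorted(
        activities,
        # Primary key: value x feasibility; ties go to the easier activity.
        key=lambda a: (a.business_value * a.feasibility, a.feasibility),
        reverse=True,
    )


# Illustrative backlog only.
backlog = [
    Activity("Build central data warehouse", business_value=5, feasibility=2),
    Activity("Standardize KPI definitions", business_value=4, feasibility=5),
    Activity("Add social media sentiment feed", business_value=3, feasibility=3),
]

roadmap = [activity.name for activity in prioritize(backlog)]
print(roadmap)
```

With these sample scores, standardizing KPI definitions ranks first because it is both valuable and easy to implement, which is the quick-win pattern described above.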

Advantages of Implementing a Data Strategy

A well-defined data strategy is essential for organizations looking to harness the full potential of their data. Here are some key benefits:

- Enhanced Decision-Making: A robust data strategy enables businesses to leverage data analytics and insights, leading to informed decision-making. This agility allows companies to respond swiftly to market changes and consumer preferences.

- Improved Operational Efficiency: Streamlined data processes can enhance operational efficiency by reducing redundancy, automating report generation, and minimizing manual data entry, ultimately lowering costs.

- Informed Customer Insights: A data strategy can help organizations capture and analyze customer behaviors, preferences, and feedback, resulting in personalized products and services that drive customer satisfaction and loyalty.

- Regulatory Compliance: By implementing a solid data governance framework, organizations can ensure compliance with data protection regulations, reducing the risk of costly penalties and reputational damage.

- Competitive Advantage: Companies with a clear data strategy can innovate faster and outperform competitors by transforming insights into actionable strategies.

- Better Collaboration: A centralized data strategy fosters collaboration among departments, breaking down silos and allowing for a unified approach to analyzing and utilizing data across the organization.

- Future-Readiness: Organizations that invest in a robust data strategy position themselves for future growth, as they can adapt more readily to new technologies and market dynamics.

Steps to Develop an Effective Data Strategy

Creating an effective data strategy involves several key steps:

- Define Objectives: Begin by clearly outlining the goals you want to achieve through your data strategy. This can include improving customer experiences, increasing revenue, or enhancing operational efficiency.

- Assess Current Data Capabilities: Evaluate your existing data infrastructure, governance frameworks, and analytics capabilities. Identify strengths, weaknesses, and gaps that need to be addressed.

- Identify Key Data Sources: Determine the internal and external data sources that will be vital for your strategy. This may include customer data, sales data, market research, and third-party datasets.

- Establish Data Governance: Implement data governance practices to ensure data quality, accuracy, and privacy. This includes defining roles, responsibilities, and policies related to data access and usage.

- Invest in Technology and Tools: Select the right technology stack that can support data collection, storage, processing, and analysis. Consider data management platforms, analytics tools, and cloud solutions.

- Build Analytics Competencies: Develop the skills and capabilities needed for effective data analysis. This may involve training existing staff, hiring new talent, or collaborating with external partners.

- Create a Data Culture: Foster a culture that values data-driven decision-making. This requires ongoing communication about the benefits of data and encouraging employees to leverage data in their daily work.

- Monitor and Iterate: Regularly review the effectiveness of your data strategy. Track progress against your objectives, gather feedback, and be prepared to adapt your strategy based on what you learn.

Considerations for Crafting a Data Strategy

When developing a data strategy, it's crucial to consider the following factors:

- Alignment with Business Goals: Ensure that your data strategy aligns with your overall business strategy. Data initiatives should support broader organizational objectives.

- Stakeholder Engagement: Involve key stakeholders across departments to gain insights and buy-in. Cross-functional collaboration can enhance the effectiveness of your data strategy.

- Data Privacy and Security: Prioritize data privacy and security throughout your strategy. Implement measures to protect sensitive information and comply with regulations, such as GDPR and CCPA.

- Scalability: Choose solutions that can scale with your data needs. As your organization grows, your data infrastructure should be able to accommodate increasing volumes and complexity.

- Change Management: Be prepared for cultural and operational shifts that accompany new data initiatives. Metrics for success should be clear, and communication is key in managing transitions smoothly.

- Ethical Considerations: Address ethical concerns related to data use, including bias in data analytics and algorithmic decision-making. Developing clear ethical guidelines can build trust and minimize risk.

Industry Transformations Through Data Strategy

Industries across the spectrum have experienced transformative change through effective data strategies:

- Retail: Retailers leverage data analytics to understand customer preferences, optimize inventory management, and create personalized marketing campaigns, enhancing customer experiences and increasing sales.

- Healthcare: In healthcare, data strategies are used to improve patient care and operational efficiency. By analyzing patient data, providers can enhance treatment approaches, predict patient outcomes, and streamline administrative processes.

- Finance: Financial institutions employ data strategies to mitigate risks, enhance fraud detection, and tailor products to customer needs. Data-driven insights aid in making informed lending and investment decisions.

- Manufacturing: Manufacturers utilize data analytics for predictive maintenance, supply chain optimization, and quality assurance. This leads to reduced downtime, lower costs, and improved product quality.

- Telecommunications: Telecom companies analyze usage patterns and customer feedback to develop better service packages, enhance customer support, and minimize churn rates.

- Transportation: Data strategies in transportation optimize routing, improve fuel efficiency, and enhance logistics management. Companies can analyze traffic data to provide real-time updates to their customers.

By adopting a comprehensive data strategy, industries can unlock value, drive innovation, and maintain a competitive edge in an increasingly data-driven world.

Final Thoughts on Data Strategy

Emerging technologies may enable a new generation of data-management capabilities, potentially making both defensive data strategies (focused on security, compliance, and control) and offensive ones (focused on growth and insight) easier to implement.

Data used to matter only to a few back-office activities, such as payroll and accounting. It is now essential to every business, and the need to manage it strategically is only increasing. The implication is unavoidable: companies that have not yet developed a data strategy and a solid data management function must either catch up quickly or prepare to exit.
