Artificial intelligence (AI) is changing robotics, allowing machines to sense, make decisions, and act in real time. From factory assembly lines to healthcare service robots, AI-driven robots depend on massive computational power to process information, learn from their surroundings, and make decisions in milliseconds. This blog discusses how faster computing, GPUs, and edge computing platforms are transforming robotic performance and efficiency and looks into the future of AI chips and quantum computing.

Need for High Performance Computing in Robotics

The core of any AI-driven robot is its capacity to process information and make decisions in a timely manner. Robotic systems rely heavily on artificial intelligence algorithms, especially machine learning (ML) and deep learning (DL), to process input data and respond accordingly. These algorithms require extensive computing power due to several factors:

- Real-Time Decision-Making: Robots may need to make decisions within a fraction of a second based on data from sensors such as cameras, LIDAR, temperature sensors, and accelerometers. This need for high-speed processing makes accelerated computing crucial for achieving real-time responsiveness.

- Complexity of AI Models: The machine learning and deep learning models used in robotics, such as CNNs for computer vision, reinforcement learning for decision-making, and RNNs for sequential data, require massive computational capacity to train and deploy.

- Sensor Data Processing: Robots capture massive amounts of real-time data from a host of sensors. Processing that data quickly and accurately calls for specialized hardware. For example, an autonomous robot mapping a warehouse or a drone making deliveries in a city must process LIDAR data, video feeds, GPS locations, and other inputs in near real time to operate safely and navigate effectively.

Without fast computation, even the most advanced AI algorithms can suffer from latency issues, inaccuracies, and eventual failure in real-world applications. This is where accelerated computing in robotics, powered by GPUs, TPUs, and custom AI chips, plays a pivotal role. Additionally, solutions like Azure IoT Edge further enhance robotic performance by enabling real-time AI processing at the edge, reducing reliance on centralized cloud systems.
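To make the latency point concrete, here is a minimal sketch of a per-cycle latency budget. The stage names, the millisecond figures, and the 33 ms budget (roughly one cycle at 30 Hz) are illustrative assumptions, not measurements from any real robot.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    latency_ms: float  # assumed processing time per cycle


def total_latency_ms(stages):
    """Add up the latency of every stage in one sense-decide-act cycle."""
    return sum(stage.latency_ms for stage in stages)


def meets_deadline(stages, budget_ms):
    """True if the whole cycle fits inside the real-time budget."""
    return total_latency_ms(stages) <= budget_ms


BUDGET_MS = 33.0  # hypothetical budget: about one cycle at 30 Hz

# Hypothetical pipeline with inference running on a general-purpose processor.
cpu_pipeline = [
    Stage("sensor preprocessing", 8.0),
    Stage("neural network inference", 25.0),
    Stage("planning and motor command", 5.0),
]

# Same pipeline, assuming an accelerator cuts inference time to 10 ms.
accelerated_pipeline = [
    Stage("sensor preprocessing", 8.0),
    Stage("neural network inference", 10.0),
    Stage("planning and motor command", 5.0),
]

for label, pipeline in [("cpu", cpu_pipeline), ("accelerated", accelerated_pipeline)]:
    print(label, total_latency_ms(pipeline), meets_deadline(pipeline, BUDGET_MS))
```

With these assumed numbers, the first pipeline overruns the budget (38 ms) while the second fits (23 ms). In practice, the per-stage figures would come from profiling the actual robot.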

GPUs vs CPUs vs TPUs: Choosing the Right Compute Power

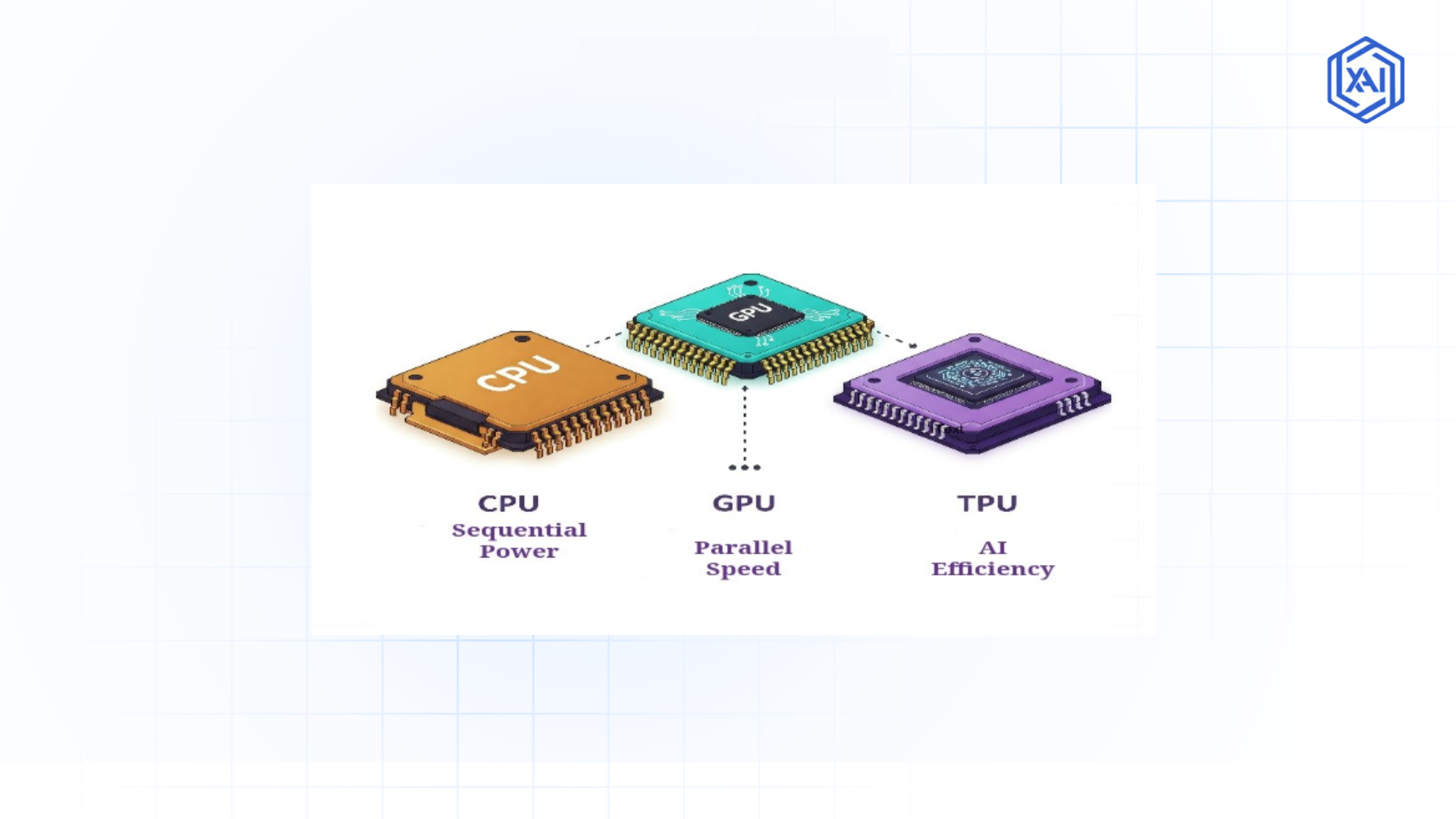

Fig 1: CPU, GPU, and TPU


When it comes to powering AI in robotics, several types of processing units are used, each offering distinct advantages depending on the task. Let’s break down the big three:

CPUs (Central Processing Units)

CPUs are flexible processors found in nearly every computing device. As general-purpose workhorses, they handle sequential tasks exceedingly well. However, they struggle with the massively parallel computations that AI applications need, such as training a neural network or processing high-resolution video streams.

Pros

- Flexible and general purpose.

- Good at handling low-latency, single-threaded tasks.

- Commonly used in robots for control systems and general computing.

Cons

- Slower than GPUs and TPUs for AI workloads.

- Not well suited to highly parallel processing tasks.

GPUs (Graphics Processing Units)

Originally designed for rendering graphics, GPUs excel at parallel processing. With thousands of cores, they can perform many operations at once, which is ideal for speeding up the matrix operations in deep learning. NVIDIA's GPUs, for instance, have become ubiquitous in robotics for their ability to process sensor data and train AI models efficiently.
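To see why matrix operations parallelize so well, consider a small sketch of a dense neural network layer written in plain Python. The weights and input values are made up for illustration, and the code runs sequentially; the point is only that each output element depends on a single row of weights, so parallel hardware could compute all rows at the same time.

```python
def dot(row, x):
    """Dot product of one weight row with the input vector."""
    return sum(w * v for w, v in zip(row, x))


def dense_layer(weights, x):
    """Matrix-vector product: one independent dot product per output element.

    No row needs the result of any other row, which is what lets a GPU
    spread this work across many cores.
    """
    return [dot(row, x) for row in weights]


# Hypothetical 2x3 weight matrix and 3-element input.
weights = [
    [1.0, 0.0, -1.0],
    [0.5, 0.5, 0.5],
]
x = [2.0, 4.0, 6.0]

print(dense_layer(weights, x))  # [-4.0, 6.0]
```

Real models repeat this pattern across layers with thousands of rows and columns, which is where the gap between a few CPU cores and thousands of GPU cores becomes significant.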

Pros

- Best suited to parallel processing tasks.

- High speed, allowing for faster model training.

- Scalable in cloud computing for large-scale AI tasks.

Cons

- Power consumption can be higher than that of CPUs.

- More expensive than CPUs.

TPUs (Tensor Processing Units)

TPUs, created by Google, are designed specifically to speed up tensor computations in machine learning. They are optimized for certain AI frameworks such as TensorFlow and deliver very fast inference. That specialization, however, makes them less versatile than GPUs.

Pros

- Highly suitable for machine learning tasks, including deep learning.

- Extremely fast for matrix operations, which are fundamental to neural networks.

- Lower power consumption than GPUs.

Cons

- Less versatile than CPUs and GPUs.

- More expensive and more difficult to integrate into small-scale robots.

In robotics, GPUs generally provide the best balance between flexibility and performance, particularly in dynamic situations. CPUs still handle operating-system-level activities, and TPUs are best suited to very specific, high-throughput AI workloads. The right choice depends on what matters most for the robot's intended use: flexibility, speed, or cost-effectiveness.

Edge AI and On-Device Processing for Faster Responses

Traditionally, robots uploaded data to cloud servers for processing, which added latency and a dependence on internet connectivity. Enter edge AI: the practice of running AI algorithms directly on the robot's own hardware. By using capable on-device processors, edge AI enables quicker decision-making and greater autonomy.

Imagine a delivery drone flying through a crowded city. Relying on the cloud could delay its response to an unexpected obstacle and lead to a crash. Edge AI lets the drone process sensor data locally on small, power-efficient modules (such as NVIDIA's Jetson series) and respond in milliseconds. This not only improves performance but also reduces bandwidth costs and improves privacy by keeping data onboard.
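A quick back-of-the-envelope calculation shows why those milliseconds matter. The sketch below computes how far a drone travels before it can react to an obstacle. The 15 m/s speed, the 150 ms cloud round trip, and the 15 ms on-device latency are assumed values chosen for illustration only.

```python
def blind_distance_m(speed_m_s, latency_ms):
    """Distance covered while the robot is still waiting for a decision."""
    return speed_m_s * latency_ms / 1000.0


DRONE_SPEED_M_S = 15.0      # assumed cruising speed
CLOUD_LATENCY_MS = 150.0    # assumed network round trip plus cloud inference
EDGE_LATENCY_MS = 15.0      # assumed on-device inference time

cloud = blind_distance_m(DRONE_SPEED_M_S, CLOUD_LATENCY_MS)
edge = blind_distance_m(DRONE_SPEED_M_S, EDGE_LATENCY_MS)

print(f"cloud: {cloud:.3f} m before reacting")
print(f"edge:  {edge:.3f} m before reacting")
```

Under these assumptions the drone covers 2.25 m before a cloud-based decision arrives, compared with roughly a quarter of a meter when the decision is made onboard.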

Edge AI is revolutionizing robotics for remote or time-critical applications, from search and rescue to driverless cars.

Benefits of Edge AI

- Low Latency: Robots can respond faster by processing data locally instead of waiting for a round trip to the cloud. For instance, an autonomous car must detect and respond to pedestrians, other cars, or roadblocks in real time. Edge AI minimizes the delay between sensing and responding, which helps prevent accidents.

- Less Bandwidth Usage: Edge AI reduces the need to continuously transmit huge amounts of sensor data to the cloud, which lowers bandwidth usage. This is especially beneficial in areas where connectivity is poor or unreliable.

- Enhanced Security and Privacy: By processing sensitive information on-device, edge AI reduces the risk that data is exposed in transit to the cloud or in a breach.

- Energy Efficiency: Running AI computation on local, power-efficient hardware lets robots operate effectively without relying heavily on energy-hungry cloud infrastructure.
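The bandwidth benefit can be estimated with simple arithmetic. The following sketch compares streaming uncompressed camera frames to the cloud with sending only the detection results computed on the robot. The resolution, frame rate, number of detections, and the 32 bytes per detection are all hypothetical, and a real system would typically compress video, which narrows the gap.

```python
def raw_video_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Data rate of an uncompressed video stream."""
    return width * height * bytes_per_pixel * fps


def detections_bytes_per_s(detections_per_frame, bytes_per_detection, fps):
    """Data rate when only on-device detection results are transmitted."""
    return detections_per_frame * bytes_per_detection * fps


# Assumed camera: 1920x1080, 3 bytes per pixel (RGB), 30 frames per second.
raw = raw_video_bytes_per_s(1920, 1080, 3, 30)

# Assumed edge output: 10 detections per frame, 32 bytes each (class, box, score).
results = detections_bytes_per_s(10, 32, 30)

print(raw)             # 186624000
print(results)         # 9600
print(raw // results)  # 19440
```

With these figures, sending only results needs about 9.6 KB/s instead of roughly 187 MB/s of raw video, which is why on-device processing helps most where connectivity is limited.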

Real Time AI Decision Making: The Role of Parallel Processing

Real-time decision-making is the heartbeat of modern robotics, and parallel processing is its engine. In contrast to sequential processing, where operations are handled one at a time, parallel processing handles several operations simultaneously. This is essential for robots that must handle perception, planning, and actuation at the same time.

GPUs excel at this, splitting workloads across thousands of cores. For example, a robotic arm assembling electronics could use one group of cores to process camera input, another to predict component placement, and a third to fine-tune motor controls, all in parallel. This parallelism allows decisions to be made quickly enough to keep up with the physical world.
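The difference between sequential and parallel execution can be sketched with Python's standard `concurrent.futures` module. This is only an analogy: it uses CPU threads rather than GPU cores, the three task functions are hypothetical stand-ins for the robotic arm's workloads, and `time.sleep` simulates work that each take about 50 ms.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def process_camera():
    time.sleep(0.05)  # simulated perception work
    return "camera frame processed"


def predict_placement():
    time.sleep(0.05)  # simulated prediction work
    return "component placement predicted"


def tune_motors():
    time.sleep(0.05)  # simulated control work
    return "motor controls tuned"


TASKS = [process_camera, predict_placement, tune_motors]


def run_sequential(tasks):
    """Run each task one after another."""
    return [task() for task in tasks]


def run_parallel(tasks):
    """Run all tasks at the same time, one worker thread per task."""
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = [pool.submit(task) for task in tasks]
        return [future.result() for future in futures]


for runner in (run_sequential, run_parallel):
    start = time.perf_counter()
    results = runner(TASKS)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    print(f"{runner.__name__}: {elapsed_ms:.0f} ms, {len(results)} results")
```

The sequential run should take roughly the sum of the three task times, while the parallel run should take roughly as long as the slowest single task. Both produce the same results in the same order.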

Software frameworks such as CUDA (for NVIDIA GPUs) and ROS (Robot Operating System) further streamline these workflows, making parallel processing available to developers of next-generation robots.

Case Studies: AI in Industry and Service Robotics

Let’s look at how these technologies play out in the real world:

The Future of AI Chips and Quantum Computing in Robotics

Robotic computing is only at the beginning of its evolution. Dedicated AI chips such as NVIDIA's H100 and Google's upcoming TPUs continue to push the limits of efficiency and power. As such chips become faster, smaller, and more efficient, they become better suited to miniaturized robots working in confined spaces or on limited battery power.

Looking even further ahead, quantum computing is on the horizon. Unlike traditional computers, quantum systems rely on qubits and could, for certain problems, compute far faster than classical machines, potentially exponentially so. Candidate applications include optimizing robot pathfinding and simulating molecular interactions for medical robots. Although still in its infancy, quantum computing has the potential to unlock unprecedented capabilities, such as robots that can learn and adapt at a scale not possible today.

The Role of High-Performance Computing in AI-Driven Robots

High-performance computing lies at the core of AI-driven robots. From TPUs and GPUs to edge AI, a robot's computing power directly affects its efficiency, decision-making ability, and real-time responsiveness. As robotic systems grow more capable, the need for ever faster, dedicated computing hardware will continue, with edge AI and parallel processing playing pivotal roles in delivering next-generation autonomous machines.

With these developments, robots will become increasingly central to industries from manufacturing to healthcare, revolutionizing how we work and live. Looking ahead, the possibilities of AI chips, quantum computing, and neuromorphic processors may stretch the limits of what robots can do, increasing their autonomy, intelligence, and efficiency in a constantly changing world.

Next Steps for Implementing High-Performance Computing in Robotics

Talk to our experts about leveraging accelerated computing in robotics. Learn how industries utilize AI-powered automation and high-performance computing to enhance robotic efficiency, decision-making, and real-time responsiveness.
