Edge computer vision empowers organizations to analyze images and video streams locally, dramatically reducing latency, saving bandwidth, and enhancing privacy.

In this blog, we’ll dive deep into how Azure IoT Edge forms the backbone for deploying intelligent vision workloads on diverse edge computing platforms, and we’ll explore best practices, development techniques, and real‑world use cases, including object counting for industrial automation.

What is Edge Computer Vision and Its Benefits?

Edge computer vision means processing and analyzing visual data directly on the devices that capture it, such as industrial cameras, rugged PCs, or smart sensors, instead of sending it to remote cloud servers. Running these tasks at the point of data collection gives companies real-time insights that enable immediate decision-making and automation.

Processing visual data locally offers several critical advantages:

- Ultra-Low Latency: Instant decision-making is essential in autonomous vehicles, manufacturing robotics, and safety systems, where the delay of a round trip to the cloud may be unacceptable.

- Bandwidth Efficiency: High-resolution video streams can easily consume all available network capacity. Edge Computing Platforms filter data on site and send only essential alerts and metadata, which reduces bandwidth consumption.

- Enhanced Privacy: Keeping sensitive images on the device avoids exposing them in transit, which strengthens privacy.
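To put the bandwidth point in numbers, here is a rough back-of-the-envelope sketch. The figures are assumptions chosen for illustration (a 1080p stream at 30 fps compressed to about 0.1 bits per pixel, and one 200-byte JSON event per second), not measurements from a real deployment.

```python
def stream_mbps(width, height, fps, bits_per_pixel):
    """Approximate bitrate of a video stream in megabits per second."""
    return width * height * fps * bits_per_pixel / 1_000_000


def metadata_mbps(events_per_second, bytes_per_event):
    """Approximate bitrate of alert/metadata messages in megabits per second."""
    return events_per_second * bytes_per_event * 8 / 1_000_000


# Assumed figures: compressed 1080p video versus one small JSON event per second.
video = stream_mbps(1920, 1080, 30, 0.1)
events = metadata_mbps(1, 200)
print(f"video ~{video:.2f} Mbit/s, metadata ~{events:.4f} Mbit/s")
```

Under these assumptions the metadata stream is several thousand times smaller than the video stream, which is the gap that on-site filtering exploits.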

The future of AI lies at the edge, where real-time intelligence meets efficiency. Explore how Edge Computing Platforms are revolutionizing automation and AI processing.

Azure IoT Edge for AI-Powered Vision Processing

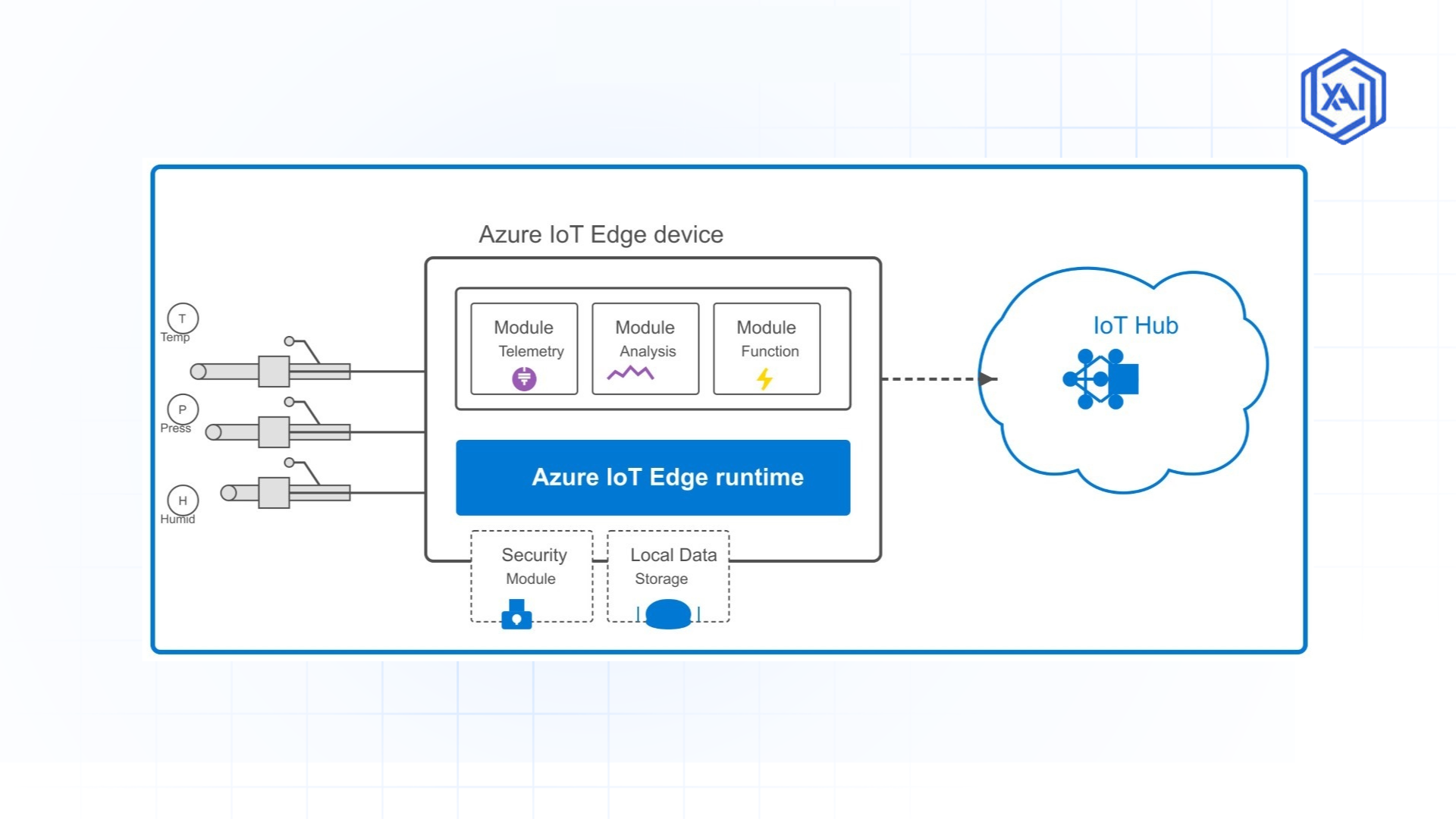

Azure IoT Edge extends the capabilities of Azure IoT Hub by enabling containerized workloads to run on Edge Computing Platforms. This allows for localized, real-time object counting and other computer vision tasks, shifting heavy processing from centralized data centers to distributed edge devices. The modular and scalable architecture supports deployments from a single Raspberry Pi to industrial-grade edge gateways.

Fig 1: Azure IoT Edge components

Key components of the Azure IoT Edge runtime include:

- IoT Edge Agent: Manages module deployments, monitors container health, and updates modules automatically.

- IoT Edge Hub: Acts as a local proxy for Azure IoT Hub, handling secure, bidirectional communication between modules, the edge device, and the cloud.

- Edge Modules: These Docker‑containerized applications can host custom computer vision algorithms, stream analytics, or Azure services such as Azure Cognitive Services. Modules can be independently updated and scaled, providing a flexible deployment model.
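As a sketch of how these pieces are declared, the snippet below builds two fragments of an IoT Edge deployment manifest: a module entry for the IoT Edge Agent and a route for the IoT Edge Hub. The module name `objectcounter` and the registry address are hypothetical, and a complete manifest needs further sections (runtime settings, system modules) that are omitted here.

```python
import json


def module_entry(image, create_options=None):
    """Build one custom-module entry for the IoT Edge Agent's desired properties."""
    return {
        "version": "1.0",
        "type": "docker",
        "status": "running",
        "restartPolicy": "always",
        "settings": {
            "image": image,
            # createOptions is stored as a JSON-encoded string, not a nested object.
            "createOptions": json.dumps(create_options or {}),
        },
    }


def upstream_route(module_name):
    """Route every output of a module to IoT Hub through the IoT Edge Hub."""
    return f"FROM /messages/modules/{module_name}/outputs/* INTO $upstream"


# Hypothetical module name and container registry.
modules = {"objectcounter": module_entry("myregistry.azurecr.io/objectcounter:0.0.1")}
routes = {"counterToCloud": upstream_route("objectcounter")}
print(json.dumps({"modules": modules, "routes": routes}, indent=2))
```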

Setting Up Azure IoT Edge for Vision AI

For robust computer vision performance at the edge, choose hardware that meets these key criteria:

- Processing Power: Devices such as the Raspberry Pi 3B+ or industrial gateways provide enough CPU and memory for lightweight workloads. For more demanding applications, consider devices with integrated GPUs or dedicated accelerators.

- Camera/Sensor Integration: Ensure your device supports camera inputs (via USB, CSI, or Ethernet) and can handle high‑resolution streams.

Installing and Configuring Azure IoT Edge Platform

Getting started involves:

- Installing the Runtime: Follow Microsoft’s Azure IoT Edge documentation to install the runtime on your chosen Linux‑based device.

- Container Setup: Ensure Docker is installed and configured to run Linux containers. For development, Visual Studio Code with the Azure IoT Edge extension streamlines the process.

- Configuration: Securely register your device with Azure IoT Hub using the provided connection strings and certificates.

After installation, your device must be registered with Azure IoT Hub.
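If you work with connection strings in your own tooling, a small parser like the one below can help. It is a minimal sketch that assumes the usual `Key=Value;Key=Value` layout; the host name, device ID, and key are placeholders.

```python
def parse_connection_string(conn_str):
    """Split 'Key=Value;Key=Value' pairs into a dict."""
    parts = {}
    for pair in conn_str.split(";"):
        if not pair:
            continue
        # Split on the first '=' only: base64 keys often end with '=' padding.
        key, _, value = pair.partition("=")
        parts[key] = value
    return parts


# Placeholder values for illustration; never hard-code a real key.
example = (
    "HostName=example-hub.azure-devices.net;"
    "DeviceId=edge-cam-01;"
    "SharedAccessKey=c2VjcmV0a2V5MTIzNA=="
)
fields = parse_connection_string(example)
print(fields["HostName"], fields["DeviceId"])
```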

Best Computer Vision Models for Edge AI

There are two primary approaches to deploying computer vision models at the edge:

- Pre-trained Models: Quick to deploy and ideal for general tasks like object detection or classification. They’re often available through services such as Azure Custom Vision.

- Custom Models: Tailored to specific use cases, these models are trained on your own dataset to achieve higher accuracy in niche applications. Custom models often require more data and fine‑tuning but can deliver superior performance for domain‑specific tasks.

Model Optimization Techniques for Edge Devices

To ensure efficient inference on resource‑constrained devices, consider:

- Quantization: Reducing model precision (e.g., from float32 to int8) to lower memory and compute requirements.

- Pruning: Removing redundant model parameters to decrease model size.

- Conversion to ONNX: Converting the model to the ONNX format lets runtimes optimize it further for hardware-specific accelerators like GPUs, FPGAs, or VPUs.
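To make the first two techniques concrete, here is a toy sketch in plain Python. It shows symmetric per-tensor int8 quantization and simple magnitude pruning on a handful of weights; real toolchains apply the same ideas per layer with calibration data, and the weight values and threshold below are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to int8 values."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from int8 values."""
    return [v * scale for v in quantized]


def prune_by_magnitude(weights, threshold):
    """Zero out weights whose magnitude falls below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


weights = [0.50, -1.27, 0.03, 0.90, -0.02]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
pruned = prune_by_magnitude(weights, threshold=0.05)
print(q, pruned)
```

Each restored value differs from the original by at most half a quantization step, which is the accuracy cost paid for storing one byte per weight instead of four.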

Developing Custom Vision Modules for Azure IoT Edge

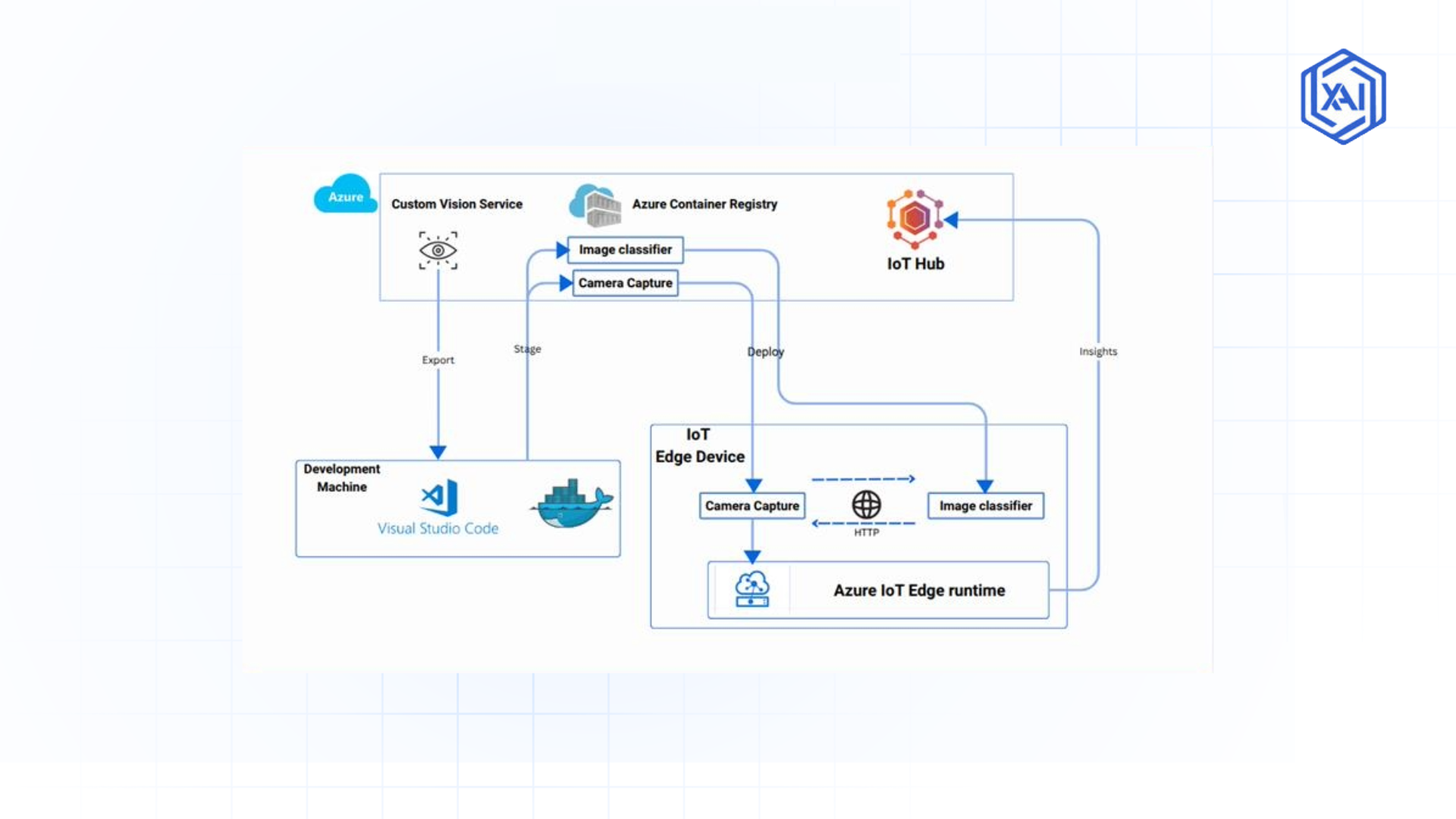

Building custom vision modules starts with training your model using Azure Custom Vision Service. Once you’ve collected and labeled your images, you train the model to recognize your target objects. After the training phase, you can export your model as a Docker‑compatible package.

Fig 2: Image classification at the edge

Containerization Process

Package the trained model and its dependencies, such as Python libraries, inference scripts, and runtime configuration, into a Docker container. The Azure IoT Edge extension for Visual Studio Code can scaffold a new IoT Edge solution to start from.

Model Export & Integration

Custom Vision exports models trained on a “compact” domain, which is optimized for edge device environments. Place the exported files in your module's file structure and update the Dockerfile to set up the runtime environment.

Testing and Debugging

Test your container thoroughly before deploying it to an edge device. Confirm that the model loads, that the inference API responds, and that the container can process sample images.
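One simple check is to validate the JSON your inference API returns for a sample image. The sketch below assumes a response with a `predictions` list whose entries carry `tagName` and `probability` fields; treat that shape as an assumption and adjust it to whatever your container actually returns.

```python
import json


def top_prediction(response_text, min_probability=0.5):
    """Return (tag, probability) for the most confident prediction, or None."""
    payload = json.loads(response_text)
    predictions = payload.get("predictions", [])
    if not predictions:
        return None
    best = max(predictions, key=lambda p: p["probability"])
    if best["probability"] < min_probability:
        return None
    return best["tagName"], best["probability"]


# Hand-written sample response, standing in for real container output.
sample = json.dumps({"predictions": [
    {"tagName": "defect", "probability": 0.91},
    {"tagName": "ok", "probability": 0.09},
]})
result = top_prediction(sample)
print(result)
```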

Real-Time Object Detection and Recognition at the Edge

Real-time detection is essential for scenarios like surveillance, retail self‑checkout, or autonomous systems. Edge solutions deploy lightweight yet powerful detection algorithms such as YOLO (You Only Look Once) or SSD (Single Shot Detector) within containerized modules.

- Algorithm Selection and Trade-offs: Both YOLO and SSD are fast enough for real-time applications because they process the complete image in a single pass. They trade some accuracy for speed, which can be offset by training on a custom dataset for your specific application.

- Integration with IoT Edge: The containerized model listens for real-time video feeds. Incoming frames are pre-processed locally (resized, normalized, and optionally enhanced with OpenCV) before inference runs.

- Latency and Throughput: Achieving low‑latency responses is crucial. Keep the model loaded in memory and reuse the TensorFlow session or comparable runtime context across frames instead of recreating it for each one.
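The load-once pattern and the normalization step can be sketched as follows. The "model" here is a stand-in function that averages pixel values, and `model.onnx` is a hypothetical path; in a real module the cached object would be your inference session.

```python
from functools import lru_cache

LOAD_COUNT = 0  # counts how often the expensive load actually runs


@lru_cache(maxsize=1)
def get_model(path):
    """Load the model once; later calls reuse the cached object."""
    global LOAD_COUNT
    LOAD_COUNT += 1
    # Stand-in for an expensive load such as creating an inference session.
    return lambda frame: sum(map(sum, frame)) / (len(frame) * len(frame[0]))


def normalize(frame):
    """Scale 8-bit pixel values to the range [0.0, 1.0]."""
    return [[px / 255.0 for px in row] for row in frame]


def infer(frame, model_path="model.onnx"):
    """Pre-process one frame and run it through the cached model."""
    model = get_model(model_path)
    return model(normalize(frame))


frames = [[[0, 255], [255, 0]], [[255, 255], [255, 255]]]
scores = [infer(f) for f in frames]
print(scores, "loads:", LOAD_COUNT)
```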

Advanced Edge CV Techniques

For applications that demand higher levels of contextual understanding or more complex analytics, advanced techniques can be deployed at the edge.

- Multi-Camera Coordination: When a single camera's field of view cannot cover an area, multiple cameras are coordinated to monitor larger zones from different perspectives. The views are calibrated, and then homography or perspective transformation techniques fuse the results.

- Motion Detection and Tracking: Motion detection and tracking commonly rely on optical flow algorithms, Kalman filters, and background subtraction techniques.

- Sensor Fusion: Combining vision output with LiDAR and thermal imaging inputs can improve detection accuracy. Sensor fusion algorithms compensate for the weaknesses of individual sensors, which become prominent in unfavorable conditions such as low visibility and cluttered backgrounds.
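Two of the building blocks named above fit in a few lines of plain Python: mapping a point through a homography and smoothing a noisy track with a scalar Kalman filter. This is a simplified sketch. The matrix is a hypothetical calibration result, the measurements are invented, and production systems typically use a library such as OpenCV and a multi-dimensional filter.

```python
def apply_homography(H, x, y):
    """Map an image point (x, y) through a 3x3 homography matrix H."""
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return xh / w, yh / w


def kalman_1d(measurements, process_var=1e-2, meas_var=1.0):
    """Scalar Kalman filter that smooths a noisy position track."""
    estimate, error = measurements[0], 1.0
    smoothed = [estimate]
    for z in measurements[1:]:
        error += process_var               # predict: uncertainty grows
        gain = error / (error + meas_var)  # update: weigh the new measurement
        estimate += gain * (z - estimate)
        error *= 1 - gain
        smoothed.append(estimate)
    return smoothed


# Hypothetical calibration: the shared plane is scaled by 2 and shifted by (10, 5).
H = [[2.0, 0.0, 10.0], [0.0, 2.0, 5.0], [0.0, 0.0, 1.0]]
shared_point = apply_homography(H, 3.0, 4.0)
track = kalman_1d([10.0, 10.4, 9.7, 10.2, 10.1])
print(shared_point, [round(v, 3) for v in track])
```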

Data Pipeline for Edge-to-Cloud Visual Intelligence

Efficient data pipelines ensure that critical insights from your edge devices reach the cloud without overwhelming network resources.
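One way to keep upstream traffic small, sketched here under our own assumptions, is to send a compact JSON message only when the detected count changes. The class name, field names, and device ID are illustrative and not part of any Azure SDK.

```python
import json


class CountReporter:
    """Emit a compact JSON message only when the object count changes."""

    def __init__(self, device_id):
        self.device_id = device_id
        self.last_count = None

    def update(self, count, timestamp):
        if count == self.last_count:
            return None  # nothing new, so nothing goes upstream
        self.last_count = count
        return json.dumps({"deviceId": self.device_id, "count": count, "ts": timestamp})


reporter = CountReporter("edge-cam-01")
observations = [(3, 0), (3, 1), (4, 2), (4, 3), (2, 4)]  # (count, timestamp) pairs
messages = [m for m in (reporter.update(c, t) for c, t in observations) if m]
print(len(messages), "of", len(observations), "observations sent")
```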

Pipeline Design Strategies:

Security and Privacy Considerations in Azure IoT Edge Vision

Protecting sensitive data is essential when deploying vision applications. Edge environments add challenges because devices are spread across many locations and may be physically accessible to people.

Data Encryption and Secure Communication

Encrypt visual data both at rest and in transit, using TLS to protect data as it moves between devices and the cloud. Azure IoT Hub's built-in security features secure edge-to-cloud communication using either SAS tokens or X.509 certificates.
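For SAS-based authentication, the token is an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry time. The sketch below follows that scheme using only the standard library; the hub name, device ID, and key are placeholders, and in practice the device SDKs usually generate tokens for you.

```python
import base64
import hashlib
import hmac
import urllib.parse


def generate_sas_token(resource_uri, key_b64, expiry_epoch, policy_name=None):
    """Build an IoT Hub shared access signature token."""
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    to_sign = f"{encoded_uri}\n{expiry_epoch}".encode("utf-8")
    digest = hmac.new(base64.b64decode(key_b64), to_sign, hashlib.sha256).digest()
    fields = {
        "sr": resource_uri,
        "sig": base64.b64encode(digest).decode("ascii"),
        "se": str(expiry_epoch),
    }
    if policy_name:
        fields["skn"] = policy_name
    return "SharedAccessSignature " + urllib.parse.urlencode(fields)


# Placeholder resource and key; a fixed expiry keeps the example reproducible.
token = generate_sas_token(
    "example-hub.azure-devices.net/devices/edge-cam-01",
    "c2VjcmV0a2V5MTIzNA==",
    expiry_epoch=1700000000,
)
print(token.split("&")[0])
```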

Secure Boot and Firmware Updates

Use secure boot so that edge devices boot only authorized software. Update firmware and IoT Edge modules regularly.

Privacy-Preserving Techniques

Avoid transmitting raw images; processing them near their source prevents the exposure of personal information. When images must be sent to the cloud, apply anonymization techniques such as face blurring first.
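As a minimal illustration of anonymizing a region before upload, the function below replaces a rectangle of a grayscale image with its mean value, a crude form of pixelation. It assumes the region (for example, a detected face) has already been located by another step, and it uses nested lists instead of a real image library to stay self-contained.

```python
def pixelate_region(image, top, left, height, width):
    """Replace a rectangular region of a grayscale image with its mean value."""
    region = [
        image[r][c]
        for r in range(top, top + height)
        for c in range(left, left + width)
    ]
    mean = sum(region) // len(region)
    out = [row[:] for row in image]  # copy so the original stays untouched
    for r in range(top, top + height):
        for c in range(left, left + width):
            out[r][c] = mean
    return out


image = [
    [10, 10, 10, 10],
    [10, 200, 100, 10],
    [10, 100, 200, 10],
    [10, 10, 10, 10],
]
anonymized = pixelate_region(image, top=1, left=1, height=2, width=2)
print(anonymized)
```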

Optimizing Performance for Edge Computer Vision AI

Optimizing your vision modules is crucial for maintaining a responsive and reliable edge solution. Several techniques and tools can help you ensure peak performance.

Optimization Strategies

- Hardware Acceleration: Use GPUs, FPGAs, or VPUs to speed up inference. Quantizing and pruning your models can also significantly reduce computational load while maintaining acceptable accuracy, and libraries such as TensorRT or OpenVINO can further optimize model performance on specific hardware.

- Resource Monitoring: Use Azure Monitor, IoT Hub diagnostics, and custom logging to track CPU, memory, and network usage. Profiling your modules in real‑time can help you identify bottlenecks such as slow image pre‑processing or inefficient memory usage.

- Container and Code Optimization: Optimize your Docker containers by reducing image size, using multi-stage builds, and ensuring that only essential libraries are included. Refactor code to eliminate redundant operations, and consider asynchronous processing techniques to overlap image capture and inference.
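Overlapping capture and inference can be sketched with a producer thread and a bounded queue. The "frames" and the `infer` function here are placeholders; with a real camera the capture side is I/O-bound, which is where the overlap pays off. A latency-sensitive system might drop stale frames instead of blocking, a design choice this sketch leaves out.

```python
import queue
import threading


def capture(frames, buffer):
    """Producer: push frames into a bounded buffer, then a stop marker."""
    for frame in frames:
        buffer.put(frame)  # blocks when the buffer is full (back-pressure)
    buffer.put(None)


def run_pipeline(frames, infer, buffer_size=2):
    """Consume frames from the buffer while the producer keeps capturing."""
    buffer = queue.Queue(maxsize=buffer_size)
    producer = threading.Thread(target=capture, args=(frames, buffer))
    producer.start()
    results = []
    while True:
        frame = buffer.get()
        if frame is None:
            break
        results.append(infer(frame))  # runs while the producer fetches more frames
    producer.join()
    return results


# Placeholder frames (integers) and a placeholder inference function.
results = run_pipeline(range(1, 6), infer=lambda frame: frame * frame)
print(results)
```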

Real-World Use Cases of Azure IoT Edge Vision

Examining real-world deployments can help illustrate the practical benefits of edge computer vision with Azure IoT Edge.

Manufacturing – Defect Detection and Quality Control

Manufacturers use Azure IoT Edge to detect defects during quality control. For example, vision modules placed along a production line can inspect printed circuit boards.

Smart Cities – Traffic Monitoring and Public Safety

Multiple edge devices across a busy urban area monitor traffic on roads and walkways. Real-time detection algorithms analyze the video to count vehicles, identify traffic rule violations, and detect stalled vehicles.
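Vehicle counting is often implemented as a virtual line that tracked objects must cross. The sketch below assumes an upstream tracker already supplies per-object centroid positions; the track IDs and coordinates are invented for illustration.

```python
def count_line_crossings(tracks, line_y):
    """Count tracked objects whose centroid crosses a horizontal line downward."""
    crossings = 0
    for centroids in tracks.values():
        for (_, y_prev), (_, y_next) in zip(centroids, centroids[1:]):
            if y_prev < line_y <= y_next:
                crossings += 1
                break  # count each object at most once
    return crossings


# Invented tracks: lists of (x, y) centroids per object over consecutive frames.
tracks = {
    "vehicle-1": [(50, 80), (52, 95), (55, 110)],     # crosses y = 100
    "vehicle-2": [(120, 20), (121, 40), (119, 60)],   # never reaches the line
    "vehicle-3": [(200, 99), (201, 101), (203, 130)], # crosses y = 100
}
total = count_line_crossings(tracks, line_y=100)
print(total)
```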

Healthcare – Patient Monitoring and Safety Applications

Edge vision solutions in hospital patient rooms and common areas assess patient activity, detect falls, and identify unusual movements.

Retail – Automated Inventory and Checkout

Retailers use edge vision technology for automated checkout. Cameras mounted overhead at grocery store price-check zones capture images of the merchandise placed there.

Future Trends in AI-Powered Edge Computer Vision

AI Model Evolution for Edge Devices

As hardware improves, expect:

- Smarter Models: Continuous advances in deep learning will lead to models that are both more accurate and efficient.

- Transfer Learning: Increased use of transfer learning allows for rapid adaptation to new environments without full retraining.

Emerging Hardware Accelerators

New accelerators (ASICs, next‑gen GPUs, dedicated VPUs) will:

- Boost Efficiency: Provide faster inference at lower power consumption.

- Expand Use Cases: Enable complex applications in constrained environments.

Integration with 5G and Next-Generation Networks

The advent of 5G benefits edge IoT devices because it can:

- Reduce Latency Further: Even faster data transmission between edge and cloud.

- Enable Real‑Time Collaboration: Support more dynamic, hybrid edge‑cloud workflows for enhanced decision‑making.

Why Choose Azure IoT Edge for Vision AI?

With the insights and best practices outlined above, you’re now equipped to start building your own edge computer vision solution using Azure IoT Edge. Whether you’re focused on manufacturing automation, smart city surveillance, or healthcare monitoring, the combination of local inference, cloud management, and scalable deployment can transform your operations.

How to Proceed?

- Set Up Your Environment: Begin by installing the Azure IoT Edge runtime on a development device.

- Experiment with Models: Use Azure Custom Vision to train a simple classifier and export it as a Docker container.

- Deploy and Monitor: Utilize Azure IoT Hub to deploy your modules and monitor their performance using Azure Monitor.

- Iterate and Optimize: Continuously refine your models and workflows based on real‑world performance and feedback.

Embrace the future of AI at the edge and unlock unprecedented efficiency and innovation in your organization.

Next Steps in Deploying Edge AI with Azure

Talk to our experts about implementing Edge AI systems. Learn how industries and different departments use Edge Computer Vision and Azure IoT Edge to enable Agentic Workflows and Decision Intelligence for real-time automation. Utilize AI at the edge to optimize IT support, industrial operations, and responsiveness.
