How is the integration of artificial intelligence (AI) transforming business operations and driving innovation, automation, and efficiency? AI-driven solutions are revolutionizing industries by enabling organizations to make data-driven decisions, optimize processes, and enhance customer experiences. However, as businesses increasingly rely on AI agents to automate complex tasks, securing the AI inference pipelines behind those agents becomes a top priority.

The security of AI inference pipelines involves safeguarding data, model integrity, and system performance against threats such as cyberattacks, data leaks, and adversarial manipulation. Platforms like Databricks, through the Databricks Lakehouse and Databricks serverless compute, provide scalable ways to manage and secure AI-driven workloads. Combined with an agentic AI architecture and agentic workflows, these technologies help maintain both operational integrity and data protection.

This article explores the role of Databricks and Agentic AI in building secure AI inference pipelines, the challenges they address, and their practical applications across industries.

Introduction to Databricks: A Comprehensive Data Analytics Platform

Databricks is a cloud-based, unified data analytics platform designed to process and analyze large datasets efficiently. It integrates seamlessly with AI models, enabling organizations to develop, train, and deploy AI applications at scale. The platform provides a collaborative environment where data engineers, data scientists, and business analysts can work together to build advanced analytics solutions.

Some of the key features of Databricks include:

- Scalability: Handles large datasets with ease, supporting smooth model training and AI inference.
- Collaboration: Provides a shared workspace where teams working on AI and data analytics projects can collaborate and orchestrate Databricks workflows.
- Security: Offers built-in security features such as data encryption, role-based access control, and compliance with industry standards, helping protect Databricks serverless environments.
- Performance Optimization: Enables faster data processing and model inference through distributed computing on the Databricks Lakehouse architecture.

With these capabilities, Databricks serves as a powerful foundation for AI-driven applications, ensuring that models are trained on high-quality data and deployed securely. It also integrates with MLflow, which Databricks offers as a managed service, for model tracking and management.

Exploring Agentic AI: Advancements in Autonomous Systems

Agentic AI refers to autonomous AI agents capable of reasoning, decision-making, and executing tasks without human intervention. Unlike traditional AI models, which operate based on predefined rules and static datasets, Agentic AI leverages real-time data to adapt to new information, improve decision-making, and optimize agentic workflows.

The increasing adoption of Agentic AI introduces new challenges in terms of security, governance, and ethical considerations. Since these AI agents operate autonomously, it is essential to implement strict security measures to prevent unauthorized access, data breaches, and adversarial attacks.
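Because autonomous agents can call tools without a human in the loop, one common safeguard is a deny-by-default allowlist of the tools each agent may invoke. The sketch below is a minimal, hypothetical illustration in plain Python; `GuardedToolbox`, the agent ID, and the tool names are invented for the example and are not part of any Databricks or agent-framework API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Set


class ToolNotAllowedError(PermissionError):
    """Raised when an agent requests a tool outside its allowlist."""


@dataclass
class GuardedToolbox:
    # Hypothetical registry mapping tool names to the callables that implement them.
    tools: Dict[str, Callable[..., Any]]
    # Hypothetical per-agent allowlist; any tool not listed is denied by default.
    allowlist: Dict[str, Set[str]] = field(default_factory=dict)

    def call(self, agent_id: str, tool_name: str, **kwargs: Any) -> Any:
        # Check authorization before anything else runs.
        if tool_name not in self.allowlist.get(agent_id, set()):
            raise ToolNotAllowedError(f"{agent_id} may not call {tool_name}")
        if tool_name not in self.tools:
            raise KeyError(f"unknown tool: {tool_name}")
        return self.tools[tool_name](**kwargs)


# Example: the fraud agent may read balances but not move money.
toolbox = GuardedToolbox(
    tools={
        "lookup_balance": lambda account: 100.0,  # stand-in for a real data lookup
        "transfer_funds": lambda account, amount: "transferred",
    },
    allowlist={"fraud-agent": {"lookup_balance"}},
)
print(toolbox.call("fraud-agent", "lookup_balance", account="A-1"))
```

Denying by default means a newly registered tool stays unreachable until someone explicitly grants it to a specific agent.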

Key attributes of Agentic AI include:

- Autonomy: The ability to operate without direct human intervention.
- Adaptability: Continuous learning and improvement based on real-time data.
- Decision-Making: Advanced reasoning capabilities to optimize processes and solve complex problems, drawing on the results of AI inference.
- Security Considerations: Protection against manipulation, data leaks, and adversarial attacks is crucial for safeguarding AI inference pipelines.

By integrating Agentic AI architecture into business solutions, organizations can achieve more efficient, intelligent, and secure operations.

Comparing Traditional and Agentic Workflows in AI Inference Pipelines

The shift from traditional AI workflows to agentic workflows represents a fundamental change in how AI systems are designed and deployed. Traditional AI models typically follow a linear development cycle with fixed parameters and predefined workflows. In contrast, Agentic AI systems introduce more dynamic and flexible processes, leveraging real-time data to adapt, improve, and optimize AI inference pipelines.

| Aspect | Traditional AI Approaches | Agentic AI Workflows |
|---|---|---|
| Decision-Making | Relies on predefined rules and static models | Utilizes autonomous agents for dynamic decision-making |
| Adaptability | Limited ability to adjust to new data or scenarios | Learns and adapts to new data in real-time |
| Scalability | Requires manual intervention for scaling | Scales autonomously with increasing complexity and data volumes |
| Security Considerations | Focuses on securing static models and pipelines | Requires advanced security measures for dynamic data flows |

This transition highlights the need for more advanced security frameworks to ensure AI inference pipelines remain robust and protected against evolving threats.

Strategies for Securing AI Inference Pipelines with Databricks

Integrating AI agents within the Databricks platform enhances the security and efficiency of AI inference pipelines. By leveraging Databricks' powerful data processing capabilities, organizations can ensure that AI agents operate in a secure and controlled environment. Key security measures implemented within Databricks include:

- Data Encryption: Protects sensitive data throughout the AI lifecycle.
- Access Control: Implements role-based permissions to restrict unauthorized access, securing Databricks workflows.
- Monitoring and Auditing: Tracks AI agent activities to detect anomalies and prevent security breaches, keeping Databricks serverless environments protected.
- Scalable Infrastructure: Supports large-scale AI inference without compromising security, using the Databricks Lakehouse architecture.
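The access-control and auditing measures above can be combined in a single deny-by-default check that records every authorization decision. This is a hypothetical, in-memory sketch; the roles, permission strings, and `authorize` function are illustrative, and a real deployment would rely on the platform's own access controls and audit logs.

```python
import json
import time
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping kept in memory for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"read:transactions"},
    "fraud_agent": {"read:transactions", "write:alerts"},
}

# Append-only list of JSON audit records (a stand-in for a real audit sink).
AUDIT_LOG: List[str] = []


def authorize(principal: str, role: str, action: str) -> bool:
    """Deny-by-default permission check that records every decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(),
        "principal": principal,
        "role": role,
        "action": action,
        "allowed": allowed,
    }))
    return allowed


print(authorize("agent-7", "fraud_agent", "write:alerts"))
print(authorize("agent-7", "analyst", "write:alerts"))
```

Logging denied requests as well as allowed ones is what makes the trail useful for spotting probing or misconfigured agents.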

For example, in a financial services application, an AI agent can analyze transaction patterns to detect fraudulent activity. Databricks facilitates the ingestion, processing, and analysis of this data, while its security features help protect the AI inference pipeline against cyber threats. MLflow adds efficient model tracking and deployment within the same secure environment.
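As a toy illustration of the pattern analysis such an agent might run, the sketch below flags transaction amounts that sit far from a running mean, updating its statistics online with Welford's algorithm so the baseline adapts as new data arrives. The class name, the 3-standard-deviation threshold, and the warm-up length are hypothetical choices; production fraud models are far richer than a single z-score.

```python
import math
from dataclasses import dataclass


@dataclass
class OnlineZScoreDetector:
    """Flags amounts far from the running mean, using Welford's online update."""
    threshold: float = 3.0  # hypothetical cut-off in standard deviations
    warmup: int = 10        # observations to collect before flagging anything
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0         # running sum of squared deviations from the mean

    def score(self, amount: float) -> float:
        if self.n < 2:
            return 0.0
        std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
        return 0.0 if std == 0 else abs(amount - self.mean) / std

    def update(self, amount: float) -> bool:
        """Return True if the amount looks anomalous, then fold it into the stats."""
        is_anomaly = self.n >= self.warmup and self.score(amount) > self.threshold
        self.n += 1
        delta = amount - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (amount - self.mean)
        return is_anomaly


detector = OnlineZScoreDetector()
amounts = [50, 52, 48, 51, 49, 50, 53, 47, 50, 51, 5000]
flags = [detector.update(a) for a in amounts]
print(flags)
```

Flagged amounts are still folded into the running statistics here for simplicity; a real pipeline might exclude confirmed anomalies so they do not inflate the baseline.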

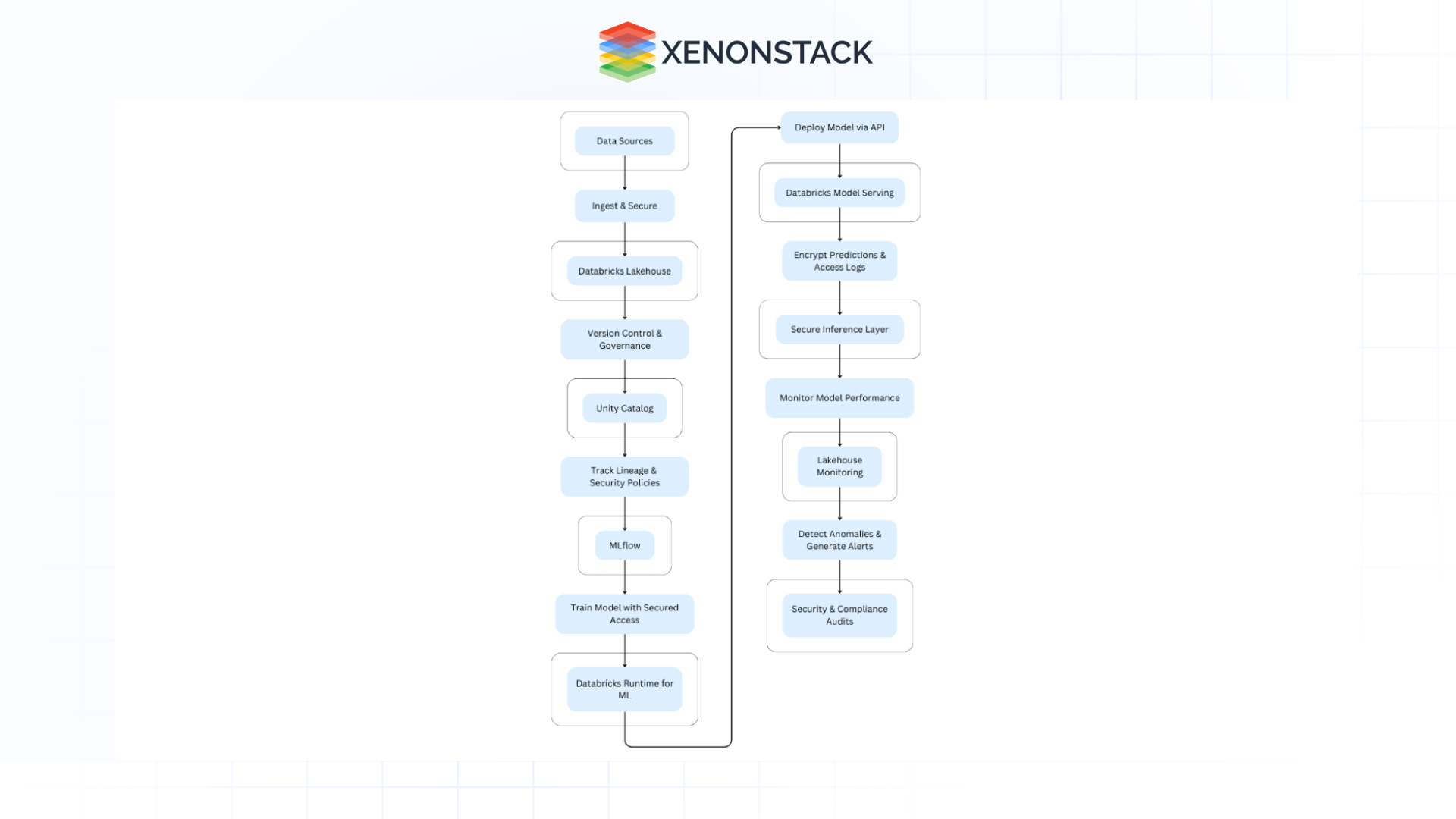

Implementing a Layered Security Architecture in Databricks

Securing AI inference pipelines is crucial in preventing vulnerabilities, unauthorized access, and data breaches while ensuring compliance with industry regulations. Databricks provides a robust framework that integrates security at multiple levels—data ingestion, model training, inference, and monitoring.

The security architecture follows a layered approach:

Data Ingestion & Secure Storage

- AI inference begins with data ingestion from multiple sources such as databases, APIs, IoT devices, and enterprise applications.
- Databricks Lakehouse acts as the central repository for structured and unstructured data.
- Unity Catalog provides fine-grained access control, auditing, and data lineage tracking, while Delta Lake adds table versioning.
- Data is encrypted at rest (AES-256 in the underlying cloud storage) and in transit (TLS) to prevent unauthorized access.
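Fine-grained access control often means masking sensitive columns unless the caller belongs to an approved group. Unity Catalog expresses such rules as SQL column masks; the plain-Python sketch below only mimics the idea, and the policy dictionary, group names, and `apply_column_masks` helper are hypothetical.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical policy: groups allowed to see each sensitive column unmasked.
COLUMN_POLICY: Dict[str, Set[str]] = {
    "card_number": {"fraud_investigators"},
    "email": {"fraud_investigators", "support"},
}


def mask_value(value: str) -> str:
    """Replace all but the last four characters with asterisks."""
    return "*" * max(len(value) - 4, 0) + value[-4:]


def apply_column_masks(rows: Iterable[Dict[str, str]], user_groups: Set[str]) -> List[Dict[str, str]]:
    masked_rows = []
    for row in rows:
        out = {}
        for col, val in row.items():
            allowed = COLUMN_POLICY.get(col)
            # Columns without a policy pass through; policed columns are masked
            # unless the caller shares at least one group with the policy.
            if allowed is not None and not (allowed & user_groups):
                out[col] = mask_value(val)
            else:
                out[col] = val
        masked_rows.append(out)
    return masked_rows


rows = [{"txn_id": "t1", "card_number": "4111111111111111", "email": "a@b.co"}]
print(apply_column_masks(rows, {"support"}))
```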

Model Governance & Experiment Tracking

- MLflow, integrated within Databricks, ensures secure tracking of model experiments, hyperparameters, and evaluation metrics.
- Access controls are enforced via Role-Based Access Control (RBAC), ensuring that only authorized users can view or modify model artifacts.
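To make the RBAC idea concrete, the sketch below checks whether a principal holds at least the permission level an operation requires. The level names echo the style of Databricks model permissions, but the ordering, grants, and operation mapping here are simplified, hypothetical stand-ins, not the platform's actual permission model.

```python
from enum import IntEnum
from typing import Dict, Tuple


class ModelPermission(IntEnum):
    # Simplified, hypothetical ordering: higher levels imply the lower ones.
    NONE = 0
    CAN_READ = 1
    CAN_EDIT = 2
    CAN_MANAGE = 3


# Hypothetical grants: (principal, model name) -> permission level.
GRANTS: Dict[Tuple[str, str], ModelPermission] = {
    ("data-scientists", "fraud_model"): ModelPermission.CAN_EDIT,
    ("serving-endpoint", "fraud_model"): ModelPermission.CAN_READ,
}

# Hypothetical minimum level required for each artifact operation.
REQUIRED: Dict[str, ModelPermission] = {
    "view_artifacts": ModelPermission.CAN_READ,
    "log_new_version": ModelPermission.CAN_EDIT,
    "change_permissions": ModelPermission.CAN_MANAGE,
}


def can(principal: str, model: str, operation: str) -> bool:
    """True if the principal's granted level meets the operation's requirement."""
    granted = GRANTS.get((principal, model), ModelPermission.NONE)
    return granted >= REQUIRED[operation]


print(can("serving-endpoint", "fraud_model", "view_artifacts"))
print(can("serving-endpoint", "fraud_model", "log_new_version"))
```

Giving the serving endpoint read-only access means a compromised endpoint credential cannot overwrite model versions.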

Secure Model Training & Validation

- AI models are trained on isolated clusters running Databricks Runtime for Machine Learning to prevent unauthorized access.
- Continuous validation helps detect bias, adversarial attacks, and data drift, ensuring robustness before deployment.
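One common way to quantify data drift between training data and production inputs is the population stability index (PSI), computed over shared bins as PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the expected (training) and actual (production) fractions in bin i. A frequently cited rule of thumb treats PSI above roughly 0.25 as a significant shift. The sketch assumes a single numeric feature and hand-picked bin edges; PSI is one illustrative technique, not necessarily what Databricks uses internally.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values in each bin defined by the sorted `edges`."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        # Clamp out-of-range values into the first or last bin.
        i = min(max(i, 0), len(counts) - 1)
        counts[i] += 1
    total = max(sum(counts), 1)
    # eps keeps empty bins from producing log(0) or division by zero.
    return [max(c / total, eps) for c in counts]


def population_stability_index(expected: Sequence[float], actual: Sequence[float], edges: Sequence[float]) -> float:
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


training = [0.1 * i for i in range(100)]   # values from 0.0 to 9.9
production = [x + 5.0 for x in training]   # same shape, shifted upward
edges = [0.0, 2.5, 5.0, 7.5, 10.0]
print(population_stability_index(training, training, edges))
print(population_stability_index(training, production, edges))
```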

Model Deployment & API Security

- Once validated, models are deployed securely using Databricks Model Serving, ensuring low-latency, high-throughput predictions.
- Token-based authentication, API gateways, and Transport Layer Security (TLS) encryption secure model access and prevent unauthorized API calls.
- Containerized endpoints ensure model portability and scalability across different environments.
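Token-based authentication comes down to the server verifying that a token is authentic and unexpired before serving a prediction. Databricks itself uses personal access tokens or OAuth for this; the sketch below is a generic, hypothetical HMAC-signed token built with the Python standard library to show the verification steps, and `SECRET_KEY`, `issue_token`, and `verify_token` are invented names.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical shared secret; in practice load it from a secret store, never from code.
SECRET_KEY = b"replace-with-a-secret-from-a-secret-store"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(payload: str) -> str:
    return _b64(hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).digest())


def issue_token(principal: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    now = time.time() if now is None else now
    payload = _b64(json.dumps({"sub": principal, "exp": now + ttl_seconds}).encode())
    return f"{payload}.{_sign(payload)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the principal if the token is authentic and unexpired, else None."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.split(".")
    except ValueError:
        return None
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature, _sign(payload)):
        return None
    claims = json.loads(_unb64(payload))
    if claims["exp"] < now:
        return None
    return claims["sub"]


token = issue_token("fraud-agent", ttl_seconds=60, now=1000.0)
print(verify_token(token, now=1030.0))
```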

AI Inference & Secure Processing

- The secure inference layer manages real-time model predictions, logging every inference request and response.
- Access logs, anomaly detection, and compliance policies ensure data security at inference time.
- This layer helps detect manipulation attempts, such as adversarial inputs or malicious data that could poison future retraining, and blocks unauthorized data access.
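Logging every inference request and response can be as simple as wrapping the model call. Databricks Model Serving provides inference tables for capturing this data; the sketch below is an independent, hypothetical wrapper in plain Python that records a request ID, caller, payload hash, status, and latency for each call. `logged_inference` and `INFERENCE_LOG` are illustrative names.

```python
import hashlib
import json
import time
import uuid
from typing import Any, Callable, Dict, List

# In-memory stand-in for a durable inference log.
INFERENCE_LOG: List[Dict[str, Any]] = []


def logged_inference(model_fn: Callable[[Dict[str, Any]], Any], request: Dict[str, Any], caller: str) -> Any:
    """Run a prediction and append an audit record for the request and response."""
    started = time.perf_counter()
    record: Dict[str, Any] = {
        "request_id": str(uuid.uuid4()),
        "caller": caller,
        "ts": time.time(),
        # Hash the request so the log can prove what was sent without storing
        # raw sensitive fields (a hypothetical design choice).
        "request_sha256": hashlib.sha256(json.dumps(request, sort_keys=True).encode()).hexdigest(),
    }
    try:
        response = model_fn(request)
        record.update(status="ok", response=response)
        return response
    except Exception as exc:
        record.update(status="error", error=repr(exc))
        raise
    finally:
        # Runs on success and failure, so every call leaves a record.
        record["latency_ms"] = (time.perf_counter() - started) * 1000
        INFERENCE_LOG.append(record)


def toy_model(request: Dict[str, Any]) -> Dict[str, float]:
    return {"fraud_score": 0.9 if request["amount"] > 1000 else 0.1}


print(logged_inference(toy_model, {"amount": 5000}, caller="fraud-agent"))
```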

Continuous Monitoring & Compliance

- Lakehouse Monitoring tracks model performance in production, identifying data drift, concept drift, and model degradation.
- Security alerts and real-time anomaly detection prevent fraud, unauthorized model access, or suspicious activity.
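A basic form of the real-time anomaly detection described above is a per-principal request budget over a sliding time window: a sudden burst of calls from one identity can indicate a leaked token or a runaway agent. The class name, limits, and alert rule below are hypothetical, and timestamps are assumed to arrive in non-decreasing order per principal.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class RequestRateMonitor:
    """Alerts when a principal exceeds a request budget within a sliding window."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, principal: str, timestamp: float) -> bool:
        """Record one request; return True if it pushes the principal over budget."""
        events = self._events[principal]
        events.append(timestamp)
        # Drop requests that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests


monitor = RequestRateMonitor(max_requests=3, window_seconds=10.0)
alerts = [monitor.record("agent-1", t) for t in [0.0, 1.0, 2.0, 3.0]]
print(alerts)
```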

Real-World Applications of Agentic AI in Databricks

The integration of Agentic AI within Databricks has far-reaching applications across various industries, such as fraud detection in financial services.

Organizations leveraging these Agentic AI capabilities experience improved efficiency, reduced operational costs, and enhanced decision-making processes through scalable Databricks workflows and AI inference.

Future Trends in AI Security within Databricks

Looking ahead, the integration of Databricks and Agentic AI is expected to drive significant advancements in AI security, governance, and efficiency. Future trends include:

Greater AI Autonomy

AI agents will become more self-sufficient, minimizing the need for human intervention in processes like AI inference and model training.

Enhanced Security Protocols

Continuous improvements in AI security frameworks will mitigate emerging cyber threats, ensuring that Databricks workflows and AI pipelines remain robust.

Industry-Wide Adoption

More organizations across various sectors will integrate Agentic AI into their operations, transforming their AI-driven decision-making and optimizing processes.

Real-Time Learning

AI models will evolve to incorporate continuous learning mechanisms, enabling more accurate and adaptive decision-making powered by Databricks Lakehouse and Databricks serverless environments.

The Role of Securing AI Inference Pipelines for Future-Ready Businesses

Ensuring secure AI inference pipelines is a critical challenge as organizations continue to adopt AI-driven solutions. Platforms like Databricks, combined with the power of Agentic AI, provide a robust framework for securing AI workflows, protecting sensitive data, and optimizing decision-making. By implementing strong security measures, businesses can leverage the full potential of AI while mitigating risks and ensuring compliance with industry standards.

As AI continues to evolve, the role of Databricks in providing a secure and scalable environment for AI inference pipelines will become increasingly vital. Organizations that invest in secure AI infrastructures today will be better positioned to navigate the complexities of tomorrow’s AI-driven world.

Next Steps for Securing AI Inference Pipelines with Databricks and Agentic AI

Talk to our experts about implementing secure AI inference pipelines and leveraging Databricks for Agentic AI integration. Learn how different industries and departments use agentic workflows and AI-driven decision intelligence to enhance security, governance, operational efficiency, and responsiveness.