LLM Vulnerabilities

LLM vulnerabilities are security risks associated with using large language models in applications. Key threats include Prompt Injection, where attackers manipulate inputs to trigger unintended actions, and Insecure Output Handling, which exposes backend systems when model responses aren't properly validated. Training Data Poisoning can introduce biases or security flaws, while Model Denial of Service exploits the resource-intensive nature of LLMs, leading to service disruption. Supply Chain Vulnerabilities occur when third-party components or services are insecure, increasing the risk of attacks.

Other severe threats include Sensitive Information Disclosure, in which models may reveal confidential data, and Insecure Plugin Design, where poor plugin security introduces vulnerabilities, including remotely exploitable security holes. Excessive Agency arises when an LLM takes undesired actions because it has been granted overly broad permissions, and it often appears together with prompt injection. Overreliance on LLMs to disseminate information may lead to relaying false information. Lastly, Model Theft is a significant risk to proprietary models, which need strong security measures on access. Addressing these vulnerabilities is crucial for ensuring the secure and ethical deployment of LLM technology.

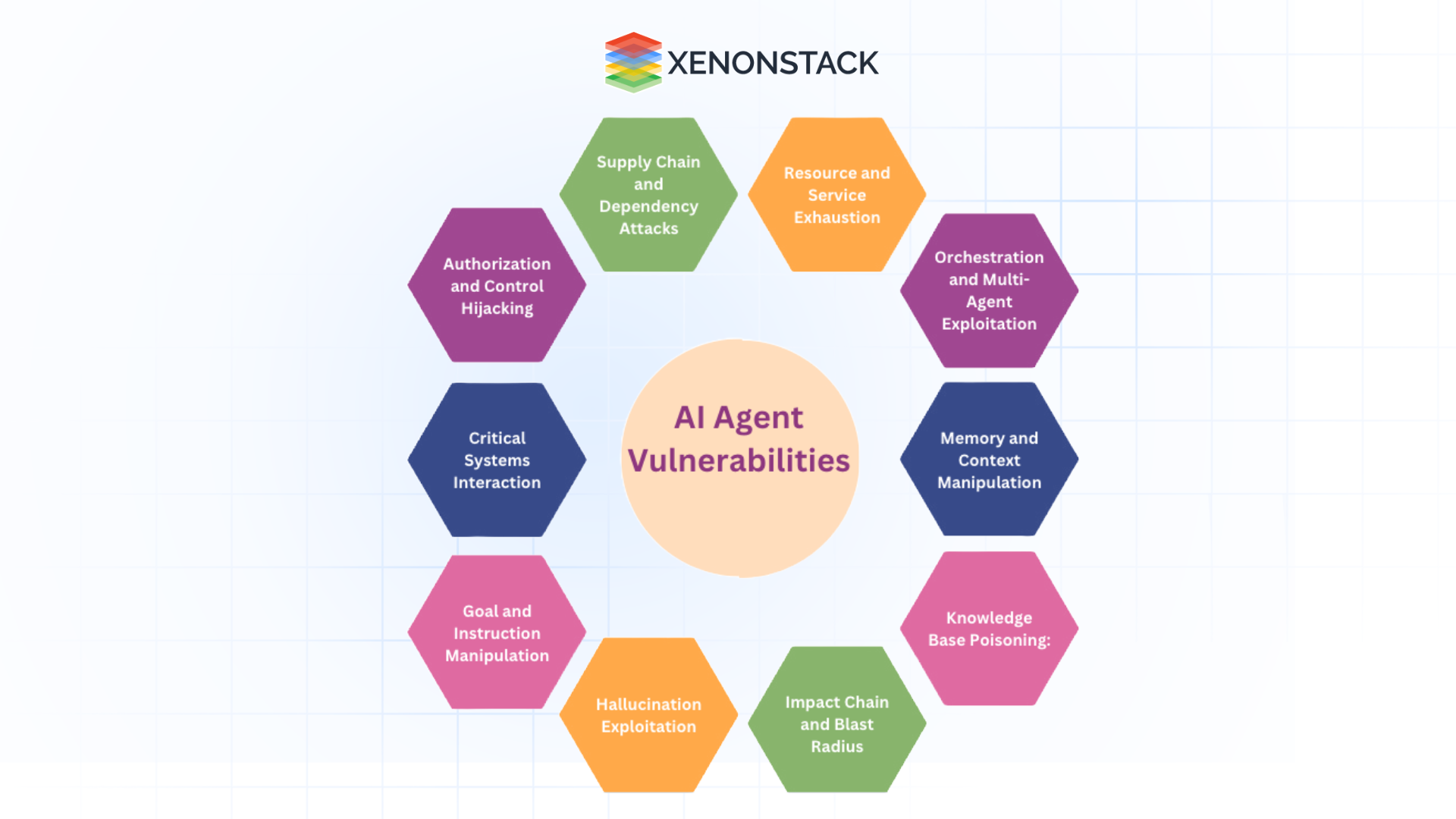

The Top 10 AI Agent Vulnerabilities: Challenges, Solutions, and Examples

Specialized AI agents are designed to address these vulnerabilities through targeted interventions, proactive monitoring, and intelligent adaptation. Below, we explore how each vulnerability is handled and its business value.

Authorization and Control Hijacking

Challenge:

Authorization and Control Hijacking occurs when an AI agent's permission system is compromised and an attacker takes over the agent or gains administrator-level control. The attacker can then issue instructions that cause incorrect actions, leak data, or compromise systems, even while the agent appears to operate legitimately.

Key threats include:

- Direct Control Hijacking: Unauthorized control over an AI agent’s decision-making process.

- Permission Escalation: Elevation of an agent’s permissions beyond intended limits.

- Role Inheritance Exploitation: Attackers exploit dynamic role assignments to gain temporary elevated permissions.

- Persistent Elevated Permissions: Failure to revoke elevated permissions, allowing attackers to maintain access.

Example of Vulnerability:

- Failure to Revoke Admin Permissions: An AI agent retains admin permissions after completing a task, leaving a window for exploitation.

- Task Queue Manipulation: A malicious actor tricks the agent into performing privileged actions under the guise of legitimate operations.

- Role Inheritance Exploitation: Attackers exploit role inheritance to access restricted systems or data by chaining temporary permissions.

- Compromised Control System: An attacker gains unauthorized control by issuing commands while the agent appears to operate normally.

- Unintended Privilege Escalation: An agent retains elevated permissions across execution contexts, leading to unintentional privilege escalation.

Solution:

To secure AI agents, implement the following measures:

Role-Based Access Control (RBAC)

- Define explicitly what each role may and may not do.

- Grant roles for a limited time and have them expire as soon as the task is done.

- Periodically review the level of permissions and their assigned roles for agents.

Agent Activity Monitoring

- Continuously monitor agent activity and any permission changes made along the way.

- Automate the detection of abnormal permission use.

- Log all permission changes at a minimum, and review activity logs periodically.

Separation of Control Planes

- Separate the control environment from the execution environment for agents.

- Assign distinct roles to distinct functions and require approval processes for critical activities.

Audit Trails

- Record agent actions and permission changes in immutable audit trails, which are also needed for compliance.

- Keep agent configurations under version control and run periodic checks on the agents.

Least Privilege Principle

- Grant only the access a task needs, and issue elevated privileges only on request for exceptional cases.

- Continuously monitor and revoke permissions that are no longer required, and set a default time to live for temporary privileges.
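
To make the idea of time-limited roles concrete, here is a minimal sketch of temporary grants with a time to live. The `AgentPermissions` class, the role name, and the 60-second TTL are illustrative assumptions, not part of any particular framework; a real deployment would back this with its identity provider.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RoleGrant:
    role: str
    expires_at: float  # deadline in seconds on the chosen clock


@dataclass
class AgentPermissions:
    """Hypothetical in-memory store of temporary role grants for one agent."""
    grants: dict = field(default_factory=dict)

    def grant(self, role: str, ttl_seconds: float, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        self.grants[role] = RoleGrant(role, now + ttl_seconds)

    def revoke(self, role: str) -> None:
        # Call this as soon as the privileged task completes.
        self.grants.pop(role, None)

    def has_role(self, role: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        g = self.grants.get(role)
        if g is None:
            return False
        if now >= g.expires_at:
            # Expired grants are dropped so they cannot linger.
            del self.grants[role]
            return False
        return True


perms = AgentPermissions()
perms.grant("db_admin", ttl_seconds=60, now=0.0)
print(perms.has_role("db_admin", now=30.0))  # inside the TTL window
print(perms.has_role("db_admin", now=61.0))  # after the TTL window
```

The `now` parameter exists only so the expiry logic can be exercised without waiting; in normal use it is omitted and the monotonic clock is used.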

Critical Systems Interaction

Challenge

Critical system interaction threats arise when AI agents manage essential infrastructure, IoT devices, or operational systems. The potential impacts include physical harm, operational downtime, and organizational safety risks. As AI agents become more autonomous and capable, the likelihood of misuse rises, and the resulting threats affect infrastructure in general rather than a single system.

Examples of Vulnerabilities

- Physical System Manipulation: Attackers may exploit AI agents’ control over industrial control systems, leading to equipment malfunctions, operational halts, or even physical damage to infrastructure.

  - Example: An AI agent controlling the temperature of a manufacturing plant is manipulated to overheat machinery, causing breakdowns.

- IoT Device Compromise: Malicious actors can compromise how agents interact with IoT devices, allowing them to manipulate or disable devices or gain unauthorized control.

  - Example: A smart thermostat controlled by an AI agent is hacked to cause temperature extremes, affecting sensitive equipment.

- Critical Infrastructure Access: Agents with access to critical infrastructure can be abused, compromising the system and causing large-scale operational shutdowns.

  - Example: A compromised AI agent controlling an electrical grid is used to cause blackouts in strategic regions.

Solution

Critical system interaction vulnerabilities can reduce an organization's security, so system isolation, role-based access management, and command validation are recommended. The measures used in an industrial environment, including safety interlocks, the emergency shutdown system, and redundancy, are critical.

Monitoring should include status watching (continuous observation of activity), exception recognition (identification of behaviour outside the norm), and notifications (alerts on suspicious activity). Backup and contingency features guard against failures: fail-safe defaults, emergency controls, and backups. Validation should apply at multiple levels to commands and actions that could otherwise break the system. Regular security audits and penetration testing are also recommended. Together, these measures protect such systems and allow a response before normal functioning is disrupted.
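
As one possible illustration of command validation with a fail-safe default, the sketch below checks a temperature setpoint before it reaches the equipment. The limits, the `SetpointLimits` type, and the `validate_setpoint` function are assumptions made for this example; real plants would take these values from their safety engineering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SetpointLimits:
    min_c: float
    max_c: float
    max_step_c: float  # largest change allowed in a single command


def validate_setpoint(current_c: float, requested_c: float,
                      limits: SetpointLimits) -> float:
    """Return the setpoint to apply; keep the current value when unsafe."""
    if not (limits.min_c <= requested_c <= limits.max_c):
        return current_c  # fail-safe default: out-of-range commands change nothing
    if abs(requested_c - current_c) > limits.max_step_c:
        return current_c  # reject sudden jumps, even inside the allowed range
    return requested_c


limits = SetpointLimits(min_c=15.0, max_c=80.0, max_step_c=5.0)
print(validate_setpoint(60.0, 63.0, limits))   # small change inside limits
print(validate_setpoint(60.0, 150.0, limits))  # far above the maximum
```

Keeping the check outside the agent means a manipulated agent can still ask for an unsafe value, but the request never takes effect.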

Goal and Instruction Manipulation

Challenge

Attackers manipulate AI agents so that they act incorrectly or misinterpret their instructions and surroundings. This vulnerability is especially risky in autonomous operation, because the agent may perform unauthorized operations while appearing to function normally. The manipulation targets how agents manage goals and what they treat as instructions; possible outcomes include system breaches and data loss.

Examples of vulnerabilities

- Goal Interpretation Attacks: Attackers manipulate an agent’s understanding of its goals, causing it to pursue harmful actions.

  - Example: An agent overlooks security flaws while thinking it's fulfilling its security task.

- Instruction Set Poisoning: Malicious instructions are injected into the agent’s task queue, causing it to perform harmful actions.

  - Example: An attacker makes the agent disable security features under the guise of maintenance.

- Goal Conflict Attacks: Attackers inject conflicting goals, leading the agent to prioritize harmful tasks.

  - Example: A pricing AI focuses on revenue at the cost of fraud detection, leading to financial loss.

- Semantic Attacks: Manipulating the agent’s context understanding to bypass security measures.

  - Example: A time-sensitive instruction allows unauthorized access during a system update.

Solution

- Goal Validation: Always check each agent's goals for conflicts with system policy, so that a conflicting goal can be removed before it produces a destructive instruction.

- Instruction Verification: Check instructions for syntactic and semantic correctness, verify their origin, and guard against attacks that manipulate instruction priority.

- Semantic Protection: Use natural language validation and context checks to reject ambiguous instructions, relying on precise definitions and context recognition.

- Goal Execution Controls: Verify goals against objective checkpoints during execution so that security restrictions can be enforced.

- Monitoring & Alerts: Continuously monitor the agent's goals and instruction execution, and deploy anomaly detection that flags security violations or abnormal agent actions.
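
A rough sketch of instruction verification might look like the following. The source names, action names, and the rule that protected actions need human approval are all hypothetical policy choices for this example, picked to mirror the "disable security features under the guise of maintenance" scenario.

```python
from dataclasses import dataclass

# Hypothetical policy values chosen for illustration.
TRUSTED_SOURCES = {"scheduler", "operator_console"}
ALLOWED_ACTIONS = {"scan_hosts", "rotate_logs", "disable_firewall"}
PROTECTED_ACTIONS = {"disable_firewall"}  # never run without human approval


@dataclass(frozen=True)
class Instruction:
    source: str
    action: str
    human_approved: bool = False


def verify_instruction(instr: Instruction) -> tuple:
    """Return (accepted, reason) for one queued instruction."""
    if instr.source not in TRUSTED_SOURCES:
        return False, "untrusted source"
    if instr.action not in ALLOWED_ACTIONS:
        return False, "unknown action"
    if instr.action in PROTECTED_ACTIONS and not instr.human_approved:
        return False, "protected action requires approval"
    return True, "ok"


print(verify_instruction(Instruction("scheduler", "rotate_logs")))
print(verify_instruction(Instruction("scheduler", "disable_firewall")))
print(verify_instruction(Instruction("email_inbox", "scan_hosts")))
```

The checks run in a fixed order (origin, then allowlist, then approval), so an instruction from an untrusted origin is rejected before its content is even considered.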

Hallucination Exploitation

Challenge

Hallucination Exploitation occurs when attackers manipulate AI agents to generate false or unreliable outputs, exploiting the system’s tendency to produce incorrect information. This can lead to compromised decision-making, unauthorized actions, or cascading errors in multi-agent systems.

Example of Vulnerabilities

- Attackers induce false outputs that cause agents to misidentify security threats.

- Malicious inputs lead agents to generate incorrect, convincing responses that influence user decisions.

- Hallucinations cause incorrect tool selection or API calls.

- Exploited hallucinations bypass security controls or validation checks.

- Hallucinated outputs trigger cascading errors in multi-agent systems.

Solution

- Hallucination Detection: Apply output consistency checks, confidence scoring, pattern matching, and anomaly detection.

- Output Verification Systems: Employ multiple validation layers: cross-checking, source checks, and sanitization.

- Decision Validation Controls: Record decision trees, check that each action follows logically from its inputs, and confirm actions and results.

- Monitoring Systems: Monitor outputs, patterns, and behaviour in real time and flag deviations.

- Protective Measures: Use input sanitization, output validation, decision audit points, exception handling, and human supervision for important actions.
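
One simple form of output validation is to check every tool call the model proposes against a registry of tools that actually exist. The sketch below assumes a hypothetical `TOOL_REGISTRY` with two made-up tools; it is meant to show the shape of the check, not a specific agent framework's API.

```python
# Hypothetical registry: tool name -> required argument names and types.
TOOL_REGISTRY = {
    "get_weather": {"city": str},
    "send_email": {"to": str, "subject": str, "body": str},
}


def validate_tool_call(name: str, args: dict) -> list:
    """Return a list of problems; an empty list means the call may proceed."""
    schema = TOOL_REGISTRY.get(name)
    if schema is None:
        # A hallucinated tool name is rejected before anything executes.
        return [f"unknown tool: {name}"]
    problems = []
    for key, expected in schema.items():
        if key not in args:
            problems.append(f"missing argument: {key}")
        elif not isinstance(args[key], expected):
            problems.append(f"wrong type for {key}")
    for key in args:
        if key not in schema:
            problems.append(f"unexpected argument: {key}")
    return problems


print(validate_tool_call("get_weather", {"city": "Oslo"}))
print(validate_tool_call("delete_all_records", {}))
```

Calls that fail validation can be logged and routed to a human reviewer instead of being retried blindly.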

Impact Chain and Blast Radius

Challenge

Impact Chain and Blast Radius vulnerabilities occur when a security breach in one AI agent leads to cascading failures and widespread impact across interconnected systems. These vulnerabilities are particularly concerning due to the interdependent nature of agent systems, where a single compromised agent can affect multiple systems and trigger a chain reaction.

Example of Vulnerabilities

- Cascading Failures: A compromised agent pushes a faulty change into connected systems, causing breakdowns across the network.

- Cross-System Exploitation: A breach of trust between two agents allows an attacker to access and manipulate further connected systems.

- Impact Amplification: Malicious actors leverage an agent’s legitimate privileges to increase the impact of an attack, either by propagating malware or by siphoning off information.

- Widespread Data Exposure: An attacker uses a compromised agent to gain unauthorized entry to multiple linked systems containing confidential information.

Solution

- System Isolation: Implement network segmentation, agent isolation, and compartmentalization to reduce interconnectedness and contain breaches.

- Impact Limitations: To limit the scope of a compromise, set boundaries for agent permissions, resource access, and failure containment.

- Monitoring Systems: Deploy anomaly detection, chain effect detection, and cross-system tracking to identify and respond to breaches in real time.

- Containment Mechanisms: Implement systems for failure isolation, emergency shutdowns, and quarantine procedures to contain the damage.

- Security Barriers: Validate trust relationships, enforce access controls, and monitor agent connections to prevent exploitation.
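
Containment can be sketched as a circuit breaker that quarantines an agent after repeated failures, so other systems stop accepting its calls. The `AgentCircuitBreaker` class and the failure threshold are illustrative assumptions; what counts as a "failure" would be defined by the monitoring in place.

```python
class AgentCircuitBreaker:
    """Quarantines an agent after too many consecutive failures (hypothetical policy)."""

    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.failures = {}        # agent_id -> consecutive failure count
        self.quarantined = set()  # agent_ids currently cut off

    def record(self, agent_id: str, success: bool) -> None:
        if success:
            self.failures[agent_id] = 0
            return
        self.failures[agent_id] = self.failures.get(agent_id, 0) + 1
        if self.failures[agent_id] >= self.max_failures:
            self.quarantined.add(agent_id)

    def may_call(self, agent_id: str) -> bool:
        # Downstream systems check this before accepting a request.
        return agent_id not in self.quarantined

    def release(self, agent_id: str) -> None:
        # Manual release, intended to follow an investigation.
        self.quarantined.discard(agent_id)
        self.failures[agent_id] = 0


breaker = AgentCircuitBreaker(max_failures=2)
breaker.record("billing-agent", success=False)
breaker.record("billing-agent", success=False)
print(breaker.may_call("billing-agent"))  # quarantined after two failures
print(breaker.may_call("search-agent"))   # unaffected agent
```

Because quarantine is tracked per agent, one misbehaving agent is cut off without taking its neighbours down with it.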

Knowledge Base Poisoning

Challenge

Knowledge Base Poisoning occurs when attackers manipulate or corrupt the data sources, training data, or knowledge bases that AI agents rely on for decision-making. This vulnerability can lead to systemic issues, misinformed decisions, and long-term damage to agent operations.

Examples of Vulnerabilities

- Malicious data injection into agent training datasets to influence agent behaviour.

- Manipulation of external knowledge sources to provide incorrect or harmful information.

- The exploitation of agent learning processes to introduce harmful behavioural patterns.

- Corruption of agent knowledge bases, resulting in incorrect decision-making.

- Poisoning reference data sources to disrupt agent operations across multiple systems.

Solutions

- Data Validation: Verify sources, validate inputs, and run integrity checks on the data that agents use.

- Knowledge Protection: Implement access rights, version control, and regular auditing controls to ensure that knowledge base data is not altered.

- Learning Safeguards: Examine learning processes, verify patterns, and integrate diagnostic tools to prevent agent behaviour from shifting toward undesirable patterns.

- Verification Systems: Implement data source verification, cross-checking, and authentication of knowledge updates to close gaps through which tampered data could enter.

- Protective Mechanisms: Employ knowledge base backups, rollback capability, corruption detection, and periodic audits to identify cases of poisoning.
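
Corruption detection can be approximated by hashing each knowledge base entry and comparing against a trusted manifest. In this sketch the knowledge base is a plain dictionary and the helper names are invented for the example; it assumes the manifest itself is stored somewhere an attacker cannot modify.

```python
import hashlib


def digest(content: str) -> str:
    """SHA-256 hex digest of one entry's text."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def build_manifest(entries: dict) -> dict:
    """Snapshot of known-good hashes, taken when the data was last reviewed."""
    return {key: digest(text) for key, text in entries.items()}


def find_tampered(entries: dict, manifest: dict) -> list:
    """Return keys that were changed, added, or removed since the manifest."""
    changed = [k for k, text in entries.items() if manifest.get(k) != digest(text)]
    missing = [k for k in manifest if k not in entries]
    return sorted(changed + missing)


kb = {"refund_policy": "Refunds within 30 days.", "support_hours": "9 to 5"}
manifest = build_manifest(kb)

kb["refund_policy"] = "Refunds are always approved automatically."
print(find_tampered(kb, manifest))  # lists the modified entry
```

Entries flagged this way can be restored from backup, which is where the rollback capability mentioned above comes in.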

Memory and Context Manipulation

Challenge

AI agents are vulnerable to memory and context manipulation, where attackers exploit memory mechanisms or session states to compromise context retention, leading to potential security breaches and decision-making disruptions.

Examples of Vulnerabilities

- Context Amnesia Exploitation: Agents forget critical security parameters after manipulation.

- Cross-Session Data Leakage: Sensitive data persists and becomes accessible across sessions.

- Memory Poisoning: Attackers inject malicious context to influence future agent behaviour.

- Temporal Attacks: Exploit agents’ short memory windows to bypass controls.

Solutions

- Secure Memory Management: Protect agent memory using memory isolation, sanitization, and encryption.

- Context Boundaries: Define clear context limits with session separation and time-bound retention.

- Memory Protection Mechanisms: Encrypt and validate memory integrity while enforcing access controls.

- Session Controls: Implement strict isolation, timeouts, and regular session state cleanup.

- Monitoring Systems: Employ anomaly detection, memory audits, and context monitoring to identify threats proactively.
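
Session separation and time-bound retention can be sketched with a small store keyed by session. The `SessionMemory` class and its TTL are assumptions for illustration; encryption and access control, also listed above, are left out to keep the example short.

```python
import time


class SessionMemory:
    """Per-session key/value memory with time-bound retention (illustrative)."""

    def __init__(self, ttl_seconds: float):
        self.ttl = ttl_seconds
        self._store = {}  # session_id -> {key: (value, stored_at)}

    def put(self, session_id: str, key: str, value, now=None) -> None:
        now = time.monotonic() if now is None else now
        self._store.setdefault(session_id, {})[key] = (value, now)

    def get(self, session_id: str, key: str, now=None):
        now = time.monotonic() if now is None else now
        item = self._store.get(session_id, {}).get(key)
        if item is None:
            return None  # other sessions' data is never visible here
        value, stored_at = item
        if now - stored_at >= self.ttl:
            del self._store[session_id][key]  # expired entries are purged
            return None
        return value

    def end_session(self, session_id: str) -> None:
        # Session state cleanup: drop everything the session stored.
        self._store.pop(session_id, None)


memory = SessionMemory(ttl_seconds=10)
memory.put("session-a", "account_id", "12345", now=0.0)
print(memory.get("session-a", "account_id", now=5.0))   # owner, within TTL
print(memory.get("session-b", "account_id", now=5.0))   # different session
print(memory.get("session-a", "account_id", now=10.0))  # past the TTL
```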

Orchestration and Multi-Agent Exploitation

Challenge

Orchestration and multi-agent exploitation arise when attackers exploit vulnerabilities in the interactions, coordination, and communication among AI agents. This can lead to unauthorized actions, system instability, or cascading failures across agent networks.

Examples of Vulnerabilities

- Manipulation of agent communication channels to inject malicious commands.

- Exploitation of trust between agents to propagate unauthorized actions.

- Confused deputy attacks tricking trusted agents into performing harmful tasks.

- Feedback loops that cause resource exhaustion or system instability.

Solution

- Secure Communication: Encrypt agent-to-agent messages, authenticate interactions, and validate message integrity.

- Strengthen Trust Mechanisms: Apply zero-trust principles, monitor agent behaviours, and verify identities regularly.

- Enhance Coordination: Use task validation, timeout mechanisms, and task prioritization to prevent deadlocks or race conditions.

- Proactive Monitoring: Deploy real-time monitoring, detect anomalies, and audit workflows regularly for unusual patterns.
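
Message authentication and integrity checking between agents can be sketched with an HMAC from Python's standard library. The shared key and message layout here are assumptions for the example; this covers authentication and integrity only, so encryption in transit (for instance TLS) would still be needed separately.

```python
import hashlib
import hmac
import json


def sign_message(key: bytes, payload: dict) -> dict:
    """Attach an HMAC-SHA256 tag computed over a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_message(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare in constant time."""
    body = json.dumps(message["payload"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


shared_key = b"demo-key-for-illustration-only"  # hypothetical; load from a secret store
msg = sign_message(shared_key, {"task": "summarize", "doc_id": 42})
print(verify_message(shared_key, msg))  # untouched message

msg["payload"]["task"] = "delete"       # attacker alters the command in transit
print(verify_message(shared_key, msg))  # tag no longer matches
```

A receiving agent that drops any message failing `verify_message` cannot be driven by commands injected into the channel by a party without the key.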

Resource and Service Exhaustion

Challenge

Resource and Service Exhaustion occurs when malicious actors overwhelm an AI agent’s computational resources, memory, or service dependencies, leading to performance issues, system crashes, or denial of service.

Examples of Vulnerabilities

- Triggering resource-heavy tasks through crafted inputs to exhaust CPU or memory.

- Exploiting API quotas with rapid requests to external services.

- Inducing memory leaks via state management flaws.

- Targeting cloud-hosted agents with economic denial of service (EDoS) attacks.

Solution

- Resource Limits and Monitoring: Enforce CPU and memory limits, cap API calls per minute, and track active users per minute.

- Service Protection: Protect dependencies with throttling, failover mechanisms, and load balancing.

- Cost Control: Set budget limits, measure usage, and adjust as needed.

- Defensive Architecture: Isolate resources, replicate them, and design for fault tolerance and graceful degradation to lessen the impact.

- Performance Monitoring: Use analytics for benchmarking and anomaly detection so you can act before problems occur.
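
Throttling is often implemented with a token bucket, which allows short bursts while capping the sustained rate. The sketch below is a generic version of that technique; the rate and capacity values are placeholders, and the `now` argument is there only to make the behaviour easy to demonstrate.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: refills at a fixed rate up to a capacity."""

    def __init__(self, rate_per_sec: float, capacity: float, now=None):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = time.monotonic() if now is None else now

    def allow(self, cost: float = 1.0, now=None) -> bool:
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.last)
        # Refill according to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(rate_per_sec=1.0, capacity=2.0, now=0.0)
print([bucket.allow(now=0.0) for _ in range(3)])  # burst of three at t=0
print(bucket.allow(now=1.0))                      # one second later
```

Giving expensive operations a higher `cost` lets the same bucket limit resource-heavy tasks more tightly than cheap ones.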

Supply Chain and Dependency Attacks

Challenge

Supply Chain and Dependency Attacks exploit vulnerabilities in the components, libraries, and services AI agents depend on, targeting trusted channels during development, deployment, or runtime, leading to compromised security.

Examples of Vulnerabilities

- Malicious code injected through compromised development tools or deployment pipelines.

- Exploited external libraries, plugins, or APIs that agents rely on.

- Poisoned dependencies that introduce hidden vulnerabilities.

Solutions

- Secure Development Practices: Implement code signing, integrity verification, and secure artefact storage.

- Dependency Management: Conduct regular audits, scan for vulnerabilities, and enforce version pinning.

- Service Security: Use API authentication, monitoring, and redundancy planning.

- Deployment Security: Verify pipelines, enforce image signing, and validate configurations.

- Monitoring and Detection: Track dependencies, detect anomalies, and run security scans.
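
Version pinning can be enforced with a small check in the build pipeline. The sketch below scans text in the style of a pip requirements file and reports lines that are not pinned with `==`. It is a simplified assumption of that format (no URLs or editable installs), and the function name is made up for this example.

```python
import re

# Matches "name==version", optionally with extras such as "requests[socks]==2.31.0".
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[A-Za-z0-9._,-]+\])?==[A-Za-z0-9.+!_-]+$")


def unpinned_requirements(text: str) -> list:
    """Return requirement specs that do not pin an exact version."""
    loose = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()    # drop comments
        if not line or line.startswith("-"):   # skip blanks and pip options
            continue
        # Ignore environment markers such as '; python_version < "3.9"'.
        spec = line.split(";", 1)[0].strip().replace(" ", "")
        if not PINNED.match(spec):
            loose.append(spec)
    return loose


requirements = """
requests==2.31.0
numpy>=1.20      # range, not a pin
flask
"""
print(unpinned_requirements(requirements))
```

A pipeline step could fail the build whenever the returned list is non-empty, which keeps unreviewed versions from slipping in.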

Benefits of Specialized AI Agents

- Enhanced Security: These agents address vulnerabilities directly, reducing risk and strengthening protection.

- Operational Efficiency: Delegating security tasks to agents helps businesses reduce time and investment while maintaining a high level of protection.

- Trust and Reliability: Users have more confidence in secure AI systems, and such systems make it easier to meet regulatory requirements.

- Cost Savings: Eliminating risks and containing threats early is far cheaper than absorbing the losses and inefficiencies of a cyber incident.

- Scalability: Tailored approaches let protective measures scale with the growing use of AI.

The world of autonomous AI agents opens enormous opportunities but also carries unprecedented risks. Addressing the top 10 vulnerabilities described in this blog is not just a technical requirement but a strategic priority for organizations that want to benefit from AI safely. By combining these solutions, businesses can both protect themselves from these risks and deploy AI to its full value with confidence. The result is AI systems that are secure, efficient, and therefore trustworthy, opening a path to innovation without unnecessary exposure.

As AI agents integrate into critical business operations, addressing these vulnerabilities is essential to maintaining system integrity, safeguarding sensitive data, and ensuring operational reliability.