AI-Driven Industrial Transformation: Unlocking the Power of Vision

The industrial world is undergoing a revolution fueled by computer vision at the edge. Today, deploying vision models efficiently, whether on CPUs or on dedicated accelerators, is not merely a technical endeavor; it is a strategic imperative for industries aiming to improve quality, boost efficiency, and reduce operational costs. From the production line to warehouse automation, AI-powered vision models have become critical for making intelligent decisions in real time. Manufacturers use computer vision for automated assembly line inspections to improve defect detection and quality control, while vehicle safety and monitoring systems adopt it for real-time hazard detection and accident prevention.

Beyond manufacturing, finance is automating document processing with computer vision, and healthcare uses biomedical image analysis for more accurate disease detection and diagnosis. In this article, we explore how enterprises can harness Intel’s diverse portfolio, from low-power Movidius VPUs to Xeon processors and high-performance Gaudi 3 accelerators, using tools such as the OpenVINO™ toolkit.

This deep dive covers the entire deployment journey, from model conversion and inference optimization to handling multi-modal datasets and integrating models into industrial systems.

Understanding AI Accelerators for Industrial Applications

AI accelerators are specialized hardware designed to speed up AI workloads, particularly machine learning (ML) and deep learning (DL). They make computation-heavy tasks such as training and inference (making predictions) faster and more energy-efficient than running them on general-purpose processors alone. Many industries are also exploring self-supervised learning for computer vision, which improves model adaptability with minimal labeled data.

Common types of AI accelerators used are:

Graphics Processing Units (GPUs)

GPUs are widely used for AI workloads because they execute many operations in parallel. This makes them well suited to training deep neural networks on large datasets and to real-time video analysis, for example in computer vision systems that monitor classroom engagement to assess student participation.

Tensor Processing Units (TPUs)

TPUs are custom chips developed by Google specifically to accelerate machine learning. They are built around the tensor (multi-dimensional array) operations at the heart of deep learning models and deliver high performance for both training and inference. Example applications include computer vision for automated network infrastructure monitoring, which helps maintain connectivity and security in large-scale IT environments.

Field-Programmable Gate Arrays (FPGAs)

FPGAs are chips whose logic can be reconfigured after manufacturing, so they can be customized to accelerate specific operations in machine learning models. A key application is automated shelf management in retail stores, where FPGA-accelerated vision helps optimize inventory tracking and improve the shopping experience.

Application-Specific Integrated Circuits (ASICs)

ASICs are custom-designed chips tailored for specific tasks, such as AI processing. Unlike general-purpose processors (CPUs), ASICs are optimized for particular workloads, providing much higher efficiency for those tasks. They are widely used in computer vision for automated assembly line inspections, improving real-time defect detection and quality assurance in manufacturing.


Exploring Intel’s AI Accelerator Portfolio for Vision Workloads

Intel’s product lineup for AI vision workloads spans several families—each tailored to meet specific deployment needs, whether at the edge or in data centers. Let’s take a closer look at each component:

Intel® Movidius™ Vision Processing Units (VPUs)

Movidius VPUs have long been the cornerstone of Intel’s low-power, high-efficiency computer vision offerings. Originally developed by Movidius (acquired by Intel in 2016), these processors are designed for ultra-compact form factors, and their low power consumption allows them to be embedded in vehicle safety and monitoring systems, enabling real-time object detection in autonomous driving systems. Energy infrastructure monitoring also benefits from VPUs, which run vision models that detect faults and leaks in industrial facilities.

Intel® Xeon Processors with Integrated AI Enhancements

Modern Intel Xeon processors include built-in AI acceleration. Intel Deep Learning Boost (DL Boost) adds Vector Neural Network Instructions (VNNI) that speed up low-precision INT8 arithmetic, and Intel Advanced Matrix Extensions (AMX), introduced with 4th Gen Xeon Scalable processors, add dedicated matrix-multiplication hardware, so these CPUs can execute complex neural network operations directly on the processor. This integration supports large-scale inference in data centers and, in many cases, simplifies system architecture by removing the need for separate accelerator cards. Xeon processors also offer the scalability and reliability that mission-critical industrial applications require.
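To see why INT8 support matters, consider what a single VNNI instruction does. On processors with AVX-512 VNNI, `VPDPBUSD` multiplies groups of four unsigned 8-bit activations by four signed 8-bit weights and adds their sum to a 32-bit accumulator in one step. The pure-Python sketch below only emulates those semantics to show why INT8 dot products accumulate in 32-bit integers; it is not how the hardware is programmed in practice, since libraries such as oneDNN (used by OpenVINO and PyTorch on Intel CPUs) emit these instructions for you.

```python
from typing import Sequence

INT32_MAX = 2**31 - 1


def wrap_int32(value: int) -> int:
    """Wrap a Python int to signed 32-bit, as the non-saturating instruction does."""
    value &= 0xFFFFFFFF
    return value - 2**32 if value > INT32_MAX else value


def dpbusd_lane(acc: int, a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """Emulate one 32-bit lane of VPDPBUSD: acc + sum of four u8 * s8 products."""
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane consumes exactly four bytes per operand")
    if any(not 0 <= a <= 255 for a in a_u8):
        raise ValueError("activations must be unsigned 8-bit")
    if any(not -128 <= b <= 127 for b in b_s8):
        raise ValueError("weights must be signed 8-bit")
    products = sum(a * b for a, b in zip(a_u8, b_s8))
    return wrap_int32(acc + products)


def int8_dot(a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """Dot product of u8 activations and s8 weights, four elements per step."""
    if len(a_u8) != len(b_s8):
        raise ValueError("operands must have equal length")
    acc = 0
    for i in range(0, len(a_u8), 4):
        a = list(a_u8[i:i + 4])
        b = list(b_s8[i:i + 4])
        pad = 4 - len(a)  # zero-pad the tail so every step sees four bytes
        acc = dpbusd_lane(acc, a + [0] * pad, b + [0] * pad)
    return acc


activations = [1, 2, 3, 4, 5, 200]
weights = [-1, 2, -3, 4, 5, -100]
print(int8_dot(activations, weights))  # -19965
```

A single product of two 8-bit values already needs up to 16 bits, and summing thousands of them in a convolution needs more, which is why the accumulator is 32-bit.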

Intel® Gaudi® AI Accelerators: The High-Performance Option

For enterprises requiring top-tier performance, the Gaudi family offers a specialized solution for training and inference at scale. The latest Gaudi 3 accelerator is built on a 5 nm process and, according to Intel, delivers significantly higher throughput and power efficiency than its predecessor. Integrated industry-standard Ethernet connectivity enables multi-node scaling, and the architecture combines multiple tensor processor cores with matrix multiplication engines. Intel positions Gaudi 3 for both generative AI and computer vision workloads and reports faster training and inference than comparable GPU-based solutions on selected workloads.

The OpenVINO™ Toolkit: Bridging Hardware and Software

Intel’s OpenVINO™ toolkit plays a critical role in getting the most out of Intel inference hardware. It lets developers convert, optimize, and deploy deep learning models across Intel platforms, including CPUs, integrated and discrete GPUs, and VPUs/NPUs. (Gaudi accelerators are programmed through Intel’s separate Gaudi software stack rather than through OpenVINO™.) OpenVINO™ imports models from multiple frameworks and formats (TensorFlow, PyTorch, ONNX, and others), so vision models can run on Intel hardware with low latency and high throughput.

Its broad hardware support and ease of integration have made it a key tool for optimizing AI inference in industrial applications.

Ecosystem Integration: Enhancing AI Vision with Complementary Solutions

Intel’s accelerator portfolio is further strengthened by its ecosystem initiatives, such as oneAPI and open networking standards. These initiatives promote interoperability across heterogeneous architectures and help keep solutions portable as new hardware generations emerge. Whether deploying at the edge or in the cloud, Intel’s portfolio offers flexibility, cost-effectiveness, and high performance to meet diverse industrial needs.

End-to-End Deployment Workflow for Vision Models

Deploying vision models on Intel AI accelerators is an end-to-end process that spans model training, conversion and optimization, deployment, and ongoing monitoring. Here’s a closer look at each step of the workflow:

Model Development and Training

Vision models are generally developed and trained on high-performance workstations or in cloud environments using popular frameworks such as TensorFlow or PyTorch, with ONNX commonly used as an interchange format between them. During this phase, models are designed for specific vision tasks, such as object detection, segmentation, or classification, typically using convolutional neural networks (CNNs) or more advanced architectures; generative models such as Generative Adversarial Networks (GANs) can also play a role, for example by synthesizing additional training images. Intel supports these frameworks on its Xeon processors and Gaudi accelerators, so training can be accelerated while maintaining high accuracy.

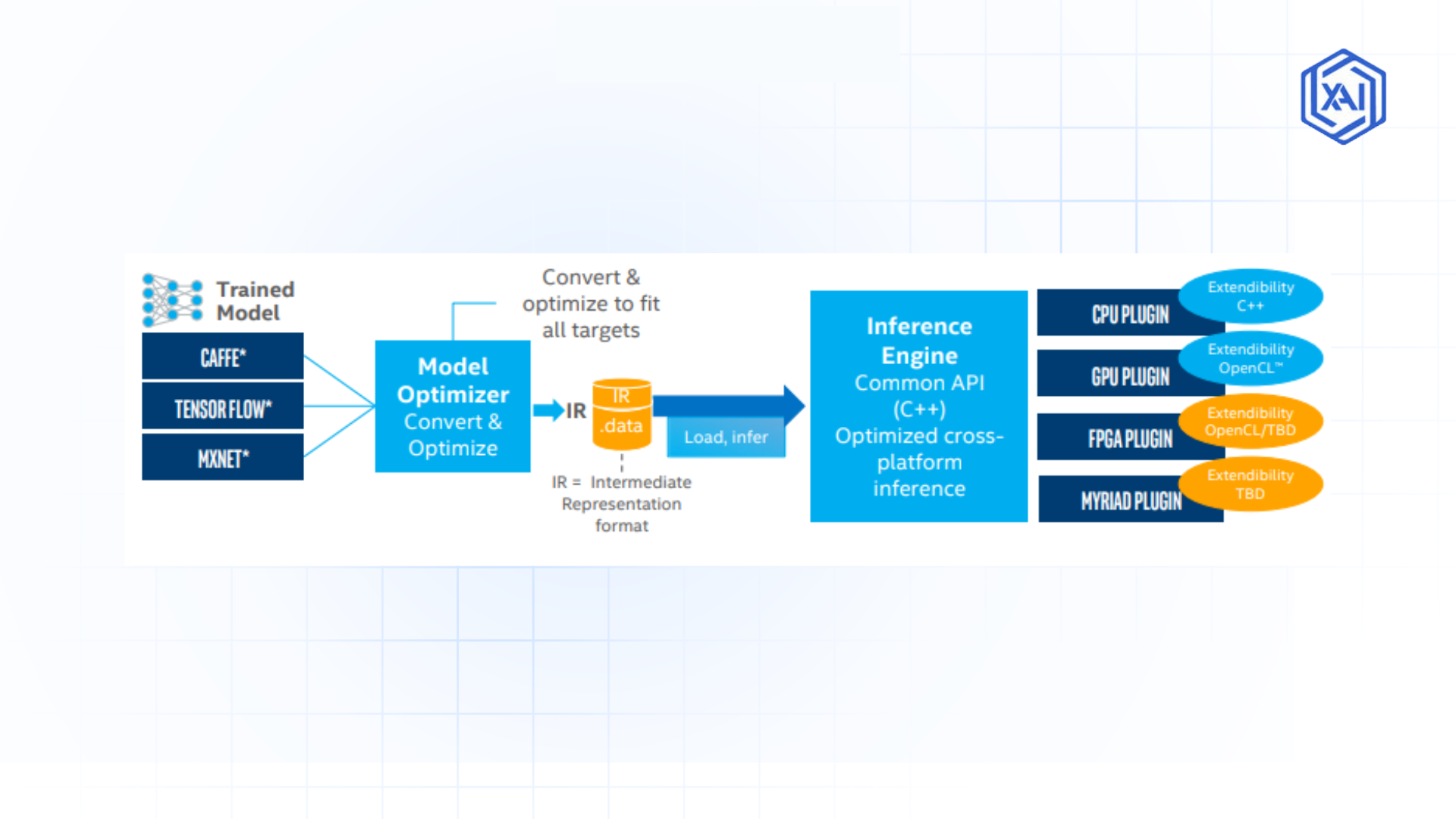

Model Conversion and Optimization with OpenVINO™

Once training is complete, the next step is to convert the model into an optimized format for deployment. Intel’s OpenVINO™ toolkit converts models into its Intermediate Representation (IR), a format tailored for Intel hardware that lets the same model run efficiently across different device configurations. Optimization techniques such as quantization (for example, converting 32-bit floating-point weights to 8-bit integers) and pruning (removing low-importance weights) reduce model size and latency, typically with little accuracy loss. These optimizations are crucial when deploying on ARM processors and other low-power edge devices, where compute and memory are limited.
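As a minimal illustration of the two techniques named above, the sketch below applies symmetric per-tensor INT8 quantization and unstructured magnitude pruning to a flat list of weights. It is a generic textbook version in plain Python, not the algorithm OpenVINO™ uses; in practice, OpenVINO’s Neural Network Compression Framework (NNCF) performs calibrated quantization on real models.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    # round() is round-half-to-even; the clamp keeps values in [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map integers back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


w = [0.5, -1.27, 0.01, 0.9]
q, scale = quantize_int8(w)
print(q)                        # [50, -127, 1, 90]
print(magnitude_prune(w, 0.5))  # [0.0, -1.27, 0.0, 0.9]
```

Each INT8 weight needs a quarter of the storage of a 32-bit float, and with this scheme the reconstruction error of any weight is at most half of `scale`.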

Figure 1: OpenVINO™ Workflow


- Input Phase: Trained models from frameworks such as Caffe, TensorFlow, and ONNX are fed into the OpenVINO™ pipeline.
- Model Optimization: The Model Optimizer converts these models into a unified Intermediate Representation (IR), consisting of an .xml file describing the network topology and a .bin file holding the weights.
- IR Processing: The IR files are passed to the Inference Engine, which provides a common C++ API (with Python bindings) for all hardware targets.
- Engine Configuration: The Inference Engine selects the appropriate hardware plugin for the deployment target and configures the execution environment.
- Plugin Execution: Hardware-specific plugins (CPU, GPU, FPGA, MYRIAD) run the optimized model, each with its own mechanism for adding custom operations (for example, C++ for the CPU plugin and OpenCL for the GPU plugin).
- Cross-Platform Inference: The architecture deploys the same optimized model across different hardware platforms through one consistent API.

In OpenVINO™ 2022.1 and later, the Inference Engine is called OpenVINO Runtime, and the Model Optimizer has since been replaced by the model conversion API (`ovc` and `openvino.convert_model`), but the overall workflow is unchanged.
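The plugin architecture described above, one API in front of several device-specific backends, can be illustrated with a toy dispatcher. Everything here is hypothetical: `ToyRuntime`, the device names, and the lambda "backends" stand in for real plugins, and this is not OpenVINO’s API. It only mirrors the idea behind OpenVINO’s device selection (including its AUTO mode), where the application states device preferences and the runtime picks an available target.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A "backend" takes an input tensor (here: a flat list) and returns outputs.
Backend = Callable[[Sequence[float]], List[float]]


class ToyRuntime:
    """Hypothetical runtime: one infer() call, many registered device plugins."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Backend] = {}

    def register(self, device: str, backend: Backend) -> None:
        self._plugins[device] = backend

    def select(self, priorities: Sequence[str]) -> str:
        """Return the first device in priority order that has a registered plugin."""
        for device in priorities:
            if device in self._plugins:
                return device
        raise RuntimeError(f"no plugin available for any of {list(priorities)}")

    def infer(self, x: Sequence[float], priorities: Sequence[str]) -> Tuple[str, List[float]]:
        device = self.select(priorities)
        return device, self._plugins[device](x)


runtime = ToyRuntime()
runtime.register("CPU", lambda x: [2.0 * v for v in x])  # stand-in for a CPU plugin
# The caller prefers a GPU, but none is registered here, so the CPU plugin runs.
print(runtime.infer([1.0, 2.0], ["GPU", "CPU"]))  # ('CPU', [2.0, 4.0])
```

Because application code only calls `infer()`, supporting a new device means registering another backend without touching the application, which is the portability the cross-platform step describes.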

Deployment Strategies: From Edge to Cloud

After optimization, the deployment strategy depends on the target environment:

- Edge Deployment: For applications that require real-time inference, such as quality control on a production line or autonomous robotics, models can be deployed on low-power devices equipped with Intel Movidius VPUs or on compact Xeon-based edge systems. Processing data locally avoids network round trips and keeps latency low.
- Data Center and Cloud Deployment: Where large-scale inference is needed, models run on Intel Xeon processors or high-performance Intel Gaudi accelerators in data centers. With open-standard Ethernet networking and support for multi-modal datasets, these systems handle high data volumes and complex AI workloads efficiently.
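Whether a workload can stay at the edge often comes down to simple latency arithmetic: at a camera rate of f frames per second, every frame must be processed within 1000 / f milliseconds. The sketch below checks a pipeline’s stage latencies against that budget; the stage timings are hypothetical placeholders to be replaced with measurements from your own hardware.

```python
from typing import Sequence, Tuple


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def check_budget(fps: float, stage_latencies_ms: Sequence[float]) -> Tuple[bool, float]:
    """Return (fits, headroom_ms) for a sequential per-frame pipeline."""
    headroom = frame_budget_ms(fps) - sum(stage_latencies_ms)
    return headroom >= 0, headroom


# Hypothetical timings: preprocessing, inference, postprocessing (ms).
local = [5.0, 20.0, 4.0]
print(check_budget(30, local))           # fits, with about 4.3 ms of headroom
# Adding a hypothetical 15 ms network round trip to a remote server breaks the budget.
print(check_budget(30, local + [15.0]))  # does not fit
```

This assumes the stages run one after another for each frame; pipelining stages across frames raises throughput but does not shorten the latency of any single frame.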

Integration and System-Level Considerations

Integrating the vision model into an existing industrial system involves more than just deploying the model. It requires seamless integration with sensors, cameras, and control systems. Intel’s ecosystem, including tools like the Intel Tiber Developer Cloud and oneAPI, supports this integration by providing common APIs and libraries that enable interoperability across different hardware. This unified approach simplifies system maintenance, security, and scalability.
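One recurring integration pattern is decoupling the camera from the model: cameras often deliver frames faster than inference completes, so the pipeline should process the newest frame rather than a growing backlog. The single-threaded sketch below shows such a "latest frame wins" buffer plus a threshold decision. `LatestFrameBuffer`, `decide`, and the PASS/REJECT signals are hypothetical; a real system would hand the decision to its control system (for example, a PLC) through whatever interface that system exposes.

```python
from collections import deque
from typing import Deque, Generic, Optional, TypeVar

T = TypeVar("T")


class LatestFrameBuffer(Generic[T]):
    """Holds only the most recent frame; older unprocessed frames are dropped."""

    def __init__(self) -> None:
        self._slot: Deque[T] = deque(maxlen=1)
        self.dropped = 0  # frames overwritten before inference saw them

    def push(self, frame: T) -> None:
        if self._slot:
            self.dropped += 1
        self._slot.append(frame)  # maxlen=1 evicts the previous frame

    def pop_latest(self) -> Optional[T]:
        return self._slot.pop() if self._slot else None


def decide(defect_probability: float, threshold: float = 0.5) -> str:
    """Map a model score to a hypothetical control signal for a reject gate."""
    return "REJECT" if defect_probability >= threshold else "PASS"


buffer: LatestFrameBuffer[str] = LatestFrameBuffer()
for frame_id in ["f1", "f2", "f3"]:  # camera produced three frames during one inference
    buffer.push(frame_id)
print(buffer.pop_latest(), buffer.dropped)  # f3 2
print(decide(0.82))                         # REJECT
```

Tracking `dropped` is useful in its own right: a steadily rising count signals that inference cannot keep up with the camera and the hardware or model needs revisiting.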

Continuous Monitoring and Updates

Once deployed, it is essential to continuously monitor model performance and update it as necessary. Real-world industrial environments can introduce variability (e.g., lighting changes, sensor noise) that may affect model accuracy. Implementing a feedback loop—where the model is periodically retrained with new data—ensures that the vision system remains robust over time.
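A feedback loop needs a trigger. One common label-free proxy is the model’s own confidence: if the rolling mean of prediction confidence falls well below what was observed at validation time, the input distribution has probably shifted (new lighting, a dirty lens). The sketch below is a minimal version of that idea; the class name, window size, and 0.1 threshold are illustrative assumptions, and because confidence is only a proxy, periodic audits against labeled samples remain necessary.

```python
from collections import deque
from typing import Deque, Optional


class ConfidenceDriftMonitor:
    """Flags retraining when rolling mean confidence drops below a baseline."""

    def __init__(self, baseline_mean: float, window: int = 500, max_drop: float = 0.1) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.baseline_mean = baseline_mean
        self.max_drop = max_drop
        self._scores: Deque[float] = deque(maxlen=window)

    def update(self, confidence: float) -> None:
        self._scores.append(confidence)

    def rolling_mean(self) -> Optional[float]:
        return sum(self._scores) / len(self._scores) if self._scores else None

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few noisy frames cannot trigger retraining.
        if len(self._scores) < self._scores.maxlen:
            return False
        return self.baseline_mean - self.rolling_mean() > self.max_drop


monitor = ConfidenceDriftMonitor(baseline_mean=0.9, window=4)
for c in [0.91, 0.89, 0.90, 0.90]:
    monitor.update(c)
print(monitor.needs_retraining())  # False
for c in [0.60, 0.65, 0.62, 0.58]:  # e.g. after a lighting change
    monitor.update(c)
print(monitor.needs_retraining())  # True
```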


Overcoming AI Deployment Challenges: Best Practices for Success

While the benefits of deploying vision models on Intel AI accelerators are immense, industrial implementations do come with challenges:

Environmental Variability

Industrial settings often feature variable lighting, temperature fluctuations, and physical vibration. Robust data augmentation during model training helps vision models adapt to these variations. Techniques like gamma correction and adaptive contrast normalization, applied as randomized augmentation during training or as preprocessing at inference time, help models stay reliable despite environmental inconsistencies.
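The sketch below shows both techniques on a grayscale image stored as a flat list of pixel values from 0 to 255 (an assumption made to stay dependency-free; real pipelines would use NumPy or OpenCV arrays). Gamma correction maps each pixel p to 255 · (p / 255)^γ, so γ < 1 brightens and γ > 1 darkens; sampling γ at random during training simulates lighting changes. The normalization shown is global per-image standardization to zero mean and unit variance; adaptive variants such as CLAHE instead equalize contrast locally over image tiles.

```python
import math
import random
from typing import List, Sequence, Tuple


def gamma_correct(pixels: Sequence[int], gamma: float) -> List[int]:
    """Apply out = 255 * (p / 255) ** gamma to 8-bit pixel values."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return [round(255 * (p / 255) ** gamma) for p in pixels]


def contrast_normalize(pixels: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Per-image standardization to zero mean and (approximately) unit variance."""
    n = len(pixels)
    if n == 0:
        raise ValueError("image must contain at least one pixel")
    mean = sum(pixels) / n
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / n)
    return [(p - mean) / (std + eps) for p in pixels]  # eps guards perfectly flat images


def augment(pixels: Sequence[int], rng: random.Random,
            gamma_range: Tuple[float, float] = (0.7, 1.5)) -> List[float]:
    """Random gamma (simulated lighting change) followed by normalization."""
    gamma = rng.uniform(*gamma_range)
    return contrast_normalize(gamma_correct(pixels, gamma))


print(gamma_correct([0, 128, 255], 2.0))  # [0, 64, 255]
sample = augment([10, 80, 160, 240], random.Random(0))
print([round(v, 2) for v in sample])
```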

Balancing Performance, Efficiency, and Cost

Deploying vision models requires balancing computational performance against energy efficiency and cost. Intel positions Gaudi 3, for instance, as offering strong performance per watt, making it a cost-effective candidate for large-scale AI deployments. Matching the hardware to the workload, whether a low-power VPU for edge tasks or a high-performance Gaudi accelerator in the data center, keeps resources well utilized.
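Performance per watt is simple arithmetic once you have measurements: throughput (inferences per second) divided by power (joules per second) gives inferences per joule, and its reciprocal is the energy cost of one inference. The sketch below ranks candidate configurations by that metric after filtering on a latency budget. The option names and figures are hypothetical placeholders, not benchmark results; real numbers should come from your own measurements, for example with OpenVINO’s `benchmark_app`.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class HardwareOption:
    name: str
    throughput_ips: float  # measured inferences per second
    power_w: float         # average power draw while serving, in watts
    latency_ms: float      # measured per-request latency

    @property
    def inferences_per_joule(self) -> float:
        # (inferences / s) / (J / s) = inferences / J
        return self.throughput_ips / self.power_w

    @property
    def joules_per_inference(self) -> float:
        return self.power_w / self.throughput_ips


def most_efficient(options: Sequence[HardwareOption], latency_budget_ms: float) -> HardwareOption:
    """Pick the most energy-efficient option that still meets the latency budget."""
    eligible = [o for o in options if o.latency_ms <= latency_budget_ms]
    if not eligible:
        raise ValueError("no option meets the latency budget")
    return max(eligible, key=lambda o: o.inferences_per_joule)


# Hypothetical placeholder figures, for illustration only.
candidates = [
    HardwareOption("option-a", throughput_ips=100, power_w=10, latency_ms=20),
    HardwareOption("option-b", throughput_ips=1000, power_w=200, latency_ms=5),
    HardwareOption("option-c", throughput_ips=2000, power_w=100, latency_ms=50),
]
print(most_efficient(candidates, latency_budget_ms=30).name)   # option-a
print(most_efficient(candidates, latency_budget_ms=100).name)  # option-c
```

Filtering on latency first matters: the option with the best raw inferences per joule may rely on heavy batching and miss a real-time deadline, as option-c does at a 30 ms budget.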

Data Security and System Reliability

Industries handling sensitive operational data must prioritize security. Intel platforms provide hardware security features, such as memory encryption and trusted execution environments (for example, Intel SGX and Intel TDX), to protect data confidentiality and integrity. Regular system monitoring and robust failover strategies help deployed vision models stay reliable when individual components fail.

Continuous Learning and Model Updates

Industrial environments are dynamic, and vision models must evolve accordingly. Implementing a continuous learning framework, where models are periodically retrained with fresh data, helps maintain accuracy over time. Leveraging cloud and edge integration enables seamless updates without disrupting ongoing operations.

Future Trends in Industrial AI Vision Deployments

The landscape of industrial AI is rapidly evolving, with several trends poised to shape the future of deploying vision models on Intel AI accelerators:

- Advancements in Accelerator Technology: Gaudi 3 sets a new performance and efficiency baseline for Intel’s accelerators, and future iterations on Intel’s roadmap (Falcon Shores was the originally announced successor) are expected to deliver even greater computational power.
- Convergence with IoT and Edge Computing: The shift toward edge computing will continue as industries require real-time processing without the latency of cloud round trips.
- Open Ecosystem and Collaborative Standards: Intel’s commitment to open systems, exemplified by its open-source OpenVINO™ toolkit and community-driven initiatives, is fostering an ecosystem where innovation is shared.
- Enhanced Software Toolchains and Developer Platforms: As deploying AI vision models grows more complex, toolchains that simplify conversion, optimization, and deployment will become increasingly important.

The Road Ahead for AI-Powered Industrial Vision

Deploying vision models on Intel AI accelerators represents a transformative shift in how industries harness artificial intelligence. By combining a diverse range of hardware, from low-power Movidius VPUs to Xeon processors and high-end Gaudi 3 accelerators, with tools like OpenVINO™, businesses can achieve significant gains in efficiency, reliability, and scalability across their industrial applications.

As innovation continues to accelerate, industries that embrace these technologies will not only remain competitive but also lead the charge in a smarter, more efficient future.

Next Steps in Adopting AI-driven Industrial Automation

Talk to our experts about implementing AI-driven industrial automation. Learn how industries and various departments leverage computer vision workflows and AI-powered decision intelligence to enhance operational efficiency. Utilize Intel AI accelerators to optimize and automate IT support and industrial processes, improving responsiveness and performance.